The Centre for Exploratory Altruism Research (CEARCH) is an EA organization working on cause prioritization research as well as grantmaking and donor advisory. This project was commissioned by the leadership of the Meta Charity Funders (MCF) – also known as the Meta Charity Funding Circle (MCFC) – with the objective of identifying what is underfunded vs overfunded in EA meta. The views expressed in this report are CEARCH's and do not necessarily reflect the position of the MCF.

Generally, by meta we refer to projects whose theory of change is indirect and involves improving the EA movement's ability to do good, for example via cause/intervention/charity prioritization (i.e. improving our knowledge of what is cost-effective); effective giving (i.e. increasing the pool of money donated in an impact-oriented way); or talent development (i.e. increasing the pool and ability of people willing and able to work in impactful careers).

The full report may be found here (link). Note that the public version of the report is partially redacted, to respect the confidentiality of certain grants, as well as the anonymity of the people whom we interviewed or surveyed.

Quantitative Findings

Detailed Findings

To access our detailed findings, refer to our spreadsheet (link).

Overall Meta Funding

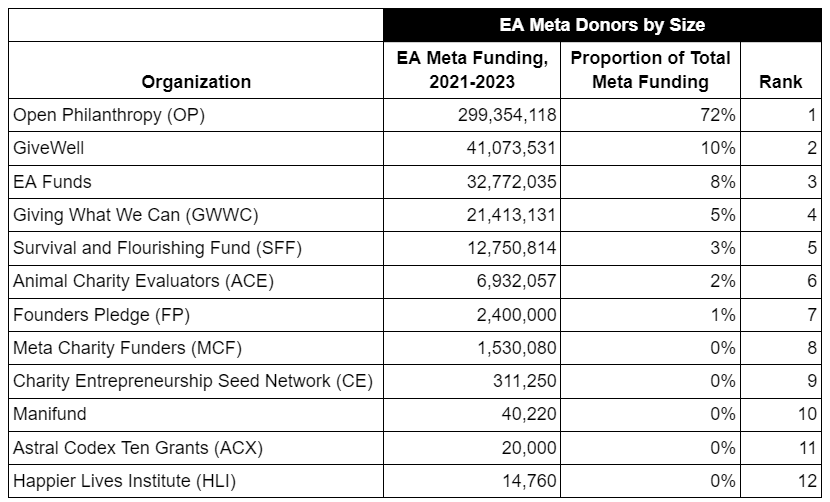

Aggregate EA meta funding saw rapid growth and equally rapid contraction over 2021 to 2023 – growing from 109 million in 2021 to 193 million in 2022, before shrinking back to 117 million in 2023. The analysis excludes FTX, as ongoing clawbacks mean that their funding has functioned less as grants and more as loans.

- Open Philanthropy (OP) is by far the biggest funder in the space, and changes in the meta funding landscape are largely driven by changes in OP's spending. Indeed, OP's global catastrophic risks (GCR) capacity building grants tripled from 2021 to 2022, before falling to twice the 2021 baseline in 2023.

- This finding is in line with Tyler Maule's previous analysis.
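A quick arithmetic check of the annual totals above, as a minimal Python sketch. It uses only the three reported figures (in millions, FTX excluded); the variable and function names are illustrative, not taken from the report.

```python
# Aggregate EA meta funding by year, in millions (figures as reported above; FTX excluded).
funding = {2021: 109, 2022: 193, 2023: 117}


def pct_change(old: float, new: float) -> float:
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100


total = sum(funding.values())
growth = pct_change(funding[2021], funding[2022])       # 2021 -> 2022
contraction = pct_change(funding[2022], funding[2023])  # 2022 -> 2023

print(f"Total 2021-2023: {total} million")
print(f"2021 -> 2022: {growth:+.1f}%")
print(f"2022 -> 2023: {contraction:+.1f}%")
```

On these figures, funding rose by roughly 77% and then fell by roughly 39%. In absolute terms the rise (84 million) and the fall (76 million) are of similar size, so 2023 ended slightly above the 2021 level.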

Meta Funding by Cause Area

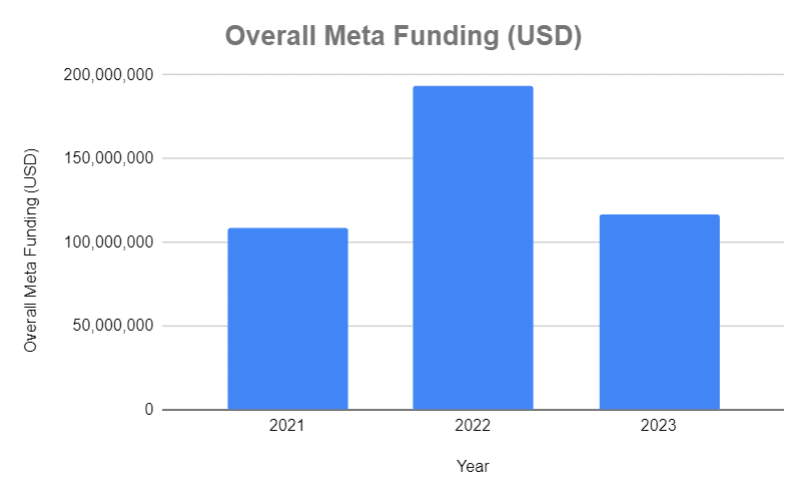

The funding allocation by cause, from most to least funded, was: longtermism (e.g. AI, biosecurity, nuclear) (274 million) >> global health and development (GHD) (67 million) > cross-cause (53 million) > animal welfare (25 million).
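The cause totals can be turned into shares with a few lines of Python. This sketch assumes the four cause figures (in millions) cover the whole 2021-2023 period; they sum to 419 million, the same as the three annual totals combined, which supports that reading.

```python
# Meta funding by cause area, in millions (figures as reported above;
# assumed to cover 2021-2023 because they sum to the same total as the annual figures).
by_cause = {
    "longtermism": 274,
    "global health and development": 67,
    "cross-cause": 53,
    "animal welfare": 25,
}

total = sum(by_cause.values())
shares = {cause: amount / total * 100 for cause, amount in by_cause.items()}

# Print causes from largest to smallest share.
for cause, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{cause}: {share:.1f}%")
```

Longtermism thus accounts for roughly two-thirds of meta funding, and animal welfare for about 6%.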

Meta Funding by Intervention

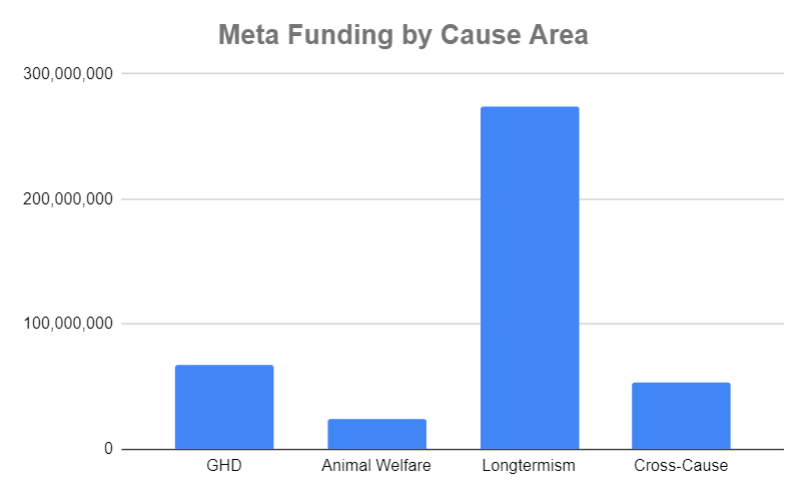

The funding allocation by intervention, from most to least funded, was: other/miscellaneous (193 million) > talent (121 million) > prioritization (92 million) >> effective giving (13 million). Other/miscellaneous covers, for example, general community building (including by national/local EA organizations), events, community infrastructure, co-working spaces, fellowships for community builders, production of EA-adjacent media content, translation projects, student outreach and book purchases. One note of caution: we believe our results overstate how well-funded prioritization is, relative to the other three intervention types, for two reasons:

- We take into account what grantmakers spend internally on prioritization research, but for lack of time, we do not perform an equivalent analysis for non-grantmakers (i.e. imputing their budget to effective giving, talent, and other/miscellaneous).

- For simplicity, we classified all grantmakers (except GWWC) as engaging in prioritization, though some grantmakers (e.g. Founders Pledge, Longview) also do effective giving work.
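The same share calculation for interventions, again as a sketch assuming the four intervention figures (in millions) cover 2021-2023; they also sum to 419 million. Per the caveats above, the prioritization share is likely overstated relative to the other three.

```python
# Meta funding by intervention, in millions (figures as reported above; assumed 2021-2023).
by_intervention = {
    "other/miscellaneous": 193,
    "talent": 121,
    "prioritization": 92,
    "effective giving": 13,
}

total = sum(by_intervention.values())
shares = {name: amount / total * 100 for name, amount in by_intervention.items()}

# Dicts keep insertion order, which here is already most- to least-funded.
for name, share in shares.items():
    print(f"{name}: {share:.1f}%")

# How many times more prioritization receives than effective giving.
ratio = by_intervention["prioritization"] / by_intervention["effective giving"]
print(f"prioritization / effective giving: {ratio:.1f}x")
```

Effective giving receives about 3% of meta funding, roughly a seventh of what prioritization receives.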

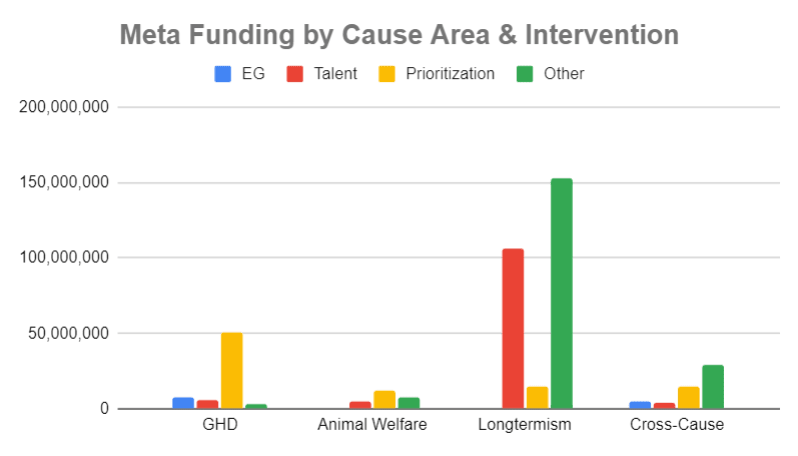

Meta Funding by Cause Area & Intervention

The funding allocation by cause/intervention subgroup was as follows:

- The areas with the most funding were: longtermist other/miscellaneous (153 million) > longtermist talent (106 million) > GHD prioritization (51 million).

- The areas with the least funding were: GHD other/miscellaneous (3 million) > animal welfare effective giving (0.5 million) > longtermist effective giving (0.1 million).

Meta Funding by Organization & Size Ranking

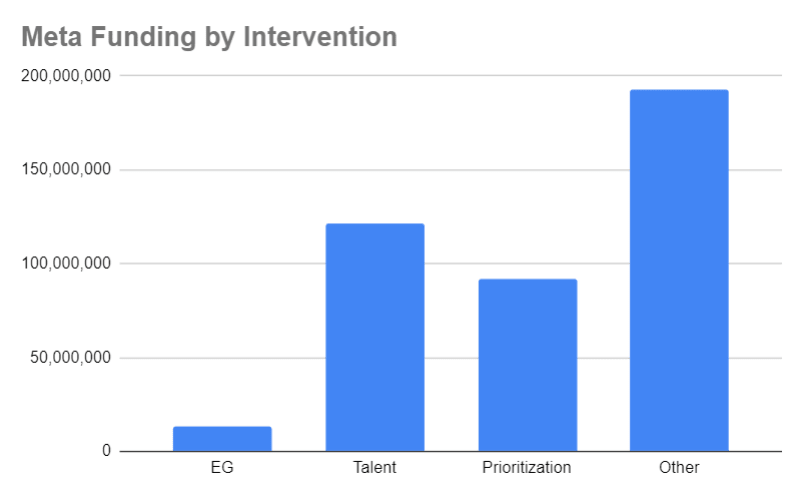

Open Philanthropy is by far the largest funder in the meta space, having given 299 million between 2021 and 2023; the next two biggest funders are GiveWell (41 million), which also receives OP funding, and EA Funds, which is also funded by OP to a very significant extent.
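As a rough sense of scale, this sketch expresses the named funders' totals as shares of the 419 million three-year total. It assumes these figures are on the same 2021-2023 basis as that total; EA Funds is omitted because its total is not stated in this summary.

```python
# Three-year total of meta funding, in millions, from the annual figures reported above.
TOTAL_META_FUNDING = 109 + 193 + 117

# Funder totals for 2021-2023, in millions. EA Funds is left out on purpose:
# its figure is not given here, and guessing it would be misleading.
funders = {"Open Philanthropy": 299, "GiveWell": 41}

for name, amount in funders.items():
    print(f"{name}: {amount / TOTAL_META_FUNDING:.1%} of total meta funding")
```

Because GiveWell itself receives OP funding, these shares may overlap and should not simply be added together.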

Meta Funding by Cause Area & Organization

- OP has allocated the lion's share of its meta funding towards longtermism.

- EA Funds, MCF, GWWC and CE have prioritized cross-cause meta.

- GiveWell, ACE and SFF focus, as expected, on GHD, animal welfare and longtermist meta respectively.

- FP has supported GHD meta, as has HLI.

- ACX and Manifund have mainly (or exclusively) given to longtermist meta.

How Geography Affects Funding

The survey we conducted finds no strong evidence of funding being geographically biased towards the US/UK relative to the rest of the world; however, there is considerable reason to be sceptical of these findings:

- The small sample size means low statistical power, i.e. a low probability of rejecting the null hypothesis even if there is a real relationship between geography and funding success.

- Selection bias is likely in play. Organizations/individuals who have funding are systematically less likely to take the survey (since they are likely to be busy working on their funded projects, whereas organizations/individuals who did not get funding might find the survey an outlet for airing their dissatisfaction with funders). Hence, even if US/UK projects were more likely to be funded, this would not show up in the data, because funded organizations/individuals self-select out of the survey pool.

- Applicants presumably decide whether to apply for funding based on their perceived chances of success. If US/UK organizations/individuals genuinely have a better chance of securing funding, and know this, then even lower-quality projects would choose to apply, lowering the average quality of US/UK applications in the pool. Hence, we end up comparing higher-quality projects from the rest of the world with lower-quality projects from the US/UK, and equal fundraising success is not evidence that projects of identical quality from different parts of the world have equal access to funding.
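To make the low-power point concrete, here is a rough sketch of the standard normal-approximation power formula for a two-sided test comparing two success rates. The success rates (50% vs 35%) and group sizes are purely hypothetical; the survey's actual sample size is not given in this summary.

```python
from math import erf, sqrt


def normal_cdf(x: float) -> float:
    """Standard normal CDF, computed via the error function."""
    return 0.5 * (1 + erf(x / sqrt(2)))


def two_proportion_power(p1: float, p2: float, n_per_group: int, z_crit: float = 1.96) -> float:
    """Approximate power of a two-sided two-proportion z-test (normal approximation).

    p1, p2: hypothetical true funding success rates in the two groups.
    n_per_group: hypothetical number of respondents in each group.
    z_crit: critical value; 1.96 corresponds to alpha = 0.05, two-sided.
    """
    p_bar = (p1 + p2) / 2  # pooled success rate under the null (equal group sizes)
    se_null = sqrt(2 * p_bar * (1 - p_bar) / n_per_group)
    se_alt = sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n_per_group)
    diff = abs(p1 - p2)
    # Chance that the observed gap clears the critical value in the true direction;
    # the negligible chance of rejecting in the opposite direction is ignored.
    return normal_cdf((diff - z_crit * se_null) / se_alt)


for n in (30, 100, 200):
    power = two_proportion_power(0.50, 0.35, n)
    print(f"n = {n} per group: power ~ {power:.0%}")
```

Under these hypothetical numbers, a real 15-percentage-point gap in success rates would be detected only about one time in five with 30 respondents per group, so a null result from a small survey says little either way.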

Overall, we would not update much away from a prior that US/UK-based projects do find it easier to get funding, due to their proximity to grantmakers and donors in the US and UK. For context, the biggest grantmakers on our list are generally based in SF or London (though this is a generalization, since many of their teams are globally distributed).

Core vs Non-Core Funders

Overall, the EA meta grantmaking landscape is highly concentrated, with core funders (i.e. OP, EA Funds & SFF) making up 82% of total funding.

Biggest EA Organizations

The funding landscape is also fairly skewed on the recipients' end, with the top 10 most important EA organizations receiving 31% of total funding.

Expenditure Categories

When EA organizations spend, they tend to spend approximately 58% of their budget on salary, 8% on non-salary staff costs, and 35% on all other costs.

Qualitative Findings

Outline

We interviewed numerous experts, including but not limited to staff employed by (or donors associated with) the following organizations: OP, EA Funds, MCF, GiveWell, ACE, SFF, FP, GWWC, CE, HLI and CEA. We also surveyed the EA community at large. We are grateful to everyone involved for their valuable insights and invaluable help.

Prioritizing Direct vs Meta

Crucial considerations include (a) the cost-effectiveness of the direct work you yourself would otherwise fund, relative to the cost-effectiveness of the direct work the average new EA donor funds; and (b) whether opportunities are getting better or worse.

Prioritizing Between Causes

Whether to prioritize animal welfare relative to GHD depends on welfare ranges and the effectiveness of top animal interventions, particularly advocacy, in light of counterfactual attribution of advocacy success and declining marginal returns. Whether to prioritize longtermism relative to GHD and animal welfare turns on philosophical considerations (e.g. the person-affecting view vs totalism) and epistemic considerations (e.g. theoretical reasoning vs empirical evidence).

Prioritizing Between Interventions

- Effective Giving: Giving What We Can (GWWC) does appear to generate more money than it spends. There are legitimate concerns that GWWC's current estimate of its own cost-effectiveness is overly optimistic, but a previous shallow appraisal by CEARCH identified no significant flaws, and we believe that GWWC's giving multiplier is directionally correct (i.e. positive).

- Effective giving and the talent pipeline are not necessarily mutually exclusive – GWWC is an important source of talent for EA, and it is counterproductive to reduce effective giving outreach efforts.

- There is a strong case to be made that EA has a backwards approach: we should be focusing effective giving (EG) outreach on high-income individuals in rich countries, particularly the US, yet it is these same people who end up doing much of the research and direct work (e.g. in AI), while the people who do EG in practice have far lower earning potential.

- Similarly, it is arguable that younger EAs should be encouraged to pursue earning-to-give or conventional careers in which they donate, since one has fewer useful skills and less experience while young, and money is the most useful thing one possesses at that stage (especially when children or mortgages are not a concern). In contrast, EAs can contribute more through direct work when older, once they have greater practical skills and experience. The exception to this rule would be EA charity entrepreneurship, where younger people can afford to bear more risk.

- Talent: The flipside to the above discussion is that our talent outreach might be worth refocusing, both geographically and in terms of age. Geographically, India may be a standout opportunity for getting talent to do research/direct work in a counterfactually cheap way. In terms of age, it is suboptimal that EA focuses more on outreach to university students and young professionals than on mid-career people with greater expertise and experience.

- Prioritization: GHD cause prioritization may be a good investment if a funder has a higher risk tolerance/a greater willingness to accept a hits-based approach (e.g. health policy advocacy) compared to the average GHD funder.

- Community building: This may be a less valuable intervention unless community building organizations have clearer theories of change and externally validated cost-effectiveness analyses that show how their work produces impact and not just engagement.

Open Philanthropy & EA Funds

- Open Philanthropy: (1) The Global Health and Wellbeing (GHW) EA team seems likely to continue prioritizing effective giving; (2) the animal welfare team is currently prioritizing a mix of interventions; and (3) the global catastrophic risks (GCR) capacity building team will likely continue prioritizing AGI risk.

- There has been increasing criticism of OP, stemming from (a) cause-level disagreements (neartermists vs longtermists, animal welfare vs GHD); (b) strategic disagreements (e.g. lack of leadership, and spending on GCR and EA community building doing little or perhaps even accelerating AI risk); and (c) concerns that OP's dominance warps the community, reducing accountability and impact.

- EA Funds: The EA Funds chair has clarified that the EA Infrastructure Fund (EAIF) would only really coordinate with OP, since OP is reliably around; EA Funds would only find it worth factoring the MCF into its plans once the MCF had been around for some time.

- On the issue of EA Funds' performance, we found a convergence in opinion amongst both experts and the EA community at large. It was generally thought that (a) EA Funds leadership has not performed too well relative to the complex needs of the ecosystem; and (b) the EAIF in particular has not been operating smoothly. Our own assessment is that the criticism is not without merit, and overall, we are (a) concerned about EAIF's current ability to fully support an effective EA meta ecosystem; and (b) uncertain as to whether this will improve in the medium term. On the positive side, we believe improvement to be possible, and in some respects fairly straightforward (e.g. hiring additional staff to process EAIF grants).

Trends & Uncertainty

- Trends: Potential trends of note within the funding space include (a) more money being available on the longtermist side due to massive differences in willingness-to-pay between different parts of OP; (b) an increasing trend towards funding effective giving; and (c) much greater interest in funding field- and movement-building outside of EA (e.g. AI safety field-building).

- Uncertainty: The meta funding landscape faces high uncertainty in the short-to-medium term, particularly due to (a) turnover at OP (i.e. James Snowden and Claire Zabel moving out of their roles); (b) uncertainty at EA Funds, both in terms of what it wants to do and what funding it has; and (c) the short duration of time since FTX collapsed, with the consequences still unfolding; notably, clawbacks are still occurring.

Meta note: I wouldn’t normally write a comment like this. I don’t seriously consider 99.99% of charities when making my donations; why single out one? I’m writing anyway because comments so far are not engaging with my perspective, and I hope more detail can help 80,000 Hours themselves and others engage better if they wish to do so. As I note at the end, they may quite reasonably not wish to do so.

For background, I was one of the people interviewed for this report, and in 2014-2018 my wife and I were among 80,000 Hours’ largest donors. In recent years it has not made my shortlist of donation options. The report’s characterisation of them (spending a huge amount while not clearly being >0 on the margin) is fairly close to my own view, though clearly I was not the only person to express it. All views expressed below are my own.

I think it is very clear that 80,000 Hours have had a tremendous influence on the EA community. I cannot recall anyone stating otherwise, so references to things like the EA Survey are not very relevant. But influence is not impact. I commonly hear two views for why this influence may not translate into positive impact:

- 80,000 Hours prioritises AI well above other cause areas. As a result they commonly push people off paths which are high-impact per other worldviews. So if you disagree with them about AI, you’re going to read things like their case studies and be pretty nonplussed. You’re also likely to have friends who have left very promising career paths because they were told they would do even more good in AI safety. This is my own position.

- 80,000 Hours is likely more responsible than any other single org for the many EA-influenced people working on AI capabilities. Many of the people who consider AI top priority are negative on this and thus on the org as a whole. This is not my own position, but I mention it because I think it helps explain why (some) people who prioritise AI very highly may decline to fund.

I suspect this unusual convergence may be why they got singled out; pretty much every meta org has funders skeptical of them for cause prioritisation reasons, but here there are many skeptics in the crowd broadly aligned on prioritisation.

Looping back to my own position, I would offer two ‘fake’ illustrative anecdotes:

Alice read Doing Good Better and was convinced of the merits of donating a moderate fraction of her income to effective charities. Later, she came across 80,000 Hours and was convinced by their argument that her career was far more important. However, she found herself unable to take any of the recommended positions. As a result she neither donates nor works in what they would consider a high-impact role; it’s as if neither interaction had ever occurred, except perhaps she feels a bit down about her apparent uselessness.

Bob was having impact in a cause many EAs consider a top priority. But he is epistemically modest, and inclined to defer to the apparent EA consensus, communicated via 80,000 Hours, that AI was more important. He switched careers and did find a role with solid, but worse, personal fit. The role is well-paid and engaging day-to-day; Bob sees little reason to reconsider the trade-off, especially since ChatGPT seems to have vindicated 80,000 Hours’ prior belief that AI was going to be a big deal. But if pressed he would readily acknowledge that it’s not clear how his work actually improves things. In line with his broad policy on epistemics, he points out the EA leadership is very positive on his approach; who is he to disagree?

Alice and Bob have always been possible problems from my perspective. But in recent years I’ve met far more of them than I did when I was funding 80,000 Hours. My circles could certainly be skewed here, but when there’s a lack of good data my approach to such situations is to base my own decisions on my own observations. If my circles are skewed, other people who are seeing very little of Alice and Bob can always choose to fund.

On that last note, I want to reiterate that I cannot think of a single org, meta or otherwise, that does not have its detractors. I suspect there may be some latent belief that an org as central as 80,000 Hours has solid support across most EA funders. To the best of my knowledge this is not and has never been the case, for them or for anyone else. I do not think they should aim for that outcome, and I would encourage readers to update ~0 on learning that an org lacks such support.

Just want to say here (since I work at 80k & commented abt our impact metrics & other concerns below) that I think it's totally reasonable to:

Thanks Arden. I suspect you don't disagree with the people interviewed for this report all that much then, though ultimately I can only speak for myself.

One possible disagreement, which you and other commenters brought up and which I meant to respond to in my first comment but forgot: I would not describe 80,000 Hours as cause-neutral, as you try to do here and here. This seems to be an empirical disagreement, quoting from the second link:

I don't think that's how it would go. If an individual 80,000 Hours member learned things that caused them to downshift their x-risk or AI safety priority, I expect them to leave the org, not for the org to change. Similar observations on hiring. So while all the individuals involved may be cause neutral and open to change in the sense you describe, 80,000 Hours itself is not, practically speaking. It's very common for orgs to be more 'sticky' than their constituent employees in this way.

I appreciate it's a weekend, and you should feel free to take your time to respond to this if indeed you respond at all. Sorry for missing it in the first round.

Speaking in a personal capacity here --

We do try to be open to changing our minds so that we can be cause neutral in the relevant sense, and we do change our cause rankings periodically and spend time and resources thinking about them (in fact we’re in the middle of thinking through some changes now). But how well set up are we, institutionally, to be able to in practice make changes as big as deprioritising risks from AI if we get good reasons to? I think this is a good question, and want to think about it more. So thanks!

Many of the things the EA Survey shows 80,000 Hours doing (e.g. introducing people to EA in the first place, helping people get more involved with EA, making people more likely to remain engaged with EA, introducing people to ideas and contacts that they think are important for their impact, helping people (by their own lights) have more impact) are things which supporters of a wide variety of worldviews and cause areas could view as valuable. Our data suggests that it is not only people who prioritise longtermist causes who report these benefits from 80,000 Hours.

Hi David,

There is a significant overlap between EA and AI safety, and it is often unclear whether people supposedly working on AI safety are increasing or decreasing AI risk. So I think it would be helpful if you could point to some (recent) data on how many people are being introduced to global health and development, and to animal welfare, via 80,000 Hours.

Thanks Vasco.

We actually have a post on this forthcoming, but I can give you the figures for 80,000 Hours specifically now.

Thanks, David! Strongly upvoted.

To clarify, are those numbers relative to the people who got to know about EA in 2023 (via 80,000 Hours or any source)?

Thanks Vasco!

These are numbers from the most recent full EA Survey (end of 2022), but they're not limited only to people who joined EA in the most recent year. Slicing it by individual cohorts would reduce the sample size a lot.

My guess is that it would also increase the support for neartermist causes among all recruits (respondents tend to start out neartermist and become more longtermist over time).

That said, if we do look at people who joined EA in 2021 or later (the last 3 years seems decently recent to me, I don't have the sense that 80K's recruitment has changed so much in that time frame, n=1059), we see:

ChatGPT is just the tip of the iceberg here.

GPT4 is significantly more powerful than 3.5. Google now has a multi-modal model that can take in sound, images and video, with a context window of up to a million tokens. Sora can generate amazing realistic videos. And everyone is waiting to see what GPT5 can do.

Further, the Center for AI Safety open letter has demonstrated that it isn't just our little community that is worried about these things, but a large number of AI experts.

Their 'AI is going to be a big thing' bet seems to have been a wise call, at least at the current point in time. Of course, I'm doing AI Safety movement building, so I'm a bit biased here, and maybe we'll think differently down the line, but right now they're clearly ahead.

(I work for EA Funds, including EAIF, helping out with public communications among other work. I'm not a grantmaker on EAIF and I'm not responsible for any decision on any specific EAIF grant).

Hi. Thanks for writing this. I appreciate you putting the work into this, even though I strongly disagree with the framing of most of the doc that I feel informed enough to opine on, as well as with most of the object-level claims.

Ultimately, I think the parts of your report about EA Funds are mostly incorrect or substantively misleading, given the best information I have available. But I think it’s possible I’m misunderstanding your position or I don’t have enough context. So please read the following as my own best understanding of the situation, which can definitely be wrong. But first, onto the positives:

There are also some things the report mentioned that we have also been tracking, and I believe we have substantial room for improvement:

Now, onto the disagreements:

Procedurally:

Substantively:

Semantically:

I originally wanted to correct misunderstandings and misrepresentations of EA Funds’ positions more broadly in the report. However, I think there were just a lot of misunderstandings overall, so I think it's simpler for people to just assume I contest almost every categorization of the form “EA Funds believes X”. A few select examples:

Note to readers: I reached out to Joel to clarify some of these points before posting. I really appreciate his prompt responses! Due to time constraints, I decided to not send him a copy of this exact comment before posting publicly.

I personally have benefited greatly from talking to specialist advisors in biosecurity.

From GPT4

“The median time to receive a response for an academic grant can vary significantly depending on the funding organization, the field of study, and the specific grant program. Generally, the process can take anywhere from a few months to over a year.”

“The timeline for receiving a response on grant applications can vary across different fields and types of grants, but generally, the processes are similar in length to those in the academic and scientific research sectors.”

“Smaller grants in this field might be decided upon quicker, potentially within 3 to 6 months [emphasis mine], especially if they require less funding or involve fewer regulatory hurdles.”

Being funded by grants kind of sucks as an experience compared to e.g. employment; I dislike adding to such frustrations. There are also several cases I’m aware of where counterfactually impactful projects were not taken due to funders being insufficiently able to fund things in time, and in some of those instances I'm more responsible than anybody else.

Hi Linch,

Thanks for engaging. I appreciate that we can have a fairly object-level disagreement over this issue; it's not personal, one way or another.

Meta point to start: We do not make any of these criticisms of EA Funds lightly, and when we do, it's against our own interests, because we ourselves are potentially dependent on EAIF for future funding.

To address the points brought up, generally in the order that you raised them:

(1) On the fundamental matter of publication. I would like to flag that, from checking the email chain plus our own conversation notes (both verbatim and cleaned-up), there was no request that this not be publicized.

For all our interviews, whenever someone flagged that X data or Y document, or indeed the conversation in general, shouldn't be publicized, we respected this and did not publicize it. In the public version of the report, this is most evident in our spreadsheet, where a whole bunch of grant details have been redacted; but more generally, anyone with the "true" version of the report shared with the MCF leadership will also be able to spot differences. We also redacted all qualitative feedback from the community survey, and by default anonymized all expert interviewees who gave criticisms of large grantmakers, to protect them from backlash.

I would also note that we generally attributed views to, and discussed, "EA Leadership" in the abstract, both because we didn't want to make this a personal criticism, and also because it afforded a degree of anonymity.

At the end of the day, I apologize if the publication was not in line with what EA Funds would have wanted - I agree it's probably a difference in norms. In a professional context, I'm generally comfortable with people relaying that I said X in private, unless there was an explicit request not to share (e.g. I was talking to a UK-based donor yesterday, and I shared a bunch of my grantmaking views. If he wrote a post on the forum summarizing the conversations he had with a bunch of research organizations and donor advisory orgs, including our own, I wouldn't object). More generally, I think if we have some degree of public influence (including by the money we control) it would be difficult from the perspective of public accountability if "insiders" such as ourselves were unwilling to share with the public what we think or know.

(2) For the issue of CEA stepping in: In our previous conversation, you relayed that you asked a senior person at CEA and they in turn said that "they’re aware of some things that might make the statement technically true but misleading, and they are not aware of anything that would make the statement non-misleading, although this isn’t authoritative since many thing happened at CEA". For the record, I'm happy to remove this since the help/assistance, if any, doesn't seem too material one way or another.

(3) For whether it's fair to characterize EAIF's grant timelines as unreasonably long. As previously discussed, I think the relevant metric is EAIF's own declared timetable ("The Animal Welfare Fund, Long-Term Future Fund and EA Infrastructure Fund aim to respond to all applications in 2 months and most applications in 3 weeks."). This is because organizations and individuals make plans based on when they expect to get an answer - when to begin applying; whether to start or stop projects; whether to go find another job; whether to hire or fire; whether to reach out to another grantmaker who isn't going to support you until and unless you have already exhausted the primary avenues of potential funding.

(4) On the major donor whom we reported as frustrated/turned off. You flag that you're keeping tabs on all the major donors, and so don't think the person in question is major. While I agree that it's somewhat subjective, it's also true that this is a HNWI who, beyond their own giving, also sits on the legal or advisory boards of many other significant grantmakers and philanthropic outfits. Also, knowledgeable EAs in the space have generally characterized this person to me as an important meta funder (in the context of my own organization then thinking of fundraising, and being advised as to whom to approach). So even if they aren't major in the sense that OP (or EA Funds) are, they could reasonably be considered fairly significant. In any case, I think the discussion is backwards: I agree that they don't play as significant a role in the community right now (and so your assessment of them as non-major is reasonable), but that would be because of the frustration they have had with EA Funds (and, to be fair, with the EA community in general, I understand). So perhaps it's best to understand this as potentially vs currently major.

(5) On whether it's fair to characterize EA Funds leadership as being strongly dismissive of cause prioritization. We agree that grants have been made to RP, so the question is about cause prioritization outside OP and OP-funded RP. Our assessment of EA Funds' general scepticism of prioritization was based, among other things, on what we reported in the previous section: "They believe cause prioritization is an area that is talent constrained, and there aren't a lot of people they feel great giving to, and it's not clear what their natural pay would be. They do not think of RP as doing cause prioritization, and though in their view RP could absorb more people/money in a moderately cost-effective way, they would consider less than half of what they do cause prioritization. In general, they don't think that other funders outside of OP need to do work on prioritization, and are in general sceptical of such work." In your comment, you dispute that the bolded part in particular is true, saying "AFAIK nobody at EA Funds believes this."

We have both verbatim and cleaned up/organized notes on this (n.b. we shared both with you privately). So it appears we have a fundamental disagreement here (and also elsewhere) as to whether what we noted down/transcribed is an accurate record of what was actually said.

TLDR: Fundamentally, I stand by the accuracy of our conversation notes.

(a) Epistemically, it's more likely that one doesn't remember what one said previously than that the interviewer (if acting in good faith) catastrophically misunderstood and recorded something that wasn't said at all (as opposed to a more minor error; we agree that can totally happen, see below).

(b) From my own personal perspective: I used to work in government and in consulting (for governments). It was standard practice to have meeting notes made by junior staffers and then submitted to more senior staff for edits and approval. Nothing resembling this (i.e. a total misunderstanding tantamount to fabrication, recording that XYZ was said when nothing of the sort took place) ever happened to me or to anyone else.

(c) My word does not need to be taken for this. We interviewed other people, and I'm beginning to reach out to them again to check that our notes matched what they said. One has already responded (the person we labelled Expert 5 on Page 34 of the report); they said "This is all broadly correct" but requested we make some minor edits to the following paragraphs (changes indicated by bold and strikethrough):

For the latter, the expert reports both himself and others finding communications with EA Funds leadership difficult and the conversations confusing.

For the substantive concerns – beyond the long wait times EAIF imposes on grantees, the expert was primarily worried that EA Funds leadership has been unreceptive to new ideas and that they are unjustifiably confident that EA Funds is fundamentally correct in its grantmaking decisions. In particular, it appears to the expert that EA Funds leadership does not believe that additional sources of meta funding would be useful for non-EAIF grants [phrase added] – they believe that projects unfunded by EAIF do not deserve funding at all (rather than some projects perhaps not being the right fit for the EAIF, but potentially worth funding by other funders with different ethical worldviews, risk aversion or epistemics). Critically, the expert reports that another major meta donor found EA Funds leadership frustrating to work with, ~~and so ended up disengaging from further meta grantmaking coordination~~ **and this likely is one reason they ended up disengagement from further meta grantmaking coordination** [replaced].

My even-handed interpretation of this overall situation (trying to be generous to everyone) is that what was reported here ("In general, they don't think that other funders outside of OP need to do work on prioritization") was something the EA Funds interviewee said relatively casually (not necessarily a deep and abiding view, and so not something worth remembering), perhaps indicative of scepticism of a lot of cause prioritization work but not literally thinking nothing outside OP/RP is worth funding. (We actually do agree with this scepticism, up to an extent.)

(6) On whether our statement that “EA Funds leadership doesn't believe that there is more uncertainty now with EA Fund's funding compared to other points in time” is accurate. You say that this is clearly false. Again, I stand by the accuracy of our conversation notes. In fact, I distinctly remember this particular exchange, because it stood out, as did the exchange that immediately followed, on whether OP's use of the fund-matching mechanism creates more uncertainty.

My generous interpretation of this situation is, again, that some things may be said relatively casually and may not be indicative of deep, abiding views.

(8) On the various semantic disagreements: some we discussed above (e.g. the OP cause prioritization point); for the rest:

On whether this part is accurate: “Leadership is of the view that the current funding landscape isn't more difficult for community builders”. Again, we do hold that this was said, based on the transcripts. And again, to be even-handed, I think your interpretation (b) is right: your team is probably thinking of 2019 as the baseline, while we were thinking mainly of 2021 to now.

On whether this part is accurate: “The EA Funds chair has clarified that EAIF would only really coordinate with OP, since they're reliably around; only if the [Meta-Charity Funders] was around for some time, would EA Funds find it worth factoring into their plans.” I don't think we disagree too much, if we agree that EA Funds' position is that coordination is only worthwhile if the counterpart is around for a while. Otherwise, it's just a subjective disagreement over what coordination is, or what significant degrees of it amount to.

On this statement: "[EA Funds believes] so if EA groups struggle to raise money, it's simply because there are more compelling opportunities available instead."

In our discussion, I asked about the community building funding landscape being worse; the interviewee disagreed with this characterization, and started discussing how it's more that standards have risen (which we agree is a factor). The issue is that the other factor, objectively less funding being available, was not brought up, even though it is, in our view, the dominant factor (and if you ask community builders, this is all they talk about). I think our disagreement here is partly subjective: over what a bad funding landscape is, and also over the right degree of emphasis to put on rising standards vs less funding.

(9) EA Funds not posting reports or having public metrics of successes. Per our internal back-and-forth, we've clarified that we mean reports of success or public metrics of success. We didn't view reports on payouts as evidence of success, since payouts are a cost and not the desired end goal in itself. This contrasts with reports on output (e.g. a community building grant actually leading to increased engagement on XYZ engagement metrics) or, much more preferably, reports on impact (e.g. those XYZ engagement metrics leading to actual money donated to GiveWell, from which we can infer that X lives were saved). Speaking for my own organization, I don't think the people funding our regranting budgets would be happy if I reported mere spending as evidence of success.

(OVERALL) For what it's worth, I'm happy to agree to disagree, and call it a day. Both your team and mine are busy with our actual work of research/grantmaking/etc, and I'm not sure if further back and forth will be particularly productive, or a good use of my time or yours.

I'm going to butt in with some quick comments, mostly because:

I'm sharing comments and suggestions below, using your (Joel's) numbering. (In general, I'm not sharing my overall views on EA Funds or the report. I'm just trying to clarify some confusions that seem resolvable, based on the above discussion, and suggest changes that I hope would make the report more useful.)

I also want to say that I appreciate the work that has gone into the report and got value from e.g. the breakdown of quantitative data about funding — thanks for putting that together.

And I want to note potential COIs: I'm at CEA (although to be clear I don't know if people at CEA agree with my comment here), briefly helped evaluate LTFF grants in early 2022, and Linch was my manager when I was a fellow at Rethink Priorities in 2021.

E.g.

In relation to this claim: "They do not think of RP as doing cause prioritization, and though in their view RP could absorb more people/money in a moderately cost-effective way, they would consider less than half of what they do cause prioritization."

"...we mean reports of success or having public metrics of success. We didn't view reports on payouts to be evidence of success, since payouts are a cost, and not the desired end goal in itself. This contrasts with reports on output (e.g. a community building grant actually leading to increased engagement on XYZ engagement metrics) or much more preferably, report on impact (e.g. and those XYZ engagement metrics leading to actual money donated to GiveWell, from which we can infer that X lives were saved)."

Thanks for the clarifications, Joel.

It is clear from Linch's comment that he would have liked to see a draft of the report before it was published. Did you underestimate the interest of EA Funds in reviewing the report before its publication, or did you think their interest in reviewing the report was not too relevant? I hope the former.

I’m one of the people interviewed by Joel and I’d like to share a bit about my experience, as it could serve as an additional datapoint for how this report was constructed.

For some context, I’m on the Groups Team at CEA, where I manage the Community Building Grants program. I reached out to Joel to see how I could support his research, as I was in favour of somebody looking into this question.

The key points I’d like to bring up are:

I'm surprised by the scepticism re 80k. The OP EA/LT survey from 2020 seems to suggest one can be quite confident of the positive impact 80k had on the small (but important) population surveyed. As the authors noted in their summary:

Hey, Arden from 80,000 Hours here –

I haven't read the full report, but given the time sensitivity with commenting on forum posts, I wanted to quickly provide some information relevant to some of the 80k mentions in the qualitative comments, which were flagged to me.

Regarding whether we have public measures of our impact & what they show

It is indeed hard to measure how much our programmes counterfactually help move talent to high impact causes in a way that increases global welfare, but we do try to do this.

From the 2022 report, the relevant section is here. I'm copying it in as there are a bunch of links.

Some elaboration:

Regarding the extent to which we are cause neutral & whether we've been misleading about this

We do strive to be cause neutral, in the sense that we try to prioritize working on the issues where we think we can have the highest marginal impact (rather than committing to a particular cause for other reasons).

For the past several years we've thought that the most pressing problem is AI safety, so we have put much of our effort there. (Some 80k programmes focus on it more than others; I reckon for some it's a majority. But it hasn't been true that as an org we “almost exclusively focus on AI risk”; a bit more on that here.)

In other words, we're cause neutral, but not cause *agnostic* - we have a view about what's most pressing. (Of course we could be wrong or thinking about this badly, but I take that to be a different concern.)

The most prominent place we describe our problem prioritization is our problem profiles page, which is one of our most popular pages. We describe our list of issues this way: "These areas are ranked roughly by our guess at the expected impact of an additional person working on them, assuming your ability to contribute to solving each is similar (though there’s a lot of variation in the impact of work within each issue as well)." (Here's also a past comment from me on a related issue.)

Regarding the concern about us harming talented EAs by causing them to choose bad early career jobs

To the extent that this has happened this is quite serious – helping talented people have higher impact careers is our entire point! I think we will always sometimes fail to give good advice (given the diversity & complexity of people's situations & the world), but we do try to aggressively minimise negative impacts, and if people think any particular part of our advice is unhelpful, we'd like them to contact us about it! (I'm arden@80000hours.org & I can pass them on to the relevant people.)

We do also try to find evidence of negative impact, e.g. using our user survey, and it seems dramatically less common than the positive impact (see the stats above), though there are of course selection effects with that kind of method so one can't take that at face value!

Regarding our advice on working at AI companies and whether this increases AI risk

This is a good worry and we talk a lot about this internally! We wrote about this here.

I would also add these results, which I think are, if anything, even more relevant to assessing impact:

Hi Arden,

Thanks for engaging.

(1) Impact measures: I'm very appreciative of the amount of thought that went into developing the DIPY measure. The main concern (from the outside) with respect to DIPY is that it is critically dependent on the impact-adjustment variable, which is probably the single biggest driver of uncertainty (since causes can vary by many orders of magnitude). Depending on whether you think the work is impactful (or if you're sceptical, e.g. because you're an AGI sceptic, or because you're convinced of the importance of preventing AGI risk but worried about counterproductivity from getting people into AI, etc.), the estimate will fluctuate very heavily (and could be zero or significantly negative). From the perspective of an external funder, it's hard to be convinced of robust cost-effectiveness (or, speaking for myself as a researcher, it's hard to validate).

(2) I think we would both agree that AGI (and to a lesser extent, GCR more broadly) is 80,000 Hours' primary focus.

I suppose the disagreement then is the extent to which neartermist work gets any focus at all. This is to some extent subjective, and also dependent on hard-to-observe decision-making and resource allocation done internally. With (a) the team not currently planning to focus on neartermist content for the website (the most visible thing), (b) the career advisory/1-1 work being very AGI-focused too (to my understanding), and (c) fundamentally, OP being 80,000 Hours' main funder, with all of OP's 80k grants coming from the GCR capacity building team over the past 2-3 years, I think that from an outside perspective, a reasonable assumption is that AGI/GCR accounts for >=75% of marginal resources committed. I exclude the job board from this analysis because I understand it absorbs comparatively little internal FTE right now.

The other issue we seem to disagree on is whether 80k has made its prioritization sufficiently obvious. I appreciate that this is somewhat subjective, but it might be worth erring on the side of being too obvious here. I think the relevant metric would be "Does an average EA who looks at the job board or signs up for career consulting understand that 80,000 Hours prefers I prioritize AGI?", and I'm not sure that's the case right now.

(3) Bad career jobs: this was a concern aired, but we didn't have much time to investigate it; we just flag it as a potential risk for people to consider.

(4) Similarly, we deprioritized the issue of whether getting people into AI companies worsens AI risk. We leave it to potential donors to weigh the pros and cons (e.g. per Ben's article) and to make their decisions accordingly.

Hey Joel, I'm wondering if you have recommendations on (1) or on the transparency/clarity element of (2)?

(Context being that I think 80k do a good job on these things, and I expect I'm doing a less good job on the equivalents in my own talent search org. Having a sense of what an 'even better' version might look like could help shift my sort of internal/personal Overton window of possibilities.)

Hi Jamie,

For (1), I agree with 80k's approach in theory; it's just that cost-effectiveness is likely heavily driven by the cause-level impact adjustment, so you'll want to model that in a lot of detail.

For (2), I think just declaring up front what you think is the most impactful cause(s) and what you're focusing on is pretty valuable. And when people do apply/email, it's worth adding that sort of caveat as well. For our own GHD grantmaking, we try to declare on our front page that our current focus is NCD policy, and if someone approaches us about grants, we make clear what our current grant cycle is focused on.

Hope my two cents is somewhat useful!

Makes sense on (1). I agree that this kind of methodology is not very externally legible and depends heavily on cause prioritisation, sub-cause prioritisation, your view on the most impactful interventions, etc. I think it's worth tracking for internal decision-making even if external stakeholders might not agree with all the ratings and decisions. (The system I came up with for Animal Advocacy Careers' impact evaluation suffered similar issues within animal advocacy.)

For (2), I'm not sure why you don't think 80k do this. E.g. the page on "What are the most pressing world problems?" has the following opening paragraph:

Then the actual ranking is very clear: AI 1, pandemics 2, nuclear war 3, etc.

And the advising page says quite prominently "We’re most helpful for people who... Are interested in the problems we think are most pressing, which you can read about in our problem profiles." The FAQ on "What are you looking for in the application?" mentions that one criterion is "Are interested in working on our pressing problems".

Of course it would be possible to make it more prominent, but it seems like they've put these things pretty clearly up front.

It seems pretty reasonable to me that 80k would want to talk to people who seem promising but don't share all the same cause prio views as them; supporting people to think through cause prio seems like a big way they can add value. So I wouldn't expect them to try to actively deter people who sign up and seem worth advising but, despite the clear labelling on the advising page, don't already share the same cause prio rankings as 80k. You also suggest "when people do apply/email, it's worth making that sort of caveat as well", and that seems in the active deterrence ballpark to me; to the effect of 'hey are you sure you want this call?'

On (2): if you go to 80k's front page (https://80000hours.org/), there is no mention that the organization's focus is AGI or that they believe it to be the most important cause. For the other high-level pages accessible from the navigation bar, things are similarly unclear. For example, in "Start Here", you have to read 22 paragraphs down to understand 80k's explicit prioritization of x-risk over other causes. In the "Career Guide", it's about halfway down the page. In the 1-1 advising tab, you have to go down to the FAQs at the bottom of the page, and even then it only refers to "pressing problems" and links back to the research page. And on the research page itself, the issue is that it doesn't give a sense that the organization strongly recommends AI over the rest, or that x-risk gets the lion's share of organizational resources.

I'm not trying to be nitpicky, but to convey that a lot of less engaged EAs (or people who are just considering impactful careers) are coming in, reading the website, and maybe browsing the job board or thinking of applying for advising, without realizing just how convinced on AGI 80k is (and correspondingly, not realizing how strongly they will be sold on AGI in advisory calls). This may not be limited to less engaged EAs either, depending on how you define engaged: I started reading Singer two decades ago, have been a GWWC pledger since 2014, and whenever giving to GiveWell have actually taken the time to examine their CEAs and research reports. And yet until I actually moved into direct EA work via the CE incubation program, I didn't realize how AGI-focused 80k was.

People will never get the same mistaken impression when looking at Non-Linear or Lightcone or BERI or SFF. I think part of the problem is (a) putting up a lot of causes on the problems page, which gives the reader the impression of a big tent/broad focus, and (b) having normie aesthetics (compare: longtermist websites). While I do think it's correct and valuable to do both, the downside is that without more explicit clarification (e.g. what Non-Linear does, just bluntly saying on the front page in font 40: "We incubate AI x-risk nonprofits by connecting founders with ideas, funding, and mentorship"), the casual reader of the website doesn't understand that 80k basically works on AGI.

Yeah many of those things seem right to me.

I suspect the crux might be that I don't necessarily think it's a bad thing if "the casual reader of the website doesn't understand that 80k basically works on AGI". E.g. if 80k adds value to someone as they go through the career guide, even if they don't realise that "the organization strongly recommends AI over the rest, or that x-risk gets the lion's share of organizational resources", is there a problem?

I would be concerned if 80k was not adding value. E.g. I can imagine more salesy tactics that look like making a big song and dance about how much the reader needs their advice, without providing any actual guidance until they deliver the final pitch, where the reader is basically given the choice of signing up for 80k's view/service, or looking for some alternative provider/resource that can help them. But I don't think that that's happening here.

I can also imagine being concerned if the service was not transparent until you were actually on the call, and then you received some sort of unsolicited cause prioritisation pitch. But again, I don't think that's what's happening; as discussed, it's pretty transparent on the advising page and cause prio page what they're doing.

Very interesting report. It provided a lot of visibility into how these funders think.

I would have embraced that more in the past, but I'm a lot more skeptical of this these days and I think that if it were possible then it would have worked out. For many tasks, EA wants the best talent that is available. Top talent is best able to access overseas opportunities and so the price is largely independent of current location.

I agree that there is a lot of alpha in reaching out to mid-career professionals, if you are able to successfully achieve this. This work is a lot more challenging: mid-career professionals are often harder to reach, less able to pivot, and have less time available to upskill. Fewer people are able to do this kind of outreach because these professionals may take current students or recent grads less seriously. So for a lot of potential movement builders, focusing on students or young professionals is a solid play because it is the best combination of impact and personal fit.

As an on-the-ground community builder, I'm skeptical of your take. So many people that I've talked to became interested in EA or AI safety through 80,000 Hours. Regarding "misleading the community that it is cause neutral while being almost exclusively focused on AI risk", I was more concerned about this in the past, but I feel that they're handling this pretty well these days. I would be quite interested to hear if you had specific ways you thought they should change. Regarding causing early-career EAs to choose suboptimal early career jobs that mess up their CVs, I'd love to hear more detail on this if you can share it. Has anyone written up a post on this?

Rethink Priorities seems to be a fairly big org, especially taking into account that it operates on the meta level, so I understand why OpenPhil might be reluctant to spend even more money there. I suspect that there's a feeling that even if they are doing good work, meta work should only be a certain portion of their budget. I wouldn't take that as a strong signal.

As someone on the ground, I can say that community building has translated to talent moved at the very least. Money moved is less visible to me because a lot of people aren't shouting about the pledges from the rooftops, plus the fact that donations are very heavy-tailed. Would love to hear some thoughtful discussion of how this could be better measured.

Very curious about what impressed Open Philanthropy about these people since I'm not familiar with their work. Would be keen to learn more though!

Hi Chris,

Just to respond to the points you raised:

(1) With respect to prioritizing India/developing-country talent, it probably depends on the type of work (e.g. direct work in GHD/AW suffers less from this), but in any case, the pool of talent is big and the cost savings are substantial, so it might be reasonable to go this route regardless.

(2) Agreed that it's challenging, but I guess it's a chicken vs egg problem - we probably have to start somewhere (e.g. HIP etc does good work in the space, we understand).

(3) For 80k, see my discussion with Arden above - AGB's views are also reasonably close to my own.

(4) On Rethink - to be fair, our next statement after that sentence is "This objection holds less water if one is disinclined to accept OP's judgement as final." I think OP's moral weights work, especially, is very valuable.

(5) There's a huge challenge over valuing talent, especially early career talent (especially if you consider the counterfactual being earning to give at a normal job). One useful heuristic is: Would the typical EA organization prefer an additional 5k in donations (from an early career EA giving 10% of their income annually) or 10 additional job applications to a role? My sense from talking to organizations in the space is that (a) the smaller orgs are far more funding constrained, so prefer the former, and (b) the bigger orgs are more agnostic, because funding is less a challenge but also there is a lot of demand for their jobs anyway.

(6) I can't speak for OP specifically, but I (and others in the GHD policy space I've spoken to) think that Eirik is great. And generally, in GHD, the highest impact work is convincing governments to change the way they do things, and you can't really do that without positions of influence.

For 1: A lot of global health and development is much less talent-hungry than animal welfare work or x-risk work. Take for example the Against Malaria Foundation. They receive hundreds of millions of dollars, but they only have a core team of 13. Sure, you need a bunch of people to hand out bed nets, but the requirements for that aren't that tight; and sure, you need some managers, but lots of people are capable of handling this kind of logistics, so you don't really have to headhunt them. I suppose this could change if there was more of a pivot into policy, where talent really matters. However, in that case, you would probably want people from the country whose policy you want to influence, more so than thinking about cost.

For 5: It's not clear to me that the way you're thinking about this makes sense. If you're asking about the trade-off between direct work and donations, it seems as though we should ask about $5k from the job vs. a new candidate who is better than your current candidate, as in a lot of circumstances they will have the option of earning to give so long as they don't take an EA job (I suppose there is the additional factor of how much chasing EA jobs detracts from chasing earn-to-give jobs).

1) I agree that policy talent is important but comparatively scarce, even in GHD. It's the biggest bottleneck that Charity Entrepreneurship is facing on incubating GHD policy organizations right now, unfortunately.

5) I don't think it's safe to assume that the new candidate is better than your current candidate? While I agree that's fine for dedicated talent pipeline programmes, I'm not confident of making this assumption for general community building, which is by its nature less targeted and typically more university/early-career oriented.

My point was that presumably the org thinks they're better if they decide to hire them as opposed to the next best person.

I apologize if we're talking at cross purposes, but the original idea I was trying to get across is that when valuing additional talent from community building, there is the opportunity cost of a non-EA career where you just give. So basically you're comparing (a) the value of money from that earning to give vs (b) the value of the same individual trying for various EA jobs.

The complication is that (i) the uncertainty of the individual really following through on the intention to earn to give (or to go into an impactful career) applies to both branches; however, (ii) the uncertainty of success only applies to (b). If they really try to earn to give, they can trivially succeed (e.g. give 10% of the average American salary, so maybe $5k, ignoring adjustments for lower salaries for younger individuals and higher salaries for typically elite-educated EAs). However, if they apply to a bunch of EA jobs, they aren't necessarily going to succeed (i.e. they aren't necessarily going to be better than the counterfactual hire). So ultimately we're comparing the value of an additional $5k annual donation vs an additional ~10 applications of average quality to various organizations (this depends on how many organizations an applicant will apply to per annum, which is very uncertain).

I also can't speak with certainty as to how organizations will choose, but my sense is that (a) smaller EA organizations are funding constrained and would prefer getting the money; while (b) larger EA organizations are more agnostic because they have both more money and the privilege of getting the pick of the crop for talent (c.f. high demand for GiveWell/OP jobs).

Okay, I guess parts of that framework make a bit more sense now that you've explained it.

At the same time, it feels that people can always decide to earn to give if they fail to land an EA-relevant gig, so I'm not sure why you're modeling it as a $5k annual donation vs. a one-time $5k donation for someone spending a year focusing on upskilling for EA roles. Maybe you could add an extra factor for the slowdown in their career advancement, but $50k extra per year is unrealistic.

I think it's also worth considering that there are selection effects here. So insofar as EA promotes direct work, people with higher odds of being successful in landing a direct work position are more likely to pursue that and people with better earn-to-give potential are less likely to take the advice.

Additionally, I wonder whether the orgs you surveyed understood ten additional applications as ten additional average applications or ten additional applications from EAs (more educated and value-aligned than the general population) who were dedicated enough to actually follow through on earning to give.

I think you're right in pointing out the limitations of the toy model, and I strongly agree that the trade-off is not as stark as it seems. It's more realistic to model it as a delay from applying to EA jobs before settling for a non-EA job (and this delay won't be anything like a year).
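A rough sketch of how the two framings in this exchange differ, assuming a flat $5k annual gift (the figure used above), no discounting, no career-advancement effects, and a hypothetical 10-year horizon. The function name and parameters are illustrative only, not part of any model in the report:

```python
from typing import Optional


def forgone_donations(annual_gift: float, horizon_years: int,
                      delay_years: Optional[int] = None) -> float:
    """Total donations forgone over a horizon vs earning to give from year 0.

    Hypothetical toy model: flat gifts, no discounting, no salary growth.
    - delay_years=None: the original framing, where pursuing EA jobs means
      the person never earns to give, so every year's gift is lost.
    - delay_years=k: the delay framing, where the person gives nothing for
      k years while applying to EA jobs, then earns to give as normal.
    """
    if delay_years is None:
        lost_years = horizon_years
    else:
        lost_years = min(delay_years, horizon_years)
    return annual_gift * lost_years


# Hypothetical 10-year horizon at $5k per year
print(forgone_donations(5_000, 10))                 # 50000
print(forgone_donations(5_000, 10, delay_years=1))  # 5000
```

The gap between the two framings scales with whatever horizon is assumed; a fuller version would add discounting and any slowdown in career advancement, as raised in the thread.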

However, I do worry that the focus on direct work means people generally neglect donations as a path to impact, so the practical impact of deciding to go for an EA career is that people decide not to give. An unpleasant surprise I got from talking to HIP and others in the space is that the majority of EAs probably don't actually give. Maybe it's the EA boomer in me speaking, but it's a fairly different culture compared to 10+ years ago, when being EA meant you bought into the drowning child arguments and gave 10% or more to whatever cause you thought most important.

I’m confused by the use of the term “expert” throughout this report. What exactly is the expertise that these individual donors and staff members are meant to be contributing? Something more neutral like ‘stakeholder’ seems more accurate.

Generally, they have a combination of the following characteristics: (a) a direct understanding of what their own grantmaking organization is doing and why, (b) deep knowledge of the object-level issue (e.g. what GHD/animal welfare/longtermist projects to fund), and (c) extensive knowledge of the overall meta landscape (e.g. in terms of what other important people/organizations there are, the background history of EA funding up to a decade in the past, etc.).

I'd suggest using a different term or explicitly outlining how you use "expert" (ideally both in the post and in the report, where you first use the term), since I'm guessing that many readers will expect that if someone is called "expert" in this context, they're probably "experts in EA meta funding" specifically, e.g. someone who's been involved in the meta EA funding space for a long time, or someone with deep knowledge of grantmaking approaches at multiple organizations. (As an intuition pump and personal datapoint, I wouldn't expect "experts" in the context of a report on how to run good EA conference sessions to include me, despite the fact that I've been a speaker at EA Global a few times.) Given your description of "experts" above, which seems like it could include (for instance) someone who's worked at a specific organization and maybe fundraised for it, my sense is that the default expectation of what "expert" means in the report would thus be mistaken.

Relatedly, I'd appreciate it if you listed numbers (and possibly other specific info) in places like this:

E.g. the excerpt above might turn into something like the following:

We interviewed [10?] [experts], including staff at [these organizations] and donors who have supported [these organizations]. We also ran an "EA Meta Funding Survey" of people involved in the EA community and got 25 responses.

This probably also applies in places where you say things like "some experts" or that something is "generally agreed". (In case it helps, a post I love has a section on how to be (epistemically) legible.)

I’m inferring from other comments that AGB as an individual EtG donor is the “expert [who] funded [80k] at an early stage of their existence, but has not funded them since” mentioned in the report. If this is the case, how do individual EtG donors relate to the criteria you mention here?

Executive summary: The EA meta funding landscape saw rapid growth and contraction from 2021-2023, with Open Philanthropy being the largest funder and longtermism receiving the most funding, while the landscape faces high uncertainty in the short-to-medium term.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

I think this is conditional on the object-level positions available being reasonably well-suited to mid-career folks. For instance, job security becomes increasingly important as one ages. Even to the extent that successful mid-career folks face a risk of being let go in their current positions, they know they should be able to find another position in their field pretty easily. Likewise, positions in some cause areas may not pay well enough to attract much interest from successful mid-career professionals in certain geographic locations who have a kid and a mortgage. Those areas are probably better off with the current recruiting focus, at least in developed countries.

I think this is a legitimate concern, but it's not clear to me that it outweighs the benefits, especially for roles where experience is essential.