Key points

- We surveyed UK respondents, asking them to list (without prompting) the three factors they thought most likely to cause human extinction in the next 100 years.

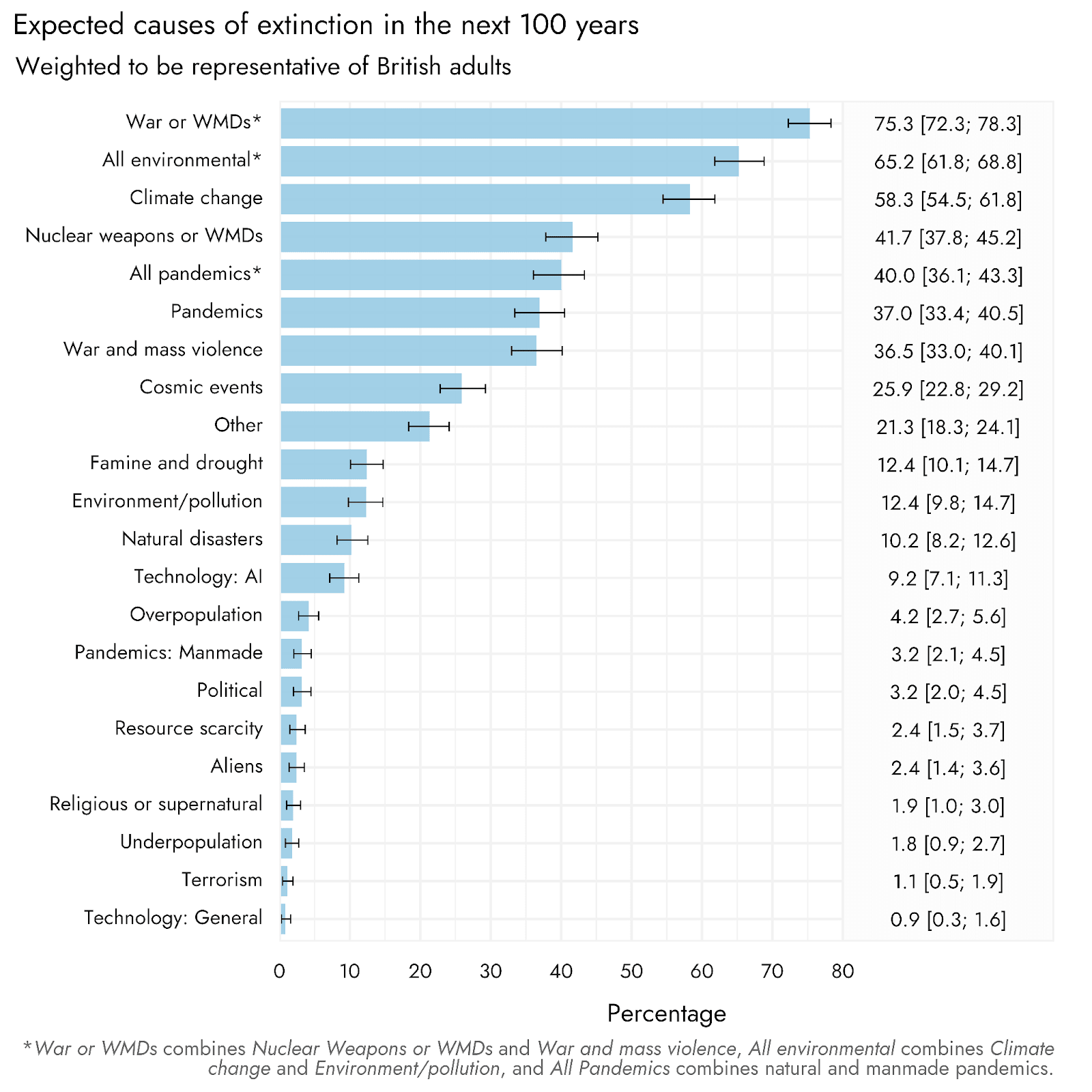

- We estimate AI risk to be reported by 9% of British adults, at a similar level to Natural disasters (10%).

- The relative ordering of risks aligns well with previous polling from YouGov in 2023, using an alternative methodology. Climate change (58%) and Nuclear weapons/WMDs (42%) were top concerns, followed by Pandemics (37%).

- Some demographic effects were quite large. For example, men were substantially more likely than women to raise the issue of nuclear weapons.

- Polling suggests AI risk is not a wholly niche issue, but is substantially less of a concern to the public than risks such as nuclear weapons or climate change.

This post is also available on Rethink Priorities' website, or to download as a PDF here.

Introduction

Increasing attention is being paid to risks from AI, but it is unclear to what extent the general public are concerned about or even aware of AI as a serious risk. This has relevance for understanding to what extent, and in what manner, we should engage in outreach about AI risk. Public perception of AI risk may also affect the viability and palatability of AI policy proposals.

Previous work from Rethink Priorities has considered US respondents’ expectations of extinction from AI by 2100, finding that while the single most common response was a very low (~1%) likelihood, the median estimate was around a 15% likelihood. YouGov has also conducted longitudinal polling that asked respondents to pick, from a range of possible threats, up to three factors they think most likely to cause extinction. In 2023, approximately 17% of respondents selected AI/robots, relative to 55% selecting Nuclear war and 38% picking Climate change. The Existential Risk Observatory (ERO) took a different approach, asking American and Dutch respondents to freely respond with what they thought were the top three possible causes of extinction by 2100. This ‘open comment’ approach may be more likely to pick up on those threats that more spontaneously occur to respondents and are more ‘top of mind’. It also precludes respondents selecting risks that they had never previously considered or heard of[1]. It therefore represents an interesting alternative to ratings or selection of items that respondents are provided with. ERO reported that approximately 7% of US respondents and 11% of Dutch respondents included AI risk in their open responses.

Rethink Priorities were asked to include such an open comment question in our large-scale Pulse survey, but this was not feasible due to the excessive time requirements and consequent cost that open comments represent in large-scale studies. However, we agree that this open comment format can provide insights into people’s spontaneous risk perceptions. Accordingly, between July 31st and August 2nd 2024, we fielded a survey including this open comment format to ~1500 adults from Great Britain. The results of this survey are presented here. Our work builds upon previous work using this open comment format by drawing upon a substantially larger sample size, weighting responses to be representative of the British adult population, providing estimates for perceptions of other risks, providing assessments of how various demographic features may be associated with different risks, and analyzing associations amongst risks.

Methodology in brief

Between July 31st and August 2nd 2024 we fielded an online survey on Prolific to approximately 1500 adults resident in Great Britain, with a final sample size of 1452 following quality checks. Besides demographic information, the key question was an open comment about what respondents considered to be the most likely causes of extinction in the next 100 years: “In the spaces provided below, please list three things that you think would be most likely to lead to the extinction of humanity in the next 100 years”. Based upon a pilot study of this same question conducted previously, previous research on perceived risks of extinction, and assessment of these responses, we generated a range of perceived extinction risks into which responses could be categorized. We then used GPT-4o-mini to categorize each response according to these categories and rate how well the response fitted into that category, as well as to separately check each categorization and confirm or dispute the assigned category. These categorizations were then manually checked and confirmed/edited[2]. The possible categories were:

- Nuclear weapons or WMDs – References to nuclear war or to nuclear weapons, nuclear radiation, as well as other weapons of mass destruction such as chemical weapons. References to nuclear energy or nuclear accidents are not included.

- War and mass violence – References to war more generally, world wars, fighting amongst nations, and mass violence, that do not reference nuclear weapons or other WMDs.

- Climate change – specific references to climate change or global warming, or to things that are confidently related to climate change e.g., rising sea levels, but not things like drought or hurricanes. This category should not include simple references to ‘weather’. References to oil and fossil fuels can be included, but not if this reference is talking about a lack of fossil fuels.

- Environment/pollution – references to other environmental issues not specifically related to global warming, such as references to pollution or air quality, or simply the environment.

- Natural disasters – not referencing climate change or the environment, but talking about more general extreme natural events such as storms, fires, hurricanes, tsunamis, and earthquakes.

- Technology: AI – references to robots, AI, or artificial intelligence.

- Technology: General – references to technology more generally, but not specifically mentioning AI.

- Cosmic events – references to things such as asteroids, meteors, solar flares, and other cosmic events.

- Famine and drought/food and water shortages – references to things such as famine, food shortages, lack of water, and drought.

- Resource scarcity – references to a lack of resources or using up all our resources, excluding cases that would better be placed in famine and drought.

- Religious or supernatural – references to things such as simply God, the judgment of God, the coming of the apocalypse, and other clear references to the intervention of God.

- Underpopulation – references to population collapse, people not having children, infertility, and similar things indicating concern over underpopulation.

- Overpopulation – references to overpopulation, overcrowding, too many people, and similar things indicating concerns to do with overpopulation.

- Pandemics – references to pandemic viruses and diseases, including references to specific cases such as COVID. If people simply mention ‘diseases’ then we assume they mean something like a pandemic, but things such as ‘cancer’ or ‘obesity’ are not included.

- Pandemics: Manmade – As above, but where the person notes that these are the result of human-caused experimentation or bioterrorism.

- Terrorism – references to acts of terrorism or violent extremism, but not including bioterrorism.

- Political – references to more general political stances or concerns, such as blaming a particular political figure, party, or ideology.

- Aliens – references to aliens or extraterrestrials, including alien invasion and things such as UFOs.

- Gibberish – when people entered a nonsensical or key-mashed comment. If more than one gibberish response was detected for a respondent, that respondent was excluded.

- Other – other answers that do not fit any of the above categories.

For our topline percentage estimates of the British public selecting each of the different risks, responses were weighted to be representative of the British adult population according to Age, Ethnicity, Gender, Region, Education, and 2024 General Election vote.
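To illustrate the general idea of weighting, here is a minimal sketch that weights on a single variable only. It is not the procedure used for the estimates in this post, which weighted on several variables at once and whose exact method is not described here. The function names, the toy sample, and the 50/50 population shares are all hypothetical.

```python
from collections import Counter

def poststratification_weights(sample_groups, population_shares):
    """Weight each respondent by (population share / sample share) of their group."""
    n = len(sample_groups)
    sample_shares = {g: c / n for g, c in Counter(sample_groups).items()}
    return [population_shares[g] / sample_shares[g] for g in sample_groups]

def weighted_proportion(mentions, weights):
    """Weighted share of respondents who mentioned a given risk."""
    mentioned_weight = sum(w for m, w in zip(mentions, weights) if m)
    return mentioned_weight / sum(weights)

# Hypothetical toy sample: 6 women and 4 men, so women are over-represented
# relative to an assumed 50/50 population split.
groups = ["female"] * 6 + ["male"] * 4
# Hypothetical responses: True if the respondent mentioned the risk.
mentions = [True, False, False, False, False, False, True, True, False, False]

weights = poststratification_weights(groups, {"female": 0.5, "male": 0.5})
unweighted = sum(mentions) / len(mentions)
weighted = weighted_proportion(mentions, weights)
```

In this toy case the weighted estimate is higher than the unweighted one, because the under-represented group mentioned the risk more often and is weighted up.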

Expected causes of extinction

Figure 1 shows the estimated proportions of the British public who would freely recall different types of risk. Among single categories, the most common answer was Climate Change at 58%, followed by Nuclear Weapons/WMDs at 42% and Pandemics at 37%. When some categories are combined, the more general category of War or WMDs takes top place, being referenced by 75% of people, with general environmental concerns (combining climate change with more general environmental/pollution references) coming second at 65%. We estimate 9% of the British public would raise concerns over AI risk. This places AI risk slightly behind Natural Disasters (at 10%), and substantially behind Cosmic Events (at 25%). In terms of the relative placement of these risks, these findings follow quite closely those of YouGov, for which the order was Nuclear War (55%), Climate Change (38%), Pandemics (29%), Meteor/Asteroid (20%; i.e., Cosmic Events), and then AI at 17% (paired with another environmental concern of bees dying out). The percentage estimated to pick AI in the present study was also approximately similar to the 7-11% suggested by the ERO.

Figure 1: Population-adjusted estimates for the probability of picking each cause of extinction.

Associations between demographic variables and selected risks

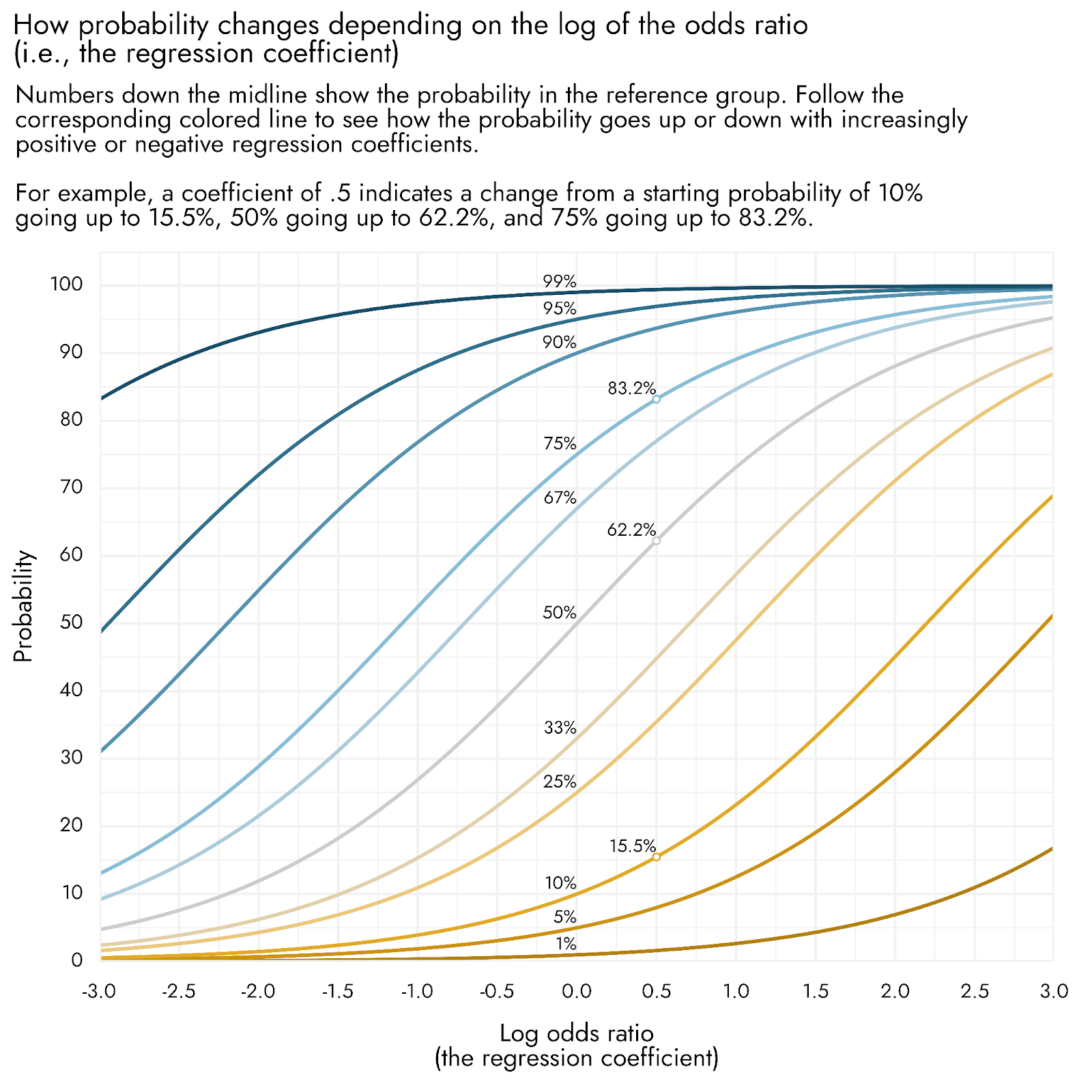

Besides overall population estimates, it may be of interest to assess whether certain demographic features are associated with higher or lower likelihoods of selecting each category. In the plots below, we’ve focused on the most important outcomes considering their prevalence in the population and their relevance to the field of existential risk: Nuclear Weapons/WMDs, Pandemics, Climate Change, and AI. Plots depict the coefficients from binary logistic regression models that included as predictors Gender, Ethnicity, Education (modeled from -1 = Less than GCSE, 0 = GCSE or A-levels, 1 = Graduate or Postgraduate degree), Age (centered at age 45 and divided by 5, i.e., the coefficient represents a change up or down in 5 years of age), and 2024 General Election vote[3]. The implications of such regression coefficients can be a little difficult to intuitively grasp, so we have provided a graph below that can aid in interpretation (Figure 2).

Figure 2: Visual aid for interpreting binary logistic regression coefficients.

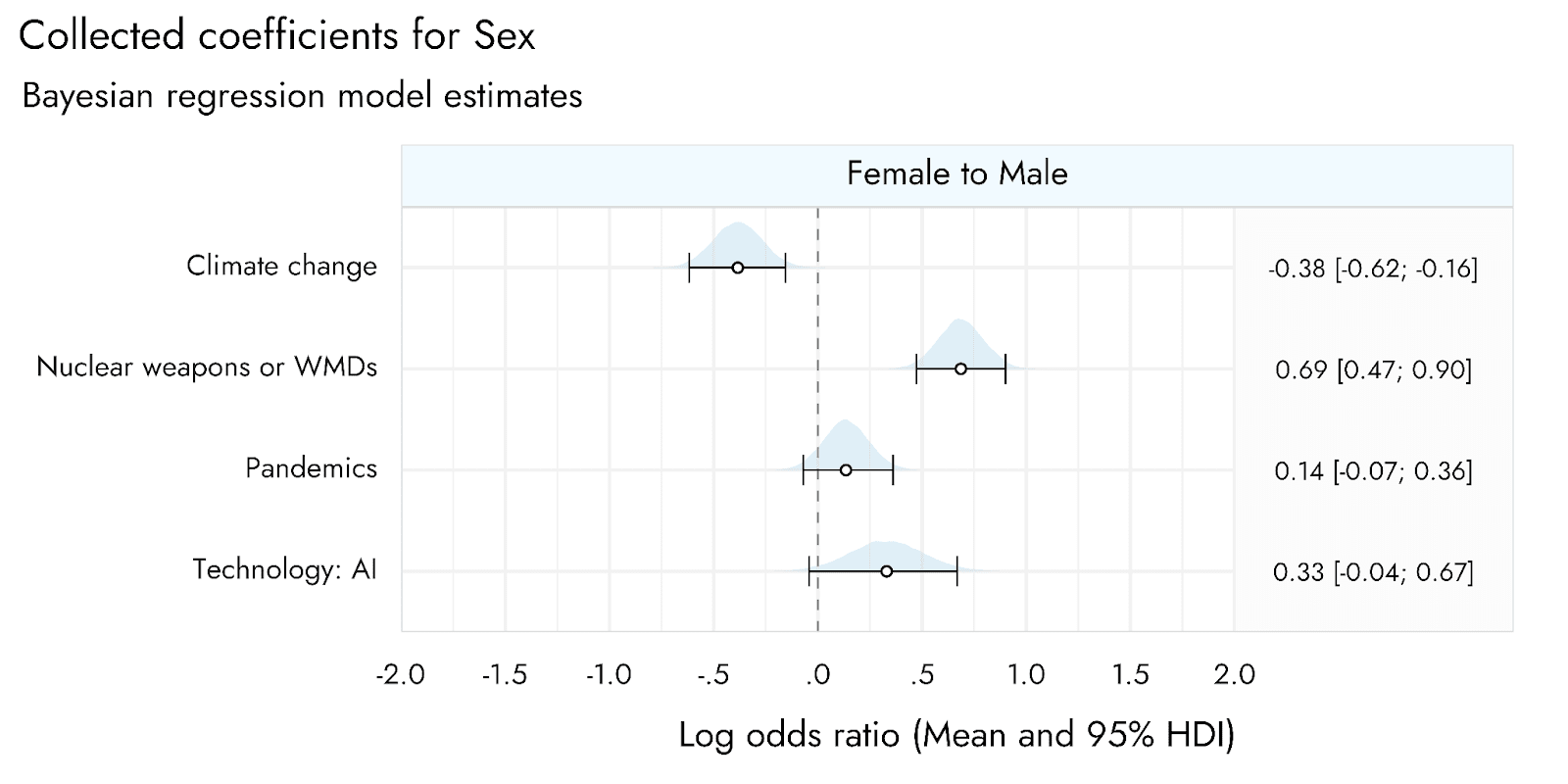

With respect to sex, we found that male respondents, relative to female respondents, were reliably more likely to mention Nuclear Weapons/WMDs, and less likely to mention Climate Change, as causes of extinction (Figure 3). There was also a tendency for male respondents to mention AI more, although the uncertainty around this coefficient means that negligible or no differences between male and female respondents were well within the realm of possibility.

Figure 3: Associations between respondent Sex and the likelihood of selecting each of four key extinction risks.

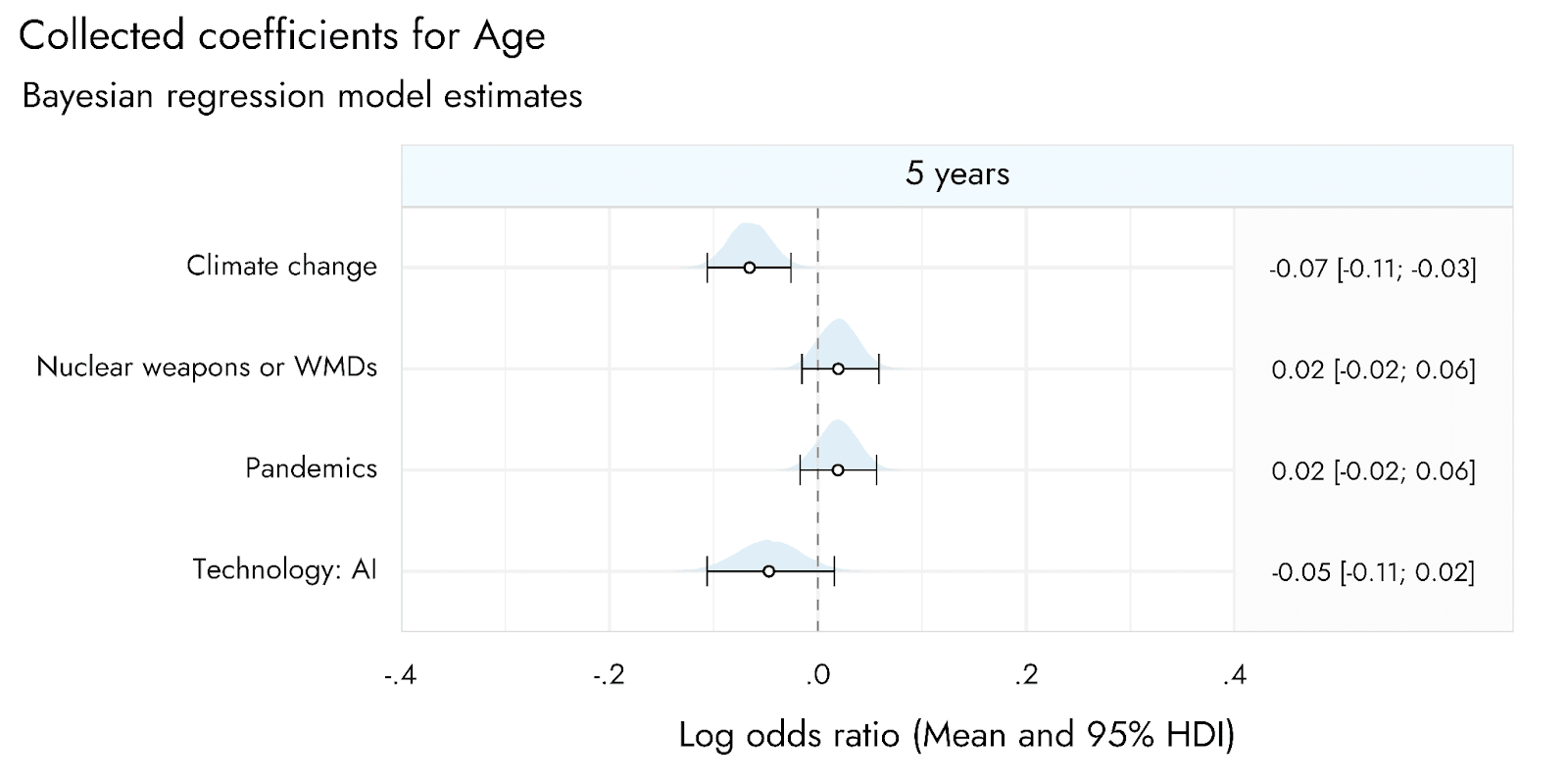

With respect to Age, we found that being older was associated with a decreasing tendency to raise climate change as a potential source of extinction (Figure 4). The coefficient for this effect was quite small (-.07), but would be multiplied by, for example, 4 if the age groups you were comparing differed by 20 years, as the coefficient reflects a shift of 5 years.

Figure 4: Associations between respondent Age and the likelihood of selecting each of four key extinction risks.

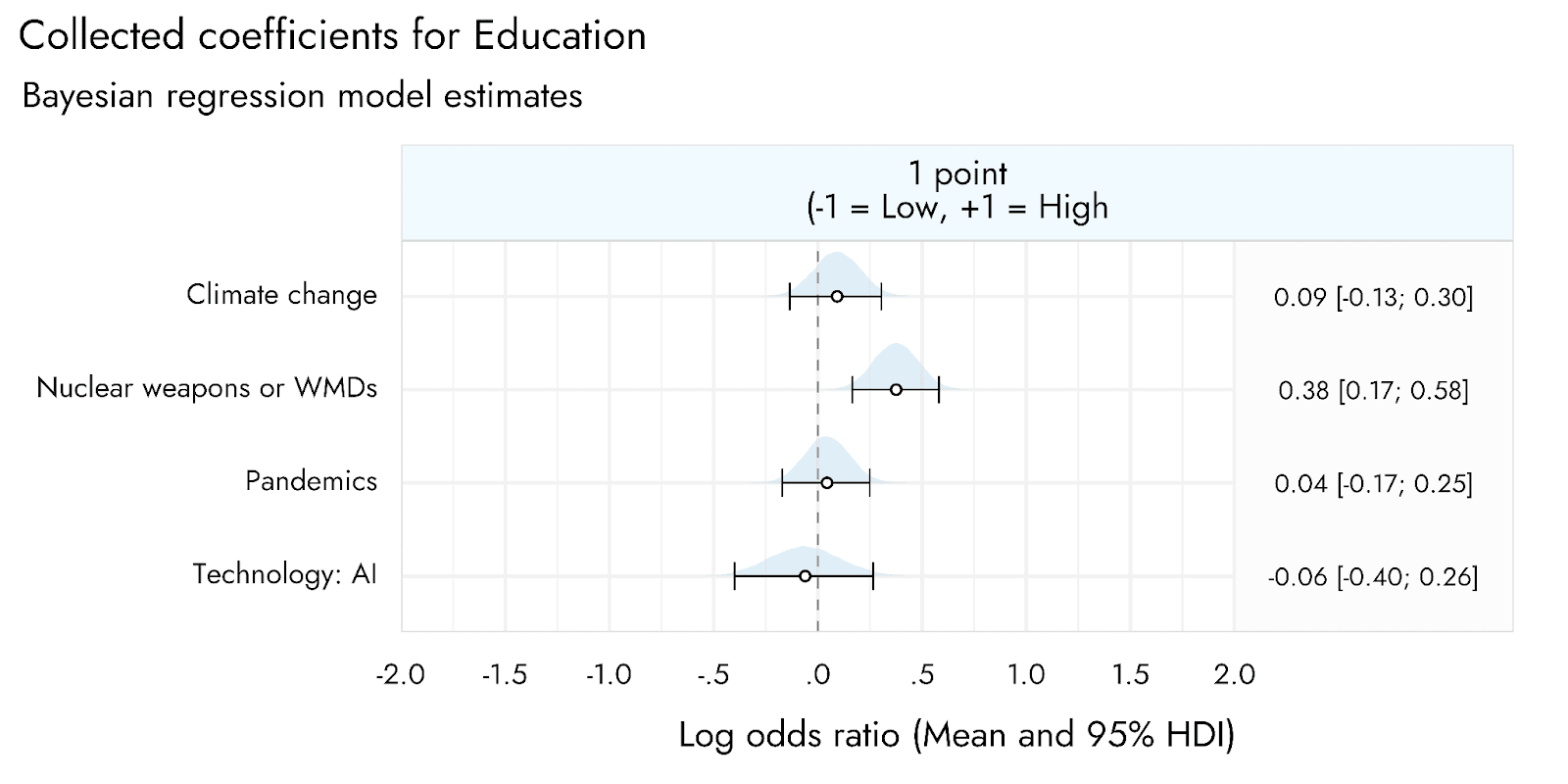
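As a rough worked example of the age scaling described above, the sketch below converts the -.07 coefficient into an approximate change in probability for a 20-year age gap. The function name is hypothetical, and the 58% starting point is simply the topline Climate Change estimate used as an illustrative baseline. It is not the model's intercept, so the result should be read as indicative only, with all other predictors assumed to be held fixed.

```python
import math

def shift_probability(baseline_probability, coefficient, units):
    """Apply a logistic regression coefficient to a baseline probability.

    The coefficient is on the log-odds scale, so the baseline is converted
    to log-odds, shifted by coefficient * units, and converted back.
    """
    log_odds = math.log(baseline_probability / (1 - baseline_probability))
    shifted = log_odds + coefficient * units
    return 1 / (1 + math.exp(-shifted))

age_gap_years = 20
units = age_gap_years / 5            # the coefficient reflects a 5-year shift
log_odds_change = -0.07 * units      # four 5-year steps on the log-odds scale
older_probability = shift_probability(0.58, -0.07, units)
```

Under these assumptions, a 20-year age gap corresponds to a shift of -0.28 in log-odds, which would move an illustrative 58% probability of mentioning climate change down to roughly 51%.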

For education, we found that higher levels of education were associated with a greater tendency to raise Nuclear Weapons/WMDs as a potential extinction risk, with coefficients for the other risks centering around no differences (Figure 5).

Figure 5: Associations between respondent Education level and the likelihood of selecting each of four key extinction risks.

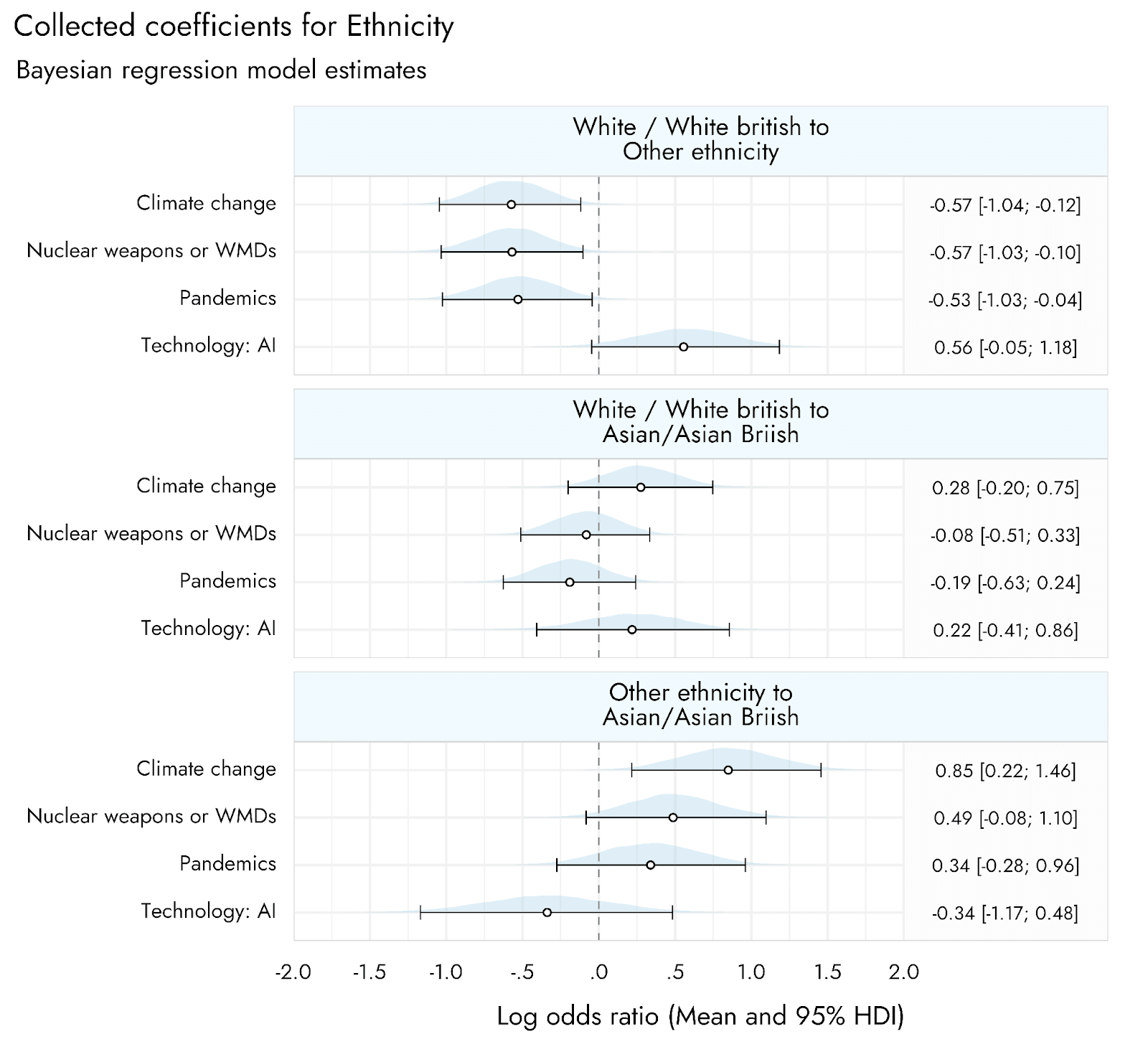

For ethnicity, a somewhat interesting pattern emerged when comparing White/White British respondents with those of Other ethnicities (that is, ethnicities other than White/White British and Asian/Asian British): for Climate Change, Nuclear Weapons/WMDs, and Pandemics, identifying as one of these other ethnicities was associated with a lower likelihood of mentioning the risk relative to White/White British respondents, whereas the association tended in the other direction for AI risk (Figure 6).

Figure 6: Associations between respondent Ethnicity and the likelihood of selecting each of four key extinction risks.

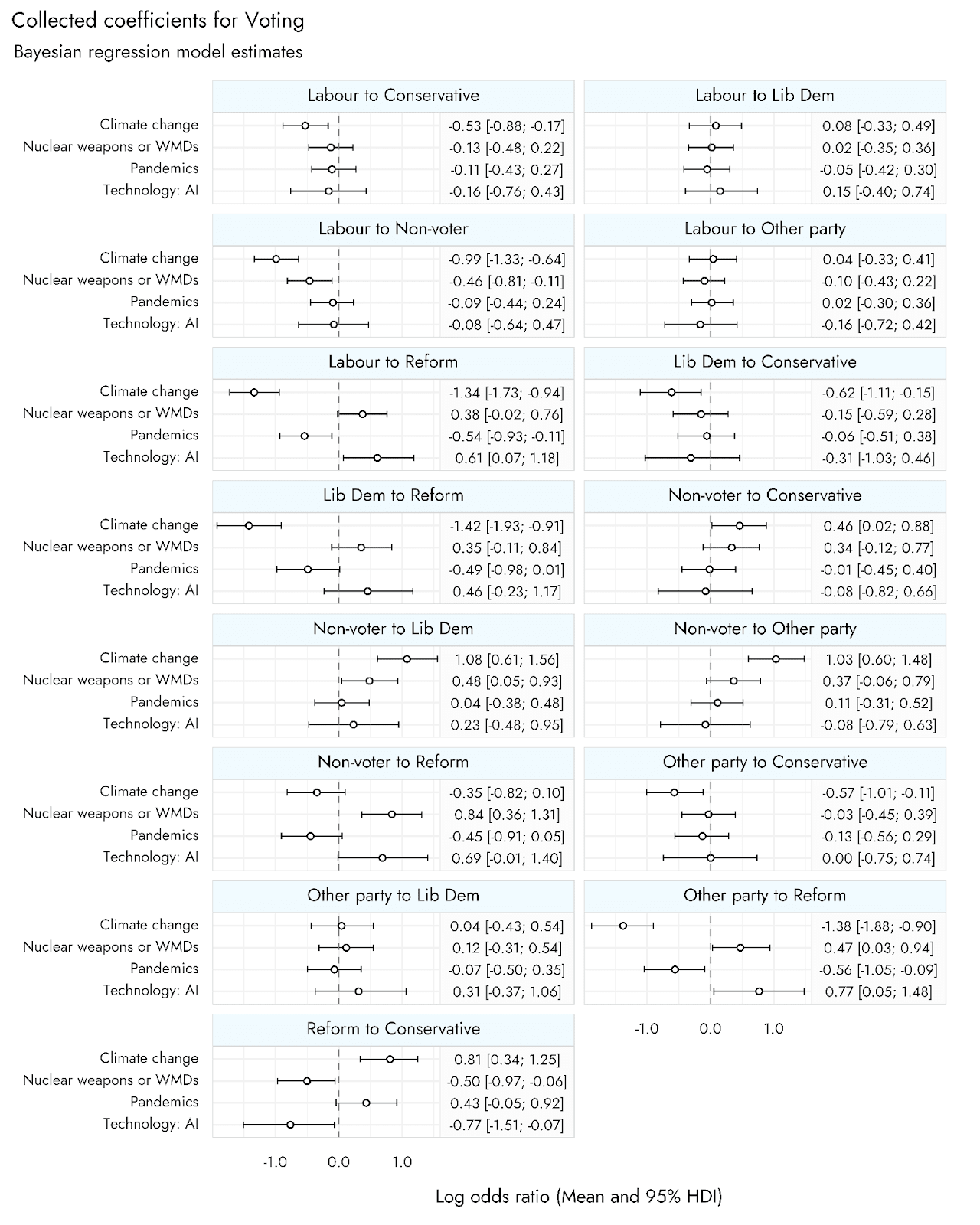

Finally, with respect to politics, several potentially interesting effects were observed (Figure 7). We found that those voting for relatively more left wing or liberal parties (Labour, Liberal Democrat) tended to be more likely to raise the issue of Climate change than relatively more right wing/conservative voters (Conservatives, Reform). Those who did not vote also seemed especially less likely to raise Climate change. One surprising observation was that Reform voters tended to be the most likely to raise the issue of AI. This is surprising in that, among all the parties, Reform were notable in not raising AI concerns in their manifesto. We think this potential effect should be interpreted cautiously, as we have no immediate explanation for it. One possibility may simply be that the much lower propensity for Reform voters to bring up the most commonly reported risk, Climate change (and to a lesser extent pandemics), left many such voters with space to include a less obvious risk. It would be interesting in future research to assess different voters’ ratings of the likelihood of or concern about extinction from various risks that they are provided with, as opposed to free recall. If in that case Reform voters also gave higher credence to AI risk than did those of other political parties, then it would indicate that this difference in AI risk perception among Reform voters is not just an artifact of the free recall methodology.

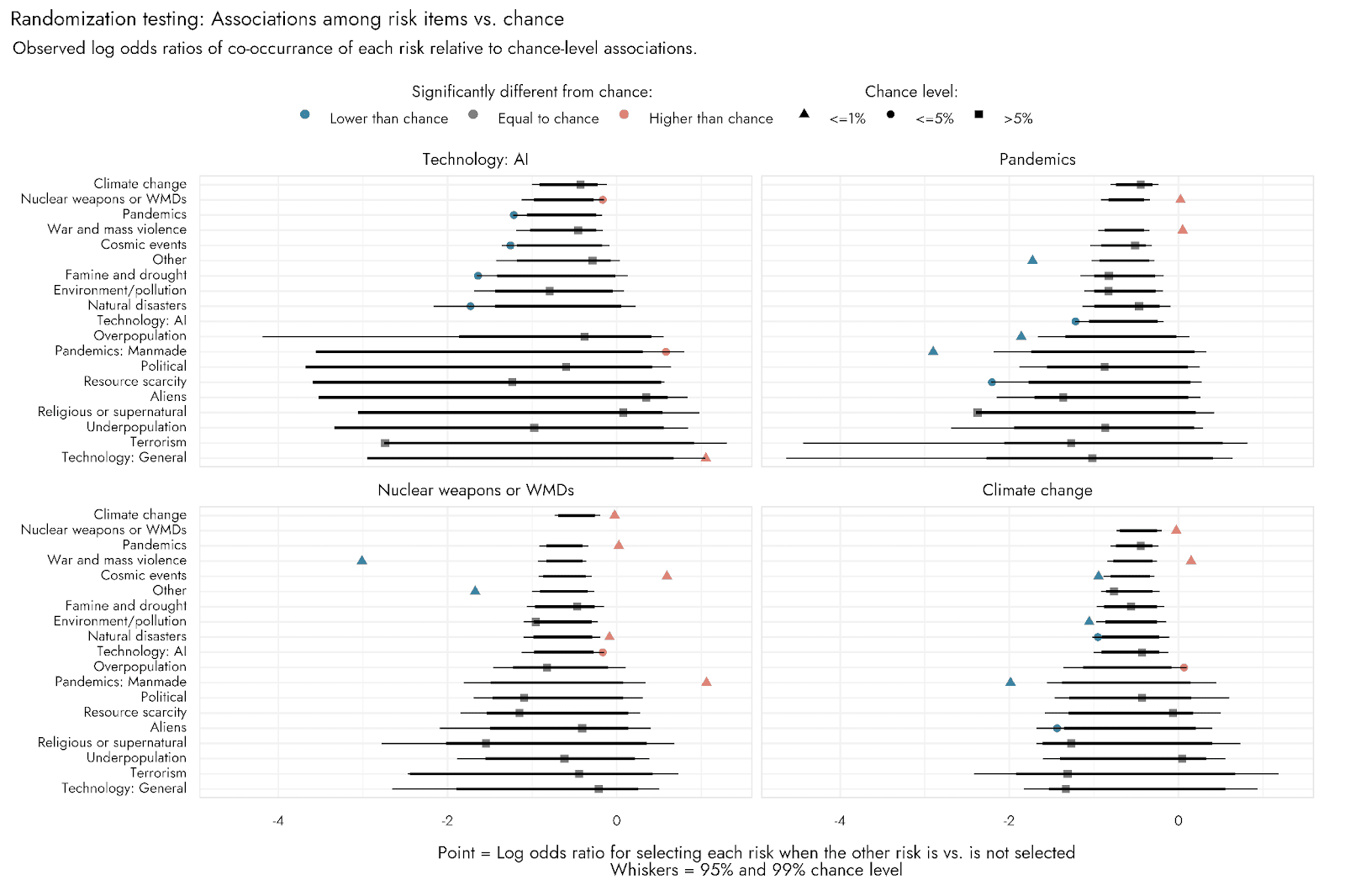

Associations amongst risks can be found in the Appendix - we did not observe associations that we believe to be of sufficient robustness and interest to emphasize, though we expect that there may be interesting patterns among perceived risks if respondents were presented with all risks and provided ratings for them, which would provide greater power than a selection of up to three risks.

Figure 7: Associations between respondent 2024 vote and the likelihood of selecting each of four key extinction risks.

Discussion

Based on the freely chosen risk selections of the British public, we found that War/Nuclear Weapons and WMDs ranked foremost among perceived risks of extinction, followed by Climate Change/Environmental concerns, then Pandemics, and then Cosmic risks. This ranking of events is remarkably consistent with YouGov polling of the British public using an alternative format, in which respondents were provided with a range of possible risks and required to select up to three from among them. As with the YouGov polling, AI was ranked lower than these risks, and similarly to Natural Disasters.

The precise percentages of different risks being selected differed between free recall and pollster-provided choices. For example, when AI was provided to respondents in YouGov polling, it was selected by around 17% of respondents, whereas we estimate it would be raised freely by around 9% of the British public. For other risks, free recall produced higher percentages than when choices were provided: our estimate for climate change was 58%, compared with 38% in the YouGov polling. Some such percentage differences may be due to our respondents having overwhelmingly selected three options, whereas summing the percentages for the YouGov poll suggests most people selected two items. This would naturally lead to lower percentages in the YouGov polling. That AI was nonetheless more prevalent when provided as an option may therefore be informative. One speculative possibility is that people do not spontaneously entertain the idea of existential AI risk, but find it plausible when prompted with it. Alternatively, people may consider it, but think it may look silly to say so and therefore self-censor. This concern would be mitigated if AI risk were presented as an option for them, indicating it is an acceptable answer to provide.

The free recall methodology used in this study provides interesting insights into spontaneously-generated risk perceptions. It avoids some important limitations of surveys that ask respondents to select the most likely causes of extinction from a fixed list, which risks prompting respondents to consider particular causes, while ignoring others. However, at the same time, this method will only capture risks that people would think of spontaneously, without prompting, even though they might cite other risks as likely if asked about them directly. In addition, any method that limits the number of options that can be indicated, whether through free response or selections from a list, means that certain demographic trends could be partly explained by this restriction, and there may be less ability to detect and cleanly interpret associations amongst items.

A final limitation is that we only know that something was perceived as a potential risk, and not how likely this was considered to be. A prior survey we conducted with US respondents suggested a median response of 15% probability that AI would cause human extinction before 2100. Further interesting work could be done to apply a different methodology with the British public and assess whether the observed demographic trends and associations amongst risks are preserved, as well as to field such questions to members of the US public for comparison.

Taken in combination with other polling, our findings suggest that the possibility of extinction from AI is not a completely niche concern in the general public. AI was raised as a potential cause of extinction by members of the British public who could freely choose any risk that came to mind. However, AI risk still ranked behind concerns such as climate change and nuclear war[4].

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. We invite you to explore our research database and stay updated on new work by subscribing to our newsletter.

Appendix: Associations between causes of extinction

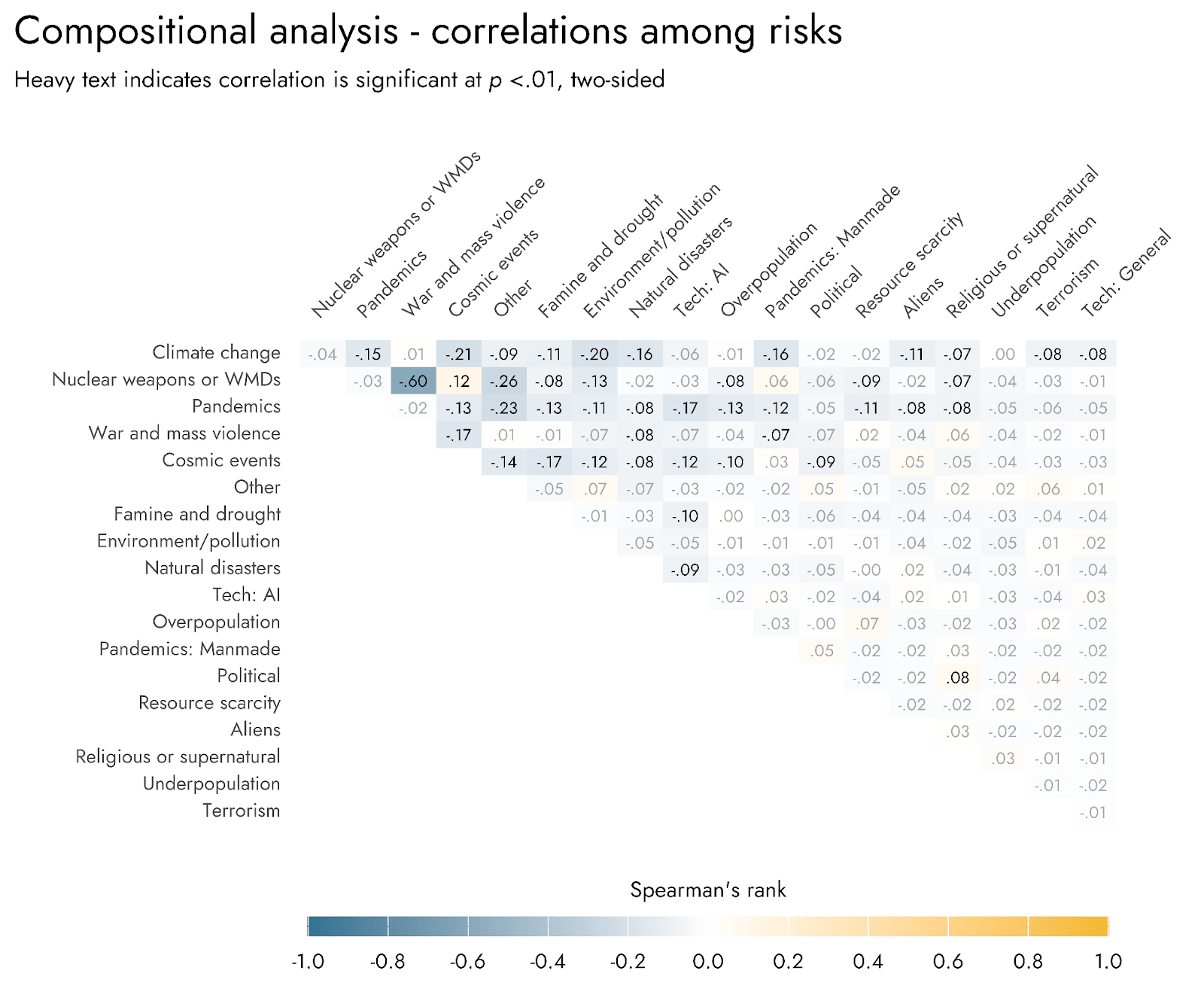

In addition to looking at associations between demographic variables and each risk category, we explored associations amongst the risks. Because people are limited to only selecting up to three risks, by default, all items will have negative correlations with one another: the fact that one risk was selected means that there is less opportunity for the other risks to appear among the selected items. We used two approaches to address this: firstly, a compositional analysis approach in which the item selections are transformed in such a way that this default negative association is mitigated[5]. Secondly, a randomization-based approach, in which we generated 1000 permutations of the data in which the observed dependencies amongst the items were removed, while preserving the relative counts of different items and the number of items each respondent picked. This enables testing of the extremity of observed associations among the items relative to associations that arise simply by chance, by virtue of the general structure of the data.
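The randomization-based idea can be sketched roughly as follows. This is an illustrative implementation under our own assumptions, not the analysis code used for this post: it uses a simple pairwise swap procedure, which is one common way to shuffle selections while keeping both the total count of each item and the number of items per respondent fixed. The toy data, function names, and the number of swaps are all hypothetical.

```python
import random

# Hypothetical toy data: each set holds the categories one respondent listed.
toy_selections = [
    {"Climate change", "Nuclear weapons", "Pandemics"},
    {"Climate change", "Nuclear weapons", "Cosmic events"},
    {"Climate change", "Pandemics", "AI"},
    {"Nuclear weapons", "Cosmic events", "War"},
    {"Climate change", "Overpopulation"},
    {"Pandemics", "AI", "War"},
]

def shuffle_preserving_margins(selections, n_swaps, rng):
    """Shuffle items between respondents while keeping each respondent's
    number of items and each item's overall count unchanged."""
    rows = [set(row) for row in selections]
    for _ in range(n_swaps):
        a, b = rng.sample(range(len(rows)), 2)
        only_a = sorted(rows[a] - rows[b])  # items a has that b lacks
        only_b = sorted(rows[b] - rows[a])  # items b has that a lacks
        if not only_a or not only_b:
            continue  # no valid swap for this pair
        x, y = rng.choice(only_a), rng.choice(only_b)
        rows[a].remove(x)
        rows[a].add(y)
        rows[b].remove(y)
        rows[b].add(x)
    return rows

def co_occurrence(rows, item_1, item_2):
    """Number of respondents who listed both items."""
    return sum(1 for row in rows if item_1 in row and item_2 in row)

def share_at_least_observed(selections, item_1, item_2,
                            n_perm=1000, n_swaps=200, seed=0):
    """Share of shuffled datasets whose co-occurrence count is at least
    as large as the count in the observed data."""
    rng = random.Random(seed)
    observed = co_occurrence(selections, item_1, item_2)
    hits = 0
    for _ in range(n_perm):
        shuffled = shuffle_preserving_margins(selections, n_swaps, rng)
        if co_occurrence(shuffled, item_1, item_2) >= observed:
            hits += 1
    return hits / n_perm
```

For example, `share_at_least_observed(toy_selections, "Nuclear weapons", "Cosmic events")` gives the share of shuffled datasets in which those two items co-occur at least as often as in the toy data. A small share would suggest the observed co-occurrence is unusually high relative to what the structure of the data alone produces.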

Results from the compositional analysis are presented in the correlation matrix below (Figure A1). Results from the randomization-based analysis are presented in Figure A2.

Figure A1: Pairwise Spearman’s rank correlations between selected risks.

Figure A2: Associations between selected risks as assessed by randomization testing.

This investigation of associations among perceived risks did not reveal any especially insightful associations among the observed risk items. In both forms of analysis, the strongest associations were a negative association between choosing Nuclear Weapons/WMDs and War and Mass Violence, and between choosing Climate Change and Environment/Pollution. This is most likely because in each case, the specific choices were representative of more overarching categories for respondents (i.e., if they specifically chose Nuclear War, they would not also choose War, and if they chose War, they might have felt it was implicit in this that they were including Nuclear War. The same applies for specifically referencing climate change relative to referencing more general environmental concerns). This fits the observation that the overarching categories constructed from these more specific categories were quite close to a simple summation of those specific categories.

Tentatively, we highlight a handful of associations that were apparent across analytic approaches for our key risks of interest. Firstly, concerns about Overpopulation appeared to be attenuated amongst those who raised the concern of Pandemics. Expecting that diseases might wipe out large proportions of the population could be a check on concerns about the earth becoming overpopulated. Secondly, respondents noting concern over Nuclear risk were more likely to also select Cosmic events. Though highly speculative, it is possible that such respondents are more attuned to highly tangible/dramatic forms of extinction, exemplified by nuclear explosions or asteroid strikes.

However, we would urge against over-interpreting these associations. The difficulties of assessing associations due to the inherent negative associations this free recall methodology induces, as well as the binary nature of the data it produces, may be an argument for also including a more typical ratings-based or probability-based assessment of a pre-specified selection of risks. This would increase the power to detect associations among different risks.

[1] This is not a strict benefit, as in some cases you may wish to know how perceptions would be affected if people were made aware of an issue. Whether free recall of items or rating of items is preferred depends on the research question at hand.

[2] The categorizations provided by GPT are very good, although we have not fully formally assessed this and therefore consider it necessary to perform manual checking. However, by sorting the responses according to how they were categorized, as well as the confidence and disputation ratings, such manual checking can be done much more rapidly than if manual coding were done from scratch.

[3] We also included region as a random effect, such that the estimates account for general regional variation, but we do not provide estimates by region.

[4] This was a recurring theme in one of our prior surveys of US respondents.

[5] Specifically, a centered log-ratio transformation was used. This approach required filtering for those respondents who selected exactly 3 items, resulting in a sample size of 1302.

Thanks! Do you want this shared more widely?

Note that I estimate that putting these findings on a reasonably nice website (I generally use Webflow) with some nice interactive embeds (Flourish is free to use and very functional at the free tier) would take between 12 and 48 hours of work. You could probably reuse a lot of the work in the future if you do future waves.

I am also wondering if someone should do a review/meta-analysis to aggregate public perception of AI and other risks? There are now many surveys and different results, so people would probably value a synthesis.

Thanks Peter! We'd be happy for this to be shared more widely.

Agreed. I'd also be interested to see more work systematically assessing how these responses vary across different means of posing the question.

Hi Peter, thanks - I'll be updating the post on Monday to link to where it is now on our website with the PDF version, but I may be adding a little information related to AAPOR public opinion guidelines in the PDF first. Sharing widely after that would be very much appreciated!

Ok, I plan to share the PDF, so let me know when it is good to go!

Thanks so much, it's updated now - this is a direct link to the pdf that should work for you https://rethinkpriorities.org/s/British-public-perception-of-existential-risks.pdf

Nice study!

At first glance, results seem pretty similar to what we found earlier (https://www.existentialriskobservatory.org/papers_and_reports/Trends%20in%20Public%20Attitude%20Towards%20Existential%20Risk%20And%20Artificial%20Intelligence.pdf), giving confidence in both studies. The question you ask is the same as well, great for comparison! Your study seems a bit more extensive than what we did, which seems very useful.

Would be amazing to know whether a tipping point in awareness, according to (non xrisk) literature expected to occur somewhere between 10% and 25% awareness, will also occur for AI xrisk!

Thanks for doing this!

I don't know how useful the results are, as extinction is not the only existentially catastrophic scenario I care about. And I wonder if and how the ranking changes when the question is about existential catastrophe. For example, do people think AI is unlikely to cause extinction but likely to cause a bad form of human disempowerment?

Thanks Siebe!

I think that would also be interesting, but there's still value in replicating previous surveys which focused on extinction specifically.

I also think that a focus on extinction specifically may be useful for tracking changes in concerns about severe risks from AI. Assessing perceptions of other severe risks from AI will be a lot more methodologically challenging, since there's more of a continuum from very severe non-extinction threats to less severe threats (e.g. will harm autonomy in some much less severe way), so it will be difficult to ensure respondents are thinking of non-extinction threats which are of interest to us. That said, I agree that studying what non-extinction threats people perceive from AI would be independently interesting and important.