tl;dr: Advocacy to the public is a large and neglected opportunity to advance AI Safety. AI Safety as a field is unfamiliar with advocacy, and it has reservations, some founded and others not. A deeper understanding of the dynamics of social change reveals the promise of pursuing outside game strategies to complement the already strong inside game strategies. I support an indefinite global Pause on frontier AI and I explain why Pause AI is a good message for advocacy. Because I’m American and focused on US advocacy, I will mostly be drawing on examples from the US. Please bear in mind, though, that for Pause to be a true solution it will have to be global.

The case for advocacy in general

Advocacy can work

I’ve encountered many EAs who are skeptical about the role of advocacy in social change. While it is difficult to prove causality in social phenomena like this, there is a strong historical case that advocacy has been effective at bringing about the intended social change through time (whether that change ended up being desirable or not). A few examples:

- Though there were many other economic and political factors that contributed, it is hard to make a case that the US Civil War had nothing to do with humanitarian concern for enslaved people– concern that was raised by advocacy. The people’s, and ultimately the US government’s, will to abolish slavery was bolstered by a diverse array of advocacy tactics, from Harriet Beecher Stowe’s writing of Uncle Tom’s Cabin to Frederick Douglass’s oratory to the uprisings of John Brown.

- The US National Woman’s Party is credited with pressuring Woodrow Wilson and federal and state legislators into supporting the 19th Amendment, which guaranteed women the right to vote, through its “aggressive agitation, relentless lobbying, clever publicity stunts, and creative examples of civil disobedience and nonviolent confrontation”.

- The nationwide prohibition of alcohol in the US (1920-1933) is credited to the temperance movement, which had all manner of advocacy gimmicks including the slogan “the lips that touch liquor shall never touch mine”. Likewise, the stigmatization of drunk driving and the legal drinking age of 21 are directly linked to Mothers Against Drunk Driving.

Even if advocacy only worked a little of the time or only served to tip the balance of larger forces, the stakes of AI risk are so high and AI risk advocacy is currently so neglected that I see a huge opportunity.

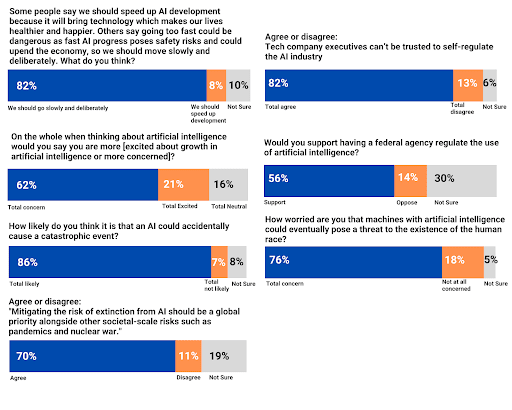

We can now talk to the public about AI risk

With the release of ChatGPT and other advances in state-of-the-art artificial intelligence in the last year, the topic of AI risk has entered the Overton window and is no longer dismissed as “sci-fi”. But now, as Anders Sandberg put it, the Overton window is moving so fast it’s “breaking the sound barrier”. The below poll from AI Policy Institute and YouGov (released 8/11/23) shows comfortable majorities among US adults on questions about AI x-risk (76% worry about extinction risks from machine intelligence), slowing AI (82% say we should go slowly and deliberately), and government regulation of the AI industry (82% say tech executives can’t be trusted to self-regulate).

What having the public’s support gets us

- Opinion polls and voters that put pressure on politicians. Constituent pressure on politicians gives the AI Safety community more power to get effective legislation passed– that is, legislation which addresses safety concerns and requires us to compromise less with other interests– and it gives the politicians more power against the AI industry lobby.

- The ability to leverage external pressure to improve existing strategies. With external pressure, ARC, for example, wouldn’t have to worry as much about being denied access to frontier models. With enough external pressure, the AI companies might be begging ARC to evaluate their work so that the people and the government get off their back! There isn’t much reason for AI companies to agree to corporate campaigns that ask them to adopt voluntary changes now, but those campaigns would be a lot more successful if pledging to them allowed AI companies to improve their image to a skeptical public.

- The power of the government to slow industry on our side. Usually, it is considered a con of regulation that it slows or inhibits an industry. But, here, that’s the idea! Just to give an example, even policies that require actors to simply enumerate the possible effects of their proposals can immensely slow large projects. The National Environmental Policy Act (NEPA) requires assessments of possible effects on the environment to be written ahead of certain construction projects. It has been estimated (pdf, p. 14-15) that government agencies alone spend $1 billion a year preparing these assessments, and the average time it takes a federal entity to complete an assessment is 3.4 years. (The cost to private industry is not reported but expected to be commensurate.) In a world where some experts are talking about 5-year AI x-risk timelines, adding a few years to the AI development process would be a godsend.

Social change works best inside and outside the system

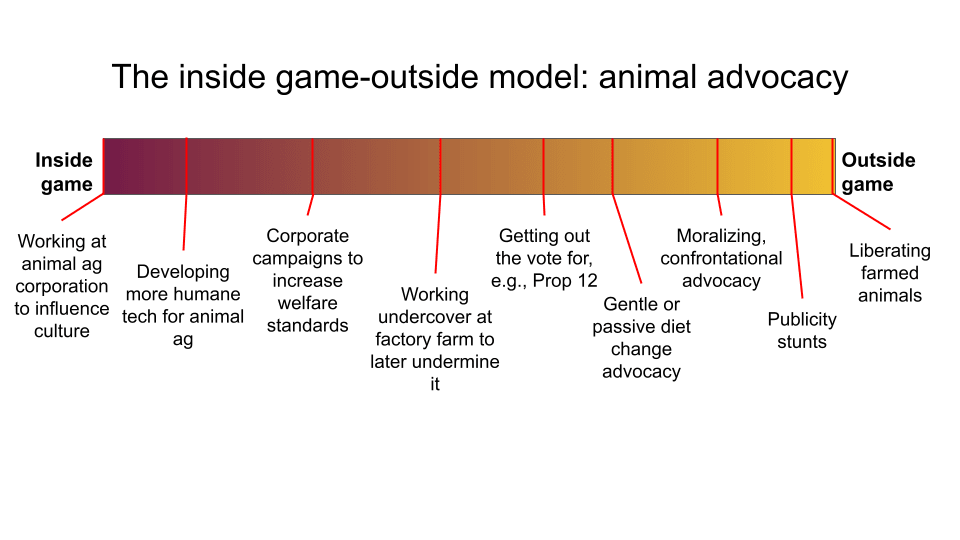

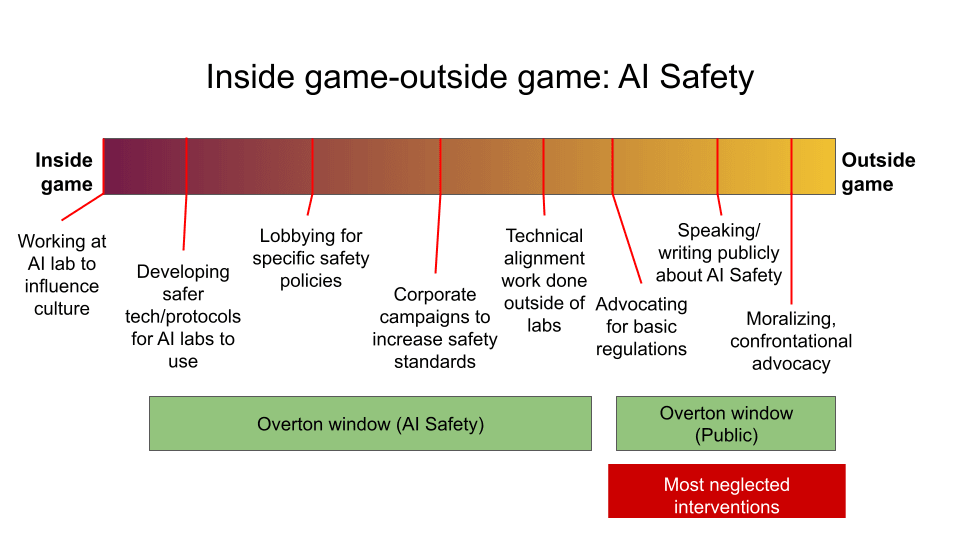

I believe that, for a contingent historical reason, EA AI Safety is only exploiting half of the spectrum of interventions. Because AI risk was previously outside the Overton window (and also, I believe, due to the technical predilections and expertise of its members), the well-developed EA AI Safety interventions tend to be “inside game”. Inside game means working within the system you’re trying to change, and outside game means working outside the system to put external pressure on it. The inside-outside game dynamic has been written about before in EA, to describe animal welfare tactics (incrementalist and welfarist vs radical liberationist). I also find it easier to start with the well-fleshed out example of my former cause area.

Here I’ve laid out some examples of interventions that fall across the inside-outside game spectrum in animal advocacy. I believe the spread in animal advocacy as a whole is fairly well-balanced. Effective animal altruist (EAA) interventions tend to be on the inside side, and rightly so, as these interventions were more neglected in the existing space when EAAs came on the scene. Animal welfare corporate campaigns and the ballot initiatives Question 3 (Massachusetts) and Proposition 12 (California) are responsible for a huge amount of EA’s impact. I have heard from several people/organizations that they want to create “a Humane League for AI” and do corporate campaigns for AI reforms as a superior alternative to the public advocacy I was proposing, but the Humane League holds protests. Not only that, the Humane League is situated in an ecosystem where, if they don’t highlight the conditions animals are in, someone else, like the more radical Direct Action Everywhere, might do something like break into factory farms in the middle of the night, rescue some animals, and film the conditions they were in. The animal agriculture companies are more disposed to make voluntary welfare commitments when doing so is better for them than the alternative. This is how inside-gamers and outside-gamers can work together to get more of the change they want.

In AI Safety, inside game interventions, like working at an AI company, can confuse the public about the level of danger we are in, because it’s not intuitive to most people that you would help build a dangerous technology to protect us from it. This can lead the public to think inside gamers are insincere, or attempting something like “regulatory capture”.

There’s also a risk to the AI Safety movement of getting captured by industry by hanging around inside it or depending on it for a paycheck. I believe AI Safety is already too complacent with the harms of AI companies and too friendly to AI company-adjacent narratives, such as that AI isn’t too dangerous to build because other technologies have been made safely, that (essentially) only their technology can solve alignment, or that cooperation with them to gain access to their models is the best way to pursue alignment (based on private conversations, I believe this is ARC’s approach). Not only does outside game directly synergize with inside game, but advocacy of the vanguard position pushes the Overton window in that direction, further increasing the chance of success through inside game by making those interventions seem more moderate and less controversial.

Pros and potential pitfalls of advocacy

Other pros of advocacy

Besides being the biggest opportunity we have at the moment, there are other pluses to advocacy:

- We can recruit entirely new people to do it, because it draws on a different skill set. There’s no need to compete with alignment or existing governance work. I myself was not working on AI Safety before Pause advocacy was on the table.

- It’s relatively financially cheap. The efficacy of many forms of advocacy, like open letters and protests, depends on sweat equity and getting buy-in, which are hard, but they don’t take a lot of money or materials. (Right now I’m fundraising for my own salary and very little else.)

- Unlike technical alignment or policy initiatives, advocacy is ready to go, because advocacy’s role is not to provide granular policy mechanisms (the letter of the law), but to communicate what we want and why (the spirit of the law).

- Personally, I feel wrong not to share warnings with the public (when we aren’t on track to fix the problem ourselves in a way that requires secrecy), so raising awareness of the problem along with a viable solution to the problem feels like a good in itself.

- And, finally, I predict that advocacy activities could be a big morale boost, if we’d let them. Do you remember the atmosphere of burnout and resignation after the “Death with Dignity” post? The feeling of defeat on technical alignment? Well, there’s a new intervention to explore! And it flexes different muscles! And it could even be a good time!

Misconceptions about advocacy

“AI Safety advocacy will reflect negatively on the entire community and harm inside gamers.”

Many times since April, people have expressed their fear to me that the actions of anyone under a banner of AI Safety will reflect on everyone working on the cause and alienate potential allies. The most common fear is that AI companies will not cooperate with the AI Safety community if other people in the community are uncooperative with them, but I’ve also heard concerns about new and inexperienced people getting into AI policy, such as that uncouth advocates will upset diplomatic relationships in Washington. This does seem possible to me, but I can’t help but think the new people are mostly where the current DC AI insiders were ~5 years ago and they will probably catch up.

Funnily enough, even though animal advocates do radical stunts, you do not hear this fear expressed much in animal advocacy. If anything, in my experience, the existence of radical vegans can make it easier for “the reasonable ones” to gain access to institutions. Even just within EAA, Good Food Institute celebrates that meat-producer Tyson Foods invests in a clean meat startup at the same time the Humane League targets Tyson in social media campaigns. When the community was much smaller and the idea of AI risk more fringe, it may have been truer that what one member did would be held against the entire group. But today x-risk is becoming a larger and larger topic of conversation that more people have their own opinions on, and the risk of the idea of AI risk getting contaminated by what some people do in its name grows smaller.

“The asks are not specific or realistic enough.”

The goal of advocacy is not to formulate ideal policies. The letter of the law is not ultimately in any EA’s hands because, for example, US bills go through democratically elected legislatures that have their own processes of debate and compromise. Suggesting mechanistic policies (the letter of the law) is important work, but it is not sufficient— advocacy means communicating and showing popular support for what we want those policies to achieve (the spirit of the law) to have the greatest chance of obtaining effective policies that address the real problems.

Downsides to advocacy

The biggest downside I see to advocacy is the potential of politicizing AI risk. Just imagine this nightmare scenario: Trump says he wants to “Pause AI”. Overnight, the phrase becomes useless, just another shibboleth, and now anyone who wants to regulate AI is lumped in with Trump. People who oppose Trump begin to identify with accelerationism because Pause just seems unsavory now. Any discussion of the matter among politicians devolves into dog whistles to their bases. This is a real risk that any cause runs when it seeks public attention, and unfortunately I don’t think there’s much we can do to avoid it. Then again, AI is going to become politicized whether we get involved in it or not. (I would argue that many of the predominant positions on AI in the community are already markers of grey tribe membership.) One way to mitigate the risk of our message becoming politicized is to have a big tent movement with a diverse coalition under it, making it harder to pigeonhole us.

Downside risks of continuing the status quo

If we change nothing and the AI Safety community remains overwhelmingly focused on technical alignment and people quietly attempting to reach positions of influence in government, I predict:

- AI labs will control the narrative. If we continue to abdicate advocacy, the public will still receive advocacy messages. They will just be from the AI industry or politicians hoping to spin the issue in their favor. The politics will still get done, just by someone else, with less concern about or insight into AI Safety.

- The EA AI Safety community will continue to entrench itself in strategies that help AI labs, but much of the influence that was hoped for in return will never materialize. The AI companies do not need the EA community and have little reason more than beneficence to do what the EA community wants. We should not be entrusting our future to Sam Altman’s beneficence.

- The EA AI Safety community will continue entrusting much of its alignment-only agenda to racing AI labs, despite suspecting that timelines are likely too short for it to work.

- It may be too late to initiate a Pause later, even if more EAs conclude that is needed, because the issue has become too politicized or because AI labs are too mixed up in their own regulation.

- Society becomes “entangled” with advanced AI and likes using it. People are less amenable to pausing later, even if the danger is clearer.

The case for advocating AI Pause

My broad goal for AI Safety advocacy is to shift the burden of proof to its rightful place, onto AI companies to prove their product is safe, rather than where it currently seems to be, on the rest of us to prove that AGI is potentially dangerous. There are other paths to victory, but, in my opinion, AI Pause is the best message to get us there. When I say AI Pause, I mean a global, indefinite moratorium on the development of frontier models until it is safe to proceed.

Pros and pitfalls of AI Pause

The public is shockingly supportive of pausing AI development. YouGov did a poll of US adults in the week following the FLI Letter release which showed majority support (58-61% across different framings) for a pause on AI research (Rethink Priorities replicated the poll and got 51%). Since then, support for similar statements has remained high among Americans. The most recent such poll, conducted August 21-27, shows 58% support for a 6-month pause.

Pause is possible to implement by taking advantage of chokepoints in the current development pipeline, such as Nvidia’s near monopoly on chip production and the large amount of compute needed for training. It may not always be the case that we have these chokepoints, but by instituting a Pause now, we can slow further changes to the development landscape and have more time to adapt to them.

Because of the substantial resources required, there are also a small number of actors trying to develop frontier ML models today, which makes monitoring not only possible, but realistic. A future where monitoring and compute limitations are very difficult is conceivable, but if we start a Pause ASAP, we can bootstrap our way to at least slowing illicit model development indefinitely, if necessary.

Pause is a robust position and message. Advocacy messages have to be short, clear, and memorable. Many related AI Safety messages take far too many words to accurately convey (and even then, there’s always room for debate!). I do not consider “Align AI” a viable message for advocacy because the topic of alignment is nuanced and complex and misunderstanding even subtle aspects could lead to bad policies and bad outcomes. Similarly, a message like “Regulate AI” is confusing because there are many conflicting ways to regulate any aspect of AI depending on the goal. “Pause AI” is a simple and clear ask that is hard to misinterpret in a harmful way, and it entails sensible regulation and time for alignment research.

A Pause would address all AI harms (or keep those that have already arrived from getting worse), from employment displacement and labor issues to misinformation and the manipulation of social reality to weaponization to x-risk. Currently, AI companies are ahead of regulatory authorities and voters, who are still wrapping their heads around new AI developments. By default, they are being allowed to proceed until their product is proven too dangerous. The Pause message turns that around and puts it on labs to prove that their product is safe enough to develop.

Pause gives the chance for more and better alignment research to take place and it allows for the possibility that alignment doesn’t happen. On balance, I think Pause is not just a good advocacy message but would actually be the best way forward. There is, however, one major potential harm from a Pause policy that merits mentioning: hardware overhang, specifically hardware overhang due to improvements in training algorithms. If compute is limited but the algorithms for using compute to train models continue to get better, which seems likely and is more difficult to regulate than hardware, then using those algorithms on more compute could lead to discontinuities in capabilities that are hard to predict. It could mean that the next large training run puts us unexpectedly over the line into unaligned superintelligence. If putting limits on compute could make increases in training compute more dangerous, that risk needs to be weighed and accounted for. It’s conceivable that this form of overhang could present such a risk that a Pause was too dangerous. One way of mitigating this possibility is to regulate scheduled, controlled increases in compute allowed for training, which Jaime Sevilla has referred to as a “moving bright line”. I don’t believe this compute overhang objection defeats Pause because, on balance, I expect Pause to get us more time for alignment research and to implement solutions to overhang, such as more tightly controlling the production of hardware or through a greater understanding of the training process.

Pause advocacy can be helpful immediately because it doesn’t require us to hammer out exact policies. I have heard the argument that a Pause would be best if it stopped development right at the cusp of superintelligence, so that we could study the models most like superintelligence, and so Pause advocacy should start later. (1) I don’t know how people think they know where the line is, right up to superintelligence, before which development is safe, (2) the Pause would be much more precarious if the next step after breaching it was superintelligence, so we should aim to stop with some cushion if we want the Pause to work, and (3) it will take an unknown amount of time to win support for and implement a Pause, so it’s risky to try to time its execution precisely.

How AI Pause advocacy can effect change

AI Pause advocacy could reduce p(doom) and p(AI harm) via many paths.

- If we advocate Pause, it could lead to a Pause, which would be good (as discussed in the previous section).

- Politicians will take note of public opinion via polls, letters, calls, public writing, protests, etc. and will consider Pause proposals safer bets.

- Some people in power will become directly convinced that Pause is the right policy and use their influence to advocate it.

- Some voters will be convinced that Pause is the right way forward and vote accordingly.

- When we advocate for Pause, it pushes the Overton window for many other AI Safety interventions aimed at x-risk which would also be good if implemented, including alignment or other regulatory schemes. The Pause message combats memes about safety having to be balanced with “progress”, so it creates more room for other kinds of regulation that are focused on x-risk mitigation.

- When we advocate Pause, it shifts the burden of proof from us to prove AI could be dangerous onto those making AI to prove it is safe. This is helpful for many AI Safety strategies, not just Pause.

- For example, a rigorous licensing and monitoring regime or regulations that put an economic burden on the AI industry will become more realistic when the public sees AI development as a risky activity, because politicians will have the support/pressure they need to combat the industry lobby.

- AI companies may voluntarily adopt stronger safety measures for the sake of their public images and to gain the favor of regulators.

- When we advocate Pause, it re-anchors the discussion on a (I think, more appropriate) baseline of not developing AGI instead of the status quo. This will reduce loss aversion toward capabilities gains that might currently seem inevitable and reduce the ability of opponents to paint AI Safety as “Luddite”.

- Much of the public is baffled by the debate about AI Safety, and out of that confusion, AI companies can position themselves as the experts and seize control of the conversation. AI Safety is playing catch-up, and alignment is a difficult topic to teach the masses. Pause is a simple and clear message that the public can understand and get behind that bypasses complex technical jargon and gets right to the heart of the debate– if AI is so risky to build, why are we building it?

- Pause as a message fails safe– it doesn’t pattern match to anything dangerous the way that alignment proposals that involve increasing capabilities do. We have to be aware of how little control we will have over the specifics of how a message to the public manifests in policy. More complex and subtle proposals may be more appealing to EAs, but each added bit of complexity that is necessary to get the proposal right makes it more likely to be corrupted.

Audience questions

Comments on these topics would be helpful to me:

- If you think you’ve identified a double crux with me, please share below!

- To those working in more “traditional” AI Safety: Where in your work would it be helpful to have public support?

- If there’s something unappealing to you about advocacy that wasn’t addressed here or previously in the debate, can you articulate it?

This post is part of AI Pause Debate Week. Please see this sequence for other posts in the debate.

Nice post! If anyone reading this would like to see examples of outside game interventions, check out the Existential Risk Observatory and PauseAI.

Thanks for working on this, Holly, I really appreciate more people thinking through these issues and found this interesting and a good overview over considerations I previously learned about.

I'm possibly much more concerned than you about politicization and a general vague feeling of downside risks. You write:

I spontaneously feel like I'd want you to spend more time thinking about politicization risks than this cursory treatment here indicates.

More generally, I'm pretty positively surprised with how things are going on the political side of AI, and I'm a bit protective of it. While I don't have any insider knowledge and haven't thought much about all of this, I see bipartisan and sensible sounding stuff from Congress, I see Ursula von der Leyen saying AI is a potential x-risk in front of the EU parliament, I see the UK AI Safety Summit, I see the Frontier Model Forum, the UN says things about existential risks. As a consequence, I'd spontaneously rather see more reasonable voices being supportive and encouraging and protective of the current momentum, rather than potentially increasing the adversarial tone and "politicization noise", making things more hot-button, less open and transparent, etc.

One random concrete way public protests could affect things negatively: If AI pause protests had started half a year earlier, would e.g. Microsoft chief executives still have signed the CAIS open letter?

Great post, I agree with most of it!

Overall, I'm in favor of more (well-executed) public advocacy à la AI Pause (though I do worry a lot about various backfire risks (also, I wonder whether a message like "AI slow" may be better)), and I commend you for taking the initiative despite it (I imagine) being kinda uncomfortable or even scary at times!

(ETA: I've become even more uncertain about all of this. I might still be slightly in favor of (well-executed) AI Pause public advocacy but would probably prefer emphasizing messages like conditional AI Pause or AI Slow, and yeah, it all really depends greatly on the execution.)

The inside-outside game spectrum seems very useful. We might want to keep in mind another (admittedly obvious) spectrum, ranging from hostile/confrontational to nice/considerate/cooperative.

Two points in your post made me wonder whether you view the outside-game as necessarily being more on the hostile/confrontational end of the spectrum:

1) As an example for outside-game you list “moralistic, confrontational advocacy” (emphasis mine).

2) You also write (emphasis mine):

This implicitly associates the outside game with radical stunts and with radical, “unreasonable” people.

However, my sense is that outside-game interventions (hereafter: activism or public advocacy) can differ enormously on the hostility vs. considerateness dimension, even while holding other effects (such as efficacy) constant.

The obvious example is Martin Luther King’s activism, perhaps most succinctly characterized by his famous “I have a Dream” speech which was non-confrontational and emphasized themes of cooperation, respect, and even camaraderie.[1] (In fact, King was criticized by others for being too compromising.[2]) On the hostile/confrontational side of the spectrum you had people like Malcolm X, or the Black Panther Party.[3] In the field of animal advocacy, you have organizations like PETA on the confrontational end of the spectrum and, say, Mercy for Animals on the more considerate side.

As you probably have guessed, I prefer considerate activism over more confrontational activism. For example, my guess is that King and Mercy for Animals have done much more good for African Americans and animals, respectively, than Malcolm X and PETA.

(As an aside and to be super clear, I didn’t want to suggest that you or AI Pause is or will be disrespectful/hostile and, say, throw paper clips at Meta employees! :P )

A couple of weak arguments in favor of considerate/cooperative public advocacy over confrontational/hostile advocacy:

Taking a more confrontational tone makes everyone more emotional and tense, which probably decreases truth-seeking, scout-mindset, and the general epistemic quality of discourse. It also makes people more aggressive and might escalate conflict and encourage dangerous emotional and behavioral patterns such as spite, retaliation, or even (threats of) violence. It may also help to bring about a climate where the most outrage-inducing message spreads the fastest. Last, since this is EA, here’s the obligatory option value argument: It seems easier to go from a more considerate to a more confrontational stance than vice versa.

As an aside, (and contrary to what you write in the above quote), I often have heard the fear expressed that the actions of radical vegans will backfire. I’ve certainly witnessed that people were much less receptive to my animal welfare arguments because they’ve had bad experiences with “unreasonable” vegans who e.g. yelled expletives at them.[4] I think you can also see this reflected in the general public where vegans don’t have a great reputation, partly based on the aggressive actions of a few confrontational and hostile vegans or vegan organizations like PETA.

Political science research (e.g., Simpson et al., 2018) also seems to suggest that nonviolent protests are better than violent protests. (Of course, I’m not trying to imply that you were arguing for violent protests, in fact, you repeatedly say (in other places) that you’re organizing a nonviolent protest!) Importantly, the Simpson et al. paper suggests that violent protests make the protester side appear unreasonable and that this is the mechanism that causes the public to support this side less. It seems plausible to me that more confrontational and hostile public activism, even if it’s nonviolent, is more likely to appear unreasonable (especially when it comes to movements that might seem a bit fringe and which don’t yet have a long history of broad public support).

In general, I worry that increasing hostility/conflict, in particular in the field of AI, may be a risk factor for x-risk and especially s-risks. Of course, many others have written about the value of compromise/being nice and the dangers of unnecessary hostility, e.g., Schubert & Cotton-Barratt (2017), Tomasik (many examples, most relevant 2015), and Baumann (here and here).

Needless to say, there are risks to being too nice/considerate but I think they are outweighed by the benefits though it obviously depends on the specifics. (As you imply in your post, it’s probably also true that all public protests, by their very nature, are more confrontational than silently working out compromises behind closed doors. Still, my guess is that certain forms of public advocacy can score fairly high on the considerateness dimension while still being effective.)

To summarize, it may be valuable to emphasize considerateness (alongside other desiderata such as good epistemics) as a core part of the AI Pause movement's memetic fabric, to minimize the probability that it will become more hostile in the future since we will probably have only limited memetic control over the movement once it gets big. This may also amount to pulling the rope sideways, in the sense that public advocacy against AI risk may be somewhat overdetermined (?) but we are perhaps at an inflection point where we can shape its overall tone / stance on the confrontational vs. considerate spectrum.

[1] Examples: “former slaves and the sons of former slave owners will be able to sit down together at the table of brotherhood” and “little black boys and black girls will be able to join hands with little white boys and white girls as sisters and brothers”.

[2] From Wikipedia: “Some Black leaders later criticized the speech (along with the rest of the march) as too compromising. Malcolm X later wrote in his autobiography: "Who ever heard of angry revolutionaries swinging their bare feet together with their oppressor in lily pad pools, with gospels and guitars and 'I have a dream' speeches?"”

[3] To be fair, their different tactics were probably also the result of more extreme religious and political beliefs.

[4] I should note that I probably have much less experience with animal advocacy than you.

Thanks, David :)

There’s such a wide-open field here that we can make a lot of headway with nice tactics. No question from me that should be the first approach, and I would be thrilled and relieved if that just kept working. There’s no reason to rush to hostility, and I don’t know if I would be able to run a group like that if I thought it was coming to that, but there may one day be a place for (nonviolent) hostile advocacy.

I sometimes see people make a similar point to yours in an illogical way, basically asserting that hostility never works, and I don’t agree with that. People think they hate PETA while updating in their direction about whatever issues they are advocating for and promptly forgetting they ever thought anything different. It’s a difficult role to play but I think PETA absolutely knows what they are doing and how to influence people. It’s common in social change for moderate groups to get the credit, and for people to remember disliking the radical groups, but the direction of society’s update was determined by the radical flank pushing the Overton window.

The answer is not “hostility is bad/doesn’t work” or “hostility is good/works”. It depends on the context. It’s an inconvenient truth that hostility sometimes works, and works where nothing else does. I don’t think we should hold it off the table forever.

I also think we should reconceptualize what the AI companies are doing as hostile, aggressive, and reckless. EA is too much in a frame where the AI companies are just doing their legitimate jobs, and we are the ones that want this onerous favor of making sure their work doesn’t kill everyone on earth. If showing hostility works to convey the situation, then hostility could be merited.

Again, though, one amazing thing about not having explored outside game much in AI Safety is that we have the luxury of pushing the Overton window with even the most bland advocacy. I think we should advance that frontier slowly. And I really hope it’s not necessary to advance into hostility.

EDIT: Just to be absolutely clear-- the hard line that advocacy should not cross is violence. I am never using the word "hostility" to refer to violence.

Thanks, makes sense!

I agree that confrontational/hostile tactics have their place and can be effective (under certain circumstances they are even necessary). I also agree that there are several plausible positive radical flank effects. Overall, I'd still guess that, say, PETA's efforts are net negative—though it's definitely not clear to me and I'm by no means an expert on this topic. It would be great to have more research on this topic.[1]

Yeah, I'm sympathetic to such concerns. I sometimes worry about being biased against the more "dirty and tedious" work of trying to slow down AI or public AI safety advocacy. For example, the fact that it took us more than ten years to seriously consider the option of "slowing down AI" seems perhaps a bit puzzling. One possible explanation is that some of us have had a bias towards doing intellectually interesting AI alignment research rather than low-status, boring work on regulation and advocacy. To be clear, there were of course also many good reasons to not consider such options earlier (such as a complete lack of public support). (Also, AI alignment research (generally speaking) is great, of course!)

It still seems possible to me that one can convey strong messages like "(some) AI companies are doing something reckless and unreasonable" while being nice and considerate, similarly to how Martin Luther King very clearly condemned racism without being (overly) hostile.

Agreed. :)

For example, present participants with (hypothetical) i) confrontational and ii) considerate AI pause protest scenarios/messages and measure resulting changes in beliefs and attitudes. I think Rethink Priorities has already done some work in this vein.

I'd guess it's also that advocacy and regulation seemed just less marginally useful in most worlds with the suspected AI timelines of even 3 years ago?

Definitely!

Hmmm, your reply makes me more worried than before that you'll engage in actions that increase the overall adversarial tone in a way that seems counterproductive to me. :')

I'm not completely sure what you refer to with "legitimate jobs", but I generally have the impression that EAs working on AI risks have very mixed feelings about AI companies advancing cutting edge capabilities? Or sharing models openly? And I think reconceptualizing "the behavior of AI companies" (I would suggest trying to be more concrete in public, even here) as aggressive and hostile will itself be perceived as hostile, which you said you wouldn't do? I think that's definitely not "the most bland advocacy" anymore?

Also, the way you frame your pushback makes me worry that you'll lose patience with considerate advocacy way too quickly:

It would be convenient for me to say that hostility is counterproductive but I just don’t believe that’s always true. This issue is too important to fall back on platitudes or wishful thinking.

I don’t know what to say if my statements led you to that conclusion. I felt like I was saying the opposite. Are you just concerned that I think hostility can be an effective tactic at all?

David - you make some excellent points here. I agree that being agreeable vs. disagreeable might be largely orthogonal to playing the 'inside game' vs. the 'outside game'. (Except that highly disagreeable people trying to play the inside game might get ostracized from inside-game organizations, e.g. fired from OpenAI.)

From my evolutionary psychology perspective, if agreeableness always worked for influencing others, we'd have all evolved to be highly agreeable; if disagreeableness always worked, we'd all have evolved to be highly disagreeable. The basic fact that people differ in the Big Five trait of Agreeableness (we psychologists tend to capitalize well-established personality traits) suggests that, at the trait level, there are mixed costs and benefits for being at any point along the Agreeableness spectrum. And of course, at the situation level, there are also mixed costs and benefits for pursuing agreeable vs. disagreeable strategies in any particular social context.

So, I think there are valid roles for people to use a variety of persuasion and influence tactics when doing advocacy work, and playing the outside game. On X/Twitter for example, I tend to be pretty disagreeable when I'm arguing with the 'e/acc' folks who dismiss AI safety concerns - partly because they often use highly disagreeable rhetoric when criticizing 'AI Doomers' like me. But I tend to be more agreeable when trying to persuade people I consider more open-minded, rational, and well-informed.

I guess EAs can do some self-reflection about their own personality traits and preferred social interaction styles, and adopt advocacy tactics that are the best fit, given who they are.

Thanks, Geoffrey, great points.

I agree that people should adopt advocacy styles that fit them and that the best tactics depend on the situation. What (arguably) matters most is making good arguments and raising the epistemic quality of (online) discourse. This requires participation and if people want/need to use disagreeable rhetoric in order to do that, I don’t want to stop them!

Admittedly, it's hypocritical of me to champion kindness while staying on the sidelines and not participating in, say, Twitter discussions. (I appreciate your engagement there!) Reading and responding to countless poor and obnoxious arguments is already challenging enough, even without the additional constraint of always having to be nice and considerate.

Your point about the evolutionary advantages of different personality traits is interesting. However, (you obviously know this already) just because some trait or behavior used to increase inclusive fitness in the EEA doesn’t mean it increases global welfare today. One particularly relevant example may be dark tetrad traits which actually negatively correlate with Agreeableness (apologies for injecting my hobbyhorse into this discussion :) ).

Generally, it may be important to unpack different notions of being “disagreeable”. For example, this could mean, say, straw-manning or being (passive-)aggressive. These behaviors are often infuriating and detrimental to epistemics so I (usually) don’t like this type of disagreeableness. On the other hand, you could also characterize, say, Stefan Schubert as being “disagreeable”. Well, I’m a big fan of this type of “disagreeableness”! :)

David -- nice comment; thanks.

I agree that Dark Tetrad traits applied to modern social media are often counter-productive (e.g. anonymous trolls trolling).

And, you're right that there are constructive ways to be disagreeable, and toxic ways to be disagreeable -- just as there are toxic ways to be overly agreeable! (eg validating people's false claims or misguided reactions).

Great post! Some highlights [my emphasis in bold]:

This with the additional point that AI Pause should be a much easier sell than animal advocacy as it is each and every person's life on the line, including the people building AI. No standing up for marginalised groups, altruism or do-gooding of any kind is required to campaign for a Pause.

Yes! I think a lot of AI Governance work involving complicated regulation, and appeasing powerful pro-AI-industry actors and those who think the risk-reward balance is in favour of reward, loses sight of this.

It's definitely been refreshing to me to just come out and say the sensible thing. Bite the bullet of "if it's so dangerous, let's just not build it". And this post itself is a morale boost :)

Too true! I can't believe I forgot to mention this in the post!

Holly - this is an excellent and thought-provoking piece, and I agree with most of it. I hope more people in EA and AI Safety take it seriously.

I might just add one point of emphasis: changing public opinion isn't just useful for smoothing the way towards effective regulation, or pressuring AI companies to change their behavior at the corporate policy level, or raising money for AI safety work.

Changing public opinion can have a much more direct impact in putting social pressure on anybody involved in AI research, AI funding, AI management, and AI regulation. This was a key point in my 2023 EA Forum essay on moral stigmatization of AI, and the potential benefits of promoting a moral backlash against the AI industry. Given strong enough public opinion for an AI Pause, or against runaway AGI development, the public can put direct pressure on people involved in AI to take AI safety more seriously, e.g. by socially, sexually, financially, or professionally stigmatizing reckless AI developers.

Great post. I can't help but agree with the broad idea given that I'm just finishing up a book that has the main goal of raising awareness of AI safety to a broader audience. Non-technical, average citizens, policy makers, etc. Hopefully out in November.

I'm happy your post exists even if I have (minor?) differences on strategy. Currently, I believe the US Gov sees AI as a consumer item so they link it to innovation and economic good and important things. (Of course, given recent activity, there is some concern about the risks). As such, I'm advocating for safe innovation with firm rules/regs that enable that. If those bars can't be met, then we obviously shouldn't have unsafe innovation. I sincerely want good things from advanced AI, but not if it will likely harm everyone.

Some parts of the US Government are waking up to the extinction threat. By November - following the UK AI Safety Summit and Google's release of Gemini(?) - they might've fully woken up (we can hope).

I consider the consumer regulation route complementary to what I’m doing and I think a diversity of approaches is more robust, as well.

I didn’t know about your book! Happy to hear it :)

I think it's potentially misleading to talk about public opinion on AI exclusively in terms of US polling data, when we know the US is one of the most pessimistic countries in the world regarding AI, according to Ipsos polling. The figure below shows agreement with the statement "Products and services using artificial intelligence have more benefits than drawbacks", across different countries:

This is especially true given the relatively smaller fraction of the world population that the US and similarly pessimistic countries represent.

For what it's worth, if history is a reliable guide, I expect the United States to have some of the loosest regulations on AI. China, in particular, resisted industrialization for over one hundred years even after losing several wars due to their smaller industrial capacity. And their leadership is currently cracking down on their tech industry more fiercely than the US is cracking down on theirs. Longstanding norms and laws in the West have historically favored technological development more than other nations, and I think that datapoint is stronger evidence than evidence from public opinion polling.

I did point out in the first paragraph that I am American and focusing on US advocacy. The US is going to be a policy leader on this space so I don't think the population argument makes sense.

This question is also different than asking about potential future danger so I'm not sure how to take it. I would answer that today's products and services have more benefits than drawbacks.

Insightful stats! They also show

1) attitudes in Europe close to those in the US. My hunch is that in the EU there could be comparable or even more support for "Pause AI", because of the absence of top AI labs.

2) A correlation with factors such as GDP and freedom of speech. Not sure which effect dominates and what to make of it. But censorship in China surely won't help advocacy efforts.

So the stats make me more hopeful for advocacy impact also in EU & UK. But less so China, which is a relevant player (mixed recent messages on that with the chip advances & economic slowdown).

A very interesting and fresh (at least to my mind) take, thanks again! I also think "Pause AI" is a simple ask, hard to misinterpret. In contrast, "Align AI", "Regulate AI", Govern, Develop Responsibly and others don't have such advantages. Resonates with asks for a "ban" when campaigning for animals, as opposed to welfare improvements.

I do fear, however, that inappropriate execution can alienate supporters. Over the last several years when I told someone that I was advocating a fur farming ban, often the first reply was that they don't support "our" tactics, namely - spilling paint on fur coats and letting animals out of their cages, which is not something my organisation ever did. And that's from generally neutral or sympathetic acquaintances.

The common theme here is a Victim - either the one with a ruined fur coat, or the farmers. For AI the situation is better: the most salient Victims to my mind are a few megarich labs (assuming that the AI Pause applies to the most advanced models/capabilities). It would seem important to stress that products people already use will not be affected (to avoid loss aversion like with meat); and a limited effect on small businesses with open source solutions.

P.S. I am broadly aware of the potential of nonviolent action & that PETA is competent. But I do worry that the backlash can be sizeable and lasting enough to make the expected impact negative.

[ETA: I'm worried this comment is being misinterpreted. I'm not saying we should have no regulation. I'm challenging the point about where the burden of proof lies for showing whether a new technology is harmful.]

Can you speak a little more about why you think this is the "rightful place" of the burden of proof? When I think back to virtually every new technology in human history, I don't think the burden of proof was generally considered to be on the inventors to prove that their technology was safe before developing it.

In the vast majority of cases, the way we've dealt with technologies in the past is by allowing essentially laissez faire for inventors at first. Then, for many technologies, after they've been adopted by a substantial fraction of the population for a while, and we've empirically observed the dangers, we place controls on who can produce, sell, and use the technology. For example, we did that with DDT, PCBs, leaded gasoline, and asbestos.

There might be good reasons why we don't want to deal with AI this way, but I generally still think the burden of proof is on other people to show why AI is different than other technologies, rather than being on developers to prove that AI can or will be developed safely.

For particular classes of technologies, like food and medicine, our society thinks that it's too risky to allow companies to sell completely new goods without explicit approval. That's perhaps more in line with what you're proposing for AI. But it's worth noting that the FDA only started requiring proof of safety in 1938. Our current regime in which we require that companies prove that their products are safe before they are allowed to sell them is a distinctly modern and recent phenomenon, rather than some universal norm in human societies.

Moreover, I can't think of a single example in which we've waited for democratic approval for new technologies. My guess is that, if we had done that in the past, then many essential modern day technologies would have never been developed. In a survey covering 20 countries around the world, in almost every single nation, there are more people who say that GM foods are "unsafe" than people who say that GM foods are "safe":

This is despite the fact that GM foods have been studied for decades now, with a strong scientific consensus about their safety. On matters of safety, the general public is typically uninformed and frequently misinformed. I think it is correct to rely far more on credible assessments of safety from experts than public opinion.

Just as a partial reply, it seems weird to me to claim that the groups both best able to demonstrate safety and most technically capable of doing so - the groups making the systems - should get a free pass to tell other people to prove what they are doing is unsafe. That's a really bad incentive.

And I think basically everywhere in the western world, for the past half century or so, we require manufacturers and designers to ensure their products are safe, implicitly or explicitly. Houses, bridges, consumer electronics, and children's toys all get certified for safety. Hell, we even license engineers in most countries and make it illegal for non-licensed engineers to do things like certify building safety. That isn't a democratic control, but it's clearly putting the burden of proof on the makers, not those claiming it might be unsafe.

Sure, there are regulations on manufacturing products. But these regulations are generally based on decades of experience with the technologies, and were only put in place after people started to see harm. They weren't conceived a priori before the technologies had any sizable impact.

...and this risk isn't predictable on priors?

(But if we had decades of experience with computer-based systems not reliably doing exactly what we wanted, you'd admit that this degree of caution on systems we expect to be powerful would be reasonable?)

That's not how modern risk assessment works. Risk registers and mitigation planning are based on proactively identifying risk. To the extent that this doesn't occur before something is built and/or deployed, at the very least, it's a failure of the engineering process. (It also seems somewhat perverse to argue that we need to protect innovation in a specific domain by sticking to the way regulation happened long in the past.)

And in the cases where engineering and scientific analysis has identified risks in advance, but no regulatory system is in place, the legal system has been clear that there is liability on the part of the producers. And given those widely acknowledged dangers, it seems clear that if model developers ignore a known or obvious risk, they are criminally liable for negligence. This isn't the same as restricting by-default-unsafe technologies like drugs and buildings, but at the very least, I think you should agree that one needs to make an argument for why ML models should be treated differently than other technologies with widely acknowledged dangers.

The frame of “burden of proof is on you to show AI is different from other technologies” is bizarre to me.

It’s a bit like if we’re talking about transporting livestock and someone is like “prove transporting dragons is different from other livestock”. They’re massive, can fly, can breathe fire and in many stories are very intelligent.

Where’s this assumption of sameness coming from? And how do you miss all the differences?

Similarly, I don’t know how you can look at AI and think “just another technology”.

AI can invent other technologies, provide strategic advice, act autonomously, self-replicate, etc. It feels like the default should very much be that it needs its own analysis.

I call this the technology bucket error

Those facts provide a reasonable basis for why we should treat dragons differently than livestock when transporting them. I don't think that is really a shifting of the burden of proof, but rather an argument that dragons have met the burden of proof. Do we see that with AI yet? Perhaps. But I think so far most of the arguments for AI risks have been abstract and rely heavily upon theoretical evidence rather than concrete foreseeable harms. I think this type of argument is notoriously unreliable.

I'm also not saying "AI is the same". I'm saying "We shouldn't just assume AI is different a priori".

I agree that AI will eventually be able to do those things, and so we should probably regulate it pretty heavily eventually. But a "pause" would probably include stopping a bunch of harmless AI products too. For example, a lot of people want to stop GPT-5. I'm skeptical, as a practical matter, that OpenAI should have to prove to us that GPT-5 will be safe before releasing it. I think we should probably instead wait until the concrete harms from AI become clearer before controlling it heavily.

My point regarding burden of proof is that something has gone wrong if you think dragons are in the same reference class as pigs, cows, even lions in terms of transportation challenges. And the fact that someone needs to ask for an explicit list is indicative of a mistake somewhere.

I’m not saying that you can’t argue that they are the same. Just that a more reasonable framing would then be more along the lines of, “here’s my surprising conclusion that we can regulate it the same way”.

Almost no past technology has a claim to be a non-Pascalian existential risk to humanity; especially one that's known in advance.

The only exceptions I could think of are nukes, certain forms of bioweapons research, and maybe CFCs in refrigeration.

That appears to assume the conclusion ("AI is dangerous") to explain why the burden of proof is on the inventors to prove AI is safe. But I'm asking about where the burden of proof should lie prior to us already having the answer! If we had used this burden for every prior technology, it's likely that a giant amount of innovation would have been stifled.

Now, you could alternatively think that the burden of proof is on other people to show that a new technology is dangerous, and this burden has already been met for AI. But I think that's a different claim. I was responding to the idea that the burden of proof is on AI developers to prove that their product is safe.

We might be talking past each other. I think the burden of proof that AI-in-principle could be dangerous is on non-inventors, and that has already been met[1]. I think the burden of proof that specific-AI-tech-in-practice is safe should then be on AI manufacturers.

Similarly, if we know no details about nuclear power plants or nuclear weapons, the burden of proof about how scary an abstract "power plant" or "taking some ore from the ground and refining it" should be on concerned people. But after the theoretical case for nuclear scariness is demonstrated, we shouldn't have had to wait until Hiroshima or Nagasaki, or even the Trinity Test, before the burden of proof falls on nuclear weapons manufacturers/states to demonstrate that their potential for accident is low.

As demonstrated by eg, surveys.

I think that makes sense. But I also think that the idea of asking for a "pause" looks a lot more like asking AI developers to prove that AI in the abstract can be safe, whereas an "FDA for new AIs" looks more like asking developers to prove their specific implementations are safe. The distinction between the two ideas here is blurry though, admittedly.

But insofar as this distinction makes sense, I think it should likely push us against a generic pause, and in favor of specific targeted regulations.

Nuclear chain reactions leading to massive explosions are dangerous. We don't have separate prohibition treaties on each specific model of nuke.

Impenetrable multi-trillion-parameter neural networks are dangerous. I think it does make sense for AI developers to prove that AI (as per the current foundation model neural network paradigm) in the abstract can be safe.

I think this is distinctly different from what you claim. In any actual system implementing a pause, the model developer is free "to prove their specific implementations are safe," and go ahead. The question is what the default is - and you've implied elsewhere in this thread that you think that developers should be treated like pre-1938 drug manufacturers, with no rules.

If what you're proposing is instead that there needs to be a regulatory body like the FDA to administer the rules and review cases when a company claims to have sufficient evidence of safety when planning to create a model, with a default rule that it's illegal to develop the model until reviewed, instead of a ban with a review for exceptions when a company claims to have sufficient evidence of safety when planning to create a model, I think the gap between our positions is less blurry than it is primarily semantic.

I think you misread me. I've said across multiple comments that I favor targeted regulations that are based on foreseeable harms after we've gotten more acquainted with the technology. I don't think that's very similar to an indefinite pause, and it certainly isn't the same as "no rules".

That makes sense - I was confused, since you said different things, and some of them were subjunctive, and some were speaking about why you disagree with proposed analogies.

Given your perspective, is loss-of-control from more capable and larger models not a foreseeable harm? If we see a single example of this, and we manage to shut it down, would you then be in favor of a regulate-before-training approach?

Excellent post!

I agree! And this might be a hot take (especially for those who are already deep into AI issues), but I also see the need, first and foremost, to advocate for AI within our EA community.

People interacting on this forum do not, IMO, give a fully representative picture of EAs and tend to be very focused on AI while the broader EA community didn't enter EA for 'longtermist' (as much as I hate using this label that could apply to so many causes labelled as neartermists) purposes/did not make the change between what they think is highly impactful and the recent turning point from CEA to focus a large amount of EA resources on longtermism.

People who have been making career switches and reading about global aid/animal welfare who suddenly find out that more than 50 percent of the talks at EA globals and resources are dedicated to AI rather than other causes, are lost. As a community builder, I am in a weird position where I have to explain why and convince many in my local community that EA's focus is changing (focus coming from the top, the top being closely related to funding decisions etc, not saying these are the same people and it's obv more complex than that but the change towards longtermism and focus on AI is indisputable) for the better.

This results in many EAs feeling highly skeptical about the new focus. It is good that 80k is making simple videos to explain the risks associated with EA, but I still feel that community epistemics are poor when it comes to justifying this change, despite 80k's very clear website pages about AI safety. The content is there; outreach, not so much.

And my resulting feeling (because it's very hard to have actual numbers to gauge the truth) is that on one side we have AI aficionados, ready to switch careers and already deeply knowledgeable about these topics (usually with the convenient background in STEM, machine learning etc), the same ones that do comment a lot on the forum, and on the other side the rest of the EA community, which doesn't feel much sense of belonging towards the EA community lately. I was planning to write a post about that but I still need to clarify my thoughts and sharpen my arguments, as you can see from how poorly structured my comment is.

So I guess that my take is: before (or at the same time, but it seems more strategic for me to do this before in terms of allocating resources) advocating for AI safety outside of the community, let's do it inside the community.

Footnote: I know about the Rethink Priorities survey that indicates that 70 percent of EAs do consider AI safety as the most impactful thing to work on (I might remember it badly though, not confident at all), but I have my reservations on how representative the survey actually is.

I don't understand why doing outreach to EAs specifically to convince them of this would be an effective focus. It seems none of important, tractable, or neglected. The people in EA who you're talking about, I think, are a small group compared to the general population, they aren't in high-leverage positions to change things relevant to AI, and they are already aware of the topic and have not bought the arguments.

Because you start from the premise that the majority of the EA community is already convinced and into AI, which I don't think is true at all; the last post about this, showing diagrams of EAs in the community, was based purely on intuition and nothing else.

EAs are highly-educated and wealthy people for the vast majority, and their skills are definitely needed in AI. Someone in EA will be much more easily brought onto a job in AI compared to someone who has a vague understanding of it OR doesn't have the skills. So yes I do think they are in high-leverage positions since they already occupy good jobs.

As for the arguments, try going against the grain and expressing doubts about how fast AI took over the EA community, how the funding is now distributed, and how it feels to see the vast majority of EA Forum posts dedicated to AI. Many of the EAs who think this way are not on the forum and prefer to stand aside since they don't feel like they belong. I don't want to lose these people. And the fact that I am downvoted to hell every time I dare to say these things is basic evidence of this. Everyone who disagrees with me, please explain why instead of just downvoting. Downvoting alone just reinforces the sense that 'this is not an opinion we condone', without any explanation.

I don't assume that they are convinced, I think that they are aware of the issues. They are also a tiny group compared to the general population - so I think you need a far stronger reason to focus on such a small group instead of the public than what has been suggested.

And I think you're misconstruing my position about EA versus AI safety - I strongly agree that they should be separate, as I've said elsewhere.

Yeah, I get your point, and factually, sure, it is a small group. I still think that advocating for AI within EA would be useful for community cohesion, and that finding qualified members to work in AI is easier within the community than among the public, given the profile of EAs.

As for being aware of the issues, that is where we disagree. I don't think AI has been brought into the community in a careful, thoughtful way, with good epistemics. AI very quickly became a thing for specialists and something taken as a given, to the detriment of other EAs who have a hard time adjusting. Ignoring this will not lead to good things, and the problem should not be underestimated.

Do you mean "risks associated with AI"?

Yes my bad!

I find your focus on the outside game strange. Given the public's already existing support for going slowly and deliberately, there seems to be a decent case that instead of trying to build public support, we should directly target the policymakers. It's not clear what extra public support buys us here. In fact, I suspect it might be far more valuable to lobby the industry to try to reduce the amount of opposition such laws might receive.

This is a worrying figure to me. If we slow down licensing too much, we almost guarantee that the first super-intelligence is not going to be developed by anyone going through the proper process. Not to mention all of the hours wasted on bureaucratic requirements, rather than actually building an aligned system.

I think "public support" is ambiguous, and by some definitions, it isn't there yet.

One definition is something like "Does the public care about this when they are asked directly?" and this type of support definitely exists, per data like the YouGov poll showing majority support for an AI pause.

But there are also polls showing that almost half of U.S. adults "support a ban on factory farming." I think the correct takeaway from those polls is that there's a gap between vaguely agreeing with an idea when asked vs. actually supporting specific, meaningful policies in a proactive way.

So I think the definition of "public support" that could help the safety situation, and which is missing right now, is something like "How does this issue rank when the public is asked what causes will inform their voting decisions in the next election cycle?"

I broadly agree with the conclusion as stated. But I think there are at least a couple of important asymmetries between the factory farming question and the AI question, which mean that we shouldn't expect there to be a gap of a similar magnitude between stated public support and actual public support regarding AI.

I think both of these factors conduce to a larger gap between stated attitudes and actual support in the animal farming case. That said, I think this is an ameliorable problem: in our replications of the SI animal farming results, we found substantially lower support (close to 15%).

So, I think the conclusion to draw is that polling certain questions can find misleadingly high support for different issues (even if you ask a well-known survey panel to run the questions), but not that very high support found in surveys just generally doesn't mean anything. [Not that you said this, but I wanted to explain why I don't think it is the case anyway]

Agree, and I want to add that you need to keep up awareness to keep what support we do have from slipping. Even if and when we have legislative victories, there's going to be opposition from industry for the foreseeable future, so there's going to be a role for AI Safety advocacy.

Isn't targeting policymakers still outside game? (If inside game is the big AI companies.)

The licensing would have to come with sufficient enforcement of compute limits that this isn't possible, and any sensible licensing would involve this. How many mega-environment-altering infrastructure projects are built without proper licenses? Sure, they may be rubber-stamped by corrupt officials, but that's another matter.

I don’t really know those terms very well. Would love clarification from someone.

My understanding (non-expert) is that the inside game is whatever uses the system as is. Outside is things that try to break the system or put pressure in ways that the system generally does not legibly take as inputs. So, talking to existing officials to use existing ways of regulation is maximum inside game. Throwing a coup and enacting dictatorial powers in order to regulate is maximum outside game. Lobbying is more inside, and protesting is more outside. So when we say "target policymakers", the question is how? Are you sending polite emails with reasoned arguments, or are you throwing buckets of computer chips at their car as they drive by? (I do not endorse doing this, and I say this for comedic effect :D )

Chloe Cockburn, who used to lead Open Phil's criminal justice reform work, gives a useful definition here:

'Mass mobilization and structure organizing make up the “outside game.” Those making change by working within government, or other elite or dominant structures, are part of the inside game.'

Using that definition, a coup feels very inside game. But I agree with your general characterisation, Dušan.

I also think it's worth pointing out that the outside game is not just protesting. In the quote, Chloe refers to structure organising and mobilisation.

Here's a contrast between the two:

Structure Organising:

Mobilisation:

In essence, while structure organising focuses on building long-term power and capacity, mobilisation is about rallying people for immediate action. Both approaches have their strengths and can be complementary. For example, a well-organised group with a clear structure can mobilise its members more effectively when the need arises.

I've written more about the difference between structure organising and mobilisation here.

These are not exclusive to each other, but complementary. Calling your local senator is only made stronger if the same senator sees protests on the streets calling for the same thing you are calling for.

The regulations on guns/nuclear weapons/bioweapons mean that most public uses are by people not going through the proper process. Still worth regulating them!