abrahamrowe

Bio

Principal — Good Structures

I previously co-founded and served as Executive Director at Wild Animal Initiative, and was the COO of Rethink Priorities from 2020 to 2024.

Posts 24

Comments 233

Topic contributions 1

I think that the animal welfare space is especially opaque for strategic reasons. For example, most of the publicly available descriptions of corporate animal welfare strategy are, in my opinion, not particularly accurate. I think most of the actual strategy becoming public would make it significantly less effective. I don't think it is kept secret with much deliberate intent; it is more that there is a shared understanding among many of the best campaigners not to share exactly how they work outside a circle of collaborators, so that strategies don't lose effectiveness.

I think outside organizations' ability to evaluate the effectiveness of individual corporate campaigning organizations (including ACE unfortunately) is really low due to this (I think that evaluating ecosystems of organizations / the intervention as a whole is easier though).

(I don't really want to engage much on this because I found it pretty emotionally draining last time — I'll just leave this comment and stop here):

I think asking for feedback prior to publishing these seems really important. To be clear, I'm very sympathetic to the overall claim! I suspect that most published estimates of the impact of marginal dollars in the farmed animal space are way too high (maybe even by orders of magnitude).

I also think the items you raise are important questions for Sinergia to answer!

But, I think getting feedback would be really helpful for you:

- You cite multiple places where Sinergia claims impact, but you cite evidence to the contrary, such as company statements on their sites either existing prior to Sinergia's claimed date, or not existing at all.

- The near-universal experience of corporate campaigners is that you should not take company statements about their animal welfare commitments very seriously. It's just a regular fact of corporate campaigning in many countries that companies have statements on their websites that they either don't follow or don't intend to follow. Often, companies have weaselly language that lets them get out of a commitment, e.g. "we aspire to do X by Y year," etc.

- The language in company statements matters a ton. When I ran corporate campaigns, a major US restaurant company I was campaigning on put up a verbatim version of the Better Chicken Commitment with all of the specifics removed (e.g. "reduce stocking density" instead of "reduce stocking density to X lbs/sqft") and did nothing in their supply chain. This allowed them to tell journalists / others that we were just lying that they had not made the commitment. I worry that Google Translating pages loses nuance that might matter here. Google Translating Brazilian law also seems like a huge stretch as evidence, and taking the law at face value when you are not a Brazilian lawyer (without evidence about how the law is interpreted and enforced) seems like a mistake.

- Getting a commitment on the website is important, but it's only a portion of the work. Actually getting the company to make the change is way more work.

- I know nothing about the JBS case, but can tell you that there are many times where a company has a commitment, then behind the scenes the actual work is several years of getting them to honor it. It seems completely plausible, and even routine, that the actual impact credit should go to an organization working to get a company to act on an existing policy, as opposed to getting them to put up the policy in the first place. I don't know anything about Sinergia's claims here, but it seems totally plausible that much of their impact comes from this invisible work that you wouldn't learn about without asking them.

I suspect that in your critique, some of your claims are warranted, but others might have much more complicated stories behind them, such as an organization getting a company to actually follow through on a commitment, or getting a law to be enforced. I think that feedback would help draw out where these critiques are accurate, and where they are missing the mark.

Equal Hands — 2 Month Update

Equal Hands is an experiment in democratizing effective giving. Donors simulate pooling their resources together, and voting how to distribute them across cause areas. All votes count equally, independent of someone's ability to give.

You can learn more about it here, and sign up to learn more or join here. If you sign up before December 16th, you can participate in our current round. As of December 7th, 2024 at 11:00pm Eastern time, 12 donors have pledged $2,915, meaning the marginal $25 donor will move ~$226 in expectation to their preferred cause areas.
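The ~$226 figure above can be reproduced with a quick back-of-the-envelope calculation. This is only a sketch under a simplifying assumption: that the pooled total is split evenly across voters, so each vote directs an equal share of the pool. The function name is made up for illustration, and the real allocation mechanics may differ.

```python
def expected_moved_per_vote(pledged_total: float, donor_count: int, new_gift: float) -> float:
    """Equal share of the pool directed by one vote after a new donor joins.

    Assumption (not confirmed by the post): the pool is divided evenly
    across all voters, including the new one.
    """
    new_total = pledged_total + new_gift
    new_donor_count = donor_count + 1
    return new_total / new_donor_count


# Figures from the post: 12 donors, $2,915 pledged, a marginal $25 donor.
moved = expected_moved_per_vote(2915.00, 12, 25.00)
print(f"${moved:.2f}")  # $226.15
```

Under that assumption, a 13th donor giving $25 brings the pool to $2,940, and 2,940 / 13 is about $226 per vote.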

In Equal Hands’ first 2 months, 22 donors participated and collectively gave $7,495.01 democratically to impactful charities. Including pledges for its third month, those numbers will likely increase to at least 24 donors and $10,410.01.

Across the first two months, the gifts made by cause area, and the pseudo-counterfactual effect (i.e. the change relative to what would have happened if people had given their own money in line with their voting, rather than following the democratic outcome), have been:

- Animal welfare: $3,133.35, a decrease of $1,662.15

- Global health: $1,694.85, a decrease of $54.15

- Global catastrophic risks: $2,093.91, an increase of $1,520.16

- EA community building: $319.38, an increase of $179.63

- Climate change: $253.52, an increase of $16.52
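As a sanity check on the list above, here is a small sketch that sums the gifts and backs out what each cause area would have received pseudo-counterfactually. The dictionary and variable names are my own. The only assumption is that "a decrease of $X" means the actual gift is $X lower than the pseudo-counterfactual amount.

```python
from decimal import Decimal

# (actual gift, change versus pseudo-counterfactual), taken from the list above.
results = {
    "Animal welfare": (Decimal("3133.35"), Decimal("-1662.15")),
    "Global health": (Decimal("1694.85"), Decimal("-54.15")),
    "Global catastrophic risks": (Decimal("2093.91"), Decimal("1520.16")),
    "EA community building": (Decimal("319.38"), Decimal("179.63")),
    "Climate change": (Decimal("253.52"), Decimal("16.52")),
}

# Total actually given across the two months.
total_given = sum(gift for gift, _ in results.values())

# Net of all shifts; reallocation should be roughly zero-sum.
net_shift = sum(change for _, change in results.values())

# What each cause would have received without the democratic outcome.
counterfactual = {cause: gift - change for cause, (gift, change) in results.items()}

print(total_given)  # 7495.01
print(net_shift)    # 0.01
for cause, amount in counterfactual.items():
    print(f"{cause}: {amount}")
```

The gifts sum to the $7,495.01 reported above, and the shifts net out to one cent, which is presumably just rounding.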

Interestingly, the primary impact has been money being reallocated from animal welfare to global catastrophic risks. From the very little data that we have, this primarily appears to be because animal welfare-motivated donors are much more likely to pledge large amounts to democratic giving, while GCR-motivated donors are more likely to sign up (or are a larger population in general), but are more likely to give smaller amounts.

- I’m not sure why exactly this is! The motivation should be the same regardless of cause area for small donors: in expectation, the average vote has moved over $200 to each donor’s preferred causes in both of the first two months, so I would expect it to be motivating for donors from various backgrounds. But maybe GCR-motivated donors are more likely to reason in these terms.

- GCR donors haven’t had as high retention over the first three months of signups, so the third month currently looks like it might be a bit different: funding is primarily flowing out of animal welfare and going to a mix of global health and GCRs.

The total administrative time for me to operate Equal Hands has been around 45 minutes per month. I think it will remain below 1 hour per month with up to 100 donors, which is somewhat below what I expected when I started this project.

We’d love to see more people join! I think this project works best by having a larger number of donors, especially people interested in giving above the minimum of $25. If you want to learn more or sign up, you can do so here!

Nice! And yeah, I shouldn't have said downstream. I mean something like, (almost) every intervention has wild animal welfare considerations (because many things end up impacting wild animals), so if you buy that wild animal welfare matters, the complexity of solving WAW problems isn't just a problem for WAI — it's a problem for everyone.

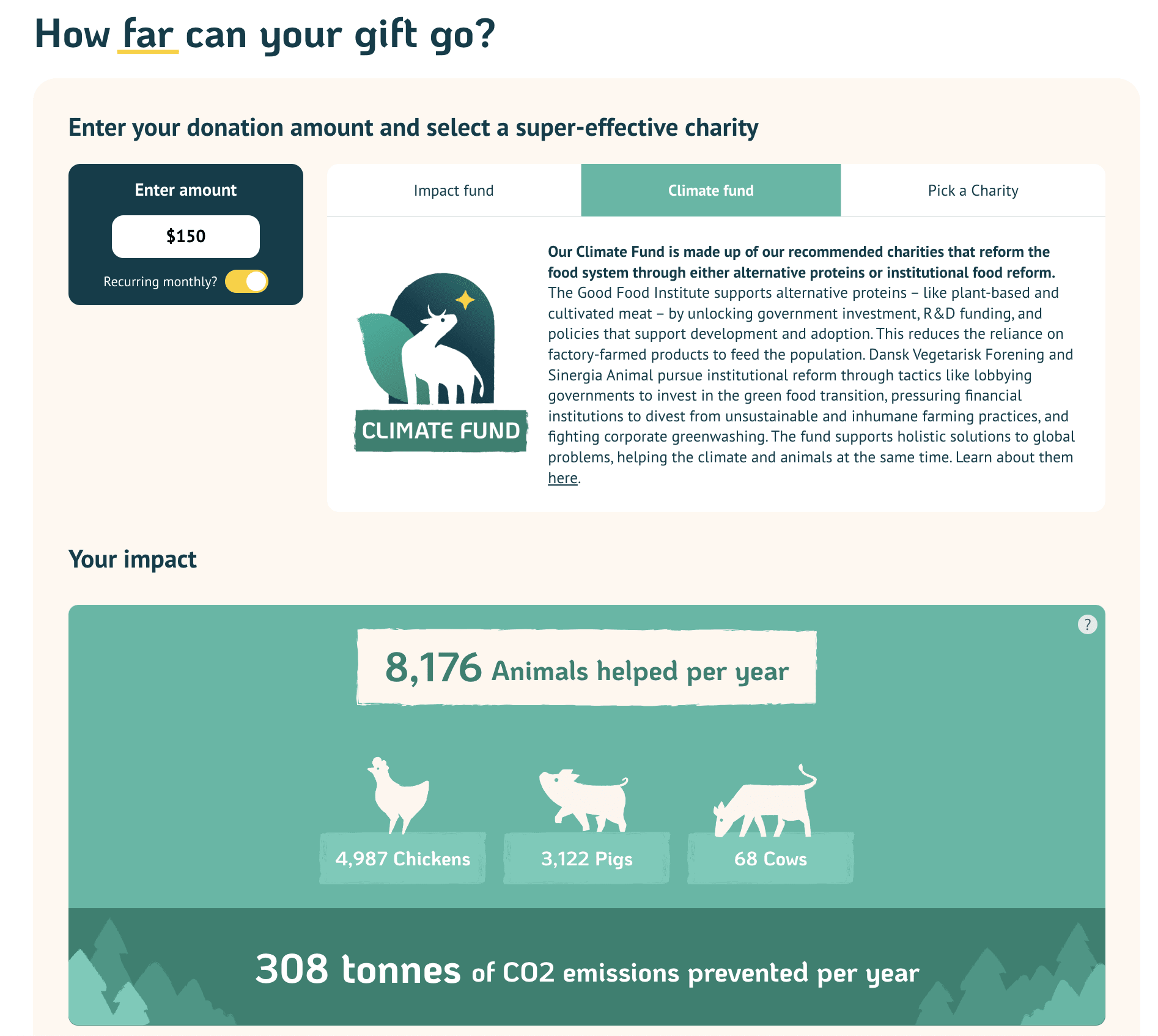

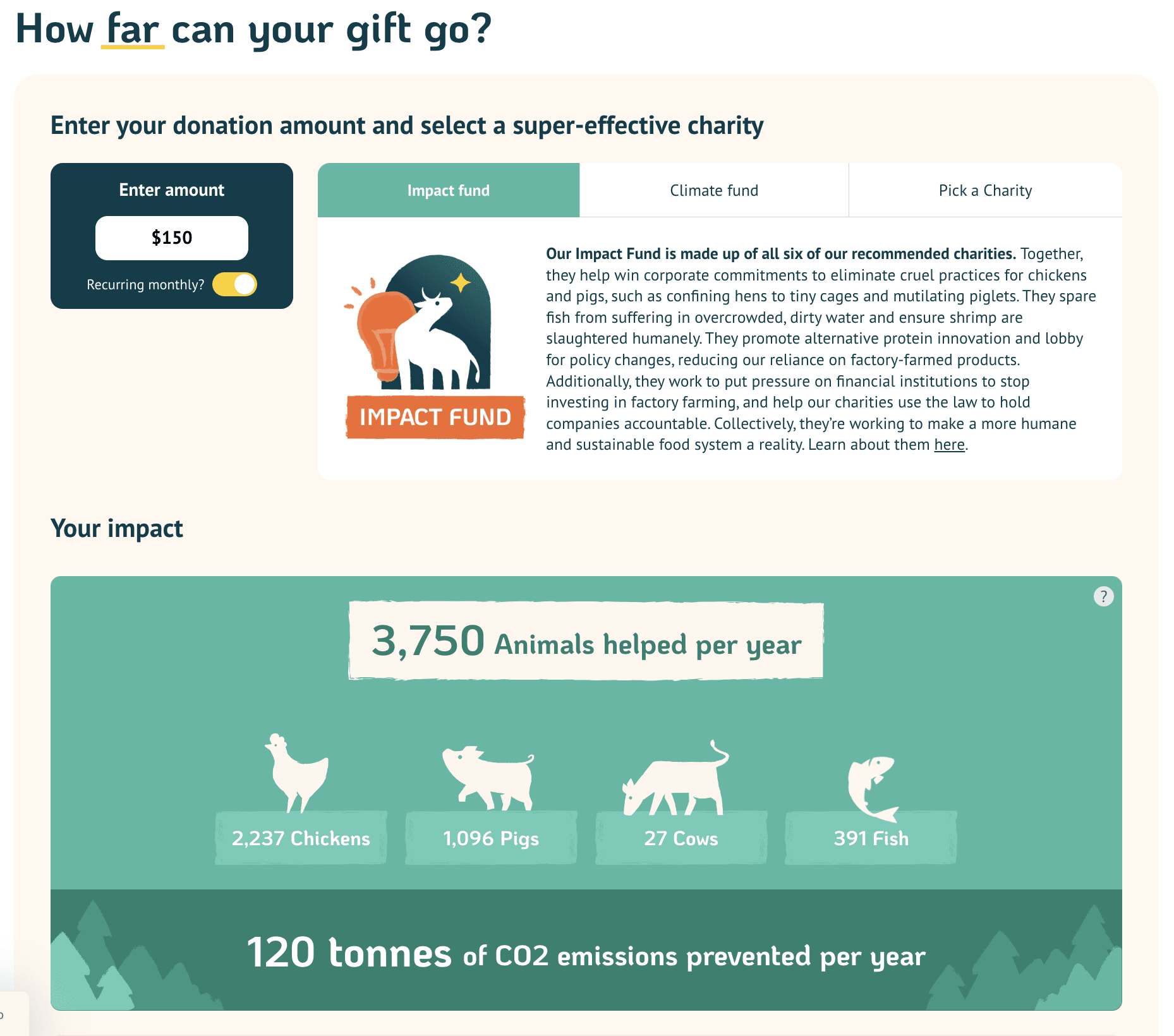

Minor question - I noticed that the website states for the climate fund that the same donation will help a lot more animals than the impact fund (over 2x as many - and mostly driven by chickens and pigs). I know the numbers are likely low confidence, but just curious how you're thinking about those, as to me it was unintuitive to have one labelled "impact fund" that straightforwardly looks worse on animal impacts than the climate fund (and also worse on the climate side!). I didn't quite understand why this was happening from looking at the calculations page (though from the charities in each, I definitely have the sense that the impact fund is better for animals!)

I voted for Wild Animal Initiative, followed by Shrimp Welfare Project and Arthropoda Foundation (I have COIs with WAI and Arthropoda).

- None of the three can currently be funded by OpenPhil/GVF, despite WAI/SWP having been heavily funded by them previously.

- I think that wild animal welfare is the single most important animal welfare issue, and it remains incredibly neglected, with just WAI working on it exclusively.

- Despite this challenge, WAI seems to have made a ton of progress on building the scientific knowledge needed to actually make progress on these issues.

- Since founding and leaving WAI, I've just become increasingly optimistic about there being a not-too-long-term pathway to robust interventions to help wild animals, and to wild animal welfare going moderately mainstream within conservation biology/ecology.

- Wild animal welfare is downstream from ~every other cause area. If you think it is a problem, but that we can't do anything about it because the issue is so complicated, then the same is true of the wild animal welfare impacts of basically all other interventions EAs pursue. This seems like a huge issue for knowing the impact of our work. No one is working on this except WAI, and no other issues seem to cut across all causes the way wild animal welfare does.

- SWP seems like they are implementing the most cost-effective animal welfare intervention that is remotely scalable right now.

- In general, I favor funding research, because historically OpenPhil has been far more likely to fund research than other funders, and it is pretty hard for research-focused organizations to compete with intervention-focused organizations in the animal funding scene, despite lots of interventions being downstream from research. Since Arthropoda also does scientific field building / research funding, I added it to my list.

That's too bad! I'll give this feedback to Every.org as they are a moderately aligned nonprofit themselves, and are really receptive to feedback in my experience. FWIW, using them saves organizations a pretty massive amount of bureaucracy / paperwork / compliance-y stuff, so I hope there is a way to use them that can be beneficial for the donors.