(Written in a personal capacity, and not representing either my current employer or former one)

In 2016, I founded Utility Farm, and later merged it with Wild-Animal Suffering Research (founded by Persis Eskander) to form Wild Animal Initiative. Wild Animal Initiative is, by my estimation, a highly successful research organization. The current Wild Animal Initiative staff deserve all the credit for where they have taken the organization, but I’m incredibly proud that I got to be involved early in the establishment of a new field of study, wild animal welfare science, and to see the tiny organization I started in an apartment with a few hundred dollars go on to be recommended by ACE as a Top Charity for 4 years in a row. In my opinion, Wild Animal Initiative has become, under the stewardship of more capable people than I, the single best bet for unlocking interventions that could tackle the vast majority of animal suffering.

Unlike most EA charities today, Utility Farm didn’t launch with a big grant from Open Philanthropy, Survival and Flourish Foundation, or EA Funds. There was no bet made by a single donor on a promising idea. I launched Utility Farm with my own money, which I spent directly on the project. I was making around $35,000 a year at the time working at a nonprofit, and spending maybe $300 a month on the project. Then one day, a donor completely changed the trajectory of the organization by giving us around $500. It’s weird looking at that event through the lens of current EA funding levels — it was a tiny bet, but it took the organization from being a side project that was cash-strapped and completely reliant on my energy and time to an organization that could actually purchase some supplies or hire a contractor for a project.

From there, a few more donors gave us a few thousand dollars each. These funds weren’t enough to hire staff or do anything substantial, but they provided a lifeline for the organization, allowing us to run our first real research projects and to publish our work online.

In 2018, we ran our first major fundraiser. We received several donations of a few thousand dollars, and (if I recall correctly) one gift of $20,000. Soon after, EA Funds granted us $40,000. We could then hire staff for the first time, and make tangible progress toward our mission.

As small as these funds were in the scheme of things, for Utility Farm, they felt sustainable. We didn’t have one donor — we had a solid base of maybe 50 supporters, and no single individual dominated our funding. Our largest donor changing their mind about our work would have been a major disappointment, but not a financial catastrophe. Fundraising was still fairly easy — we weren’t trying to convince thousands of people to give $25. Instead, fundraising consisted of checking in with a few dozen people, sending some emails, and holding some calls. Most of the "fundraising" was the organization doing impactful work, not endless donor engagement.

I now work at a much larger EA organization with around 100x the revenue and 30x the staff. Oddly, we don’t have that many more donors than Utility Farm did back then: maybe around 2-4 times as many small donors, and about the same number giving more than $1,000. This probably varies between organizations (I have a feeling that many organizations doing more direct work than Rethink Priorities have many more donors), but most EA organizations seem to have strikingly few mid-sized donors (e.g., individuals who give maybe $1,000 - $25,000). Often, organizations will have a large cohort of small donors, giving maybe $25-$100, and then they’ll have 2-3 (or even just 1) giant donors, collectively giving 95%+ of the organization's budget across a handful of grants. Although revenue at these organizations has reached a massive scale compared to Utility Farm’s budget, in some senses it feels like they are in a far more tenuous position. For these top-heavy organizations, one donor changing their mind can make or break the business. Even when that “one person” is an incredibly well-informed grantmaker, who is making a difficult call about what work is most important, there is a precariousness to the entire situation that I didn’t feel while working at a theoretically much less well-resourced group. Right now, despite neither directly conducting fundraising nor working at an organization that has had major fundraising issues, an unfortunately large amount of my time is taken up thinking about fundraising instead of our work, because of this precarity.

I find considering donors giving between $1,000 and $25,000 a year particularly interesting, because that seems like a rough proxy for “average people earning to give.” While we (and especially me) might not all be cut out for professions that facilitate making gifts of hundreds of thousands of dollars, many people in the EA community could become software engineers, doctors, lawyers, or other middle-class professionals, who might easily give in the “mid-size donor” range. In fact, employees of many EA organizations could probably give in that range. But most people who I meet in the space aren’t earning to give, or aren’t giving a substantial portion of their income. They are doing direct work or community building, or trying to get into direct work or community building.

I think this is a huge loss for EA. My sense is that earning to give is slowly becoming a less central part of EA, and with it, I think there are huge costs:

- A robust earning to give ecosystem is better for EA organizations.

  - For EA organizations, having one donor is a lot worse than having a few dozen or hundred, all things being equal. This is for reasons of both donor influence and administrative/fundraising burden of engagement.

- A robust earning to give ecosystem is better for the EA community.

  - Power in the EA community has become increasingly concentrated among a few individuals or grant-making bodies. Concentration of power increases the risk that a bad call by a single individual can harm the community as a whole. More people earning to give decentralizes this power to a certain extent, decreasing this risk.

  - One particular kind of risky decision centralized power brokers can make is giving based only on their own values, and not considering the views of the community as a whole. The more people are earning to give, the more likely it is that donation distributions represent the values of the community at large, and given the disagreements and uncertainties across cause areas, might be a better way to allocate funds.

  - In particular, the combination of (a) and (b) allows charitable organizations to worry less about what specific people in power think, and respond more to what the community at large thinks, and to rely more on their own on-the-ground judgment of the best way to do their work. This seems to be a really good outcome for charity efficiency, quality of work, and community cohesion as a whole.

Mid-sized earning to give donors might never match the giving potential of institutional donors in the EA space, but it seems possible to reach a world where 5,000 more people give $20,000 annually, generating an additional $100M for effective charity while decentralizing power and building a healthier funding ecosystem for organizations. Millions have been spent on EA community building over the last few years, but little of that seems to focus on pushing people to earn to give. This seems to be a huge missed opportunity.

The past emphasis on earning to give wasn’t perfect — it turns off some people, is easily misunderstood, and can come across as valuing community members in proportion to their incomes. I personally felt an internal change when I took the Giving Pledge in terms of how much I worried about money: I felt like I started to measure some of my self-worth in the community in terms of how much money I made, because it directly translated to impact. I have some thoughts on how EA could return to valuing earning to give while avoiding some of its past mistakes, but I don’t pretend to have all the answers. It would be great to hear from others whether they feel similarly that earning to give has fallen out of fashion in EA, and what impacts (good and bad) this has had.

The above gives a pretty good overview of my position; the below sections give a bit more nuance for those who are interested.

A robust earning to give ecosystem is better for charities

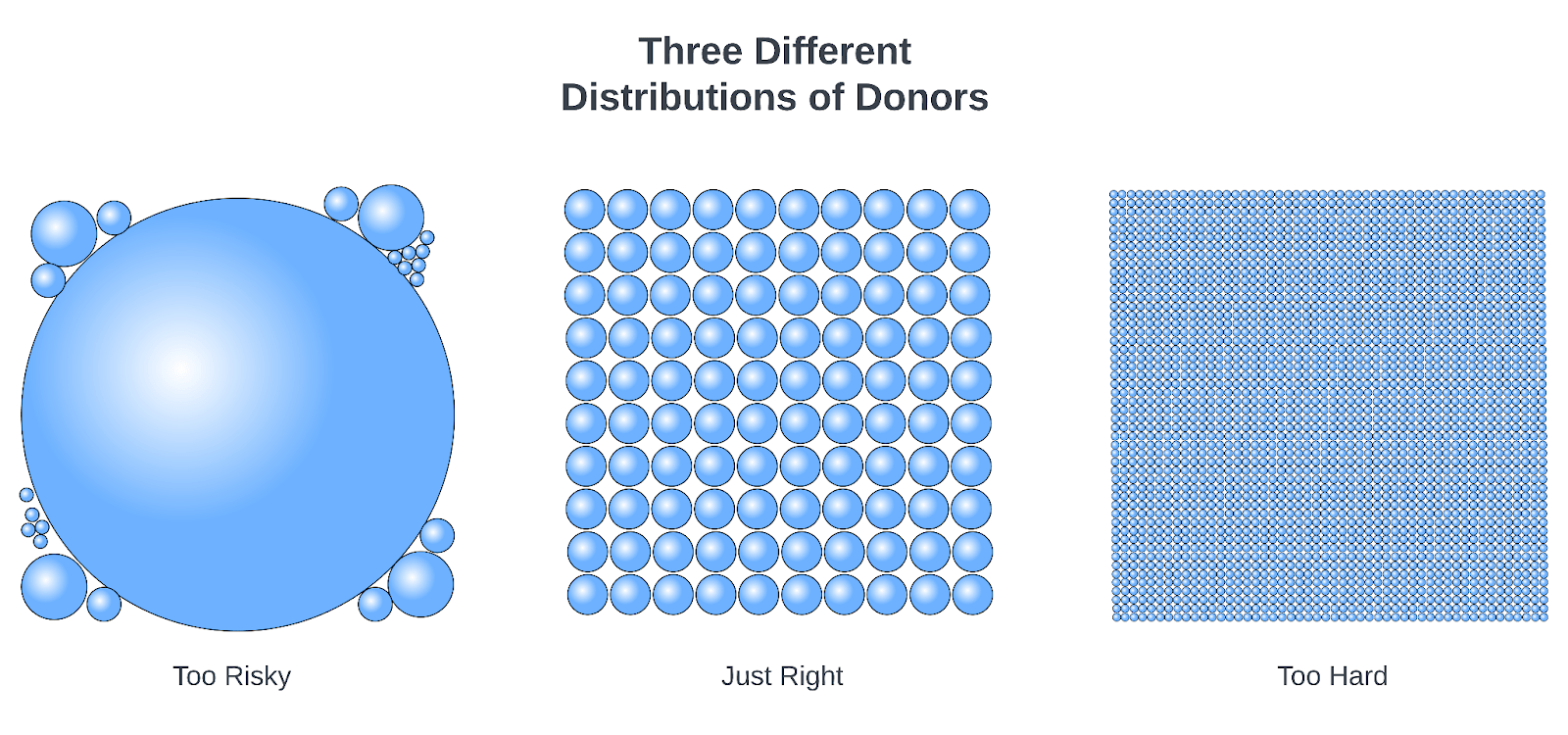

Imagine that a new high-impact charity launches. It needs funding! But getting funding is hard — engaging with donors takes away needed time from important programs. The charity needs $1,000,000 to carry out a promising project. Here are three ways it could get funding from donors:

- It could secure $850,000 of its funding from one donor, and fill in the rest with a mix of mid-sized and smaller donors.

- It could secure $25 from each of 40,000 people.

- It could secure $10,000 from each of 100 people.

The first option is the only option available to most EA organizations right now. Organizations might generally have one giant donor, and a handful of smaller donors fill in gaps in their funding. But it is risky — that singular donor changing their mind about the project might cause it to shut down forever.

The second option might work well for Bernie Sanders but is incredibly difficult without his kind of profile: he needed over 700,000 donations to raise $20,000,000 at an average of $27 each. For an EA organization trying to hire a handful of staff, this model is completely out of the question: getting 40,000 donors is likely impossible, and if it were possible, that might be a sign that the intervention isn’t that neglected anyway.

The third option is a comparatively easy and far more resilient way to build an effective organization. If the EA community embraced earning to give as a way to participate in the community, this approach seems like it would be achievable for a much larger number of organizations.
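To make the comparison concrete, here is a rough sketch of the three options in Python. The gift sizes and donor counts come from the scenarios above, except for how the remaining $150,000 in the first option is split between mid-sized and small donors, which is purely an assumption for illustration. The function names are also just illustrative.

```python
# Each scenario is a list of (gift_size_in_dollars, number_of_donors) pairs.
SCENARIOS = {
    # The split of the remaining $150,000 (10 x $10,000 + 2,000 x $25)
    # is an assumption; the text only says "a mix of mid-sized and smaller donors".
    "one large donor": [(850_000, 1), (10_000, 10), (25, 2_000)],
    "many small donors": [(25, 40_000)],
    "mid-sized donors": [(10_000, 100)],
}


def total_raised(gifts):
    """Total dollars raised across all gifts in a scenario."""
    return sum(size * count for size, count in gifts)


def donor_count(gifts):
    """Number of donor relationships the organization has to maintain."""
    return sum(count for _, count in gifts)


def largest_gift_share(gifts):
    """Fraction of the budget lost if the single largest donor stops giving."""
    return max(size for size, _ in gifts) / total_raised(gifts)


for name, gifts in SCENARIOS.items():
    print(
        f"{name}: ${total_raised(gifts):,} from {donor_count(gifts):,} donors; "
        f"largest donor = {largest_gift_share(gifts):.2%} of budget"
    )
```

Under these assumptions, all three scenarios raise the same $1,000,000, but losing the top donor costs 85% of the budget in the first option and 1% in the third, while the third option needs only 100 donor relationships instead of 40,000.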

Having relatively few donors is good for charities, but having one is too few.

Fundraising is exhausting, distracting, and can be anxiety-inducing. Assuming a project is doing great work, spending as little time as possible on fundraising is ideal. But relying on relationships with only a few individuals comes with issues — most of all, what happens if those funders don’t renew their gifts, and the project collapses? If 85% of an organization’s funding comes from one donor, this is a major threat. A relationship with a single grantmaker completely shapes the organization's trajectory.

If that grantmaker were replaced by a few dozen mid-sized donors, the risk profile completely changes. The organization might not need to invest heavily in fundraising — a few dozen emails sent a year, updating people on projects, etc., isn’t that much work. And, a few individuals changing their minds, or a few grant-makers shifting their focus, isn’t devastating — the project can likely afford to lose some of its donors. Finally, for an organization with a mid to large donor base, a large portion of its donors stopping giving is probably an important signal about the project’s work, impact, or decisions: many people have become convinced it isn't worth continuing to fund.

A robust earning to give ecosystem is better for the EA community

EA has a large amount of resources (money, people, organizations) that are steered by a small number of individuals. Even when grant-makers and major donors are thoughtful about the resulting power dynamics, it’s still a bit of a nerve-wracking reality that the livelihoods of most people in EA are pretty reliant on the decisions of a couple dozen people.

One way to decentralize power is to increase the number of funders. Past authors have cited various ways to do this, including establishing regranting programs (such as Manifund), bringing in more large donors, or pushing earning to give. Increasing the number of people who are earning to give is the only suggestion I’ve seen that truly decentralizes power. Both regranting and large donor outreach will inherently only enable a handful more people to contribute to decision-making. More donors equals more decentralization, and given the difficulties of reaching tens of thousands of small donors, more earning to give seems like the best path for decentralization in EA.

Increasing the number of mid-level donors also seems like it could decentralize power relatively quickly: it likely doesn’t take significant effort to get a few hundred more people earning to give at medium-ish levels. Compared to many of the accomplishments of EA over the last decade, finding a few thousand more donors might be ambitious, but seems completely possible. And this somewhat decentralized structure for funding within EA seems like a reasonable, healthy goal for the community.

More donors means that funding matches community priorities

The more donors there are in the space relative to total dollars, the closer the overall funding distribution will be to community priorities. This seems good: it seems clear that there ultimately are judgment calls on how to do good effectively. We can use tools to hone the nature of those disagreements, but ultimately, if one person values animals’ lives highly, and another thinks the likelihood of an existential catastrophe is particularly elevated right now, they might disagree on the best place to spend charitable resources. One way to address these reasonable disagreements is for funding to be aggregated according to the beliefs of the community. This is democratizing for EA, and might also be a reasonable way to address the uncertainty inherent in trying to do good.

It seems unlikely that major funders in the space would reallocate funds according to community priorities (except via proxies like social pressure), so the best tool we have for now to distribute funding according to the priorities of community members is to increase the number of donors giving sizably to organizations in the space. This seems like a benefit that increasing earning to give produces for the community.

The success of EA shouldn’t only be measured by how much money is moved by the community

Before working in EA, I was a fundraiser for an animal shelter. I would spend an entire year conducting multiple engagements with a single donor to solicit a minor gift. This was inefficient. That organization, and many like it, spend huge portions of their budgets on fundraising.

When I came to EA, one of the most striking things was the generosity — I had never encountered a space where ordinary people were so generous, caring, and humble. It was genuinely shocking to encounter donors who were giving large portions of their income and not asking to be wooed, honored, or celebrated. People just wanted to see evidence of your impact.

The EA community’s early inclination toward giving made it incredibly easy to launch new projects, especially those that focus on causes that don’t benefit the donors directly (such as animal welfare). In many ways, this generous dynamic is still present, but now I mostly only see it through funds and large grantmakers. Normally, it seems rare for new charitable ventures to launch with millions in funding so regularly, but this is common in EA. But it used to feel possible to launch a new organization supported directly by community members. This seems nearly impossible now, or at least much more difficult.

I’m not certain how much better the EA world of 2016 was, or how different it was from the EA of today. But the community atmosphere felt different — it felt easier to go against the status quo (and start a project in a previously highly neglected space) because there might be a handful of random people who would support it. It felt more community-focused — priorities were set by everyone, instead of by a few large foundations. And, as an executive of an EA organization, it felt less precarious. I had direct feedback from donors and supporters on our project regularly and was less tied to the power structures of the space.

There are probably genuine downsides to trying to move the community more toward earning to give again. But, when we measure community health only by looking at “how much money is available relative to the opportunities,” we miss important features of how those funds are controlled, how much community buy-in there is for the strategies funders pursue, and how much risk is entailed in the funding models available to EA organizations. An EA of many mid-sized donors is much better on all these fronts than an EA with a single large donor.

I've thought about similar things to this before. Thanks for writing it out for the community. I think there are a few reasons for this change.

There are a few solutions to this:

I think there are costs to both approaches. There will be a lot less work done by "less committed" EAs if sacrifices compared to the private sector become expected. Furthermore, there are many efficiency gains to be had by not having several grantmaking orgs duplicate the same work + a bunch of less skilled and trained regrantors might on average make worse grants.

Still something to consider.

It's definitely true that, holding everything equal, spending less on salaries means it's easier to fundraise, and organizations can get more done for a given amount of money. But of course if you ask someone who could be earning $500k to work for $50k most of them (including me!) will say no, and even people who say yes initially are more likely to burn out and decide this is not what they want to do with their life.

There are also other costs to pushing hard on frugality: people will start making tradeoffs that don't really make sense given how valuable their time is to the organization. For example, in 2015 when our first child was refusing to eat at daycare it would have been possible for us to hire someone to watch them, but this would have been expensive. Instead, we chose a combination of working unusual hours and working fewer hours so she wouldn't need to be in daycare. We were both earning to give then, so this was fine, but if we had been doing work that was directly valuable I think it would probably have been a false economy.

I think this general area is quite subtle and confusing, where people have really different impressions depending on their background. From my perspective, as someone making about 1/3 as much in a direct work job as I was making (and expect I could still make) in industry, this is already a relative sacrifice. On the other hand, I also know people at EA orgs who would be making less money outside of EA. I don't at all have a good sense of which of these is more typical? And then the longer someone spends within EA, developing EA-specific career capital and foregoing more conventional career capital, the lower a sacrifice it looks like they are making (relative to the highest comp job they could get right now) but also the higher a sacrifice they're actually making (relative to a career in which they had optimized for income).

(And, as always, when people give us as examples of people making large sacrifices, I have to point out that the absolute amount of money we have kept has been relatively high. I expect most EAs who spent a similar period in direct work were living on less and have much lower savings than we do. And I think this would still be true even if current direct work compensation had been in place since 2012.)

EDITED HEAVILY RESPONDING TO COMMENTS BELOW

I have a slightly different perspective, in that it doesn't feel or appear good to label "only" earning 70k a "sacrifice", even if it is technically correct. Maybe using language like "trade-off" or "tough choice" might feel a little better.

70k (OP's suggested salary) still puts you very close to the top 1 percent of global earners. Imagine being a Ugandan interested in effective altruism looking at this discussion. The idea of a salary of 70k US being a 'sacrifice' could seem grating and crass, even if the use of the word is correct. Everyone earning 70k doing a satisfying job that is making a difference in the world is super fortunate, with a whole lot of factors that have come together to make that possible.

Even just having the choice to be able to make the decision whether to earn to give or do direct work for "only" 60 or 70k is a privilege most of the world doesn't have. I think it could be considered insensitive or even arrogant to talk in terms of sacrifice while being among the richest people around. To take it to the extreme (perhaps a straw man though), how would we feel about a sentence like "Bill Gates sacrificed an hour of his valuable time to serve in a soup kitchen"?

Yes, it may be psychologically hard to earn a whole lot less than you could be earning doing something else, but I'm not sure "sacrifice" is the best terminology to be using.

I've seen a number of conversations about salary in EA where people talk past each other. Below is a list of what I think are some key cruxes- not everywhere people disagree, but where they are most likely to have different implicit assumptions, and most likely to have disagreements stemming only from definitions. I'd love if everyone arguing about salary in this thread would fill this out.

What salary are you proposing? (US$70k?)

Obviously there are lots of different countries, and lots of different cause areas. But just focusing on one in particular: I think CEA employees in the UK could largely be paid teacher salaries (24-30K GBP) and basically be fine, without CEA losing lots of talent.

Pretty good conditions. Good apartment, good savings.

As soon as you're saving for pension, you're in a good spot I'd say.

>85% I'd guess. Keep in mind median UK salary is 38k GBP, and median UK age of work force is significantly older than CEA employees (older people need more money).

The same as the for-profit world: if they're good enough they're paid more. But with a downward pressure on people's salaries, not just an upward pressure (as I believe we currently have).

If you lose your top choice due to insufficient salary, how good do you expect the replacement to be?

For CEA, I'd likely guess they'd be indistinguishable for most roles most of the time.

What is your counterfactual for the money saved on salary?

Half of one more employee, and a marginal effect resulting in more of an agile ecosystem (i.e. more entrepreneurship, more commitment).

I'm CEA's main recruitment person and I've been involved with CEA's hiring for 6+ years. I've also been involved in hiring rounds for other EA orgs.

I don't remember a case where the top two candidates were "indistinguishable." The gap very frequently seems quite large (e.g. our current guess is the top candidate might be twice as impactful, by some definition of expected impact). There have also been many cases where the gap is so large we don't hire for the role at all and work we feel is important simply doesn't happen. There have also, of course, been cases where we have two candidates we are similarly excited about. This is rare. If it does happen, we'd generally be happy to be transparent about the situation, and so if you have been offered a role and are wondering about your own replaceability, I'd encourage you to just ask.

FWIW, my experience (hiring mostly operations roles) is often the opposite: I find for non-senior roles that I usually reach the end of a hiring process, and am making a pretty arbitrary choice between multiple candidates who all seem quite good on the (relatively weak) evidence from the hiring round. But, I also think RP filters a lot less heavily on culture fit / value alignment for ops roles than CEA does, which might be the relevant factor making this difference.

Thanks for clarifying, and I am certainly inclined to defer to you.

One concern I would have is the extent to which these subjective estimations are borne out by empirical data. Obviously you'd never deliberately hire the second best candidate, so we never really test our accuracy. I suspect this is particularly bad with hiring, where the hiring process can be really comprehensive and it's still possible to make a bad hire.

To add to what Caitlin said, my experience as a hiring manager and as a candidate is that this often is not the case.

When I was hired at CEA I took roles on two different teams (Head of US Operations at CEA and the Events Team role at EAO, which later merged into CEA). My understanding at the time is that they didn’t have second choice candidates with my qualifications, and I was told by the EAO hiring manager that they would have not filled the position if I didn’t accept (I don’t remember whether I checked this with the CEA role).

I should note that I was applying for these roles in 2015 and that the hiring pool has changed since then. But in my experience as a hiring manager (especially for senior/generalist positions), it can be really hard to find a candidate that fits the specific requirements. Part of this is that my team requires a fairly specific skillset (that includes EA context, execution ability, and fit with our high energy culture) but I wouldn’t be surprised if other hiring managers have similar experiences. I think as the team grows and more junior positions become available this might be more flexible, though I think “indistinguishable” is still not accurate.

I've definitely had roles I hired for this year where the top candidate was better than the second-place candidate by a large margin.

How senior was this position? Or, can you say more about how this varies across different roles and experience levels?

Based on some other responses to this question I think replaceability may be a major crux, so the more details the better.

Not Peter, but looking at the last ~20 roles I've hired for, I'd guess that during hiring, maybe 15 or so had an alternative candidate who seemed worth hiring (though perhaps did worse in some scoring system). These were all operations roles within an EA organization. For 2 more senior roles I hired for during that time, there appeared to be suitable alternatives. For other less senior roles there weren't (though I think the opposite generally tends to be more true).

I do think one consideration here is we are talking about who looked best during hiring. That's different than who would be a better employee. We're assuming our hiring process does a good job of assessing people's fit / job performance, etc., and we know that the best predictors during hiring are only moderately correlated with later job performance, so it's plausible that often we think there is a big gap between two candidates, but they'd actually perform equally well (or that someone who seems like the best candidate isn't). Hiring is just a highly uncertain business, and predicting long-term job performance from, like, 10 hours of sample work and interviews is pretty hard. I'm somewhat skeptical that looking at hiring data is even the right approach, because you'd also want to control for things like whether those employees always meet performance expectations in the future, etc., and you never actually get counterfactual data on how good the person you didn't hire was. I'm certain that many EA organizations have hired someone who appeared to be better than the alternative by a wide margin, and easily cleared a hiring bar, but who later turned out to have major performance issues, even if the organization was doing a really good job evaluating people.

Thanks for responding, it has really helped me clarify my understanding of your views.

I do think the comparison to teacher wages is a little unfair. In the US teaching jobs are incredibly stable, and stable things pay less. Unless the UK is very different, I expect that EA jobs would need to pay more just to have people end up with the same financial situation over time, because instability is expensive. But this is maybe a 10% difference, so not that important in the scheme of things.

I think a big area of contention (in all the salary discussions, not just this one) stems from a disagreement on questions 6 and 7. For fields like alignment research, the answers may be "replacement is 1/10th as good" and "there is nothing else to spend the money on". But for fields like global health and poverty, "salary trades off directly against work". So it's not surprising those fields look very different.

I'm not sure where CEA falls in this spectrum. In a sister comment Cait says that for CEA their number two candidate is often half as impactful as their top choice, and sometimes they choose to forego filling a role entirely if the top choice is unavailable. I'm surprised by that and a little skeptical that it's unfixable, but I also expect they've put a lot of thought into recruitment. If CEA is regularly struggling to fill roles, that certainly explains some of the salary explanation.

Cait didn't mention this but I believe I read elsewhere that CEA didn't want too big a gap between programmers and non-programmers, and solved this by ~overpaying non-programmers. I don't know how I feel about this. I guess tentatively I think that it was awfully convenient to solve the problem by overpaying people rather than expecting people to become comfortable with a salary gap, and it might have been good to try harder at that, or divide the orgs such that it wasn't so obvious. My understanding is that CEA struggled to find programmers[1], so paying less is a nonstarter.

There was a long LW thread on this that left me with the impression that CEA's main problem was it was looking for the wrong thing, and the thing it was looking for was extremely expensive. But I imagine that even if they were looking for the cheaper right thing, they couldn't get it with teacher wages.

I agree that there should be a premium for instability.

I agree this is a crux. To the extent you're paying more for better candidates, I am pretty happy.

Related to this, I have an intuition that salaries get inflated by EAs being too nice.

Also related to this point, a concern I have is that paying little, or paying competitive salaries in workplaces which have an ideologically driven supply/demand mismatch (i.e. the game industry, or NGOs), often leads to toxic workplaces. If paying a premium avoids these, it could definitely be worth it. Although I am sceptical how much it helps, over other things (like generally creating a nice place to work, making people feel safe, hiring the right people etc.).

Happy to answer these

I suppose 6 and 7 are for an individual hire and not for EA as a whole.

Thanks Elizabeth, can you clarify what you mean by no. 6?

Suppose you want to hire Alice, but she won't accept the salary, so you go to your second choice, Bob. How good is Bob, relative to Alice? Indistinguishable? 90% of impact? 50% of impact?

In many cases you just don't end up hiring anyone. There is no Bob.

Can you elaborate on this? What's your data source?

To come back to this, this podcast has an argument sort of adjacent to the conversation here.

No data source, just personal experience.

Maybe a more common outcome is eventually you find Bob but it’s 18 months later and you had to do without Alice for that time.

Rephrase: where is your personal experience from?

I'm not asking for survey data and suspect EA is too weird to make normal surveys applicable. So personal anecdotes or at best the experience of people involved in lots of hiring is the most we can hope for.

Sorry Elizabeth, I hope you’ll understand if I don’t want to share more about this in a public forum. Happy to speak privately if you like.

I know we are moving to absurdity now a little, but I feel like at 70,000 dollars in a position where people are doing meaningful work that aligns with their values, 90 percent Bob is probably there.

Any sacrifice is relative. You can only sacrifice something if you had or could have had it in the first place.

Yes I agree with this, Jeff used the words "relative sacrifice", and I was wondering if it would be better words to use, as it somewhat softens the "sacrifice" terminology. But I think I'm wrong about this. Have deleted it from the comment.

The sense in which I'm using "sacrifice" is just "giving something up": it's not saying anything about how the situation post-sacrifice compares to other people's. For example, I could talk about how big or small a sacrifice it would be for me to go vegan, even if as a vegan I would still be spending more on food and having a wider variety of options than most people globally. I think this is pretty standard, and looking through various definitions of "sacrifice" online I don't see anyone seeming to use it the way you are suggesting?

Yes, I've googled and I think you are right: the word sacrifice is mainly used in the way you were using it in your comment. I've edited my comment to reflect this.

I still think using the concept of sacrifice when we are talking about people at the absolute top of the chain deciding between a range of amazing life options doesn't feel great, and perhaps doesn't look great externally either. I would rather talk positively about choosing the best option we have, or perhaps talk about "trade-offs" rather than "sacrifices".

Given how much the community has grown, isn't this just what you'd expect? There are way more people than there were in 2016, which pushes down how weird the community is on average due to regression to the mean. The obvious solution is that EA should just be smaller and more selected for altruism (which I think would be an error).

I think the simplest explanation for this is that there are just more roles with well-paying counterfactuals, e.g. ML engineering. Excluding those roles, after you adjust for the cost of living, most EA roles aren't particularly well paid, e.g. Open Phil salaries aren't much higher than the median in SF and maybe less than the BART (Bay Area metro) police.

Sure, I'm not suggesting we become smaller but I don't think it's completely either or. A bit more altruistic pressure/examples seems good.

SF is among the most expensive cities in the world. Above median salary in the most expensive parts of the world seems quite high for non-profits. I think the actual simplest explanation is that EA got billions of dollars (a lot of which is now gone) and that had upward pressure on salaries.

Again, my comment mainly answered the question of why we don't have a robust earning to give ecosystem in EA. Not nearly enough people make enough money to give the type of sums that EA organizations spend.

Do you mean these numbers literally? You think people are being paid $1m a year, and all expenses are staff expenses?

To clarify, I don't think salaries are anywhere near $1M. I do think there are a lot of people at EA organizations who make $150-200k per year or so. When you add benefits, lunches, office space, expense policies, PTO, full healthcare packages, staff retreats, events, unnecessary software packages, taxes, etc., a typical organization might be spending 1/3rd of their budget on salaries, so something like $5-6M, not $10M. I wasn't being exact with numbers and I should have been.

I think many EA organizations could reduce their budgets by ~50%, drastically turn up the frugality and creativity and remain approximately as effective.

I'm not sure what effects this would have. I suspect less "exodus from EA" than people think.

I'm also just speaking to what happened as to why we don't have a robust earning to give ecosystem. It's much different having 50 people fund a 100-500k org than a 5M org.

Minor downvoted because this comment seems to take Marcus's comment out of context / misread it:

I don't think the numbers are likely exactly right, but I think the broad point is correct. I think that likely an organization starting with say 70% market rate salaries in the longtermist space could, if it pursued fairly aggressive cost savings, reduce their budget by much more than 30%.

As an example, I was once quoted on office space in the community that cost around $14k USD / month for a four person office, including lunch every day. For a 10 person organization, that is around $420k/year for office and food. Switching to a $300/person/mo office, and not offering the same perks, which is fairly easily findable, including in large cities (though it won't be Class A office space) would save $384k, which is like, 4 additional staff at $70k/year, if that's our benchmark.
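As a rough check of the arithmetic above, here is a minimal sketch in Python. The variable names are illustrative, and the only inputs are the figures quoted in the comment (a $14k/month four-person office, a 10-person organization, a $300/person/month alternative, and a $70k/year salary benchmark). It assumes the quoted office price scales linearly with headcount.

```python
# Figures quoted in the comment above; all names are illustrative.
QUOTED_MONTHLY_COST = 14_000    # USD/month for a four-person office, lunch included
QUOTED_OFFICE_SIZE = 4          # people covered by that quote
STAFF = 10                      # size of the hypothetical organization
CHEAP_COST_PER_PERSON = 300     # USD/person/month for the cheaper office
BENCHMARK_SALARY = 70_000       # USD/year


def annual_office_cost(cost_per_person_month: float, staff: int) -> float:
    """Yearly office cost, assuming cost scales linearly with headcount."""
    return cost_per_person_month * staff * 12


premium_per_person = QUOTED_MONTHLY_COST / QUOTED_OFFICE_SIZE
premium_annual = annual_office_cost(premium_per_person, STAFF)
cheap_annual = annual_office_cost(CHEAP_COST_PER_PERSON, STAFF)
savings = premium_annual - cheap_annual
salaries_covered = savings / BENCHMARK_SALARY

print(f"Premium office: ${premium_annual:,.0f}/year")
print(f"Cheaper office: ${cheap_annual:,.0f}/year")
print(f"Savings:        ${savings:,.0f}/year")
print(f"Benchmark salaries covered: {salaries_covered:.1f}")
```

On salary alone, the savings cover a bit more than five benchmark salaries, so the "4 additional staff" figure is on the conservative side (plausibly leaving room for non-salary costs per hire, though that is my assumption rather than something stated in the comment).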

FWIW, I feel uncertain about frugality of this sort being desirable — but I definitely believe there are major cost savings on the table.

I think it depends a lot on whether you think the difference between 10x ($10M vs $1M) and 1.4x (30% savings) is a big deal? (I think it is)

The main out of context bit is that Elizabeth's comment seemed to interpret Marcus as only referring to salary, when the full comment makes it very clear that it wasn't just about that, which seemed like a strong misreading to me, even if the 10x factor was incorrect.

I suspect the actual "theoretically maximally frugal core EA organization with the same number of staff" is something like 2x-3x cheaper than current costs, if salaries moved to the $50k-$70k range.

I see Elizabeth as saying "all expenses are staff expenses", which is broader than salary and includes things like office and food?

I think Elizabeth was pointing out that the calculation implies only staff costs contribute to the overall budget, because if you have expenses for things other than staff (ex: in my current org we spend money on lab reagents and genetic sequencing, which don't go down if we decide to compensate more frugally) then your overall costs will drop less than your staff costs.

I'm not just talking about salaries when I talk about the costs of orgs (as I said, my original comment is a diagnosis, not a prescription). To use the example of lab reagents and genetic sequencing, I've worked in several chemistry labs and they operate very differently depending on how much money is available. When money is abundant (and frugality is not a concern), things like buying expensive laptops/computers (vs. thinking about what is actually necessary) to run equipment, buying more expensive chemicals, re-use of certain equipment (vs. discarding on single use), celebrations of accomplishments, etc. all are different.
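To make that point concrete, here is a small sketch in Python with made-up numbers. The 60% staff share and the 30% staff-cost cut are assumptions chosen purely for illustration, not figures from this thread, and the function name is hypothetical.

```python
def overall_reduction(staff_share: float, staff_cut: float) -> float:
    """Fractional drop in total budget when only staff costs are cut.

    staff_share: fraction of the total budget spent on staff (0 to 1)
    staff_cut:   fractional reduction applied to staff costs (0 to 1)
    """
    if not (0 <= staff_share <= 1 and 0 <= staff_cut <= 1):
        raise ValueError("both arguments must be fractions between 0 and 1")
    return staff_share * staff_cut


# If every expense is a staff expense, a 30% staff cut is a 30% budget cut.
all_staff = overall_reduction(staff_share=1.0, staff_cut=0.30)

# If staff costs are only 60% of the budget (assumed), the same cut
# shrinks the overall budget by less.
mixed = overall_reduction(staff_share=0.60, staff_cut=0.30)

print(f"All-staff budget: {all_staff:.0%} overall reduction")
print(f"60% staff budget: {mixed:.0%} overall reduction")
```

The smaller the staff share of the budget, the less a given compensation cut moves total costs.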

The way I see it, from 2016 to 2022, EA (and maybe specifically longtermism) got a bunch of billionaire money that grew the movement's "money" resource faster than other resources like talent and time, and so money could be used more liberally, especially when trading it off against time, talent, ingenuity, creativity, etc.

Thanks for writing this up! One problem with this proposal that I didn't see flagged (but may have missed) is that if the ETG donors defer to the megadonors you don't actually get a diversified donor base. I earn enough to be a mid-sized donor, but I would be somewhat hesitant about funding an org that I know OpenPhil has passed up on/decided to stop funding, unless I understood the reasons why and felt comfortable disagreeing with them. This is both because of fear of unilateralist curse/downside risks, and because I broadly expect them to have spent more time than me and thought harder about the problem. I think there's a bunch of ways this is bad reasoning, grantmaker time is scarce and they may pass up on a bunch of good grants due to lack of time/information/noise, but it would definitely give me pause.

If I were giving specifically within technical AI Safety (my area of expertise), I'd feel this less strongly, but still feel it a bit, and I imagine most mid-sized donors wouldn't have expertise in any EA cause area.

OP doesn't have the capacity to evaluate everything, so there are things they don't fund that are still quite good.

Also OP seems to prefer to evaluate things that have a track record, so taking bets on people to be able to get more of a track record to then apply to OP would be pretty helpful.

I also think orgs generally should have donor diversity and more independence, so giving more funding to the orgs that OP funds is sometimes good.

Maybe there should be some way for OP to publicize what they don't evaluate, so others can avoid the adverse selection.

IMO, these both seem like reasons for more people to work at OP on technical grant making more than reasons for Neel to work part time on grant making with his money.

Why not both? I assume OP is fixing their capacity issues as fast as they can, but there still will be capacity issues remaining. IMO Neel still would add something here that is worth his marginal time, especially given Neel's significant involvement, expertise, and networks.

The underlying claim is that many people with technical expertise should do part time grant making?

This seems possible to me, but a bit unlikely.

I think it's worth considering. My guess is that doing so would not necessarily be very time consuming. It could also be interesting for them to pool donations to limit the number of people who need to do it, form a giving circle, or donate to a fund (e.g., EA Funds).

I'd be curious to hear more about this - naively, if I'm funding an org, and then OpenPhil stops funding that org, that's a fairly strong signal to me that I should also stop funding it, knowing nothing more. (since it implies OpenPhil put in enough effort to evaluate the org, and decided to deviate from the path of least resistance)

Agreed re funding things without a track record, that seems clearly good for small donors to do, eg funding people to do independent research or start a small new research group, if you believe they're promising

I've found that if a funder or donor asks (and they are known in the community), most funders are happy to privately respond about whether they decided against funding someone, and often why, or at least to say whether they think it is not a good idea and are opposed rather than just not interested.

Thanks Neel, I get the issue in general, but I'm a bit confused about what exactly the crux really is here for you?

I would have thought you would be in one of the best positions of anyone to donate to an AI org - you are fully immersed in the field and, I would have thought, in a good position to fund things you think are promising on the margin, perhaps even new and exciting things that AI funds may miss?

Out of interest, why aren't you giving a decent chunk away at the moment? Feel free not to answer if you aren't comfortable with it!

I'm in a relatively similar position to Neel. I think technical AI safety grant makers typically know way more than me about what is promising to fund. There is a bunch of non-technical info which is very informative for knowing whether a grant is good (what do current marginal grants look like, what are the downside risks, is there private info on the situation which makes things seem sketchier, etc.) and grant makers are generally in a better position than I am to evaluate this stuff.

The limiting factor [in technical AI safety funding] is in having enough technical grant makers, not in having enough organizational diversity among grantmakers (at least at current margins).

If OpenPhil felt more saturated on technical AI grant makers, then I would feel like starting new orgs pursuing different funding strategies for technical AI safety could look considerably better than just having more people work at grant making at OpenPhil.

That said, note that I tend to agree to a reasonable extent with the technical takes at OpenPhil on AI safety. If I heavily disagreed, I might think starting new orgs looks pretty good.

I disagree with this (except unilateralist curse), because I suspect something like the efficient market hypothesis plays out when you have many medium-small donors. I think it's suspect that one wouldn't make the same argument as the above for the for-profit economy.

I disagree, because you can't short a charity, so there's no way for overhyped charity "prices" to go down

My claim is that your intuitions are the opposite of what they would be if applied to the for-profit economy. Your response (if I understand correctly) is questioning the veracity of the analogy - which seems not to really get at the heart of the efficient market heuristic. I.e. you haven't claimed that bigger donors are more likely to be efficient, you've just claimed efficiency in charitable markets is generally unlikely?

Besides this, shorting isn't the only way markets regulate (or deflate) prices. "Selling" is the more common pathway. In this context, "selling" would be medium donors changing their donation to a more neglected/effective charity. It could be argued that this is more likely to happen under a dynamic donation "marketplace" with lots of medium donors than in a less dynamic donation "marketplace" with fewer but bigger donors.

Ah, gotcha. If I understand correctly you're arguing for more of a "wisdom of the crowds" analogy? Many donors is better than a few donors.

If so, I agree with that, but think the major disanalogy is that the big donors are professionals, with more time, experience, and context, while small donors are not - big donors are more like hedge funds, small donors are more like retail investors in the efficient market analogy.

Thanks for pointing that out, Neel. It is also worth having in mind that GWWC's donations are concentrated in a few dozen donors:

Given the donations per donor are so heavy-tailed, it is very hard to avoid organisations being mostly supported by a few big donors. In addition, GWWC recommends donating to funds for most people:

I agree with this. Personally, I have spent significant time engaging with EA-related matters, but continue to donate to the Long-Term Future Fund (LTFF) because I do not have a good grasp of which opportunities are best within AI safety, even though I have opinions about which cause areas are more pressing (I also rate animal welfare quite highly).

I am more positive about people working on cause area A to decide on which interventions are most effective within A (e.g. you donating to AI safety interventions). However, people earning to give may well not be familiar with any cause area, and it is unclear whether the opportunity cost to get quite familiar would be worth it, so I think it makes sense to defer.

On the other hand, I believe it is important for donors to push funds to be more transparent about their evaluation process. One way to do this is donating to more transparent funds, but another is donating directly to organisations.

Yeah, I think there is an open question of whether or not this would cause a decline in the impact of what's funded, and this reason is one of the better cases why it would.

I think one potential middle-ground solution to this is having like, 5x as many EA Fund type vehicles, with more grant makers representing more perspectives / approaches, etc., and those funds funded by a more diverse donor base, so that you still have high quality vetting of opportunities, but also grantmaking bodies who are responsive to the community, and some level of donor diversity possible for organizations.

Yeah, that intermediate world sounds great to me! (though a lot of effort, alas)

I agree with this post. One mechanism that's really missing in this discussion is the value of marginal grants. Large grant makers claim to appreciate the value of marginal grants, but in practice I have rarely seen it. Instead, for small orgs at least, a binary decision is made to fund or not fund the project.

For smaller and upstarting projects, these marginal grants are really important (as highlighted in your story). A donor might not be able to fund an office space, but they can fund the deposit. Or they can't fund the deposit, but they can fund the furniture. These are small coordination problems, but they make up most of what it means to get small and medium sized organizations off the ground.

I agree - all else equal - you'd rather have a flatter distribution of donors for the diversification (various senses) benefits. I doubt this makes this an important objective all things considered.

The main factor on the other side of the scale is scale itself: a 'megadonor' can provide a lot of support. This seems to be well illustrated by your original examples (Utility Farm and Rethink). Rethink started later, but grew 100x larger, and faster too. I'd be surprised if folks at UF would not prefer Rethink's current situation, trajectory - and fundraising headaches - to their own.

In essence, there should be some trade-off between 'aggregate $' and 'diversity of funding sources' (however cashed out) - pricing in (e.g.) financial risks/volatility for orgs, negative externalities on the wider ecosystem, etc. I think the trade between 'perfectly singular support' and 'ideal diversity of funding sources' would be much less than an integer factor, and more like 20% or so (i.e. maybe better getting a budget of 800k from a reasonably-sized group than 1M from a single donor, but not better than 2M from the same).

I appreciate the recommendation here is to complement existing practice with a cohort of medium sized donors, but the all things considered assessment is important to gauge the value of marginal (or not-so-marginal) moves in this direction. Getting (e.g.) 5000 new people giving 20k a year seems a huge lift to me. Even if that happens, OP still remains the dominant single donor (e.g. it gave roughly the amount this hypothetical cohort would to animal causes alone in 2022). The diffuse 'ecosystem wide' benefits of these additional funders struggle, by my lights, to vindicate the effort (and opportunity costs) of such a push.

One problem I have with these discussions, including past discussions about why national EA orgs should have fundraising platforms, is the reductionist and zero-sum thinking given in response.

I identified above how an argument stating that fewer donors results in more efficiency would never be made in the for-profit world. Similarly, a lot of the things we care about (talent, networks, entrepreneurship) become stronger the more small/medium donors we have. For the same reason that eating 3 meals in a day makes it easier to be productive - despite it taking more time compared to not eating at all - having more of a giving small-donor ecosystem will make it easier to achieve other things we need.

Wait, but it might actually have opportunity cost? Like those people could be doing something other than trying to get more medium sized donors? There is a cost to trying to push on this versus something else. (If you want to push on it, then great, this doesn't impose any cost on others, but that seems different from a claim that this is among the most promising things to be working on at the margin.)

Your argument here is "getting more donors has benefits beyond just the money" (I think). But, we can also go for those benefits directly without necessarily getting more donors. Like maybe trying to recruit more medium sized donors is the best way to community build, but this seems like sort of a specific claim which seems a priori unlikely (it could be true ofc) unless having more small donors is itself a substantial fraction of the value and that's why it's better than other options.

So, recruiting donors is perhaps subsidized by causing the other effects you noted, but if it's subsidized by some huge factor (e.g. more like 10x than 1.5x) then directly pursuing the effects seems like probably a better strategy.

Most of the people working on giving platforms are pretty uniquely passionate about giving. The donation platform team we have isn't that excited about EA community building in general. This is a good, concrete example of one way a zero-sum model breaks down.

Just to be clear, the mechanisms I think are at play here are A) meeting people where they are and B) providing people with autonomous ways to take action. These mechanisms are good for reaching a subset of people, and getting them engaged.

I am inclined to lean towards multiple axes of engagement, i.e. let's promote prediction markets (including the gamification model that Manifold uses) to reach people and get people engaged. Let's throw intensive career discussions at those that would find that interesting. Likewise, let's not forget about donations as an important part of participating in this community.

Thanks for writing this!

I find this vision compelling, but I wonder how far away we are from it. As Gregory Lewis mentions in another comment, 5,000 donors giving $20,000 is ambitious in the short term (under 10,000 people have signed the GWWC pledge, for example).

To get a slightly more concrete view of the tractability here: In the case of Rethink, what portion of donations would need to come from small/medium-sized donors before it had appreciable effect on Rethink's ability to:

(PS- no worries if you'd rather not share your opinions on your employer specifically, I only mention them because you bring them up in the body of the post).

I think that one thing I reflect on is how much money has been spent on EA community building over the last 5 years or so. I'm guessing it is several 10s of millions of dollars. My impression (which might not be totally right) is that little of that went to promoting earning to give. So it seems possible that in a different world, where a much larger fraction was used on E2G outreach, we could have seen a substantial increase. The GWWC numbers are hard to interpret, because I don't think anything like massive, multi-million dollar E2G outreach has been tried, at least as far as I know.

I think broadly, it would be healthy for any organization of RP's size to not have a single funder giving over 40% of their funding, and ideally less. I assume the realistic version of this that might be possible is something like a $10M org having $4M from one funder, maybe a couple of $500k-$1M donors, a few more $20k-$500k donors, and a pretty wide base of <$20k donors. So in that world, I'm guessing an organization would want to be generating something like 15%-20% of its revenue from these mid-size donors? So definitely still a huge lift.

But, I think one thing worth considering is that while ideally there might be tens of thousands of $20k donors in EA, doing the outreach to get tens of thousands of $20k donors, if successful, will probably also bring in hundreds of <$100k donors, and maybe some handful of <$1M donors. This might not meet the ideal situation I laid out above, but on the margin seems very good.

Thanks, this makes sense. I also have the impression that E2G was deprioritised. In some cases, I've seen it actively spoken against (in the vein of "I guess that could be good, but it is almost no one's most impactful option"), mostly as pushback to the media impression that EA=E2G. I also see the point that more diversified funding seems good on the margin- to give organisations more autonomy and security.

One thing that isn't mentioned in this piece is the risks that come from relying on a broader base of donors. If the EA community would need to be much, much larger in order to support the current organisations with more diversified funding, how would this change the way the organisations acted? In order to keep a wider range of funders happy, would there be pressures to appeal to a common denominator? Would it in fact become harder to service and maintain relations with a wider range of donors? I presume that the ideal is closer to the portfolio you sketch for an organisation like RP in this comment.

I’m pretty sure that GWWC, Founders Pledge, Longview and Effective Giving all have operating costs of over $1M per year. It seems like quite a lot of effort has gone into testing the e2g growth hypothesis.

That doesn't seem quite right to me - Longview and EG don't strike me as being earning to give outreach, though they definitely bring funds into the community. And Founders Pledge is clearly only targeting very large donors. I guess maybe to be more specific, nothing like massive, multi-million dollar E2G outreach has been tried for mid-sized / every day earning to give, as you're definitely right that effort has gone into bringing in large donors.

I guess I don’t really know what you have in mind. I’m particularly confused as to what definition of outreach you’re using if it excludes Longview.

GWWC almost certainly has an annual budget of over $1m and is also pretty clearly investing in e2g.

I don’t think there’s much e2g as a career stuff since 80k pivoted away from that message - but that’s obviously much narrower than e2g outreach.

Longview is interested in courting those who are already interested in donating at least seven figures annually. Their primary goal is to provide guidance to those who are already committed to donating on how they can use that money to do the most good.

An ETG outreach organization would be looking to get people to A. donate a significant portion of their money that they are not already giving to effective charities and/or B. start making career decisions that enable them to make more money so that they can ETG.

So there are organisations spending more than $1m per year who have encouraged people to donate a significant fraction of their income (for example Founders Pledge, GWWC and OFTW, though I’m not sure of OFTW’s budget).

80k Hours have also looked into which careers pay the best (e.g. writing about quant trading and being an early startup employee) - I think they have a budget of around $5m/year. So it sounds like the ETG outreach organisation would need to do both of these things and focus on e2g rather than other things. “How do I optimise my career to make money” is a pretty common question and I think you should be somewhat sceptical of EAs’ ability to beat non-EA resources.

It’s worth noting that there aren’t any multimillion dollar organisations that I’m aware of that do the equivalent thing (outreach + career advice) for solely AI safety, biosecurity or global health.