Ben_West🔸

Bio

Non-EA interests include chess and TikTok (@benthamite). We are probably hiring: https://metr.org/hiring

How others can help me

Feedback always appreciated; feel free to email/DM me or use this link if you prefer to be anonymous.

If you manage to convince an investor that timelines are very short without simultaneously convincing them to care a lot about x-risk, I feel like their immediate response will be to rush to invest briefcases full of cash into the AI race, thus helping make timelines shorter and more dangerous.

I'm the corresponding author for a paper that Holly is maybe subtweeting. I was worried about this before publication, but I don't really feel like those fears were realized.

Firstly, I don't think there are actually very many people who sincerely think that timelines are short but aren't scared by that. I think what you are referring to is people who think "timelines are short" means something like "AI companies will 100x their revenue in the next five years", not "AI companies will be capable of instituting a global totalitarian state in the next five years." There are some people who believe the latter and aren't bothered by it but in my experience they are pretty rare.

Secondly, when VCs get the "AI companies will 100x their revenue in the next five years" version of short timelines they seem to want to invest into LLM-wrapper startups, which makes sense because almost all VC firms lack the AUM to invest in the big labs.[1] I think there are plausible ways in which this makes timelines shorter and more dangerous but it seems notably different from investing in the big labs.[2]

Overall, my experience has mostly been that getting people to take short timelines seriously is very close to synonymous with getting them to care about AI risk.

[1] Caveat that ~everyone has the AUM to invest in publicly traded stocks. I didn't notice any bounce in share price for e.g. NVDA when we published and would be kind of surprised if there was a meaningful effect, but hard to say.

[2] Of course, there's probably some selection bias in terms of who reaches out to me. Masayoshi Son probably feels like he has better info than what I could publish, but by that same token me publishing stuff doesn't cause much harm.

Thanks for doing this, Saulius! I have been wondering about modeling the cost-effectiveness of animal welfare advocacy under assumptions of relatively short AI timelines. It seems like one possible way of doing this is to change the "Yearly decrease in probability that commitment is relevant" numbers in your sheet (cells I28:30). Do you have any thoughts on that approach?

You had never thought through "whether artificial intelligence could be increasing faster than Moore’s law." Should we conclude that AI risk skeptics are "insular, intolerant of disagreement or intellectual or social non-conformity (relative to the group's norms), and closed-off to even reasonable, relatively gentle criticism?"

I have to say, the bad part supports my observation!

Steven was responding to this:

The community of people most focused on keeping up the drumbeat of near-term AGI predictions seems insular, intolerant of disagreement or intellectual or social non-conformity (relative to the group's norms), and closed-off to even reasonable, relatively gentle criticism

None of Steven's bullet points support this. Many of them say the exact opposite of this.

More seriously, I didn’t really think through precisely whether artificial intelligence could be increasing faster than Moore’s law.

Fair enough, but in that case I feel kind of confused about what your statement "Progress does not seem like a fast exponential trend, faster than Moore's law" was intended to imply.

If the claim you are making is "AGI by 2030 will require some growth faster than Moore's law" then the good news is that almost everyone agrees with you but the bad news is that everyone already agrees with you so this point is not really cruxy to anyone.

Maybe you have an additional claim like "...and growth faster than Moore's law is unlikely?" If so, I would encourage you to write that because I think that is the kind of thing that would engage with people's cruxes!

If you drew a chart for the GPT models on ARC-AGI-2, it would mostly just be a flat line. It's only with the o3-low and o1-pro models we see scores above 0%

... which is what (super)-exponential growth looks like, yes?

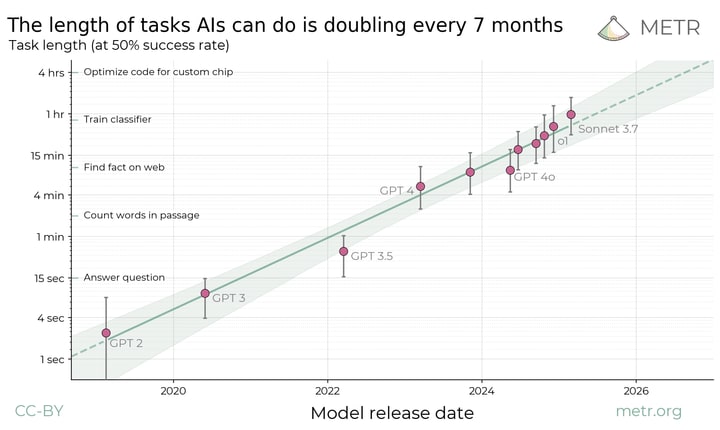

Specifically: We've gone from o1 (low) getting 0.8% to o3 (low) getting 4% in ~1 year, which is ~2 doublings per year (i.e. 4x Moore's law). Forecasting from this few data points sure seems like a cursed endeavor to me, but if you want to do it then I don't see how you can rule out Moore's-law-or-faster growth.
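For anyone who wants to check the arithmetic, here is a minimal sketch in Python. The names `doublings_per_year` and `MOORE_DOUBLINGS_PER_YEAR` are illustrative, and the sketch assumes exactly one year between the two scores (the "~1 year" above is approximate). With unrounded numbers it comes out to roughly 2.3 doublings per year, a bit over 4x Moore's law, which is consistent with the rounded "~2" and "4x" figures above.

```python
import math


def doublings_per_year(start: float, end: float, years: float) -> float:
    """Doublings per year implied by growing from `start` to `end` over `years`."""
    return math.log2(end / start) / years


# Moore's law taken as ~1 doubling every 2 years, as stated in the thread.
MOORE_DOUBLINGS_PER_YEAR = 1 / 2

# ARC-AGI-2 scores quoted above: o1 (low) at 0.8%, o3 (low) at 4%.
# Assumption: the gap between them is treated as exactly 1 year.
arc = doublings_per_year(start=0.8, end=4.0, years=1.0)
ratio = arc / MOORE_DOUBLINGS_PER_YEAR

print(f"{arc:.2f} doublings/year, {ratio:.1f}x Moore's law")
# prints: 2.32 doublings/year, 4.6x Moore's law
```

Two data points pin down any exponential you like, so this only shows that the quoted scores are compatible with Moore's-law-or-faster growth, not that the trend will continue.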

Progress does not seem like a fast exponential trend, faster than Moore's law and laying the groundwork for an intelligence explosion

Moore's law is ~1 doubling every 2 years. Barnes' law is ~4 doublings every 2 years:

This is cool, I like BHAGs in general and this one in particular. Do you have a target for when you want to get to 1M pledgers?