Lorenzo Buonanno🔸

Bio

Participation 1

Hi!

I'm currently (Aug 2023) a Software Developer at Giving What We Can, helping make giving significantly and effectively a social norm.

I'm also a forum mod, which, shamelessly stealing from Edo, "mostly means that I care about this forum and about you! So let me know if there's anything I can do to help."

Please have a very low bar for reaching out!

I won the 2022 donor lottery and am happy to chat about that as well.

Posts 11

Comments 618

Topic contributions 5

you have a history of apologetics for Anthropic, Lorenzo.

I'm very surprised by this and by the many strong votes and agree votes. I don't know much about Anthropic, and I don't write much about it. Is it possible that you're confusing me with someone else? You can see all my EA Forum comments and tweets mentioning "Anthropic" here and here.

I was also confused by that paragraph, as someone who read the handbook in ~2022. I just randomly came across this and this, and apparently this was an issue 7 years ago. I think it's likely that several people who have been around longer than us haven't noticed that the handbooks and CEA staff changed a lot.

Edit: the comment above has since been edited. What follows was a reply to a previous version and makes less sense now; I'm leaving it for posterity.

You know much more than I do, but I'm surprised by this take. My sense is that Anthropic is giving a lot back:

funding

My understanding is that all early investors in Anthropic made a ton of money; it's plausible that Moskovitz made as much money by investing in Anthropic as by founding Asana. (Of course, this is all paper money for now, but I think they could sell it for billions.)

As mentioned in this post, the co-founders also pledged to donate 80% of their equity, which seems to imply they'll give back much more funding than they got. (Of course, this is only in EV: the equity could still go to zero.)

staff

I don't see why hiring people is more "taking" than "giving", especially if the hires get to work on things that they believe are better for the world than any other role they could take.

and doesn't contribute anything back

My sense is that (even ignoring the funding mentioned above) they are giving a ton back in terms of research on alignment, interpretability, model welfare, and AI safety more generally.

To be clear, I don't know if Anthropic is net-positive for the world, but it seems to me that its trades with EA institutions have been largely mutually beneficial. You could argue that Anthropic could be "giving back" even more to EA, but I'm skeptical that this would be the most cost-effective use of their resources (including time and brand value).

As a side point, I'm confused when you say:

I don't think they suggest that, depending on your definition of "strong". Just above the screenshotted quote, the article mentions that many early investors were at the time linked to EA.

That was said by the author of the article who was trying to make the point that there is a link between Anthropic and EA. So I don't see this as evidence of Anthropic being forthcoming.

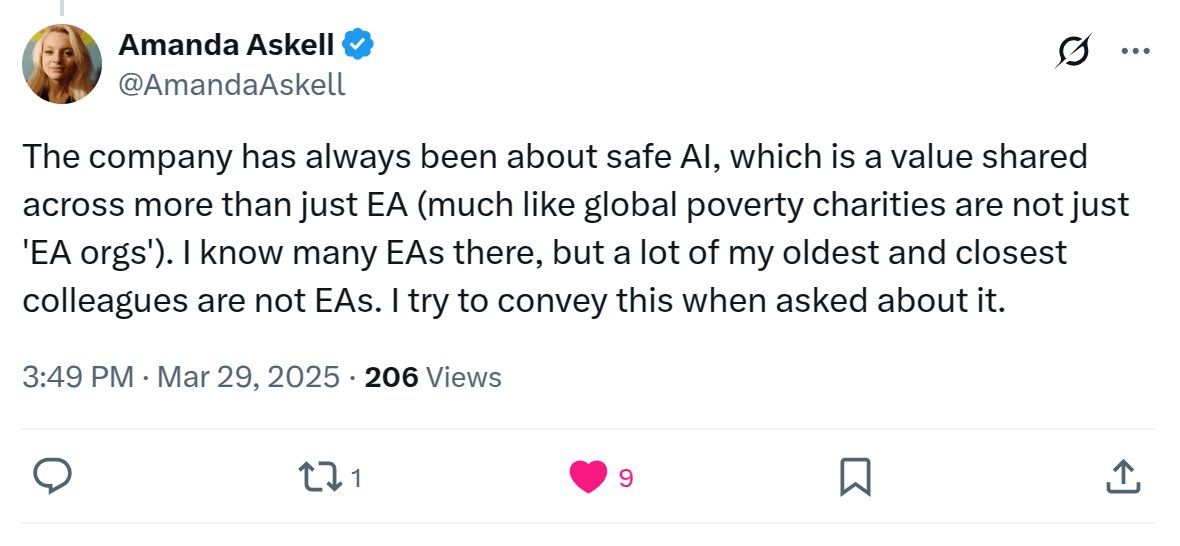

I think in the context of the article, their quotes (44 words in total) make more sense:

In that context, the quotes clarify that Anthropic is not an "EA company", and give a more accurate understanding of the relationship to the reader.

A more in-depth analysis of the historical affiliations, separations, agreements, and disagreements of Anthropic's funders, founders, and employees with various parts of EA over the past 15 years would take far more than two paragraphs.

If I didn't know anything about Anthropic and I read the words “I definitely have met people here who are effective altruists, but it's not a theme of the organization or anything”, I might think Anthropic is like Google where you may occasionally meet people in the cafeteria who happen to be effective altruists but EA really has nothing to do with the organisation.

You wouldn't think that in the context of the article, though.

I would not get the impression that many of the employees are EAs who work at Anthropic or work on AI safety for EA reasons, or that the three members of the trust to which they've given veto power over the company have been heavily involved in EA.

I don't know what percentage of Anthropic employees consider themselves part of the EA community. Also, I don't agree that Evidence Action's CEO is clearly part of the effective altruism community just because Evidence Action received money from GiveWell.

https://www.linkedin.com/in/kanika-bahl-091a936/details/experience/ She has been working in global health since before effective altruism was a thing, and many, if not most, people funded by Open Philanthropy don't consider themselves part of the community, in the same way that charities funded by Catholic donors are not necessarily Catholic. It does seem that Open Philanthropy was their main source of funding for many years, though, which makes the link stronger than I originally thought.

I think the people in the article you quote are being honest about not identifying with the EA social community, and the EA community on X is being weird about this.

I think the confusion might stem from interpreting EA as "self-identifying with a specific social community" (which they claim they don't, at least not anymore) vs. EA as "wanting to do good and caring about others" (which they claim they do, and always did).

Going point by point:

Dario, Anthropic’s CEO, was the 43rd signatory of the Giving What We Can pledge and wrote a guest post for the GiveWell blog. He also lived in a group house with Holden Karnofsky and Paul Christiano at a time when Paul and Dario were technical advisors to Open Philanthropy.

This was more than 10 years ago. EA was a very different concept/community at the time, and this is consistent with Daniela Amodei saying that she considers it an "outdated term".

Amanda Askell was the 67th signatory of the GWWC pledge.

This was also more than 10 years ago, and giving to charity is not unique to EA. Many early pledgers don't consider themselves EA (e.g., signatory #46 claims it got too stupid for him years ago).

Many early and senior employees identify as effective altruists and/or previously worked for EA organisations

Amanda Askell explicitly says "I definitely have met people here who are effective altruists" in the article you quote, so I don't think this contradicts it in any way

https://x.com/AmandaAskell/status/1905995851547148659

Anthropic has hired a "model welfare lead" and seems to be the company most concerned about AI sentience, an issue that's discussed little outside of EA circles.

That's false: https://en.wikipedia.org/wiki/Artificial_consciousness

On the Future of Life podcast, Daniela said, "I think since we [Dario and her] were very, very small, we've always had this special bond around really wanting to make the world better or wanting to help people" and "he [Dario] was actually a very early GiveWell fan I think in 2007 or 2008."

The Anthropic co-founders have apparently made a pledge to donate 80% of their Anthropic equity (mentioned in passing during a conversation between them here and discussed more here). Their first company value states, "We strive to make decisions that maximize positive outcomes for humanity in the long run."

Wanting to make the world better, wanting to help people, and giving significantly to charity are not prerogatives of the EA community.

It's perfectly fine if Daniela and Dario choose not to personally identify with EA (despite having lots of associations) and I'm not suggesting that Anthropic needs to brand itself as an EA organisation

I think that's exactly what they are doing in the quotes in the article: "I don't identify with that terminology" and "it's not a theme of the organization or anything"

But I think it’s dishonest to suggest there aren’t strong ties between Anthropic and the EA community.

I don't think they suggest that, depending on your definition of "strong". Just above the screenshotted quote, the article mentions that many early investors were at the time linked to EA.

I think it’s a bad look to be so evasive about things that can be easily verified (as evidenced by the twitter response).

I don't think X responses are a good metric of honesty, and those seem to be mostly from people in the EA community.

In general, I think it's bad for the EA community that everyone who interacts with it has to worry about being liable for life for anything the EA community might do in the future.

I don't see why it can't let people decide if they want to consider themselves part of it or not.

As an example, imagine if I were Catholic, founded a company to do good, raised funding from some Catholic investors, and some of the people I hired were Catholic. If 10 years later I weren't Catholic anymore, it wouldn't be dishonest for me to say "I don't identify with the term, and this is not a Catholic company, although some of our employees are Catholic". And giving to charity or wanting to do good wouldn't be gotchas that I'm secretly still Catholic and hiding the truth for PR reasons. And this is not even about being a part of a specific social community.

GiveWell posts a lot of interesting stuff on their blog and on their website, but in the past year they only reposted hiring announcements on the EA Forum.

E.g., I don't think that "USAID Funding Cuts: Our Response and Ways to Help" from 10 days ago was cross-posted here, but I think many readers would have found it interesting.

Neil Buddy Shah also serves on Anthropic’s Long-Term Benefit Trust (see mentions of CHAI on this page)

Importantly, it seems that GiveWell only funds specific programs from CHAI, not CHAI as a whole. It could very well be the case that CHAI as a whole is inefficient and not particularly good at what they do, but GiveWell thinks those specific programs are cost-effective.

Disclaimer: this is only from looking at GiveWell's website and searching for "CHAI", I don't have any insider information

I think that is extremely unlikely; they have a lot to lose as soon as it's confirmed that the archived data has not been manipulated.

Also, from the page you cite:

we emphasize that these attacks can in most cases be launched only by the owners of particular domains.

So they would need to claim that you took control of a relevant domain as well.

But even if something like that happened, you could show that the archive has not been tampered with (e.g., by linking the exact resource containing the information, or by pointing to the "about this capture" tool that the web archive added to mitigate this).

I strongly agree that the benefits of sharing the evaluation greatly outweigh the risks, but I'm not sure that sharing it relatively early is best:

- There is a risk of starting a draining back-and-forth that could block or massively delay publication. See, e.g., "Research Deprioritizing External Communication", which was delayed by one year.

- It would take the org more time to review a very early draft and point out mistakes that would be fixed anyway.

- It could cause the org to take the evaluation less seriously, and be less likely to take action based on the feedback.

I think the minimal version proposed by @Jason, of just sending a copy a week or two in advance, is an extremely low-cost policy that mitigates most of the risks and provides most of the benefits (though some limited back-and-forth would be ideal).

This whole thing is about two quotes with 44 words in total, which really don't seem to me to be false or misleading. Based on the little information I have, and in the context of the article, I think they bring the portrayal of the relationship closer to what I believe is true, and they are consistent with their previous messaging.

Of course, I could be wrong, and Askell and Amodei could secretly have mostly EA friends, go to EA parties, secretly self-identify with the community, etc., but I don't think there's public evidence to suggest that.