NunoSempere

Bio

I run Sentinel, a team that seeks to anticipate and respond to large-scale risks. You can read our weekly minutes here. I like to spend my time acquiring deeper models of the world, and generally becoming more formidable. I'm also a fairly good forecaster: I started out predicting on Good Judgment Open and CSET-Foretell, but now do most of my forecasting through Samotsvety, of which Scott Alexander writes:

Enter Samotsvety Forecasts. This is a team of some of the best superforecasters in the world. They won the CSET-Foretell forecasting competition by an absolutely obscene margin, “around twice as good as the next-best team in terms of the relative Brier score”. If the point of forecasting tournaments is to figure out who you can trust, the science has spoken, and the answer is “these guys”.

I used to post prolifically on the EA Forum, but nowadays, I post my research and thoughts at nunosempere.com / nunosempere.com/blog rather than on this forum, because:

- I disagree with the EA Forum's moderation policy—they've banned a few disagreeable people whom I like, and I think they're generally a bit too censorious for my liking.

- The Forum website has become more annoying to me over time: more cluttered and more pushy in terms of curated and pinned posts (I've partially mitigated this by writing my own minimalistic frontend).

- The above two issues have made me take notice that the EA Forum is beyond my control, and it feels like a dumb move to host my research on a platform that has goals different from my own.

But a good fraction of my past research is still available here on the EA Forum. I'm particularly fond of my series on Estimating Value.

My career has been as follows:

- Before Sentinel, I set up my own niche consultancy, Shapley Maximizers. This was very profitable, and I used the profits to bootstrap Sentinel. I am winding this down, but if you have need of estimation services for big decisions, you can still reach out.

- I used to do research around longtermism, forecasting and quantification, as well as some programming, at the Quantified Uncertainty Research Institute (QURI). At QURI, I programmed Metaforecast.org, a search tool which aggregates predictions from many different platforms; a more up-to-date alternative might be adj.news. I spent some time in the Bahamas as part of the FTX EA Fellowship, and did a bunch of work for the FTX Foundation, which then went to waste when it evaporated.

- I write a Forecasting Newsletter which gathered a few thousand subscribers; I previously abandoned it but have recently restarted it. I used to really enjoy winning bets against people too confident in their beliefs, but I now try to do this in structured prediction markets, because betting against normal people started to feel like taking candy from a baby.

- Previously, I was a Future of Humanity Institute 2020 Summer Research Fellow, and then worked on a grant from the Long Term Future Fund to do "independent research on forecasting and optimal paths to improve the long-term."

- Before that, I studied Maths and Philosophy, dropped out in exasperation at the inefficiency, picked up some development economics; helped implement the European Summer Program on Rationality during 2017, 2018, 2019, 2020 and 2022; worked as a contractor for various forecasting and programming projects; volunteered for various Effective Altruism organizations, and carried out many independent research projects. In a past life, I also wrote a popular Spanish literature blog, and remain keenly interested in Spanish poetry.

You can share feedback anonymously with me here.

Note: You can sign up for all my posts here: <https://nunosempere.com/.newsletter/>, or subscribe to my posts' RSS here: <https://nunosempere.com/blog/index.rss>

Posts 111

Comments 1229

Topic contributions 14

Sorry to hear, man. I tried to reach out to someone at OP a few months ago when I heard about your funding difficulties but I got ignored :(. Anyways, donated $100 and made a Twitter thread here

We have strong reasons to think we know what the likely sources of existential risk are - as @Sean_o_h's new paper lays out very clearly.

Looked at the paper. The abstract says:

In all cases, an outcome as extreme as human extinction would require events or developments that either have been of very low probability historically or are entirely unprecedented. This introduces deep uncertainty and methodological challenges to the study of the topic. This review provides an overview of potential human extinction causes considered plausible in the current academic literature...

So I think you are overstating it a bit, i.e., it's hard to support statements about existential risks coming from classified risks vs unknown unknowns/black swans. But if I'm getting the wrong impression I'm happy to read the paper in depth.

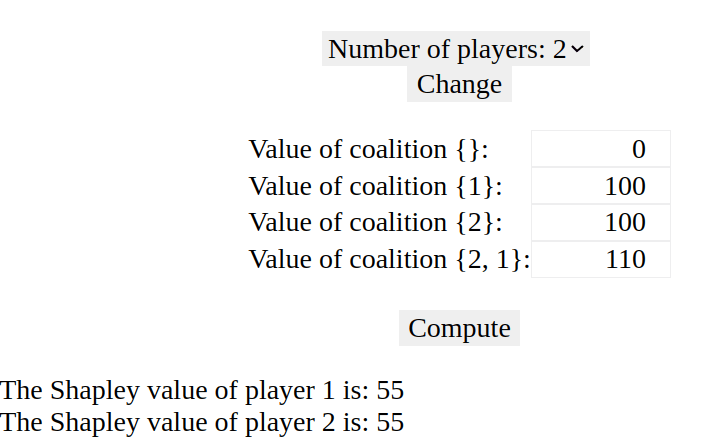

These are two different games. The joint game would be

- Value of {}: 0

- Value of {1}: 60

- Value of {2}: 0

- Value of {1,2}: 100

and in that game player one is indeed better off in Shapley value terms if he joins together with 2.
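For anyone who wants to check this by hand, here is a minimal sketch of the calculation for the joint game above. The function name `shapley_values` and the dictionary layout are my own choices for illustration, not anything taken from shapleyvalue.com; the approach is the standard one of averaging each player's marginal contribution over every order in which players could join.

```python
from itertools import permutations
from math import factorial


def shapley_values(players, value):
    """Average each player's marginal contribution over all join orders.

    `value` maps a frozenset of players to the worth of that coalition.
    (Hypothetical helper written for this example.)
    """
    totals = {p: 0.0 for p in players}
    for order in permutations(players):
        coalition = frozenset()
        for p in order:
            with_p = coalition | {p}
            # Marginal contribution of p when joining the current coalition
            totals[p] += value[with_p] - value[coalition]
            coalition = with_p
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in totals.items()}


# The joint game described above
joint_game = {
    frozenset(): 0,
    frozenset({1}): 60,
    frozenset({2}): 0,
    frozenset({1, 2}): 100,
}

result = shapley_values([1, 2], joint_game)
print(result)  # {1: 80.0, 2: 20.0}
```

Player 1 gets 80 in the joint game, compared with the 60 he can secure alone, which is the sense in which he is better off joining with 2.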

I'll let you reflect on how/whether adding an additional option can't decrease someone's Shapley value, but I'll get back to my job :)

Thanks Felix, great question.

I'm not sure I'm following, and suspect you might be missing some terms; can you give me an example I can plug into shapleyvalue.com? If there is some uncertainty that's fine (so if e.g., in your example Newton has a 50% chance of inventing calculus and ditto for Leibniz, that's fine).

Seems true assuming that your preferred conversion between human and animal lives/suffering is correct, but one can question those ranges. In particular, it seems likely to me that how much you should value animals is not an objective fact of life but a factor that varies across people.

This also made more salient to me the need to become more independent of larger donors, so I'll be messaging some Sentinel readers to get more paid subscriptions.