It's common for people who approach helping animals from a quantitative direction to need some concept of "moral weights" so they can prioritize. If you can avert one year of suffering for a chicken or ten for shrimp, which should you choose? Now, moral weight is not the only consideration with questions like this, since typically the suffering involved will also be quite different, but it's still an important factor.

One of the more thorough investigations here is Rethink Priorities' moral weights series. It's really interesting work and I'd recommend reading it! Here's a selection from their bottom-line point estimates comparing animals to humans:

| Species | Point estimate |
| --- | --- |
| Humans | 1 (by definition) |
| Chickens | 3 |
| Carp | 11 |
| Bees | 14 |
| Shrimp | 32 |

If you find these surprisingly low, you're not alone: that giving a year of happy life to twelve carp might be more valuable than giving one to a human is for most people a very unintuitive claim. The authors have a good post on this, Don't Balk at Animal-friendly Results, that discusses how the assumptions behind their project make this kind of result pretty likely and argues against putting much stock in our potentially quite biased initial intuitions.

What concerns me is that I suspect people rarely get deeply interested in the moral weight of animals unless they come in with an unusually high initial intuitive view. Someone who thinks humans matter far more than animals and wants to devote their career to making the world better is much more likely to choose a career focused on people, like reducing poverty or global catastrophic risk. Even if someone came into the field with, say, the median initial view on how to weigh humans vs animals, I would expect that working as a junior person in a community of people who value animals highly would exert a large influence in that direction, regardless of the underlying truth. If you somehow could convince a research group, not selected for caring a lot about animals, to pursue this question in isolation, I'd predict they'd end up with far less animal-friendly results.

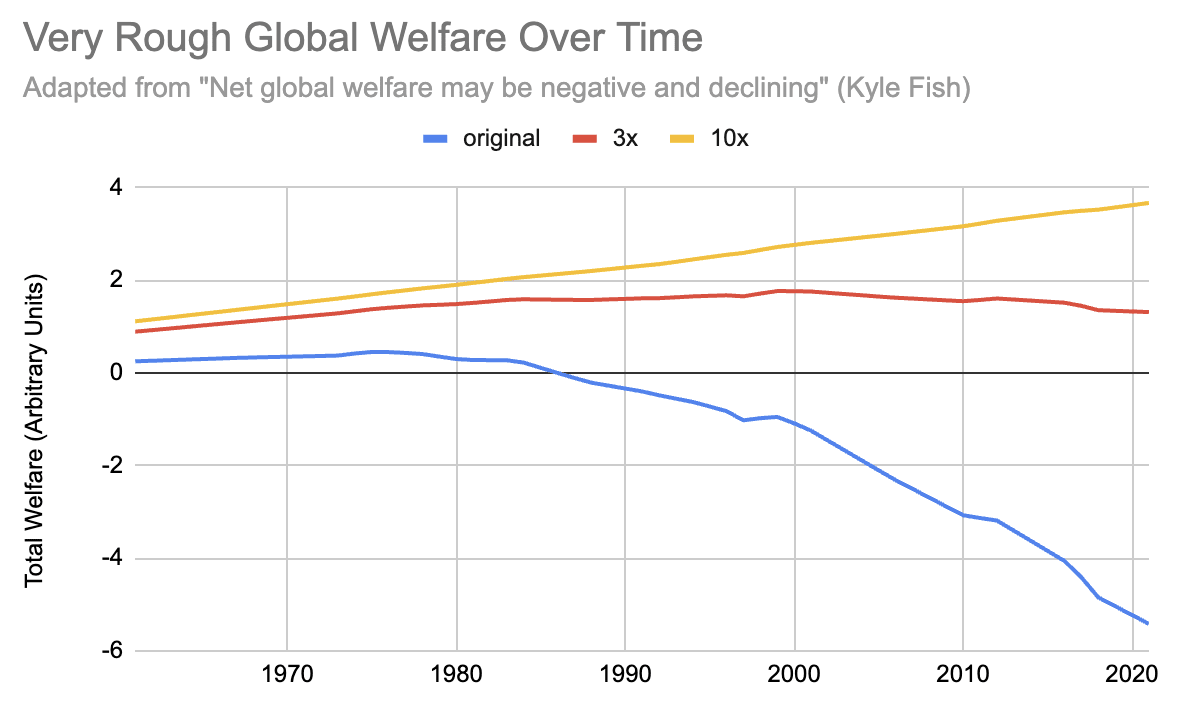

When using the moral weights of animals to decide between various animal-focused interventions this is not a major concern: the donors, charity evaluators, and moral weights researchers are coming from a similar perspective. Where I see a larger problem, however, is in broader cause prioritization, such as Net Global Welfare May Be Negative and Declining. The post weighs the increasing welfare of humanity over time against the increasing suffering of livestock, and concludes that things are likely bad and getting worse. If you ran the same analysis with different inputs, such as what I'd expect you'd get from my hypothetical research group above, however, you'd instead conclude the opposite: global welfare is likely positive and increasing.

For example, if that sort of process ended up with moral weights that were 3x lower for animals relative to humans we would see approximately flat global welfare, while if they were 10x lower we'd see increasing global welfare:

See sheet to try your own numbers; original chart digitized via graphreader.com.

Note that both 3x and 10x are quite small compared to the uncertainty involved in coming up with these numbers: in a different post the Rethink authors give 3x (and maybe as high as 10x) just for the likely impact of using objective list theory instead of hedonism, which is only one of many choices involved in estimating moral weights.
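To make the size of those adjustments concrete, here is a minimal sketch of what the point estimates in the table would become if animal weights were 3x or 10x lower. It assumes each table entry means "animal-years equal in value to one human-year", a reading inferred from the "twelve carp" remark rather than stated by the Rethink authors. The function name `scale_estimates` is hypothetical, and the sketch does not reproduce the global welfare chart, which needs the population data in the linked sheet.

```python
# Table entries, read as "animal-years equal in value to one human-year".
# That reading is an assumption inferred from the surrounding text.
POINT_ESTIMATES = {"Chickens": 3, "Carp": 11, "Bees": 14, "Shrimp": 32}


def scale_estimates(estimates, factor):
    """Return animals-per-human after lowering animal weights by `factor`.

    Lowering an animal's weight by 3x means it takes 3x as many
    animal-years to match one human-year.
    """
    return {species: count * factor for species, count in estimates.items()}


for factor in (1, 3, 10):
    scaled = scale_estimates(POINT_ESTIMATES, factor)
    row = ", ".join(f"{species}: {count}" for species, count in scaled.items())
    print(f"{factor:>2}x lower -> {row}")
```

Under the 10x adjustment a human year would be matched by 30 chicken years instead of 3, which is still a far more animal-friendly figure than many people's starting intuitions.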

I think the overall project of figuring out how to compare humans and animals is a really important one with serious implications for what people should work on, but I'm skeptical of, and put very little weight on, the conclusions so far.

Hi Jeff. Thanks for engaging. Three quick notes. (Edit: I see that Peter has made the first already.)

First, and less importantly, our numbers don't represent the relative value of individuals, but instead the relative possible intensities of valenced states at a single time. If you want the whole animal's capacity for welfare, you have to adjust for lifespan. When you do that, you'll end up with lower numbers for animals---though, of course, not OOMs lower.

Second, I should say that, as people who work on animals go, I'm fairly sympathetic to views that most would regard as animal-unfriendly. I wrote a book criticizing arguments for veganism. I've got another forthcoming that defends hierarchicalism. I've argued for hybrid views in ethics, where different rules apply to humans and animals. Etc. Still, I think that conditional on hedonism it's hard to get MWs for animals that are super low. It's easier, though still not easy, on other views of welfare. But if you think that welfare is all that matters, you're probably going to get pretty animal-friendly numbers. You have to invoke other kinds of reasons to really change the calculus (partiality, rights, whatever).

Third, I've been trying to figure out what it would look like to generate MWs for animals that don't assume welfarism (i.e., the view that welfare is all that matters morally). But then you end up with all the familiar problems of moral uncertainty. I wish I knew how to navigate those, but I don't. However, I also think it's sufficiently important to be transparent about human/animal tradeoffs that I should keep trying. So, I'm going to keep mulling it over.

I want to add that personally, before this RP "capacity for welfare" project, I started with an intuition that a human year was worth about 100-1000 times more than a chicken year (mean ~300x) conditional on chickens being sentient. But after reading the RP "capacity for welfare" reports thoroughly I have now switched roughly to the RP moral weights, valuing a human year at about 3x a chicken year conditional on chickens being sentient (which I think is highly likely but handled in a different calculation). This report conclusion did come as a large surprise to me.

Obviously me changing my views to match RP research is to be expected given that I am the co-CEO of RP. But I want to be clear that contra your suspicions it is not the case at least for me personally that I started out with insanely high moral value on chickens and then helped generate moral weights that maintained my insanely high moral value (though note that my involvement in the project was very minimal and I didn't do any of the actual research). I suspect this is also the case for other RP team members.

That being said, I agree that the tremendous uncertainty involved in these calculations is important to recognize, plus there likely will be some potentially large interpersonal variation based on having different philosophical assumptions (e.g., not hedonism) as well as different fundamental values (given moral anti-realism which I take to be true).

Mmm, good point, I'm not trying to say that. I would predict that most people looking into the question deeply would shift in the direction of weighting animals more heavily than they did at the outset. But it also sounds like you did start with, for a human, unusually pro-animal views?

Jeff, are you saying you think "an intuition that a human year was worth about 100-1000 times more than a chicken year" is a starting point of "unusually pro-animal views"?

In some sense, this seems true relative to most humans' implied views by their actions. But, as Wayne pointed out above, this same critique could apply to, say, the typical American's views about global health and development. Generally, it doesn't seem to buy much to frame things relative to people who've never thought about a given topic substantively and I don't think you'd think this would be a good critique of a foreign aid think tank looking into how much to value global health and development.

Maybe you are making a different point here?

Also, it would help more if you were being explicit about what you think a neutral baseline is. What would you consider more typical or standard views about animals from which to update? Moment to moment human experience is worth 10,000x that of a chicken conditional on chickens being sentient? 1,000,000x? And, whatever your position, why do you think that is a more reasonable starting point?

I did say that, and at the time I wrote that I would have predicted that in realistic situations requiring people to trade off harms/benefits going to humans vs chickens the median respondent would just always choose the human (but maybe that's just our morality having a terrible sense of scale), and Peter's 300x mean would have put him somewhere around 95th percentile.

Since writing that I read Michael Dickens' comment, linking to this SSC post summarizing the disagreements [1], and I'm now less sure. It's hard for me to tell exactly what the surveys included: for example, I think they excluded people who didn't think animals have moral worth at all, and it's not clear to me whether they were getting people to compare lives vs life years. I don't know if there's anything better on this?

I agree! I'm not trying to say that uninformed people's off-the-cuff guesses about moral weights are very informative on what moral weights we should have. Instead, I'm saying that people start with a wide range of background assumptions and if two people started off with 5th and 95th percentile views trading off benefits/harms to chickens vs humans I expect them to end up farther apart in their post-investigation views than two people who started at 95th.

[1] That post cites David Moss from RP as having run a better survey, and summarizes it, but doesn't link to it -- I'm guessing this is because it was Moss doing something informally with SSC and the SSC post is the canonical source, but if there's a full writeup of the survey I'd like to see it!

David's post is here: Perceived Moral Value of Animals and Cortical Neuron Count

What do you think of this rephrasing of your original argument:

I think this argument is very bad and I suspect you do too. You can rightfully point out that in this context someone starting out at the 5th percentile before going into a foreign aid investigation and then determining foreign aid is much more valuable than the general population thinks would be, in some sense, stronger evidence than if they had instead started at the 95th percentile. However, that seems not super relevant. What's relevant is whether it is defensible at all to norm to a population based on their work on a topic given a question of values like this (that or if there were some disanalogy between this and animals).

Generally, I think the typical American, when faced with real tradeoffs (they actually are faced with these tradeoffs implicitly as part of a package vote), doesn't value the lives of the global poor equally to the lives of their fellow Americans. More importantly, I think you shouldn't norm where your values on global poverty end up after investigation back to what the typical American thinks. I think you should weigh the empirical and philosophical evidence about how to value the lives of the global poor directly and not do too much, if any, reference class checking about other people's views on the topic. The same argument holds for whether and how much we should value people 100 years from now after accounting for empirical uncertainty.

Fundamentally, the question isn't what people substantively do think (except for practical purposes), the question is what beliefs are defensible after weighing the evidence. I think it's fine to be surprised by what RP's moral weight work says on capacity for welfare, and I think there is still high uncertainty in this domain. I just don't think either of our priors, or the general population's priors, about the topic should be taken very seriously.

Awesome, thanks! Good post!

First, I think GiveWell's research, say, is mostly consumed by people who agree people matter equally regardless of which country they live in. Which makes this scenario more similar to my "When using the moral weights of animals to decide between various animal-focused interventions this is not a major concern: the donors, charity evaluators, and moral weights researchers are coming from a similar perspective."

But say I argued that the US Department of Transportation funding ($12.5M/life) should be redirected to foreign aid until they had equal marginal costs per life saved. I don't think the objection I'd get would be "Americans have greater moral value" but instead things like "saving lives in other countries is the role of private charity, not the government". In trying to convince people to support global health charities I don't think I've ever gotten the objection "but people in other countries don't matter" or "they matter far less than Americans", while I expect vegan advocates often hear that about animals.

I have gotten the latter one explicitly and the former implicitly, so I'm afraid you should get out more often :).

More generally, that foreigners and/or immigrants don't matter, or matter little compared to native born locals, is fundamental to political parties around the world. It's a banal take in international politics. Sure, some opposition to global health charities is an implied or explicit empirical claim about the role of government. But fundamentally, not all of it is: a lot of people don't value the lives of the out-group, and people not in your country are in the out-group (or at least not in the in-group) for much of the world's population.

GiveWell donors are not representative of all humans. I think a large fraction of humanity would select the "we're all equal" option on a survey but clearly don't actually believe it or act on it (which brings us back to revealed preferences in trades like those humans make about animal lives).

But even if none of that is true, were someone to make this argument about the value of the global poor, the best moral (I make no claims about what's empirically persuasive) response is "make a coherent and defensible argument against the equal moral worth of humans including the global poor", and not something like "most humans actually agree that the global poor have equal value so don't stray too far from equality in your assessment." If you do the latter, you are making a contingent claim based on a given population at a given time. To put it mildly, for most of human history I do not believe we even would have gotten people to half-heartedly select the "moral equality for all humans" option on a survey. For me at least, we aren't bound in our philosophical assessment of value by popular belief here or for animal welfare.

Yikes; ugh. Probably a lot of this is me talking to so many college students in the Northeast.

I think maybe I'm not being clear enough about what I'm trying to do with my post? As I wrote to Wayne below, what I'm hoping happens is:

Some people who don't think animals matter very much respond to RP's weights with "that seems really far from where I'd put them, but if those are really right then a lot of us are making very poor prioritization decisions".

Those people put in a bunch of effort to generate their own weights.

Probably those weights end up in a very different place, and then there's a lot of discussion, figuring out why, and identifying the core disagreements.

I think my median is mostly still at priors (which started off not very different from yours). Though I guess I have more uncertainty now, so if forced to pick, the average is closer to the project's results than I previously thought, simply because of how averages work.

I think that with a strong prior, you should conclude that RP's research would be incorrect at representing your values.

Possibly, but what would your prior be based on to warrant being that strong?

I think this is a possible outcome, but not guaranteed. Most people have been heavily socialized to not care about most animals, either through active disdain or more mundane cognitive dissonance. Being "forced" to really think about other animals and consider their moral weight may swing researchers who are baseline "animal neutral" or even "anti-animal" more than you'd think. Adjacent evidence might be the history of animal farmers or slaughterhouse workers becoming convinced animal killing is wrong through directly engaging in it.

I also want to note that most people would be less surprised if a heavy moral weight is assigned to the species humans are encouraged to form the closest relationships with (dogs, cats). Our baseline discounting of most species is often born from not having relationships with them, not intuitively understanding how they operate because we don't have those relationships, and/or objectifying them as products. If we lived in a society where beloved companion chickens and carps were the norm, the median moral weight intuition would likely be dramatically different.

Is this actually much evidence in that direction? I agree that there are many examples of former farmers and slaughterhouse workers that have decided that animals matter much more than most people think, but is that the direction the experience tends to move people? I'm not sure what I'd predict. Maybe yes for slaughterhouse workers but no for farmers, or variable based on activity? For example, I think many conventional factory farming activities like suffocating baby chicks or castrating pigs are things the typical person would think "that's horrible" but the typical person exposed to that work daily would think "eh, that's just how we do it".

I agree and I point to that more so as evidence that even in environments that are likely to foster a moral disconnect (in contrast to researchers steeped in moral analysis) increased concern for animals is still a common enough outcome that it's an observable phenomenon.

(I'm not sure if there's good data on how likely working on an animal farm or in a slaughterhouse is to convince you that killing animals is bad. I would be interested in research that shows how these experiences reshape people's views and I would expect increased cognitive dissonance and emotional detachment to be a more common outcome.)

This criticism seems unfair to me:

I'm going to simplify a bit to make this easier to talk about, but imagine a continuum in how much people start off caring about animals, running from 0% (the person globally who values animals least) to 100% (values most). Learning that someone who started at 80% looked into things more and is now at 95% is informative, and someone who started at 50% and is now at 95% is more informative.

This isn't "some people are biased and some aren't" but "everyone is biased on lots of topics in lots of ways". When people come to conclusions that point in the direction of their biases, others should generally find that less convincing than when they come to ones that point in the opposite direction.

What I would be most excited about seeing is people who currently are skeptical that animals matter anywhere near as much as Rethink's current best guess moral weights would suggest treat this as an important disagreement and don't continue just ignoring animals in their cause prioritization. Then they'd have a reason to get into these weights that didn't trace back to already thinking animals mattered a lot. I suspect they'd come to pretty different conclusions, based on making different judgement calls on what matters in assessing worth or how to interpret ambiguous evidence about what animals do or are capable of. Then I'd like to see an adversarial collaboration.

I strongly object to the (Edit: previous) statement that my post "concludes that human extinction would be a very good thing". I do not endorse this claim and think it's a grave misconstrual of my analysis. My findings are highly uncertain, and, as Peter mentions, there are many potential reasons for believing human extinction would be bad even if my conclusions in the post were much more robust (e.g. lock-in effects, to name a particularly salient one).

Sorry! After Peter pointed that out I edited it to "concludes that human extinction would be a big welfare improvement". Does that wording address your concerns?

EDIT: changed again, to "concludes that things are bad and getting worse, which suggests human extinction would be beneficial".

EDIT: and again, to "concludes that things are bad and getting worse, which suggests efforts to reduce the risks of human extinction are misguided".

EDIT: and again, to just "concludes that things are bad and getting worse".

EDIT: and again, to "concludes that things are likely bad and getting worse".

Thanks, Jeff! This helps a lot, though ideally a summary of my conclusions would acknowledge the tentativeness/uncertainty thereof, as I aim to do in the post (perhaps, "concludes that things may be bad and getting worse").

Sorry for all the noise on this! I've now added "likely" to show that this is uncertain; does that work?

Hm, I'm not sure how I would have read this if it had been your original wording, but in context it still feels like an effort to slightly spin my claims to make them more convenient for your critique. So for now I'm just gonna reference back to my original post—the language therein (including the title) is what I currently endorse.

Hmm..., sorry! Not trying to spin your claims and I would like to have something here that you'd be happy with. Would you agree your post views "currently negative and getting more negative" as more likely than not?

(I'm not happy with "may" because it's ambiguous between indicating uncertainty vs possibility. more)

I’m still confused by the perceived need to state this in a way that’s stronger than my chosen wording. I used “may” when presenting the top line conclusions because the analysis is rough/preliminary, incomplete, and predicated on a long list of assumptions. I felt it was appropriate to express this degree of uncertainty when making my claims in the post, and I think that becomes all the more important when summarizing the conclusions in other contexts without mention of the underlying assumptions and other caveats.

I think we're talking past each other a bit. Can we first try and get on the same page about what you're claiming, and then figure out what wording is best for summarizing that?

My interpretation of the epistemic status of your post is that you did a preliminary analysis and your conclusions are tentative and uncertain, but you think global net welfare is about equally likely to be higher or lower than what you present in the post. Is that right? Or would you say the post is aiming at illustrating more of a worst case scenario, to show that this is worth substantial attention, and you think global net welfare is more likely higher than modeled?

Given the multiple pushbacks by different people, is there a reason you didn't just take Kyle's suggested text "concludes that things may be bad and getting worse"?

"May" is compatible with something like "my overall view is that things are good but I think there's a 15% chance things are bad, which is too small to ignore" while Kyle's analysis (as I read it) is stronger, more like "my current best guess is that things are bad, though there's a ton of uncertainty".

If I were you I would remove that part altogether. As Kyle has already said, his analysis might imply that human extinction is highly undesirable.

For example, if animal welfare is significantly net negative now then human extinction removes our ability to help these animals, and they may just suffer for the rest of time (assuming whatever killed us off didn’t also kill off all other sentient life).

Just because total welfare may be net negative now and may have been decreasing over time doesn’t mean that this will always be the case. Maybe we can do something about it and have a flourishing future.

Yeah, this seems like it's raising the stakes too much and distracting from the main argument; removed.

But his analysis doesn't say that? He considers two quantities in determining net welfare: human experience, and the experience of animals humans raise for food. Human extinction would bring both of these to zero.

I think maybe you're thinking his analysis includes wild animal suffering?

Fair point, but I would still disagree that his analysis implies that human extinction would be good. He discusses digital sentience and how, on our current trajectory, we may develop digital sentience with negative welfare. An implication isn't necessarily that we should go extinct, but perhaps instead that we should try to alter this trajectory so that we instead create digital sentience that flourishes.

So it's far too simple to say that his analysis "concludes that human extinction would be a very good thing". It is also inaccurate because, quite literally, he doesn't conclude that.

So I agree with your choice to remove that wording.

EDIT: Looks like Ariel beat me to this point by a few minutes.

(Not speaking on behalf of RP. I don't work there now.)

FWIW, corporate chicken welfare campaigns have looked better than GiveWell recommendations on their direct welfare impacts if you weigh chicken welfare per year up to ~5,000x less than human welfare per year. Quoting Fischer, Shriver and myself, 2022 citing others:

About Open Phil's own estimate in that 2016 piece, Holden wrote in a footnote:

My understanding is that they've continued to be very cost-effective since then. See this comment by Saulius, who estimated their impact, and this section of Grilo, 2022.

Duffy, 2023 for RP also recently found a handful of US ballot initiatives from 2008-2018 for farm animal welfare to be similarly cost-effective, making, in my view, relatively conservative assumptions.

Remember that these values are conditional on hedonism. If you think that humans can experience greater amounts of value per unit time because of some non-hedonic goods that humans have access to that non-human animals don't, then you can accept everything the RP Moral Weight report says, but still think that human lives are far more valuable relative to non-human animal lives than just plugging in the numbers from the report would suggest. Personally I find the numbers low relative to my intuitions, but not necessarily counterintuitive conditional on hedonism. Most of the reasons I can think of to value humans more than animals don't have anything to do with the (to me, somewhat weird) idea that humans have more intense pleasures and pains than other conscious animals. Hedonism is very much a minority view amongst philosophers, and "objective list" theories that are at least arguably friendly to human specialness (or maybe to human and mammal specialness) are by far the most popular: https://survey2020.philpeople.org/survey/results/5206

Nice one. If this is the case:

"Hedonism is very much a minority view amongst philosophers, and "objective list" theories that are at least arguably friendly to human specialness (or maybe to human and mammal specialness) are by far the most popular: https://survey2020.philpeople.org/survey/results/5206"

Because of this, I like Bob Fischer's commitment above to keep trying to generate moral weights that don't assume hedonism. Otherwise we only have the current RP numbers, and we will see similar graphs and arguments "conditional on hedonism" which might not reflect the range of opinions people, both EAs and non-EAs, have about whether or not we should look at the world purely hedonistically...

(Disclaimer: I take RP's moral weights at face value, and am thus inclined to defend what I consider to be their logical implications.)

Specifically with respect to cause prioritization between global health and animal welfare, do you think the evidence we've seen so far is enough to conclude that animal welfare interventions should most likely be prioritized over global health?

In "Worldview Diversification" (2016), Holden Karnofsky wrote that "If one values humans 10-100x as much [as chickens], this still implies that corporate campaigns are a far better use of funds (100-1,000x) [than AMF]." In 2023, Vasco Grilo replicated this finding by using the RP weights to find corporate campaigns 1.7k times as effective.

Let's say RP's moral weights are wrong by an order of magnitude, and chickens' experiences actually only have 3% of the moral weight of human experiences. Let's say further that some remarkably non-hedonic preference view is true, where hedonic goods/bads only account for 10% of welfare. Still, corporate campaigns would be an order of magnitude more effective than the best global health interventions.
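The arithmetic behind that claim can be sketched as follows. This is a rough illustration rather than a reproduction of Grilo's model: it assumes the 1.7k ratio scales linearly with both the chicken moral weight and the share of welfare that is hedonic, and the function and parameter names are hypothetical.

```python
# Figure cited above: Grilo (2023) found corporate campaigns about 1,700x as
# effective as the global health benchmark when using RP's weights.
BASELINE_MULTIPLIER = 1700


def adjusted_multiplier(baseline, weight_divisor, hedonic_share):
    """Apply both pessimistic adjustments to the baseline ratio.

    Assumes (for illustration only) that the ratio scales linearly.
    weight_divisor: how many times lower the chicken weight is than RP's.
    hedonic_share:  fraction of welfare attributed to hedonic goods/bads.
    """
    return baseline / weight_divisor * hedonic_share


remaining = adjusted_multiplier(
    BASELINE_MULTIPLIER, weight_divisor=10, hedonic_share=0.1
)
print(f"Remaining advantage: roughly {remaining:.0f}x")
```

Two separate 10x penalties remove two orders of magnitude from a ratio that starts above three, which is why roughly one order of magnitude is left over.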

While I agree with you that it would be premature to conclude with high confidence that global welfare is negative, I think the conclusions of RP's research with respect to cause prioritization still hold up after incorporating the arguments you've enumerated in your post.

Instead of going that direction, would you say that efforts to prevent AI from wiping out all life and neutralizing all value in the universe on earth are counterproductive?

This seems maybe true for animals vs AMF but not for animals vs Xrisk.

We're working on animals vs xrisk next!

This could depend on your population ethics and indirect considerations. I'll assume some kind of expectational utilitarianism.

The strongest case for extinction and existential risk reduction is on a (relatively) symmetric total view. On such a view, it all seems dominated by far future moral patients, especially artificial minds, in expectation. Farmed animal welfare might tell us something about whether artificial minds are likely to have net positive or net negative aggregate welfare, and moral weights for animals can inform moral weights for different artificial minds and especially those with limited agency. But it's relatively weak evidence. If you expect future welfare to be positive, then extinction risk reduction looks good and (far) better in expectation even with very low probabilities of making a difference, but could be Pascalian, especially for an individual (https://globalprioritiesinstitute.org/christian-tarsney-the-epistemic-challenge-to-longtermism/). The Pascalian concerns could also apply to other population ethics.

If you have narrow person-affecting views, then cost-effective farmed animal interventions don’t generally help animals alive now, so won't do much good. If death is also bad on such views, extinction risk reduction would be better, but not necessarily better than GiveWell recommendations. If death isn't bad, then you'd pick work to improve human welfare, which could include saving the lives of children for the benefit of the parents and other family, not the children saved.

If you have asymmetric or wide person-affecting views, then animal welfare could look better than extinction risk reduction depending on human vs nonhuman moral weights and expected current lives saved by x-risk reduction, but worse than far future quality improvements or s-risk reduction (e.g. https://onlinelibrary.wiley.com/doi/full/10.1111/phpr.12927, but maybe animal welfare work counts for those, too, and either may be Pascalian). Still, on some asymmetric or wide views, extinction risk reduction could look better than animal welfare, in case good lives offset the bad ones (https://onlinelibrary.wiley.com/doi/full/10.1111/phpr.12927). Also, maybe extinction risk reduction could look better for indirect reasons, e.g. replacing alien descendants with our happier ones, or because the work also improves the quality of the far future conditional on not going extinct.

EDIT: Or, if the people alive today aren't killed (whether through a catastrophic event or anything else, like malaria), there's a chance they'll live very very long lives through technological advancement, and so saving them could at least beat the near-term effects of animal welfare if dying earlier is worse on a given person-affecting view.

That being said, all the above variants of expectational utilitarianism are irrational, because unbounded utility functions are irrational (e.g. can be money pumped, https://onlinelibrary.wiley.com/doi/abs/10.1111/phpr.12704), so the standard x-risk argument seems based on jointly irrational premises. And x-risk reduction might not follow from stochastic dominance or expected utility maximization on all bounded increasing utility functions of total welfare (https://globalprioritiesinstitute.org/christian-tarsney-the-epistemic-challenge-to-longtermism/ and https://arxiv.org/abs/1807.10895; the argument for riskier bets here also depends on wide background value uncertainty, which would be lower with lower moral weights for nonhuman animals; stochastic dominance is equivalent to higher expected utility on all bounded increasing utility functions consistent with the (pre)order in deterministic cases).

Yes, I agree with that caveat.

Thank you for the post!

I also suspect this, and have concerns about it, but, I speculate, in a very different way than you do. More particularly, I am concerned by the "people rarely get deeply interested in the moral weight of animals" part. This is problematic because many human actions have consequences for animals (in many cases, huge consequences), and to act ethically, even for some non-consequentialists, it is essential to at least have some views about the moral weights of animals.

But the issue isn't only that most people aren't interested in investigating the "moral weights" of animals. It's also that people who don't even bother to investigate don't use an acknowledgement of uncertainty (and tools for dealing with uncertainty) to guide their actions: they assign, with complete confidence, 1 to each human and 0 to almost everyone else.

The above analysis, if I am even only roughly correct, is crucial to our thinking about which direction it would be correct to move people's views in. If most people are already assigning animals a virtual 0, where else can we go? Presumably moral weights can't go negative, so animals' moral weights only have one direction to go, unless most people were right that all animals have moral weights of virtually 0.

For the reasons above, I am extremely skeptical that this is worth worrying about. Unless it happens to be true that all animals have moral weights of virtually 0, it seems to me that "a community of people who value animals highly exerting a large influence in that direction regardless of what the underlying truth" is exactly what we should hope for, rationally and ethically speaking. (emphasis on "regardless of what the underlying truth" is mine)

P.S. A potential pushback is that a very significant number of people clearly care about some animals, such as their companion animals. But I think we also have to look at actions with larger stakes. Most people, and even more so collections of people (such as families, companies, governments, charities, and movements), judging from their actions (eating animals, driving, animal experiments, large-scale construction) and their reluctance to adjust their views regarding these actions, clearly assign a virtual 0 to the moral weights of most animals. They just choose a few species, maybe just a few individual animals, to raise to within one order of magnitude of humans in moral weight. Also, even for common companion animals such as cats and dogs, many people have been shown to assign much less moral weight to them when put in situations where they have to weigh these animals against (sometimes trivial) human interests.

Some (small-sample) data on public opinion:

These surveys suggest that the average person gives considerably less moral weight to non-human animals than the RP moral weight estimates, although still enough weight that animal welfare interventions look better than GiveWell top charities (and the two surveys differed considerably from each other, with the MTurk survey giving much higher weight to animals across the board).

The difference between the two surveys was inclusion/exclusion of people who refused to equate finitely many animals to a human.

Also, the wording of the questions in the survey isn't clear about what kinds of tradeoffs it's supposed to be about:

The median responses for chickens were ~500 and ~1000 (when you included infinities and NA as infinities). But does this mean welfare range or capacity for welfare? Like, if we had to choose between relieving an hour of moderate intensity pain in 500 chickens vs 1 human, we should be indifferent (welfare range)? Or is it that if we could save the lives of 500 chickens or 1 human, we should be indifferent (capacity for welfare)? Or something else?

If it's capacity for welfare, then this would be a pretty pro-animal view, because chickens live around 10-15 years on average under good conditions, and under conventional intensive farming conditions, 40-50 days if raised for meat and less than 2 years if raised for eggs. Well, the average person probably doesn't know how long chickens live, so maybe we shouldn't interpret it as capacity for welfare. Also, there are 20-30 chickens killed in the US per American per year, so like 2000 per American over their life.

Another issue is the difference between comparing life-years and lives, since humans are much longer living than chickens.

Ah, that's what I meant to get at by welfare range (life-years) vs capacity for welfare (lives). I assume they're comparing welfare ranges, but if they're comparing capacity for welfare/lives and summing moral value, then the median response would be more pro-animal than under the other interpretation, like 50 to 100 chickens per human in life-year or welfare range terms.
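To make that conversion concrete, here is a rough sketch of the arithmetic. It assumes an 80-year human lifespan (my assumption for illustration, not something from the surveys) and uses the 10-15 year chicken lifespan under good conditions mentioned above. The function name and constants are just illustrative.

```python
# Assumption for illustration only: the surveys say nothing about lifespans.
HUMAN_LIFESPAN_YEARS = 80

def lives_to_life_years(chickens_per_human_life, chicken_lifespan_years,
                        human_lifespan_years=HUMAN_LIFESPAN_YEARS):
    """Convert "N chicken lives are worth 1 human life" into
    "M chicken life-years are worth 1 human life-year".

    N chicken lives contain N * chicken_lifespan_years life-years, and one
    human life contains human_lifespan_years life-years, so the per-life-year
    ratio is their quotient.
    """
    return chickens_per_human_life * chicken_lifespan_years / human_lifespan_years

if __name__ == "__main__":
    # Median survey responses quoted above, read as lives rather than life-years.
    for median_response in (500, 1000):
        for chicken_lifespan in (10, 15):
            ratio = lives_to_life_years(median_response, chicken_lifespan)
            print(f"{median_response} lives, {chicken_lifespan}-year chickens: "
                  f"{ratio} chicken life-years per human life-year")
```

Under these assumptions the ~500 median comes out to roughly 60 to 95 chicken life-years per human life-year, which is in the same ballpark as the 50 to 100 figure; the ~1000 median comes out higher. Different lifespan assumptions would shift the numbers.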

Two nitpicks:

The chart actually gives estimates of how many animal life-years of a given species are morally equivalent to one human life-year. Though you do get the comparison correct in the paragraph after the table.

~

You'd have to ask Kyle Fish, but it's not necessarily the case that he endorses this conclusion about human extinction, and he certainly didn't actually say that (note that if you CTRL+F for "extinction", it is nowhere to be found in the report). I think there are lots of reasons to think that human extinction is very bad even given our hellish perpetuation of factory farming.

Thanks; edited to change those to "here's a selection from their bottom-line point estimates comparing animals to humans" and [EDIT: see above].

I don't think he concludes that either, nor do I know if he agrees with that. Maybe he implies that? Maybe he concludes that if our current trajectory is maintained / locked-in then human extinction would be a big welfare improvement? Though Kyle is also clear to emphasize the uncertainty and tentativeness of his analysis.

I think if you want to emphasize uncertainty and tentativeness it is a good idea to include something like error bars, and to highlight that one of the key assumptions involves fixing a parameter (the weight on hedonism) at the maximally unfavourable value (100%).

Edited again; see above.

It is bizarre to me that people would disagree-vote this, as it seems to be a true description of the edit you made. If people think the edit is bad they should downvote, not disagree-vote.

Eh; I interpret this upvote+disagree to mean "I think it's good that you posted that you made the edit, but the edit doesn't fix the problem."

Do people here think there is a correct answer to this question?