Summary

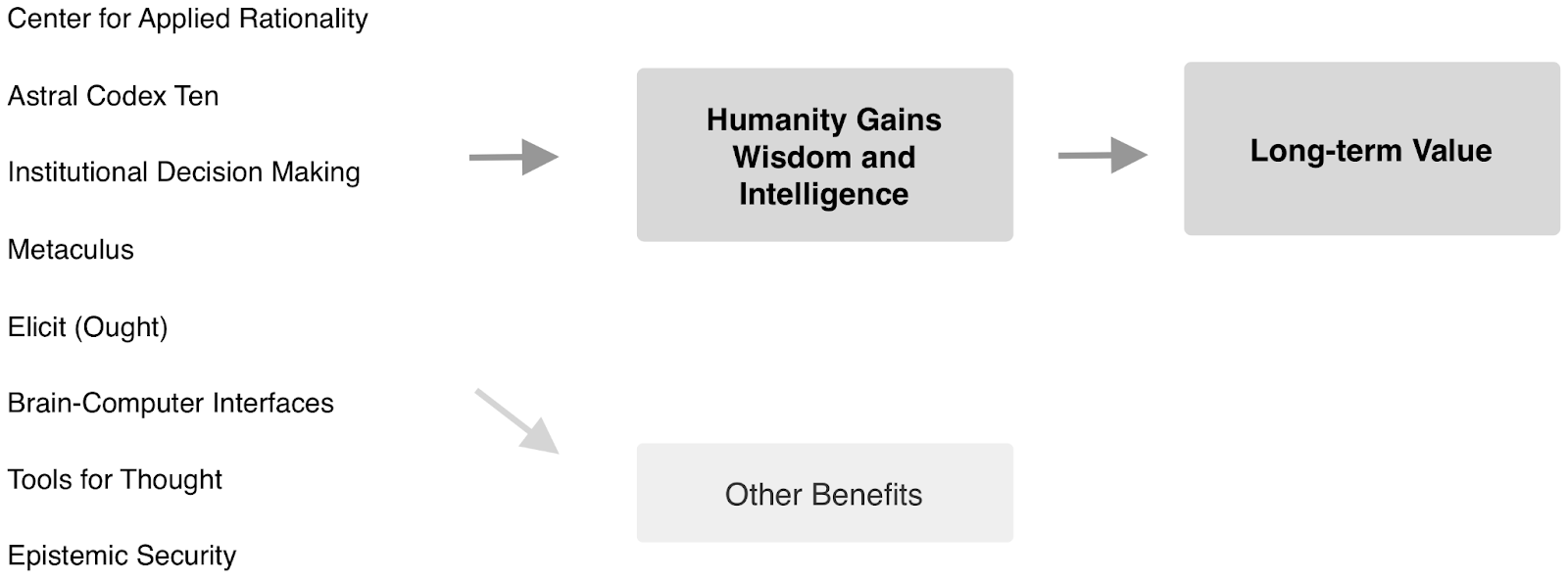

I think it makes sense for Effective Altruists to pursue prioritization research to figure out how best to improve the wisdom and intelligence[1] of humanity. I describe endeavors that would optimize for longtermism, though similar research efforts could make sense for other worldviews.

The Basic Argument

For those interested in increasing humanity’s long-term wisdom and intelligence[1], several types of wildly different interventions are options on the table. For example, we could improve at teaching rationality, or we could make progress on online education. We could make forecasting systems and data platforms. We might even consider something more radical, like brain-computer interfaces or highly advanced pre-AGI AI systems.

These interventions share many of the same benefits. If we found ways to remove people’s cognitive biases so that they made better political decisions, the impact would be similar to that of forecasting systems improving those same decisions. It seems natural to try to compare these interventions. We wouldn’t want to invest a lot of resources into one field, to realize 10 years later that we could have spent them better in another. This prioritization is pressing because Effective Altruists are currently scaling up work in several relevant areas (rationality, forecasting, institutional decision making) but mostly ignoring others (brain-computer interfaces, fundamental internet improvements).

In addition to caring about prioritization between cause areas, we should also care about estimating the importance of wisdom and intelligence work as a whole. Estimating the importance of wisdom and intelligence gains is crucial for multiple interventions, so it doesn’t make much sense to ask each intervention’s research base to tackle this question independently. I’ve previously done a lot of thinking about this while trying to estimate the value of my own work on forecasting. It felt a bit silly to have to answer this bigger question about wisdom and intelligence, since it lies far outside actual forecasting research.

I think we should consider doing serious prioritization research around wisdom and intelligence for longtermist reasons.[2] This work could both inform us of the cost-effectiveness of all of the available options as a whole, and help us compare directly between different options.

Strong prioritization research between different interventions around wisdom and intelligence might at first seem daunting. There are clearly many uncertainties and judgment calls involved. We don’t even have any good ways of measuring wisdom and intelligence at this point.

However, I think the Effective Altruist and Rationalist communities would prove up to the challenge. GiveWell’s early work drew skepticism for similar reasons. It took a long time for Quality-Adjusted Life Years to be accepted and adopted, but there’s since been a lot of innovative and educational progress. Now our communities have the experience of hundreds of research person-years of prioritization work. We have at least a dozen domain-specific prioritization projects[3]. Maybe prioritization work in wisdom and intelligence isn’t far off.

List of Potential Interventions

I brainstormed an early list of potential interventions with examples of existing work. I think all of these could be viable candidates for substantial investment.

- Human/organizational
  - Rationality-related research, marketing, and community building (CFAR, Astral Codex Ten, LessWrong, Julia Galef, Clearer Thinking)
  - Institutional decision making
  - Academic work in philosophy and cognitive science (GPI, FHI)
  - Cognitive bias research (Kahneman and Tversky)
  - Research management and research environments (for example, understanding what made Bell Labs work)

- Cultural/political
  - Freedom of speech, protections for journalists
  - Liberalism (John Locke, Voltaire, many other intellectuals)
  - Epistemic Security (CSER)
  - Epistemic Institutions

- Software/quantitative
  - Positive uses of AI for research, pre-AGI (Ought)
  - “Tools for thought” (note-taking, scientific software, collaboration)
  - Forecasting platforms (Metaculus, select Rethink Priorities research)
  - Data infrastructure & analysis (Faunalytics, IDInsight)
  - Fundamental improvements in the internet / cryptocurrency
  - Education innovations (MOOCs, YouTube, e-books)

- Hardware/medical

Key Claims

To summarize and clarify, here are a few claims that I believe. I’d appreciate insightful pushback from those who are skeptical of any of them.

- “Wisdom and intelligence” (or something very similar) is a meaningful and helpful category.

- Prioritization research can meaningfully compare different wisdom and intelligence interventions.

- Wisdom and intelligence prioritization research is likely tractable, though challenging. It’s not dramatically more difficult than global health or existential risk prioritization.

- Little of this prioritization work has been done so far, especially publicly.

- Wisdom and intelligence interventions are promising enough to justify significant work in prioritization.

Open Questions

This post is short and, of course, leaves open many questions. For example:

- Does “wisdom and intelligence” really represent a tractable idea to organize prioritization research around? What other options might be superior?

- Would wisdom and intelligence prioritization efforts face any unusual challenges or opportunities? (This would help us craft these efforts accordingly.)

- What specific research directions might wisdom and intelligence prioritization work investigate? For example, it could be vital to understand how to quantify group wisdom and intelligence.

- How might Effective Altruists prioritize this sort of research? Or, how would it rank on the ITN framework?

- How promising should we expect the best identifiable interventions in wisdom and intelligence to be? (This relates to the previous question.)

I intend to write about some of these later. But, for now, I’d like to allow others to think about them without anchoring.
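The ITN framework mentioned in the questions above is commonly stated (for example, by 80,000 Hours) as a factorization of marginal cost-effectiveness into three ratios whose intermediate units cancel. It is included here only as a reminder of what "ranking on ITN" would involve, not as an answer to that question:

```latex
$$
\frac{\text{good done}}{\text{extra dollar}}
= \underbrace{\frac{\text{good done}}{\text{\% of problem solved}}}_{\text{importance (scale)}}
\times \underbrace{\frac{\text{\% of problem solved}}{\text{\% increase in resources}}}_{\text{tractability}}
\times \underbrace{\frac{\text{\% increase in resources}}{\text{extra dollar}}}_{\text{neglectedness}}
$$
```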

Addendum: Related Work & Terminology

There’s some existing work advocating for broad interventions in wisdom and intelligence, and there’s existing work on the effectiveness of particular interventions. I’m not familiar with existing research in inter-cause prioritization (please message me if you know of such work).

Select discussion includes, or can be found by searching for:

- The term “Raising the Sanity Waterline” on LessWrong. It’s connected to the tag Epistemic Hygiene.

- LessWrong has tags for “Society-Level Intellectual Progress”, “Individual-Level Intellectual Progress”, and “Intelligence Amplification”.

- The field of Collective Intelligence asks a few of the relevant questions.

- I’ve previously used the term “Effective Epistemics”, but haven’t continued that thread since.

- Life Improvement Science is a very new field that's interested in similar areas, also for relatively longtermist reasons.

Thanks to Edo Arad, Miranda Dixon-Luinenburg, Nuño Sempere, Stefan Schubert, and Brendon Wong for comments and suggestions.

[1]: What do I mean by “wisdom and intelligence”? I expect this to be roughly intuitive to some readers, especially with the attached diagram and list of example interventions. The important cluster I’m going for is something like “the overlapping benefits that would come from the listed interventions.” I expect this to look like some combination of calibration, accuracy on key beliefs, the ability to do intellectual work efficiently and effectively, and knowledge about important things. It’s a cluster that’s arguably a subset of “optimization power” or “productivity.” I might spend more time addressing this definition in future posts, but thought such a discussion would be too dry and technical for this one. All that said, I’m really not sure about this, and hope that further research will reveal better terminology.

[2]: Longtermists would likely have a lower discount rate than others. This would allow for more investigation of long-term wisdom and intelligence interventions. I think non-longtermist prioritization in these areas could be valuable but would be highly constrained by the discount rates involved. I don’t particularly care about the question of “should we have one prioritization project that tries to separately optimize for longtermist and non-longtermist theories, or should we have separate prioritization projects?”

[3]: GiveWell, Open Philanthropy (in particular, subgroups focused on specific cause areas), Animal Charity Evaluators, Giving Green, Organization for the Prevention of Intense Suffering (OPIS), Wild Animal Initiative, and more.

Great post - I agree with a lot of what you write. I wrote about something quite similar here:

"Decreasing populism and improving democracy, evidence-based policy, and rationality"

where the general cause area of improving rationality also came out on top:

and did some ITN-type prioritization of potential funding opportunities:

Yes, that looks extremely relevant, thanks for flagging that.

Great to see more attention on this topic! I think there is an additional claim embedded in this proposal which you don't call out:

I notice that I'm intuitively skeptical about this point, even though I basically buy your other premises. It strikes me that there is likely to be much more variation in impact potential between specific projects or campaigns, e.g. at the level of a specific grant proposal, than there is between whole categories, which are hard to evaluate in part because they are quite complementary to each other and the success of one will be correlated with the success of others. You write, "We wouldn’t want to invest a lot of resources into one field, to realize 10 years later that we could have spent them better in another." But what's to say that this is the only choice we face? Why not invest across all of these areas and chase optimality by judging opportunities on a project-by-project basis rather than making big bets on one category vs. another?

Interesting, thanks so much for the thought. I think I agree that we'll likely want to fund work in several (or all) of these fields. However, I expect some fields will be dramatically more exciting than others. It seems likely enough to me that 70% of the funding might be best in one of the mentioned intervention areas above, and that several would have less than 5% of the remaining amounts.

It could well be the case that more research would prove otherwise! Maybe 70% of the intervention areas listed would be roughly equal to each other. I think it's a bit unlikely, but I'm not sure. This is arguably one good question for future researchers in the space to answer, and a reason why it could use more research.

If it were the case that the areas are roughly equal to each other, I imagine we'd then want more total prioritization research, because we couldn't overlook any of them.

One obvious research project at this point would just be to survey a bunch of longtermists and get their take on how important/tractable each intervention area is.
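As a minimal sketch of how such a survey might be tallied, assume each respondent scores every intervention area from 1 to 10 on importance, tractability, and neglectedness. The 1-10 scale, taking per-factor medians, and multiplying them into one score are all illustrative assumptions (a crude stand-in for the ITN factorization), and the area names and numbers below are hypothetical placeholders, not survey results.

```python
from statistics import median

# Assumed format: each response maps an intervention area to
# (importance, tractability, neglectedness) scores on a hypothetical 1-10 scale.
Response = dict[str, tuple[float, float, float]]


def aggregate_itn(responses: list[Response]) -> list[tuple[str, float]]:
    """Rank areas by the product of per-factor medians across respondents."""
    areas = sorted({area for r in responses for area in r})
    ranked = []
    for area in areas:
        # Only use respondents who actually scored this area.
        scored = [r[area] for r in responses if area in r]
        # Per-factor medians are robust to a few extreme respondents.
        importance = median(s[0] for s in scored)
        tractability = median(s[1] for s in scored)
        neglectedness = median(s[2] for s in scored)
        ranked.append((area, importance * tractability * neglectedness))
    # Highest combined score first.
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical placeholder responses, not real survey data.
    survey = [
        {"area A": (6, 5, 4), "area B": (5, 6, 6)},
        {"area A": (7, 4, 3), "area B": (4, 5, 7)},
        {"area A": (5, 6, 4), "area B": (6, 4, 6)},
    ]
    for area, score in aggregate_itn(survey):
        print(f"{area}: {score:g}")
```

Medians keep a single enthusiastic or dismissive respondent from dominating; a real survey would probably also want to report the spread of answers, since disagreement between respondents is itself informative.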

For those interested in the 'epistemic security' topic, the most relevant report is here; it's an area we (provisionally) plan to do more on.

https://www.repository.cam.ac.uk/handle/1810/317073

Or a brief overview by the lead author is here:

https://www.bbc.com/future/article/20210209-the-greatest-security-threat-of-the-post-truth-age

The implicit framing of this post was that if individuals just got smarter, everything would work out much better, which is true to some extent. But I'm concerned this perspective overlooks something important: it's very often clear what should be done for the common good, but society doesn't organise itself to do it because many individuals don't want to (for discussion, see the recent 80k podcast on institutional economics and corruption). So I'd like to see a bit more emphasis on collective decision-making versus just individuals getting smarter.

This tension is one reason why I called this "wisdom and intelligence", and tried to focus on that of "humanity", as opposed to just "intelligence" and, in particular, "individual intelligence".

I think that "the wisdom and intelligence of humanity" is much safer to optimize than "the intelligence of a bunch of individuals in isolation".

If it were the case that "people all know what to do, they just won't do it", then I would agree that wisdom and intelligence aren't that important. However, I think these cases are highly unusual. From what I've seen, in most cases of "big coordination problems", there are considerable amounts of confusion, deception, and stupidity.

Your initial point reminds me somewhat of Nick Bostrom's orthogonality thesis, but applied to humans. High-IQ individuals throughout history have pursued completely different goals, so we can't automatically assume that improving humanity's intelligence as a whole would guarantee a better future for everyone.

At the same time, I think we can be fairly confident that a higher IQ level across humanity would at least enable more individuals to find optimal solutions for minimizing the risk of undesirable moral outcomes from the actions of highly intelligent but morally questionable individuals, while also solving other, more relevant problems more efficiently.

My main objection is to number 5: Wisdom and intelligence interventions are promising enough to justify significant work in prioritization.

The objection is a combination of:

--Changing societal values/culture/habits is hard. Society is big and many powerful groups are already trying to change it in various ways.

--When you try, often people will interpret that as a threatening political move and push back.

--We don't have much time left.

Overall I still think this is promising, I just thought I'd say what the main crux is for me.

Some of the interventions don't have to do with changing societal values/culture/habits, though; e.g. those falling under hardware/medical.

But maybe you think they'll take time, and that we don't have enough time to work on them either.

+1 for Stefan's point.

On "don't have much time left": this is a very specific and precise question. If you think that AGI will happen in 5 years, I'd agree that advancing wisdom and intelligence probably isn't particularly useful. However, if AGI is 30-100+ years away, it becomes much more useful. Even if there's a <30% chance that AGI is 30+ years away, that's considerable.
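The timeline argument in this comment can be written as a rough expected-value decomposition. This is a sketch, not the commenter's model: $p$ is the probability that AGI is 30+ years away, $V_{\text{long}}$ and $V_{\text{short}}$ are the value of wisdom and intelligence work under long and short timelines, and treating $V_{\text{short}}$ as near zero follows the comment's own premise about 5-year timelines.

```latex
$$
\mathbb{E}[V] = p\,V_{\text{long}} + (1 - p)\,V_{\text{short}}
\approx p\,V_{\text{long}} \qquad \text{when } V_{\text{short}} \approx 0
$$
```

With $p = 0.3$, the expected value is still roughly 30% of its value under long timelines, which is why even a minority chance of long timelines can be "considerable".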

For very short time frames, "education about AI safety" seems urgent, though it only tenuously counts as "wisdom and intelligence".

It seems plausible that the observable things that make the rationalist Bay Area community look good come from the aptitude of its members and their intrinsic motivation and self-improvement efforts.

Without this pool of people, the institutions and competences of the community are not special, and it’s not even clear they are above the baseline of similar communities.

So what?:

This comment was originally longer, but it involved content from an ongoing thread on LessWrong that I think most people are aware of. To summarize the point: what seems to be going on is a lot of the base-rate negative experiences you would expect from a young, male-dominated community that is focused on intangible goals, which allows marginal, self-appointed leaders to build sources of authority.

Because the exceptional value comes from its people, but the problems come from (sadly) prosaic dynamics, I think it is wise to be concerned that perspectives/interventions aimed at creating a community will conflate the quality of rationalists themselves with the community's norms/ideas/content.

Also, mainly because I don't trust execution (or think that execution is really demanding), I think this critique applies to other interventions, most directly to lifehacking.

Less directly, I think caution is good for other interventions, e.g. "Epistemic Security", "Cognitive bias research", "Research management and research environments (for example, understanding what made Bell Labs work)".

The underlying problem isn't "woo"; it's that other people are already investigating this too, and the bar for founders seems high because of the downsides.

I'd also agree that caution is good for many of the listed interventions. To me, that seems to be even more of a case for more prioritization-style research though, which is the main thing I'm arguing for.

Honestly, I think my comment is just focused on "quality control" and preventing harm.

Based on your comments, I think it is possible that I am completely aligned with you.

I agree that the existing community (and the EA community) represent much, if not the vast majority, of the value we have now.

I'm also not particularly excited about lifehacking as a source for serious EA funding. I wrote the list to be somewhat comprehensive, and to encourage discussion (like this!), not because I think each area deserves a lot of attention.

I did think about "recruiting" as a wisdom/intelligence intervention. This seems more sensitive to the definition of "wisdom/intelligence" than other things, so I left it out here.

I'm not sure how extreme you mean to be here. Are you claiming something like:

> "All that matters is getting good people. We should only be focused on recruiting. We shouldn't fund any augmentation, like LessWrong / the EA Forum, coaching, or other sorts of tools. We also shouldn't expect further returns to things like these."

No, I am not that extreme. It's not clear anyone should care about my opinion on "Wisdom and Intelligence", but I guess this is it:

My guess is counterintuitive: existing institutions that have been shown to have good leaders should be improved in quality, using large amounts of funding if necessary.

I think I agree, though I can’t tell how much funding you have in mind.

Right now we have relatively few strong and trusted people, but lots of cash. Figuring out ways, even unusually extreme ways, of converting cash into either augmenting these people or getting more of them seems fairly straightforward to justify.

I wasn’t actually thinking that the result of prioritization would always be that EAs end up working in the field. I would expect that in many of these intervention areas, it would be more pragmatic to just fund existing organizations.

My guess is that prioritization could be more valuable for directing money than EA talent right now, because we just have so much money (in theory).

Ok, this makes a lot of sense and I did not have this framing.

Low quality/low effort comment:

For clarity, one way of doing this is how Open Phil makes grants: well-defined cause areas with good governance that hire extremely high-quality program officers with deep models/research who make high-EV investments. Weighted by dollar, relatively few of the resulting grants go to orgs “native to EA”. I don’t think you have to mimic the above; this might even be counterproductive and impractical.

The reason my mind went to a different model of funding was related to my impression/instinct/lizard brain when I saw your post. Part of the impression went like:

There’s a “very-online” feel to many of these interventions. For example, “Pre-AGI” and “Data infrastructure”.

"Pre-AGI". So, like, you mean machine learning, like Google or someone’s side hustle? This boils down to computers in general, since the median computer today uses data and can run ML trivially.

When someone suggests neglected areas, but 1) it turns out to be a buzzy field, 2) there seem to be tortured phrases, and 3) money is associated with it, I suspect that something dumb or underhanded is going on.

Like the grant maker is going to look for “pre-AGI” projects, walk past every mainstream machine learning or extant AI safety project, and then fund some curious project in the corner.

10 months later, we’ll get an EA forum post “Why I’m concerned about Giving Wisdom”.

The above story contains (several) slurs and is not really what I believed.

I think it gives some texture to what some people might think when they see very exciting/trendy fields + money, and why careful attention to founder effects and aesthetics is important.

I'm not sure this is anything new and I guess that you thought about this already.

I agree there are ways for it to go wrong. There’s clearly a lot of poorly thought-out stuff out there. Arguably, the motivations to create ML come from desires to accelerate “wisdom and intelligence”, and… I don’t really want to accelerate ML right now.

All that said, the risks of ignoring the area also seem substantial.

The clear solution is to give it a go, but to go sort of slowly, and with extra deliberation.

In fairness, AI safety and bio risk research also have severe potential harms if done poorly (and some, occasionally even when done well). Now that I think about it, bio at least seems worse in this direction than “wisdom and intelligence”; it’s possible that AI is too.

I just want to flag that I very much appreciate comments, as long as they don’t use dark arts or aggressive techniques.

Even if you aren’t an expert here, your questions can act as valuable data as to what others care about and think. Gauging the audience, so to speak.

At this point I feel like I have a very uncertain stance on what people think about this topic. Comments help here a whole lot.

It seems like some of these areas are hugely important. I think that "tools for thought" and human computer interfaces are important and will play a much bigger role in a few years than they do now.

I think there’s an issue here with one of these topics:

ROT13:

I think there are many people who will never work on this because of the associations. Also, this might not just affect this area but all projects or communities associated with this.

Do you have any thoughts about the practicalities of advancing this?

Also, I might write something critical on unrelated topics. I wanted to say this because I think it can feel obnoxious if you give a nice response but then I continue to write something further negative.

(Strongly endorse you giving further critical feedback - this is a new area, and the more the other side is steelmanned, the better the decision that can be reached about whether and how to prioritize it. That said, I don't think this criticism is particularly good, per Ozzie's response.)

(I need to summarize the ROT13 to respond.)

The issue here is with political issues around the "genetic modifications" category I listed.

I agree these are substantial issues for this category, but don't think these issues should bleed into the other areas.

Strong "wisdom and intelligence prioritization" work would hopefully inform us on the viability here. I assume the easy position, for many reasons, is just "stay totally away from genetic modifications, and focus instead on the other categories." It would probably take a very high bar and a lot of new efforts (for example, finding ways to get some of the advantages without the disadvantages) to feel secure enough to approach it.

I'm fairly confident that the majority of the other intervention areas I listed would be dramatically less controversial.

Since I've been interested in these topics for years (and almost started a PhD on this at Leiden University), I am considering writing something in the same cluster as this post but slightly different, e.g. "The case for cognitive enhancement as a priority cause", a reading list, or something like that.

But first, I want to briefly tell you my story. I think it could be valuable for this conversation as a kind of Minimum Viable Product for what you said here:

"...For example, we could improve at teaching rationality, or we could make progress on online education..."

Since July 2020 I have been running an educational project on Instagram (named @school_of_thinking) with the intention of teaching rationality to the general public (at the moment I have 12,500 followers). Not only rationality, actually, but also critical thinking, decision theory, strategic thinking, epistemology, and effective communication. I've been a passive member of the rationalist community for several years, and I decided to start this project in part because in Italy (the project is run entirely in Italian) we lack the critical thinking culture that is present in Anglo-Saxon countries. For example, I haven't found any updated, serious textbook on critical thinking written in Italian on amazon.it. The project is entirely based on EA values.

I've had constant organic growth, a high and stable engagement rate (between 8% and 15%), and a decent amount of positive, unbiased, and detailed feedback. It is all based on some informal pedagogical considerations I have in mind about how to teach things in general. My plan now is to expand the project to other platforms, create courses and books, and start a rationalist podcast.

There is too much to say about my project to fit here, but if anyone wants to ask me questions, I am completely open to it. I also think I will write an entire post about it.

Sounds like very interesting work! As a Frenchman, it's encouraging to see this uptake in another "Latin" European country. I think this analytic/critical thinking culture is also underdeveloped in France. I'm curious: in your project, do you make connections to the long tradition of (mostly Continental?) philosophical work in Italy? Have you encountered any resistance to the typically Anglo-Saxon "vibe" of these ideas? In France, it's not uncommon to dismiss some intellectual/political ideas (e.g., intersectionality) as "imported from America" and therefore irrelevant.

Hi Baptiste! Not even a single connection to the Continental tradition could be found in School of Thinking :D.

Actually, I have always been pretty clear in defining my approach as strongly analytical/Anglo-Saxon. Until now, fortunately, I haven't received any particular resistance to my approach, mainly positive feedback, probably because most of my followers are into STEM or already into analytical philosophy.

Yes yes, more strength to this where it's tractable and possible backfires are well understood and mitigated/avoided!

One adjacent category which I think is helpful to consider explicitly (I think you have it implicitly here) is 'well-informedness', which I'd argue is distinct from 'intelligence' or 'wisdom'. One could be quite wise and intelligent but crippled or even misdirected if the information available/salient is limited or biased. Perhaps this is countered by an understanding of one's own intellectual and cognitive biases, leading to appropriate ('wise') choices of information-gathering behaviour to act against possible bias? But perhaps there are other levers to push which act on this category effectively.

To the extent that you think long-run trajectories will be influenced by few specific decision-making entities, it could be extremely valuable to identify, and improve the epistemics and general wisdom (and benevolence) of those entities. To the extent that you think long-run trajectories will be influenced by the interactions of many cooperating and competing decision-making entities, it could be more important to improve mechanisms for coordination, especially coordination against activities which destroy value. Well-informedness may be particularly relevant in the latter case.

That’s an interesting take.

When I was thinking about “wisdom”, I was assuming it would include the useful parts of “well-informedness”, or maybe, “knowledge”. I considered using other terms, like “wisdom and intelligence and knowledge”, but that got to be a bit much.

I agree it’s still worth flagging that narrower notions like “well-informedness” are useful.

The core idea sounds very interesting: increasing rationality likely has effects that generalize, so having a measure of it could help evaluate wider social outreach causes.

Defining intelligence could be an AI-complete problem, but I think the problem is complicated enough even as a simple factor analysis (i.e. even without knowing what we're talking about :). I think estimating impact once we know the increase in some measure of rationality is the easier part of the problem: for example, once we know how much promoting long-termist thinking increases support for AI regulation, we're only a few steps away from a QALY estimate. The harder part for people starting out in social outreach might be estimating how many people they can get to think more long-termistically with their specific intervention.

So I think it might be very useful to compile a list of all attempts to calculate the impact of various social outreach strategies, so that anyone considering a new one can find some reference points. This matters because the hardest estimates here also seem to be the most important (e.g. the probability that Robert Wright would decrease oversuspicion between powers). My intuition is that differences in attitudes are something intuition can predict quite well, so the wisdom of the crowd could work well here.
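One standard way to pool many people's probability estimates, offered here as an assumption rather than something the commenter specified, is to average them in log-odds space (equivalently, take the geometric mean of the odds) and convert back to a probability. A minimal sketch, with a hypothetical function name and hypothetical estimates:

```python
import math


def pool_geometric_mean_odds(probabilities: list[float]) -> float:
    """Pool probability estimates via the geometric mean of their odds."""
    if not probabilities:
        raise ValueError("need at least one estimate")
    if any(not 0.0 < p < 1.0 for p in probabilities):
        raise ValueError("probabilities must be strictly between 0 and 1")
    # Averaging log-odds is equivalent to taking the geometric mean of the odds.
    mean_log_odds = sum(math.log(p / (1.0 - p)) for p in probabilities) / len(probabilities)
    # Convert the pooled log-odds back to a probability (logistic function).
    return 1.0 / (1.0 + math.exp(-mean_log_odds))


if __name__ == "__main__":
    # Hypothetical estimates from five people; not real data.
    estimates = [0.1, 0.2, 0.25, 0.3, 0.5]
    print(round(pool_geometric_mean_odds(estimates), 3))
```

Compared with a plain average of the probabilities, this pooling gives more weight to confident estimates near 0 or 1; whether that is desirable for questions like these is itself a judgment call.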

The best recent source I found when searching for attempts to put changing society into numbers is this article by The Sentience Institute.

Also, this post adds some evidence based intervention suggestions to your list.

Relevant:

I just came across this LessWrong post about a digital tutor that seemed really impressive. It would count there.

https://www.lesswrong.com/posts/vbWBJGWyWyKyoxLBe/darpa-digital-tutor-four-months-to-total-technical-expertise

Cool article. This is exactly the type of stuff we need, but you're only scratching the surface. I /really/ recommend you look into the work of John Vervaeke. He's a cognitive scientist who is all about wisdom cultivation (among many other things). He talks about wisdom as a type of meta-rationality in which you know when to apply rationality and avoid being caught in the biases of over-rationalizing everything. He discusses how rationality, emotions, and intuition each have their strengths and shortcomings, and how to make them work together so you can become the best possible agent.

You can, for example, start here:

https://youtu.be/Sbun1I9vxjw

https://youtu.be/c6Fr8v2cAIw

This will be too esoteric for many, but it could be argued that Daniel M. Ingram’s Emergent Phenomenology Research Consortium is trying to operationalise wisdom research. https://theeprc.org/

Thanks for the link, I wasn't familiar with them.

For one, I'm happy for people to have a very low bar to post links to things that might or might not be relevant.