Executive summary

One of the biggest challenges of being in a community that really cares about counterfactuals is knowing where the most important gaps are and which areas are already effectively covered. This can be even more complex with meta organizations and funders that often have broad scopes that change over time. However, I think it is really important for every meta organization to clearly establish what they cover and thus where these gaps are; there is a substantial negative flowthrough effect when a community thinks an area is covered when it is not.

Why this matters

The topic of having a transparent scope recently came up at a conference as one of the top concerns with many EA meta orgs. Some negative effects that have been felt by the community are in large part due to unclear scopes, including:

- Organizations leaving a space thinking it's covered when it's not.

- Funders reducing funding in an area due to an assumption that someone else is covering it when there are still major gaps.

- Two organizations working on the same thing without knowledge of each other, due to both having a broad mandate, but simultaneously putting resources into an overlapping subcomponent of this mandate.

- Talent being turned off or feeling misled by EA when they think an org misportrays itself.

- Talent ‘dropping out of the funnel’ when they go to what they believe is the primary organization covering an area and finding that what they care about isn’t covered, due to the organization claiming too broad a mandate.

- There can also be a significant amount of general frustration when people think an organization will cover, or is covering, an area and then the organization fails to deliver (often on something it did not even plan on doing).

What do I mean when I say that organizations should have a transparent scope:

Broadly, I mean organizations being publicly clear and specific about what they are planning to cover both in terms of action and cause area.

- In a relevant timeframe: I think this is most important in the short term (e.g., there is a ton of value in an organization saying what they are going to cover over the next 12 months, and what they have covered over the last months).

- For the most important questions: This clarity needs to be in both priorities (e.g., cause prioritization) and planned actions (e.g., working with student chapters). This can include things the organization might like to do, or thinks would be impactful, but is not doing due to capacity constraints or its current strategic direction.

- For the areas most likely for people to confuse: It is particularly important to provide clarity about things that people think one might be doing (for example, Charity Entrepreneurship probably doesn’t need to clarify that it doesn’t sell flowers, but should really be transparent over whether it plans to incubate projects in a certain cause area or not).

How to do this

When I have talked to organizations about this, I sometimes think that the “perfect” becomes the enemy of the good and they do not want to share a scope that is not set in stone. All prioritizations can change, and it can sometimes even be hard internally to have a sense of where the majority of your resources are going. However, given the importance of counterfactuals and the number of aspects that can help proxy these factors, I think a pretty solid template can be created. Given that CE is also often asked this question I made a quick template below that I think gives a lot of transparency if answered clearly and can give people a pretty clear sense of an organization's focus. It's worth noting that what I am suggesting is more about clarity rather than justification. While an org can choose not to provide the reasoning for what it’s doing, being clear on what they are doing is a great, quick first step.

What is CE’s planned scope over the next 12 months (2023-2024)

Our top three goals this year

- To incubate ~10 charities with 75% of them growing to be field leaders (e.g. GW supported or equivalent in other cause areas)

- To run our new foundation program multiple times

- To explore other impactful career paths we could run training programs in

Actions (More info in our annual report)

| 3 things EAs might be surprised that we spend considerable time doing | 3 things EAs might be surprised that we do not spend considerable time doing |
| --- | --- |
| Our recent foundation program launch (~2 FTE) | Helping/researching social enterprise projects (~0 FTE) |
| Helping CE charity alumni longer term (~1 FTE) | Training existing NGOs outside the CE network (~0 FTE) |

Budget (more info in our budget)

| We put more money into X than people would expect | We put less money into X than people would expect |
| --- | --- |
| ~7.5% CE Incubation program stipends to cover costs of living (~£112k) | ~6% Office costs (3200 sq in London) (~£90k) |
| ~10% Contingency (~£150k) | ~58% Staff cost (for ~16 FTE) (~£864k) |

In practice cause area prioritization (more writing on our cause priority)

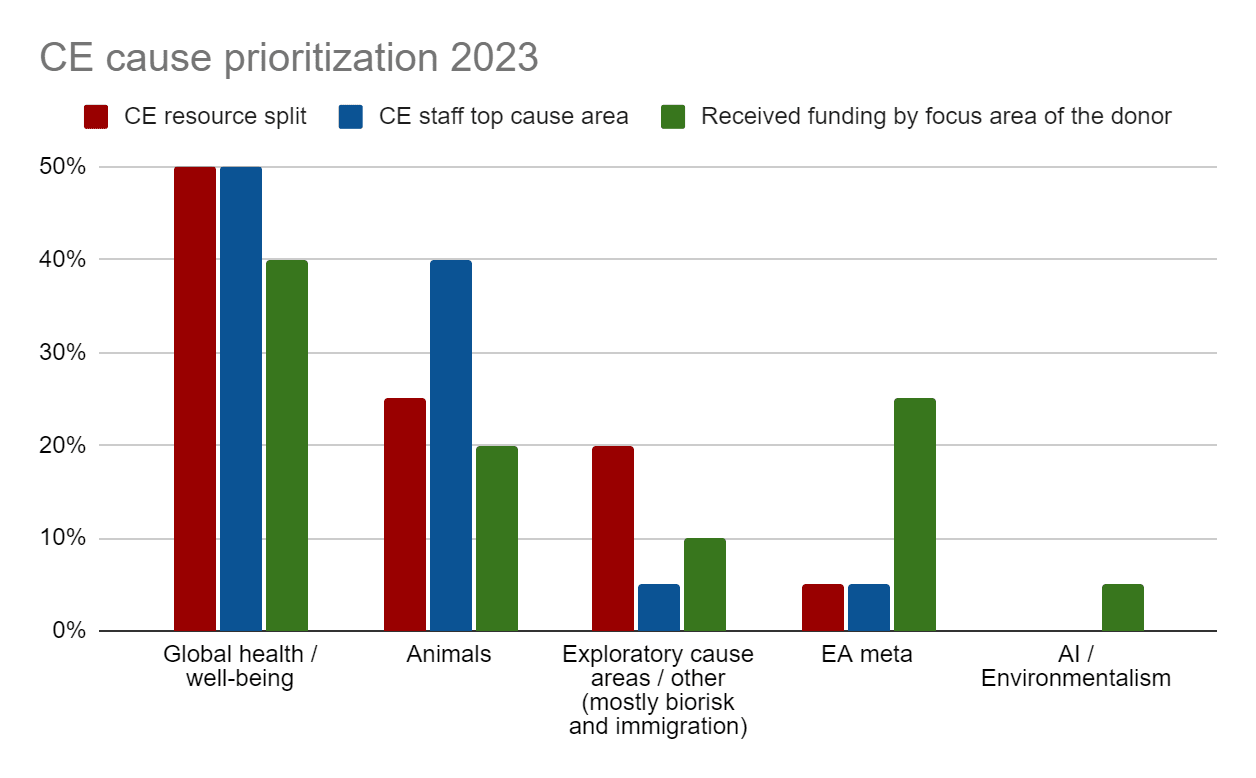

Notes on chart: All numbers are rough approximations. Global health / well-being includes family planning and mental health, animals is mostly farmed animals. We expect the exploration cause areas to change more often than the others year to year. Received funding is typically not earmarked or restricted to a specific purpose or cause area but it still creates an implicit expectation/pressure.

I mostly agree, but would add that it seems totally okay if two orgs sometimes work on the same thing! It's easy to over-index on the simple existence of an item within scope and say "oh that's covered" and move on, without actually asking "is this need really being met in the world?" Competition is good in general, and I wouldn't want to overly discourage it.

Thanks for posting this!

Some of what you're saying reminds me of "80k would be happy to see more projects in the careers space" and "80,000 Hours wants to see more people trying out recruiting" — and another potentially relevant post is Healthy Competition.

Less directly related: I've recently been feeling confused about what my attitude to transparency (in general) should be. I really appreciate transparency and viscerally feel its (potential) benefits, but am also (probably less viscerally) aware of its costs. One example of my confusion: I've been struggling to decide how much I should prioritize transparency-oriented projects or tasks I could be working on, even when the only cost for me is time that I could be spending on something else (i.e. when I don't have to worry about other potential risks because they're not as relevant in that case). (I wrote a bit on this here.)

Indeed, I think those point in the right direction, and this post by 80k stands out as one of the clearest examples of things I would like to see more of. For example, you can gather from this 80k post that ~20% of effort goes to all areas outside of Xrisk/EA meta and I think this would be quite surprising for many people in the EA community to know. However, I still think this information is not well-known or internalised by the broader community.

Wow only 20%!

That really surprises me at least, actually makes me a little nervous about directing public health bent people there like I do at the moment - I should look into it more...

https://probablygood.org/ might be a potential alternative, together with Successif (You could send all 3 and see what they find most useful)

Thanks, will check them out

I think their main research page on this is a good place to start - it gives an explicit ranking of their latest thinking on which problems are most pressing: https://80000hours.org/problem-profiles/ (last updated May 24, 2023).

100% agree - EA organisations should say EXACTLY what they are doing, why they are doing it and how they are doing it and make active efforts to publicise this. In my limited experience, EA charities themselves (with the exception perhaps of AI orgs) are pretty good at doing this, especially compared with other charities.

In general as well, I think that Orgs should do ONE thing only (wrote a blog about it a couple of years ago) with the vast majority of their time, and maybe do a few other smaller, relevant things on the side. Their scope should NOT be large. For example CE incubate and fund new effective orgs as their one thing, with side projects of a foundation program and helping support CE founders

In terms of clear scope transparency which is communicated well, below I have put my low-confidence personal opinion as to which sectors within EA are better and worse at transparency. There might be good reasons for this - it's far harder for AI orgs to communicate clearly what their scope is compared to us basic global health normies ;). In saying that though, public health orgs and animal advocacy orgs often post and communicate their scope on the forum, but how often do you get one of these hugely funded AI orgs writing a short and nice post telling us what they are up to?

For EA leadership orgs though I think it's easier to do better, so it's clear to us all why buying a castle is a great idea and within their scope (cheap shot :p). The CEA front page doesn't even make it clear what exactly they do and what their scope is, although their What is CEA page is quite good if perhaps a little broad.

Global Health orgs > Animal rights orgs > Community building orgs / EA leadership > AI orgs

(Low confidence, keen to hear other thoughts)

I would go further than you, in that I don't agree with hedging for future flexibility at all. Better to do something clearly defined now, go hard and do it well, then if you pivot at some stage, change your scope clearly and very publicly when it becomes necessary.

Nice post, love it!

On CEA (where I'm Chief of Staff) and its website specifically:

On CEA, I think a chart like the one I outlined in the post would be super useful, as I pretty constantly hear very different perspectives on what CEA does or does not do. I understand this might change with, e.g., a new ED, but even understanding 'what CEA was like in 2022' I think could provide a lot of value.

Love it man, thanks so much for the reply, appreciate it a lot! Never considered that as an issue for the website so that makes a lot of sense. Still think having something like this at the top of your front page wouldn't hurt

"The Centre for Effective Altruism (CEA) builds and nurtures a global community who think carefully about the world’s biggest problems and takes high impact action to solve them"

Perhaps I took too much artistic license ;).

Your ordering of who has struggled with this in the past matches my sense, although I would add that I think it's particularly important for community building, meta, and EA leadership organizations. These are both the least naturally definitionally clear in what they do and have the most engagement with a counterfactually sensitive community.

I really agree with the thesis that EA orgs should make it clear what they are and aren't doing.

But I think specifically for EA orgs that try to be public facing (e.g., an org focused on high net worth donors like Longview) there's a way that very publicly clarifying a transparent scope can cut against their branding/ how they sell themselves. These orgs need to sell themselves at some level to be as effective as possible (it's what their competition is doing), and selling yourself and being radically transparent do seem like they trade off at times (e.g., how much you prioritise certain sections of your website).

Thankfully, we have a lovely EA forum that can probably solve this issue :)

Thanks Mike - I understand the argument and I'm sure others will agree with you, but I'm not sure I agree that selling yourself vs. being radically transparent is necessarily a good tradeoff to make. Part of what makes EA stuff so great, and what I think should be a point of difference, is clear communication and transparency, even if it might appear to be an undersell or even slightly jarring to people not familiar with EA.

And yes I completely agree: even if orgs do choose to be a bit fluffy, vague and shiny on their website, at least they can transparently lay out their scope right here :D.

I suspect one of if not the main targets here is CEA[1], so I wanted to say that fwiw I think CEA's level of transparency about their scope is about right.

I decided to look for their scope on their website and it was in the first place I looked, where they say (last updated: January 2023):

I think beyond this level of transparency, CEA is probably hitting rapidly diminishing returns or taking attention away from more important topics. No matter how transparent any well-known entity is about anything, there will always be a sizeable proportion of people who don't know about it and don't look for it and I don't think CEA should feel responsible for that.

[1] The post is tagged "Criticism of effective altruist organizations", you focus on "meta organizations", CEA is a core meta organization, it's been brought up a couple of times in the comments, etc.

"I think beyond this level of transparency, CEA is probably hitting rapidly diminishing returns or taking attention away from more important topics." I would be surprised if this was the case as some pretty basic stuff is missing, e.g. I could not find a recent public budget for CEA.

In two minutes I found income/expenditure for the US and UK entities in 2021 and I think they've been a bit busy recently with other priorities.

Edit: Also I thought we were discussing scope.

2021 is outdated when discussing budgets and projects we are not currently working on blog posts. With regards to the budget, I believe this is the legal minimum that has to be made public, though much of the data is combined within an EVF, making it harder to pull out specific details. I think the budget is inherently tied to the scope, as it's challenging to truly understand where an organisation is allocating its resources without this kind of basic information. For instance, if an organisation spends a large percentage of its resources on a certain area, any cause preference in that area would have a much greater impact on the overall scope of the organisation.

Circling back to say we recently published CEA's 2023 budget.

Note that we project spending to be substantially under budget.

I don't think those points support your conclusion at all. There are few/no details on specific outcomes they want to achieve over the next 1-2 years, and no clarification of how resources are spent. I think that page provides less detail on scope for CEA than this forum post does for CE, and certainly less than the linked report.

To address your specific quotes.

This is an aim/desire, not what they are actually doing.

Similar. It is something they aim not to do (with arguable effectiveness) and then a call for other people to do things.

This is a good high-level summary of their scope. It would be a great intro to something describing their intended scope.

This is over two years old.

I quoted these parts because I've seen a lot of people accuse them of wanting control of the EA community or having overarching responsibility for it (ah, including yourself incidentally: "If CEA do not want to be driven by the community, I think they should consider whether they should present themselves as representatives of the community") and it's relevant to scope.

What more do you need to know to avoid the bulk of the problems Joey refers to in this post?

Obviously it does. If you're going to criticize other organizations for something, you better make sure to improve your own org on that metric at the same time. But that seems like a cheap shot to me and the relevant standard is not "Are other meta orgs providing as much transparency as the most transparent meta org." It's a question of trade-offs in time and attention (which people on this forum seem to consider only maybe 2% of the time when criticizing someone else for not doing X, despite trade-offs nearly always being a necessary part of the argument). And I do honestly feel like it will never be enough for people who've already decided they don't like CEA.