Rethink Priorities has been conducting a range of surveys and experiments aimed at understanding how people respond to different framings of Effective Altruism (EA), Longtermism, and related specific cause areas.

There has been much debate about whether people involved in EA and Longtermism should frame their efforts and outreach in terms of Effective altruism, Longtermism, Existential risk, Existential security, Global priorities research, or by only mentioning specific risks, such as AI safety and Pandemic prevention (examples can be found at the following links: 1,2,3,4,5,6,7,8). These discussions have taken place almost entirely in the absence of empirical data, even though they concern largely empirical questions.[1]

In this post we report the results of three pilot studies examining responses to different EA-related terms and descriptions. Some initial findings are:

- Longtermism appears to be consistently less popular than other EA-related terms and concepts we examined, whether presented just as a term, just as a conceptual description, or both together, across our studies.

- Global catastrophic risk reduction appears to be relatively well-liked, whether presented as a term, a description, or both.

- Effective giving and High impact giving were well-liked as terms, but were relatively less popular than other items when terms were accompanied by descriptions, or when descriptions were shown alone.

While we think these initial results are interesting, we would caution against updating too strongly on these findings until we have replicated and extended them in studies with a larger set of different framings. One of the key motivations for sharing these initial results is to encourage people to suggest different ideas and framings which they would like to see tested.

Study 1. Cause area framing

In the first of these studies, we aimed to assess how much people liked different cause areas framed in more ‘moderate’ or ‘controversial’ ways (operationalized as liking a movement that promoted giving resources to each cause). For this initial study, we simply used two different framings for each cause area, designed to be more or less popular. In future work we aim to systematically develop and test a larger number of potential framings. In addition, we only used very short descriptions of each cause area. Results could potentially differ substantially when providing more detail about each cause area.

The exact causes and framings are presented below. Only the descriptions of the cause areas were provided, without the names:

| Cause area | Moderate | Controversial |
| --- | --- | --- |
| AI risk | Ensuring that advanced artificial intelligence is safe, through research and regulation. | Working to prevent possible threats from advanced artificial intelligence, which could threaten the extinction of humanity. |
| Climate change | Addressing the impacts of global warming and promoting sustainable practices, such as a reduction in fossil fuels. | Addressing the impact of global warming, such as through researching and promoting new technologies to capture carbon emissions or geo-engineering. |
| Digital sentience | Research and regulation to reduce the risk of harm to possible advanced artificial intelligences. | Working to promote the wellbeing of potential future digital minds, such as advanced artificial intelligences, which may be conscious. |
| Farmed animal welfare | Reducing the suffering of animals in factory farms and promoting alternatives. | Aiming to prevent the suffering of animals produced for food, by ending factory farming and campaigning against the consumption of animal products. |
| Global health and development | Improving healthcare access and economic conditions for the world's poorest populations. | Helping people in the poorest countries in the world through focusing on the most highly evidence-based interventions, such as malaria nets and direct cash transfers. |
| Invertebrate welfare | Work to understand and improve the welfare of farmed invertebrate animals, including shrimp and bees. | Promoting the welfare of invertebrates, such as insects, through changes to agriculture, pest control and other interventions. |
| Pandemic preparedness | Strengthening global health systems to detect, respond to, and reduce the impact of potential pandemic outbreaks. | Working to reduce the risks of disastrous future pandemics, including from manmade illnesses, which could threaten the destruction of humanity. |
| Wild animal welfare | Helping support the welfare of wild animals that suffer from issues such as disease or starvation in the wild. | Working to reduce the suffering that wild animals experience in nature, including through researching novel interventions such as genetic engineering. |

The survey was fielded online to approximately 1400 US adults. For each cause area, it was randomly determined whether each respondent would see the moderate or the controversial framing, such that overall each description was seen by approximately 700 people, and each person saw a mix of moderate and controversial framings across the cause areas. Responses were weighted according to Age, Census Region, Education, Race/ethnicity, Sex, Urban vs. Rural vs. Suburban, Income, and political party identification, so as to be representative of the US adult population. Sampling was also done using quotas for some demographic variables to reduce the need for such rebalancing.
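The post does not say which weighting procedure was used. One common way to make a sample match several population margins at once (age, region, education and so on) is raking, also called iterative proportional fitting. The sketch below assumes raking; the function names, the two variables and the 50/50 targets are hypothetical and for illustration only.

```python
from collections import defaultdict


def rake(respondents, targets, n_iter=100, tol=1e-10):
    """Raking (iterative proportional fitting) of survey weights.

    respondents: list of dicts mapping variable name -> category.
    targets: dict mapping variable name -> {category: population share}.
    Every category seen in the sample must appear in the targets.
    Returns one weight per respondent, rescaled to average 1.
    """
    weights = [1.0] * len(respondents)
    for _ in range(n_iter):
        largest_adjustment = 0.0
        for var, shares in targets.items():
            total = sum(weights)
            # Current weighted total in each category of this variable.
            current = defaultdict(float)
            for w, r in zip(weights, respondents):
                current[r[var]] += w
            # Rescale so each category's weighted share equals its target share.
            for i, r in enumerate(respondents):
                factor = shares[r[var]] * total / current[r[var]]
                largest_adjustment = max(largest_adjustment, abs(factor - 1.0))
                weights[i] *= factor
        if largest_adjustment < tol:  # all margins already matched
            break
    mean_w = sum(weights) / len(weights)
    return [w / mean_w for w in weights]


def weighted_share(respondents, weights, var, category):
    """Weighted proportion of respondents whose `var` equals `category`."""
    hit = sum(w for w, r in zip(weights, respondents) if r[var] == category)
    return hit / sum(weights)


# Hypothetical sample that over-represents men and the North.
sample = [
    {"sex": "F", "region": "North"},
    {"sex": "F", "region": "South"},
    {"sex": "M", "region": "North"},
    {"sex": "M", "region": "North"},
    {"sex": "M", "region": "South"},
]
targets = {"sex": {"F": 0.5, "M": 0.5}, "region": {"North": 0.5, "South": 0.5}}
w = rake(sample, targets)
print([round(x, 3) for x in w])
print(weighted_share(sample, w, "sex", "F"), weighted_share(sample, w, "region", "North"))
```

Raking only matches each variable's marginal distribution, not their joint distribution, which is one reason quota sampling (as described above) helps: it reduces how far the weights have to move. In practice, weights are often also trimmed to limit extreme values; this sketch omits that step.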

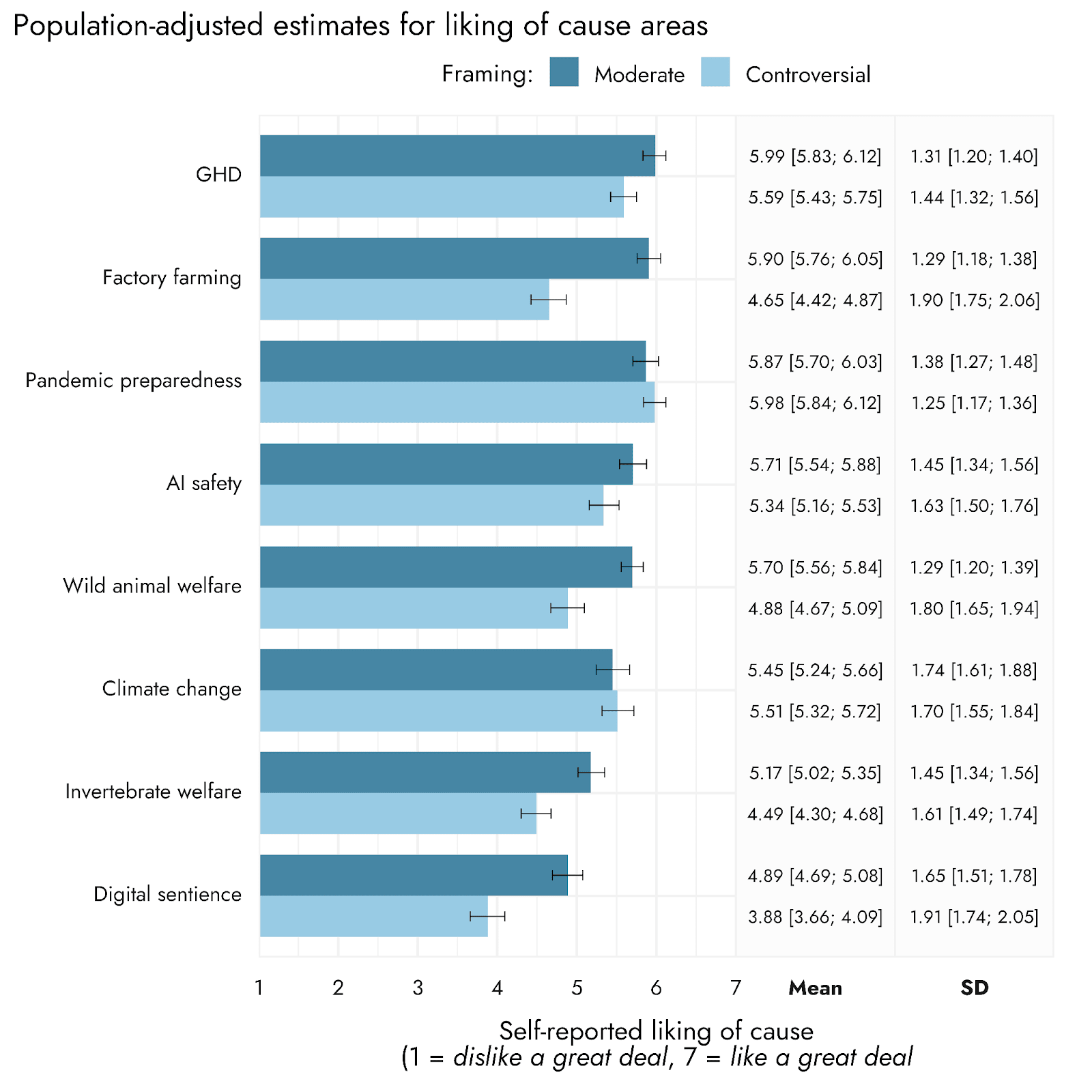

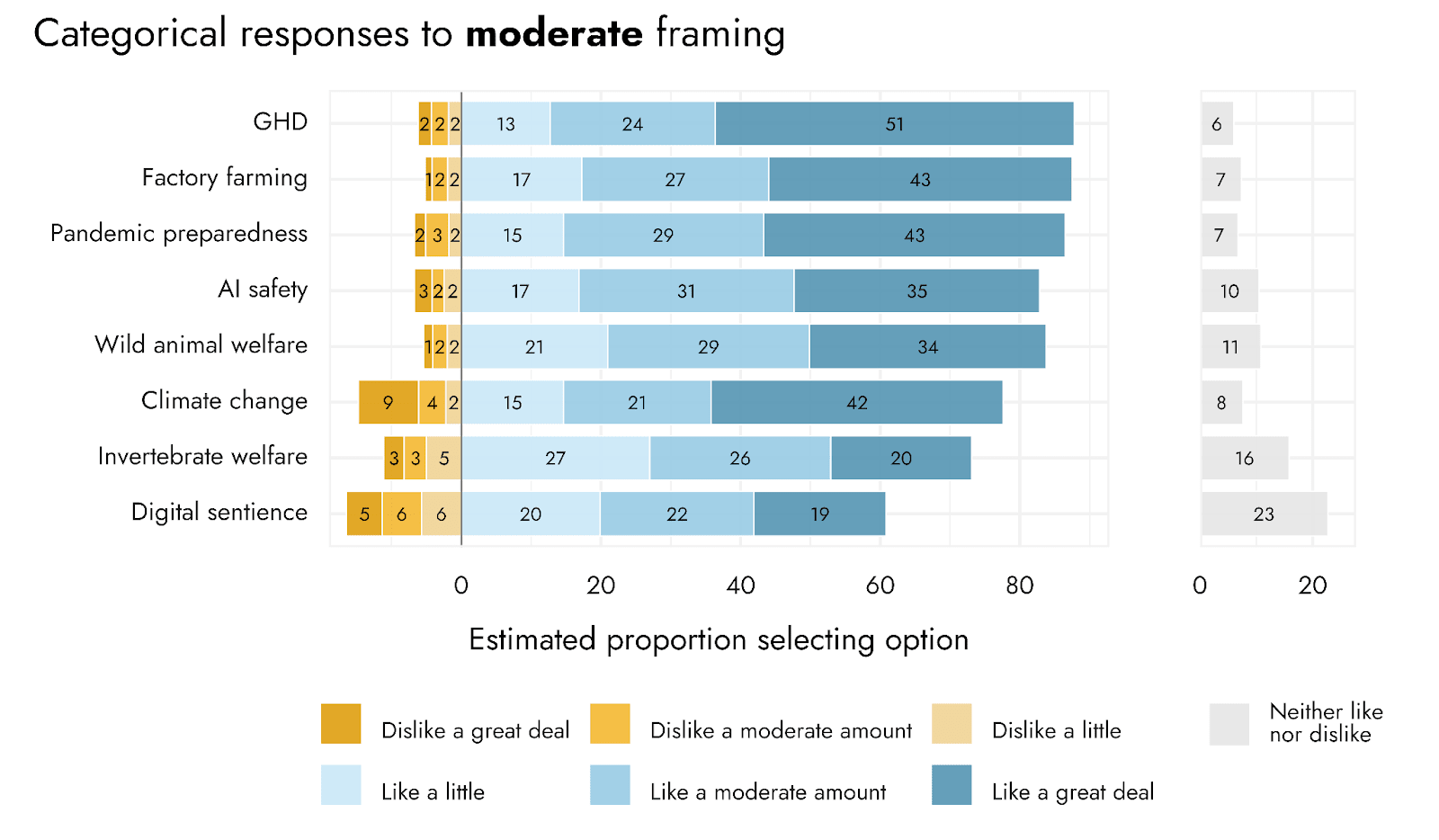

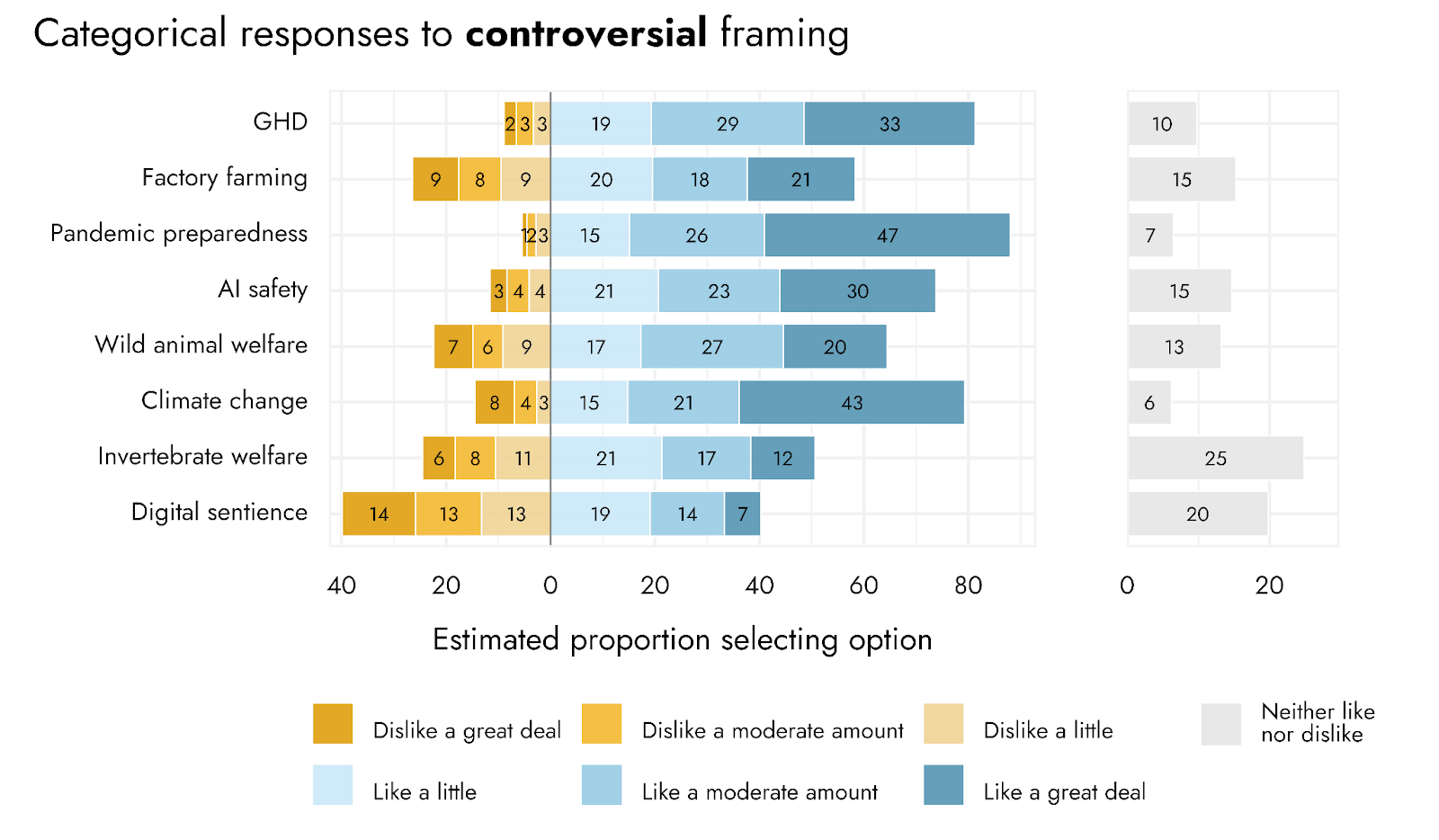

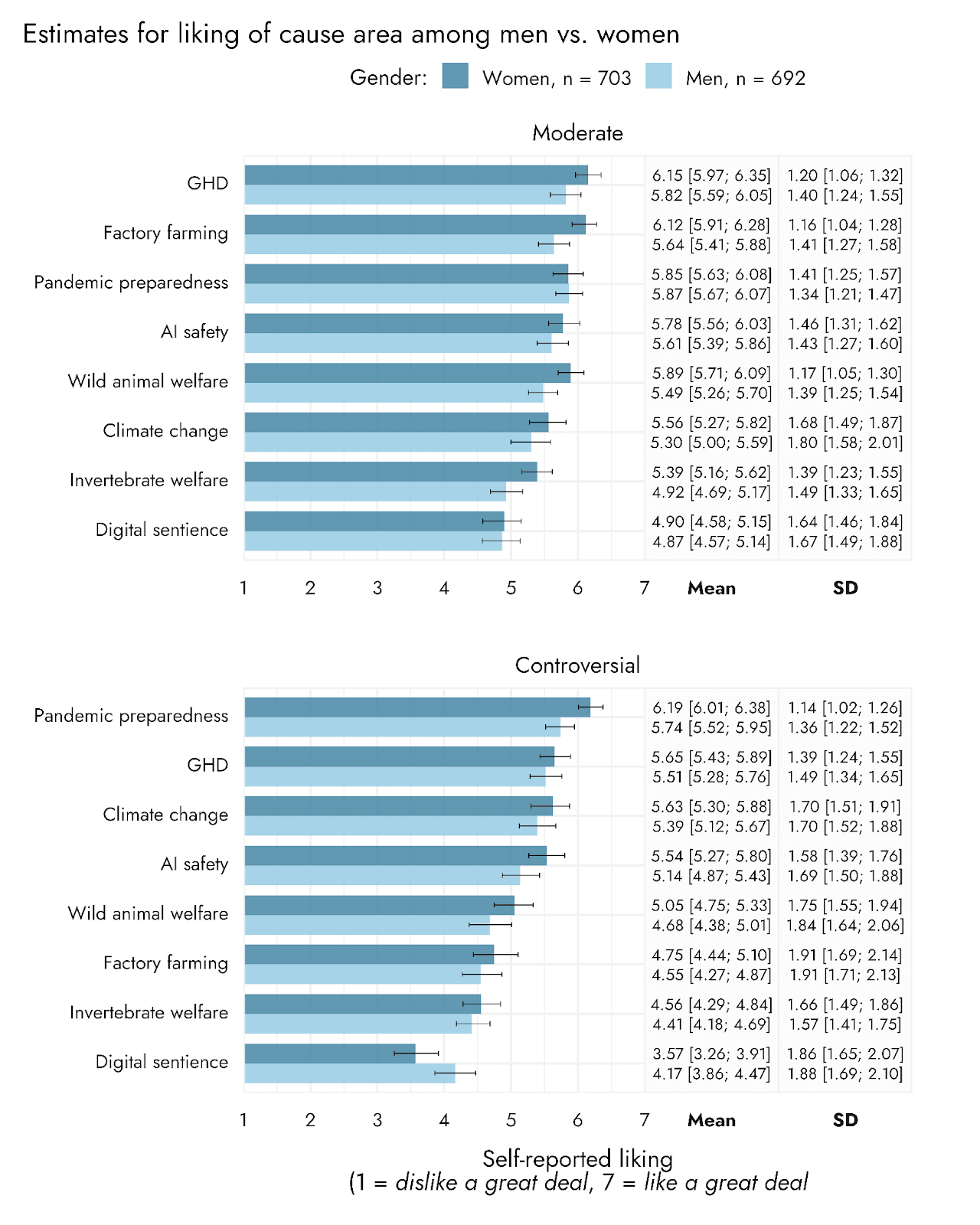

The figures below show the reported liking of each cause area, depending on how it was framed, followed by the distribution of responses for the moderate and controversial framings.

As anticipated, liking for different causes varied across different framings, with more controversial framings generally less well-liked. However, the extent of differences between the framings we tested varied substantially across causes. For Digital sentience and Farmed animal welfare, we observed large differences based on the framing employed. In contrast, Climate change and Pandemic preparedness appeared to be equally positively viewed in moderate and controversial framings.[2]

In some cases, the effect of framing made a substantial difference to the relative performance of different causes. For example, the controversially framed versions of Farmed animal welfare and Wild animal welfare were among the least-liked causes, while their more moderate framings were quite well-liked.

Looking at overall ratings of the cause areas, two of the more nascent EA cause areas - Invertebrate welfare and particularly Digital sentience - polled quite poorly relative to other causes in either a moderate or controversial framing. However, this may reflect the unfamiliarity of both causes - indeed, both causes received the greatest share of neutral responses, indicating relatively uncommitted stances. If presented with lengthier descriptions, which explained and made the case for these causes, it is possible they would be perceived more positively, and this should be explored in future work.

Demographics

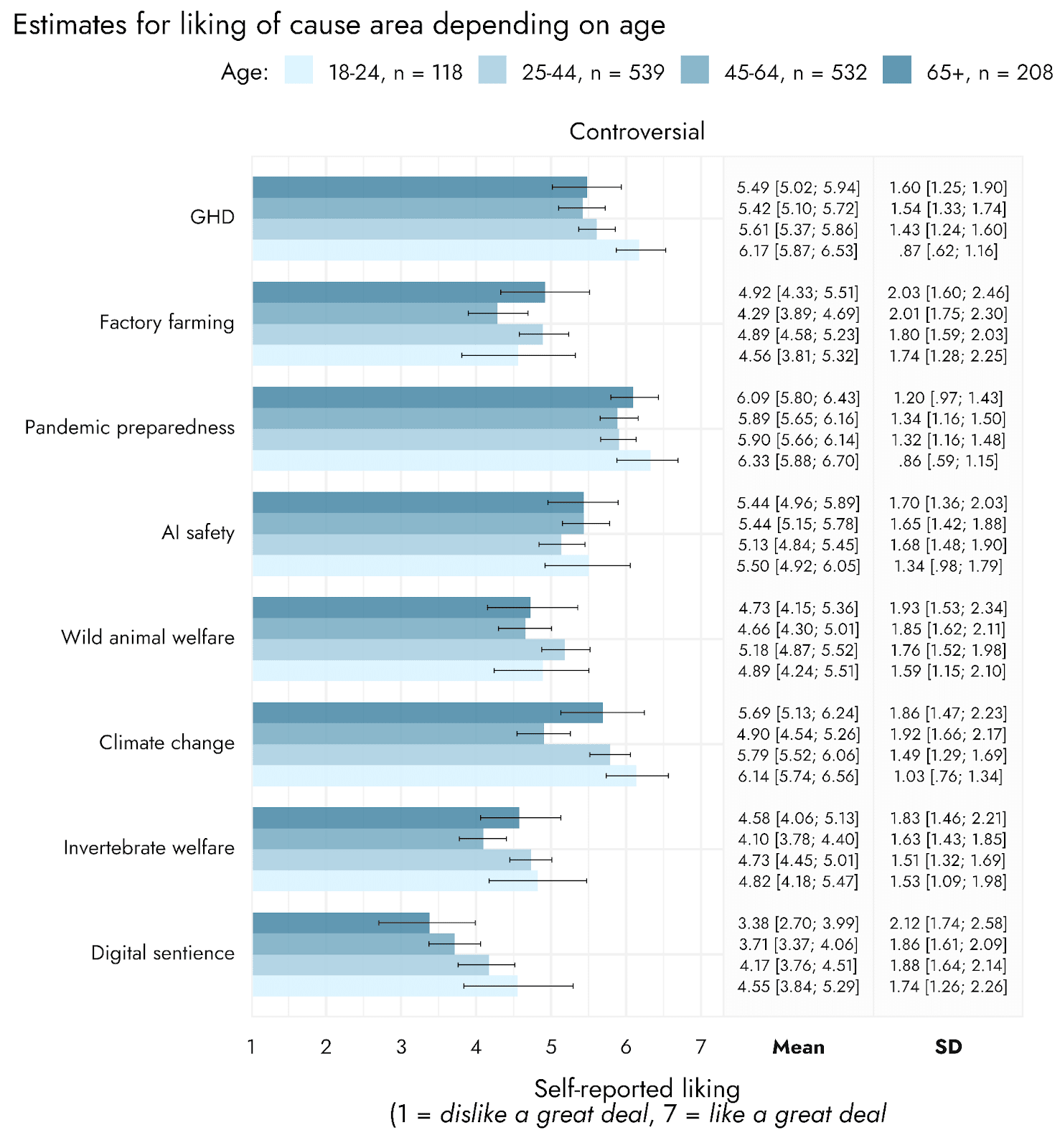

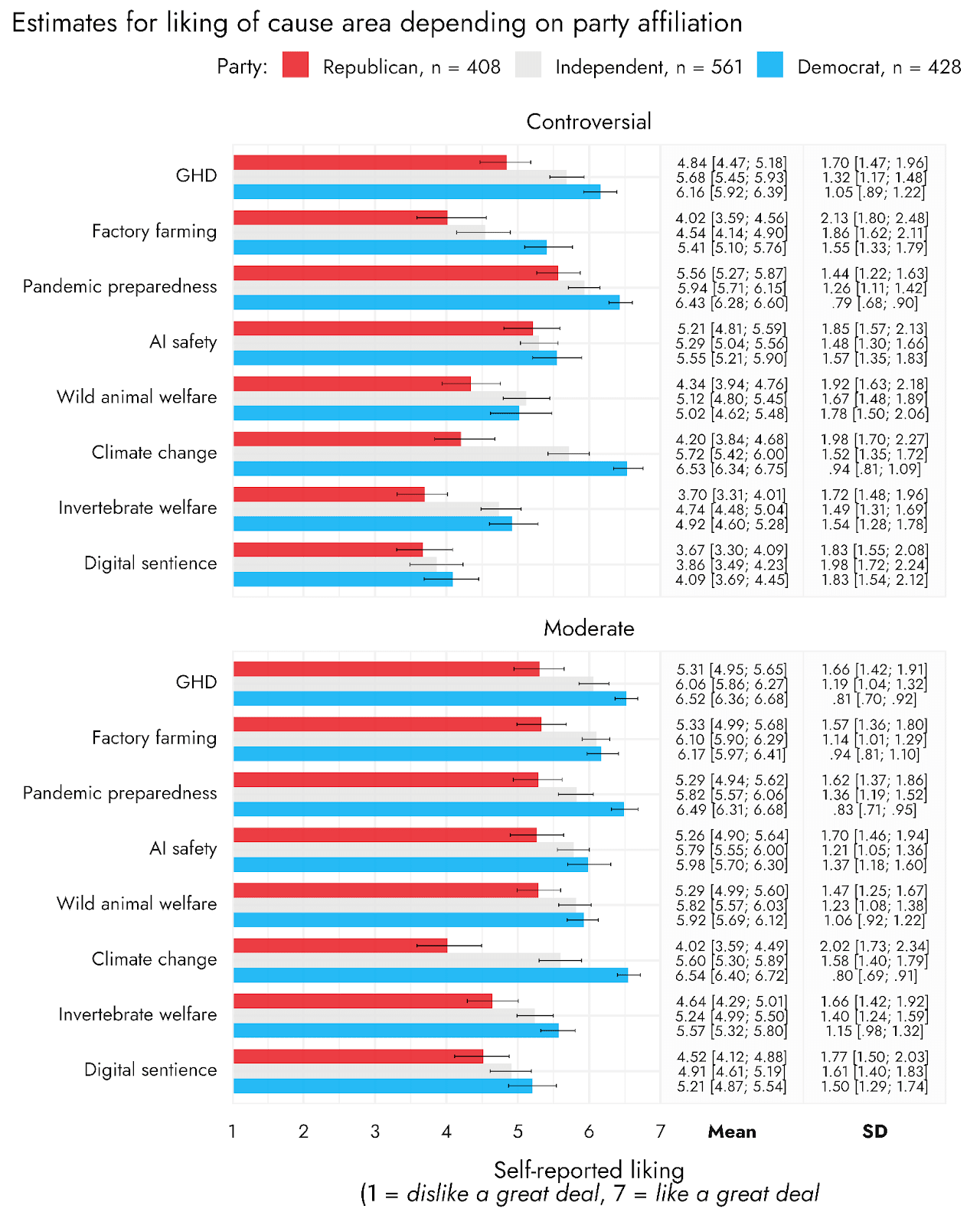

We also explored some demographic variation in how these cause areas and framings are responded to, and highlight below some potential patterns of interest.

With respect to gender, we noted that women tended to be similarly or slightly more sympathetic than men towards both moderate and controversial framings of each cause area, with the exception of Digital sentience, where women rated the cause substantially less positively than did men for the controversial framing.

Digital sentience was also subject to an age-based trend (this appeared to some degree in both framings, but was clearer in the controversial framing), whereby younger people tended to be more positive than older people about this cause area (though it is notable that the cause still performed poorly relative to others). There was also a hint of younger people being more positive than older people about pursuing Global health and development through a focus on evidence-based interventions.

A further trend of note, and one that was apparent across these studies and others we have conducted in the past, was that all of the causes tended to appeal most to respondents who identified as Democrats, and least to Republican-identified respondents. This was most striking with respect to Climate change, but occurred to some degree for all cause areas.

These results highlight some substantial differences between people’s assessment of different causes and different framings of those causes, as well as potentially interesting differences in the extent to which assessments of different causes vary depending on framing.

We think that results of this kind are likely relevant to a variety of different actors. However, larger studies examining a wider variety of framings are necessary to understand these differences and what drives them. For example, these could reveal whether people's dislike of particular framings is driven by aversion to references to human “extinction” or to “campaigning”, by sounding too radical or not radical enough, or by something else.

Study 2. EA-related concepts with and without descriptions

In the second of these studies, we investigated how people responded to several EA or EA-related cause areas depending on whether they were asked about the term only, a description without the term, or both the term and description. This allows us to assess whether people simply like or dislike terms, such as Effective altruism, the concepts represented by these terms, or their combination.

A sample of approximately 2100 US adults was randomly split to be shown either an EA-related term/name (n = 690), a conceptual description of these EA-related terms (without the name of the term; n = 696), or both the term and description together (n = 710). As in the previous study, responses were weighted according to Age, Census Region, Education, Race/ethnicity, Sex, Urban vs. Rural vs. Suburban, Income, and political party identification, so as to be representative of the US adult population, with quotas for some demographic variables to reduce the need for such rebalancing.

The terms and their respective descriptions are shown below.[3]

| Term | Description |
| --- | --- |
| Effective Altruism | A movement that aims to find the best ways to help others, and put them into practice. It often involves researching which charities are the most effective and which careers have the highest impact. |
| Effective Giving | A movement dedicated to using evidence and reason to inform your charitable donation decisions, ensuring that you identify and donate to the places where your money can have the greatest impact. |
| High Impact Giving | A movement dedicated to using evidence and reason to inform your charitable donation decisions, ensuring that you identify and donate to the places where your money can have the greatest impact. |
| Existential Risk Reduction | A field that seeks to reduce risks from threats such as global pandemics, nuclear war, asteroid impacts, and dangerous technological developments that place civilization at risk. |
| Global Catastrophic Risk Reduction | A field that seeks to reduce risks from threats such as global pandemics, nuclear war, asteroid impacts, and dangerous technological developments that place civilization at risk. |
| Existential Security | A field that seeks to reduce risks from threats such as global pandemics, nuclear war, and dangerous technological developments that place civilization at risk, so that humanity’s risk from threats to its existence and flourishing is and remains very low. |
| Global Priorities Research | A field that aims to identify the most pressing issues facing humanity and determine how we can most effectively address them. |
| Longtermism | The view that positively influencing the long-term future, potentially hundreds or thousands of years away, is a key moral priority of our time. |
| Positive action | A movement and a philosophy which encourages people to help make the world a better place in concrete ways. It encourages people to help the world in whatever ways make most sense for them. |

Note that Positive action was used as a vaguely positive, but generic, item that we expect relatively few people would actively object to, which can function as something like a control against which responses to other items can be compared.

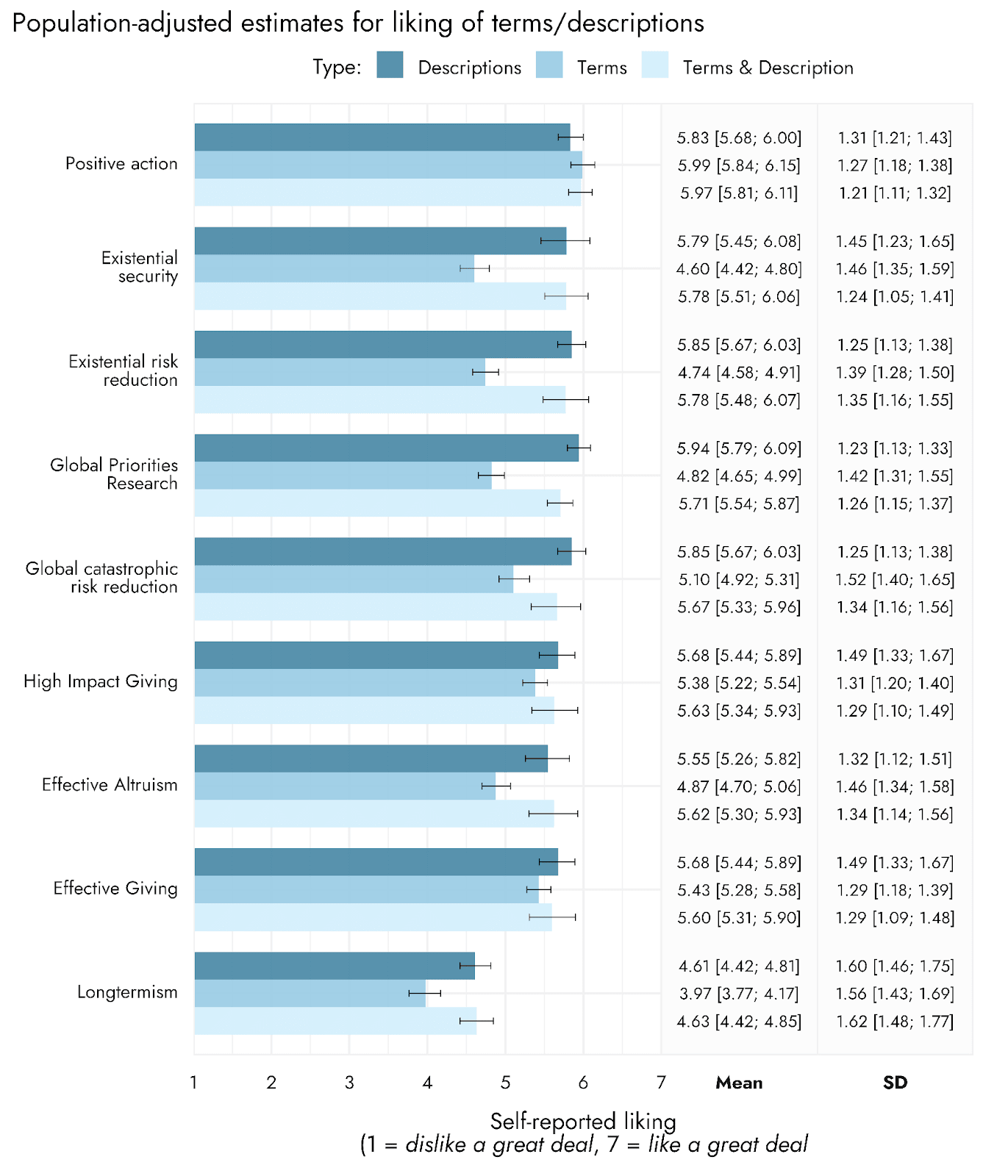

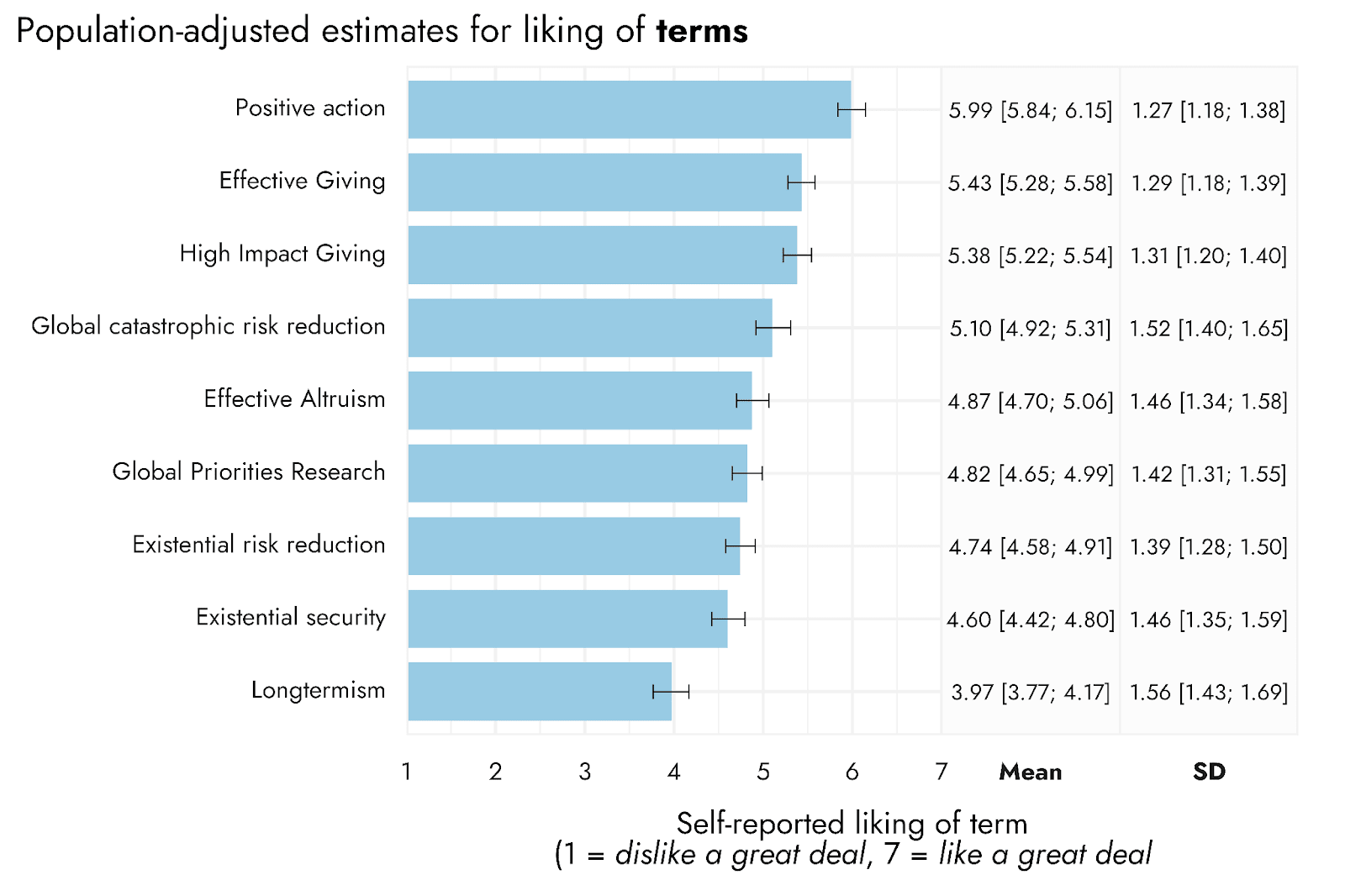

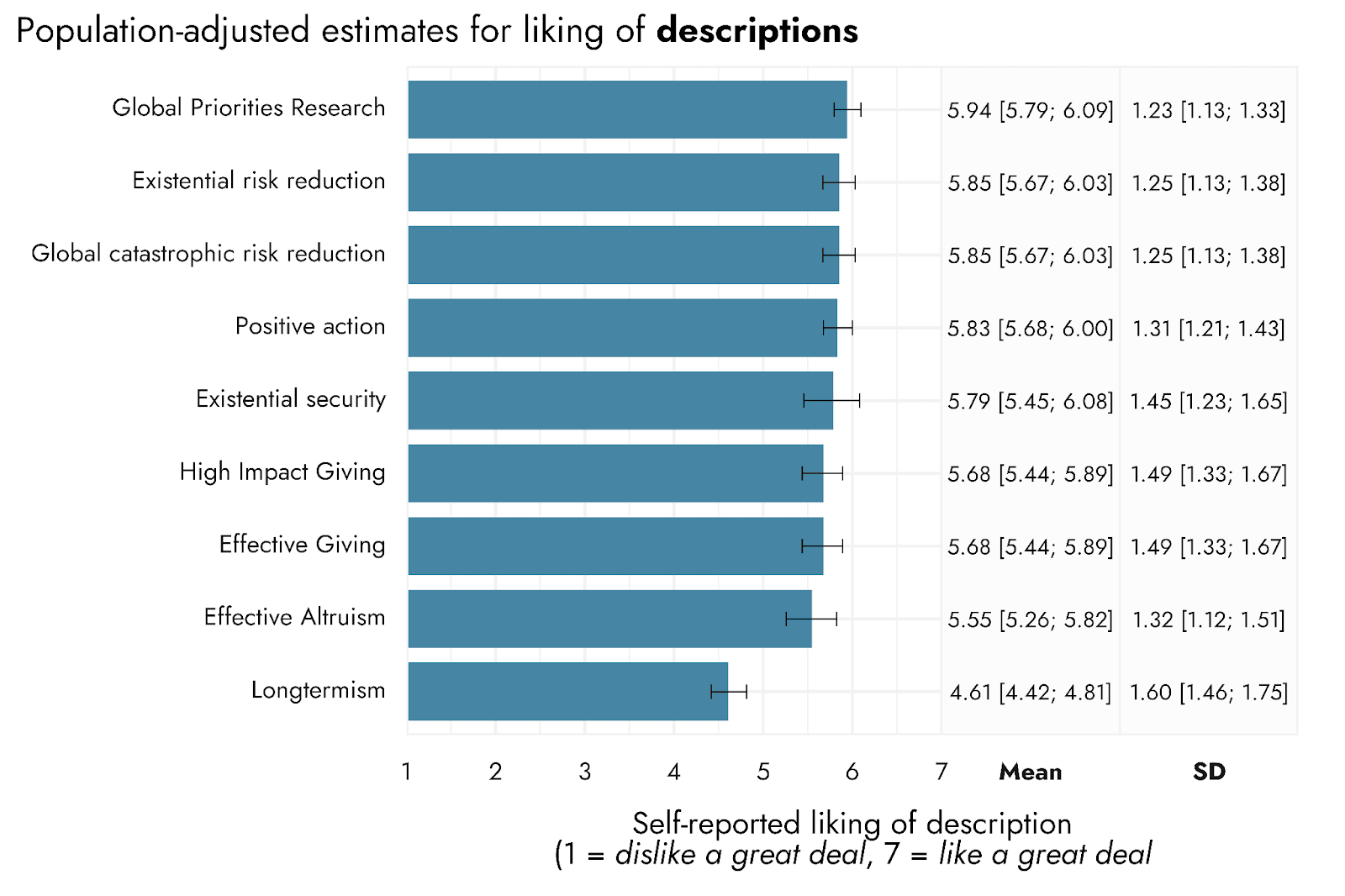

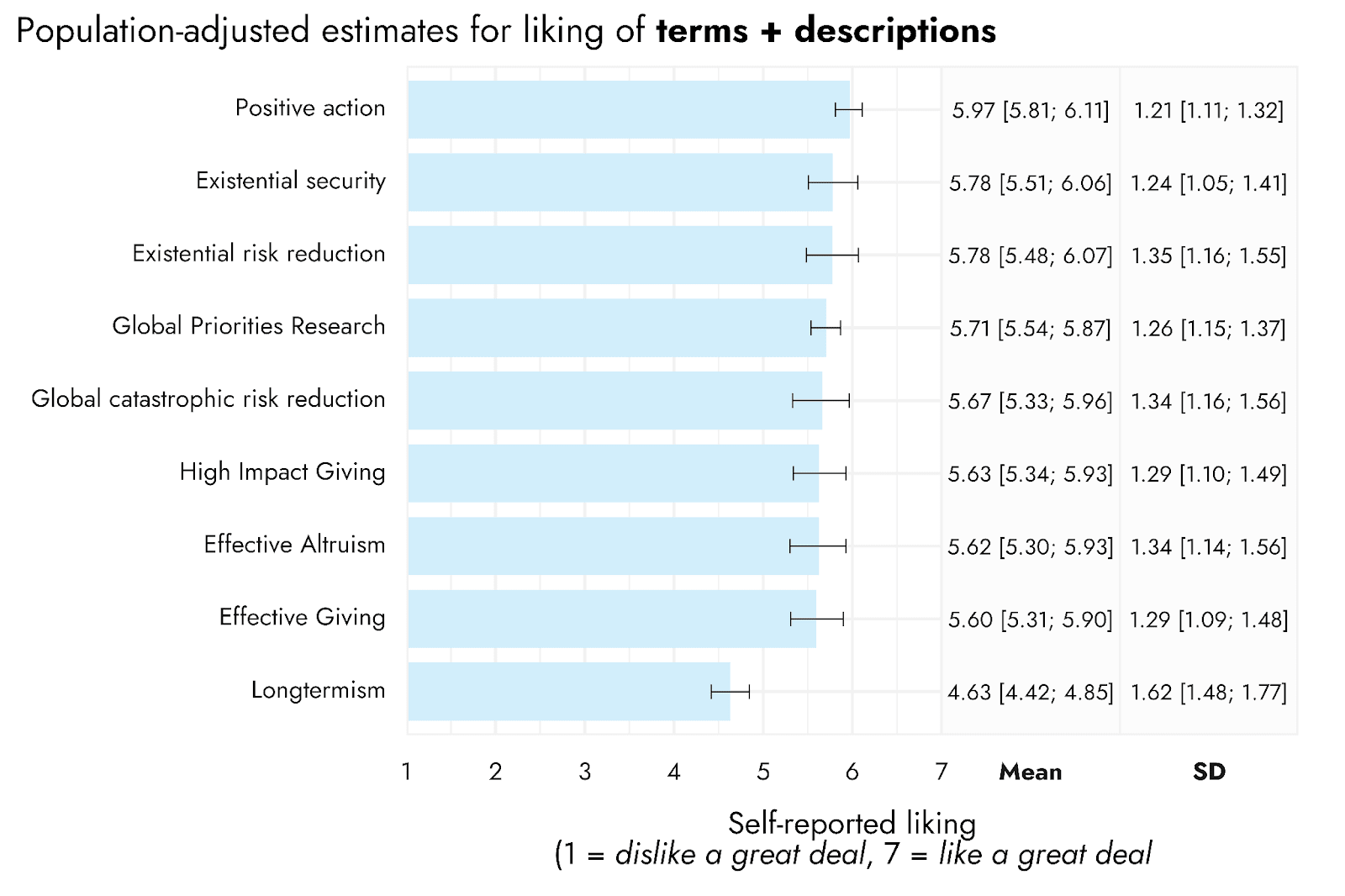

The topline results from this study are presented below, with plots provided so as to be able to compare causes within descriptions, as well as to compare how items perform in each condition:

Examining the results across every condition, it is striking that liking of the terms alone tended to be substantially lower than liking of the descriptions, or of the terms + descriptions. There were three main exceptions to this tendency: Positive action, Effective giving and High impact giving, where the terms were relatively well-liked, and liked to a similar degree as the descriptions or the terms and descriptions combined.

Based on the distribution of responses, the lower average ratings of these other terms in isolation appeared to be driven by more people remaining neutral when provided with the term alone. This may well be explained by it not being clear, without further explanation, what the terms mean. In the case of Effective giving, High impact giving, and Positive action, it may seem more transparent what the terms mean, or they may simply sound more positive, without further explanation.

Nevertheless, there were some substantial differences in how the terms were rated. For example, Global catastrophic risk reduction appeared to be relatively well-liked as a term, performing only just below Effective giving and High impact giving. In contrast, Existential security was rated relatively poorly, and Longtermism was particularly poorly-liked as a term.

When descriptions were provided, however, people appeared more positive about essentially all the terms except Longtermism. Longtermism stood out as performing poorly relative to the other items whether looked at as just the term, just the description, or the term + description.

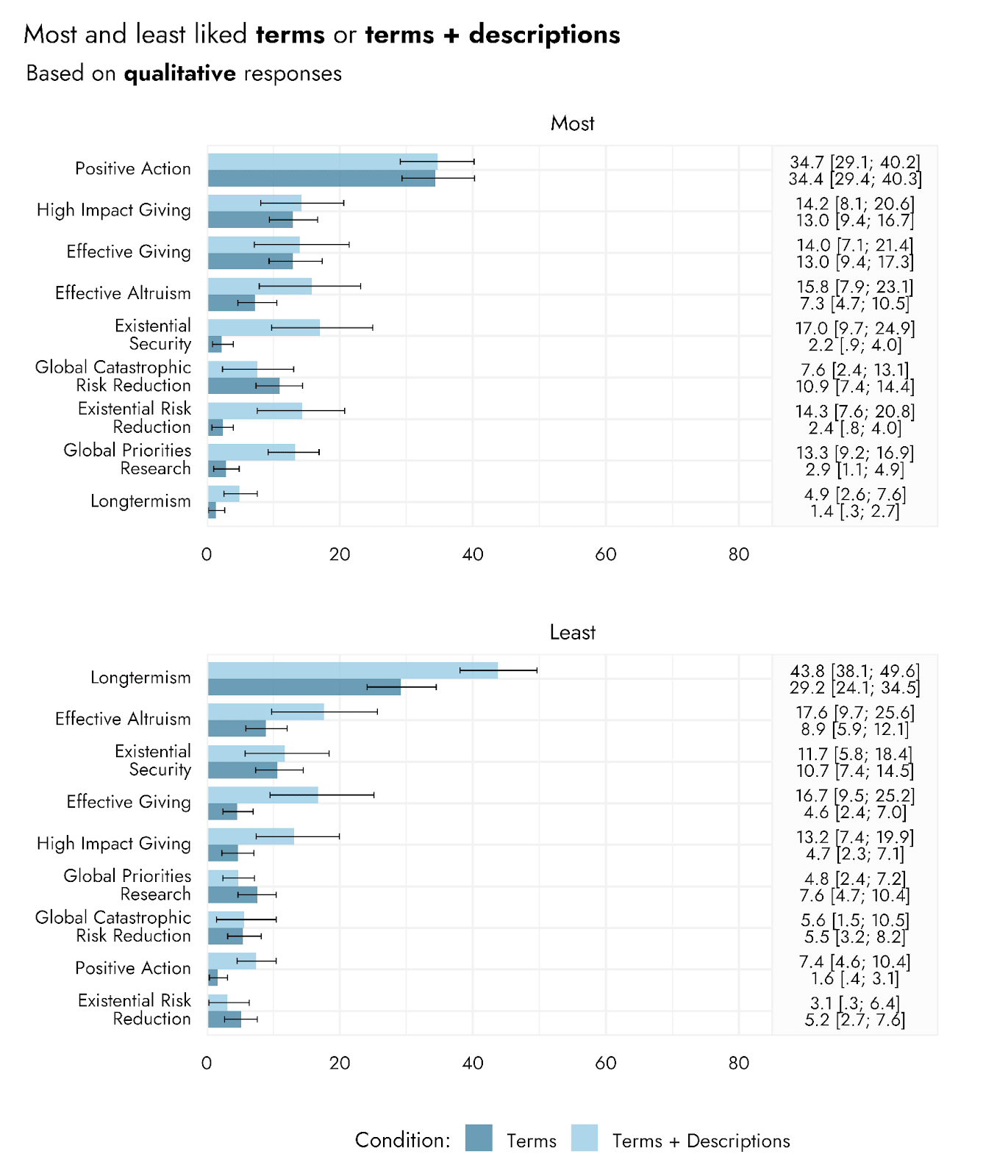

This poor performance of Longtermism was further indicated by responses to a subsequent question in the survey that asked people to indicate which of the items was their least preferred, and their most preferred. Longtermism was the most common ‘least liked’ and least common ‘most liked’ item:

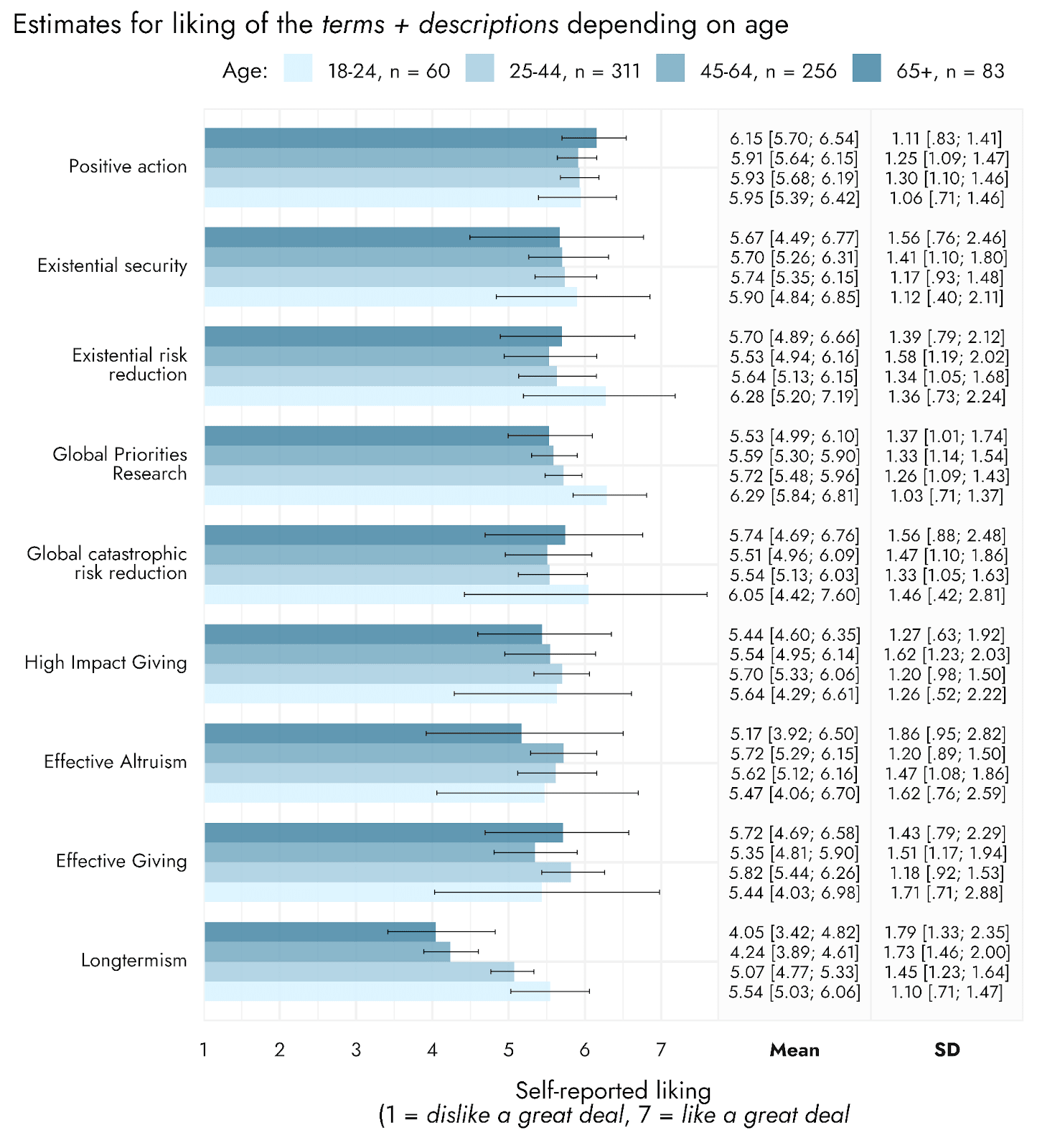

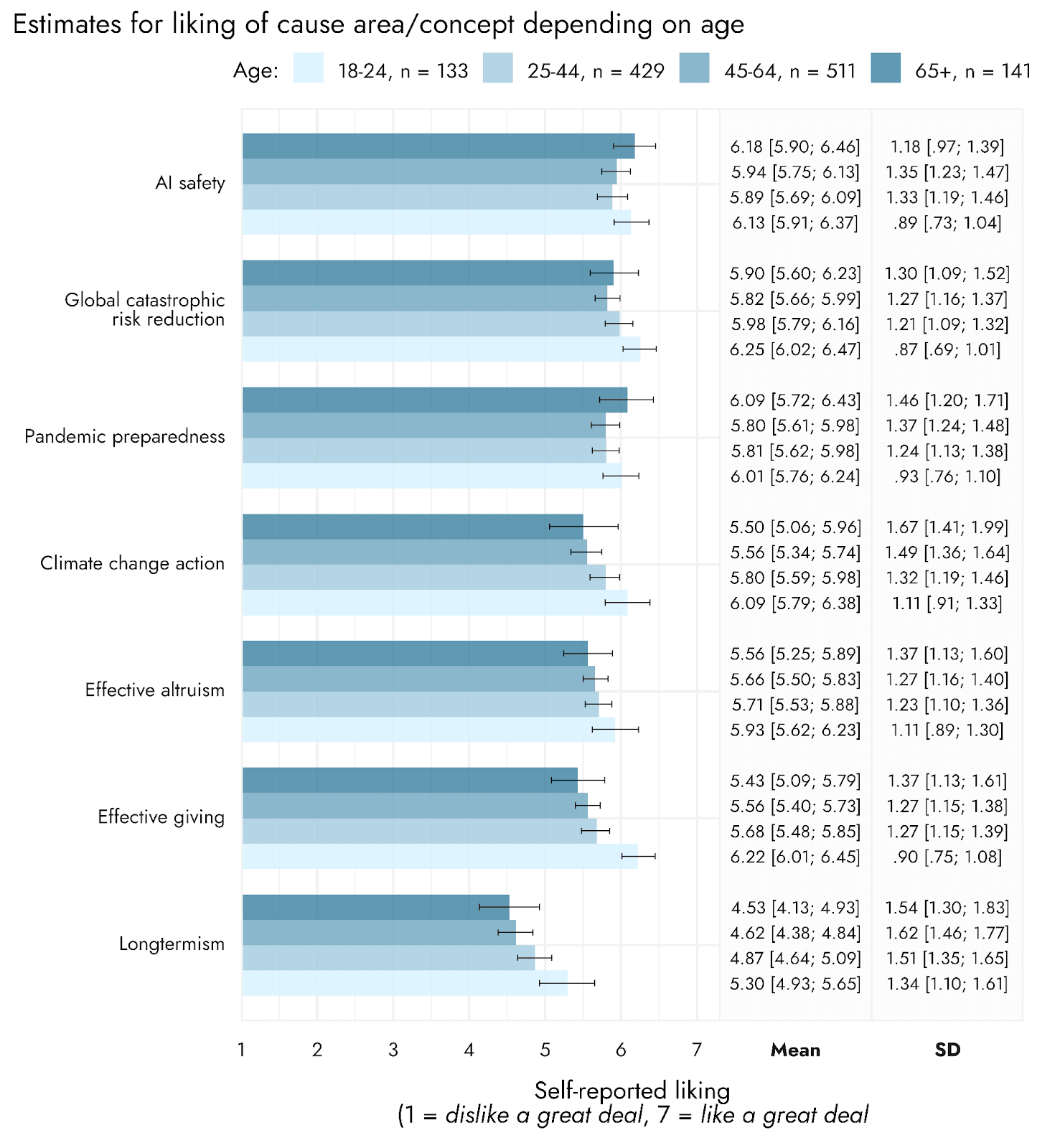

Demographics

As with the previous study, we looked at some demographic breakdowns to highlight some potential demographic patterns of interest.

Though still performing poorly overall, Longtermism was relatively more liked among younger than older respondents (this was the case both when the description only and when the term + description were considered). However, this must be caveated with the acknowledgement that the higher and lower ends of this age trend rely upon two age brackets with disproportionately few respondents.

Study 3. Preferences for concrete causes or more general ideas/movements

The key idea of this study was to assess the extent to which people might or might not prefer general ideas/movements (such as Effective altruism and Longtermism) relative to specific cause areas (such as AI safety or Pandemic preparedness). Climate change action was included to provide a reference to a more traditional cause area.

The respondents were a sample of approximately 1200 UK adults, weighted to reflect the UK population according to Age, Geographic Region, Education, Race/Ethnicity, Sex, and reported 2024 General Election vote. Sampling was also done using quotas for age, sex and ethnicity to reduce the need for such rebalancing.

Respondents were shown the names and descriptions of multiple EA-related ideas and cause areas. The specific items were:

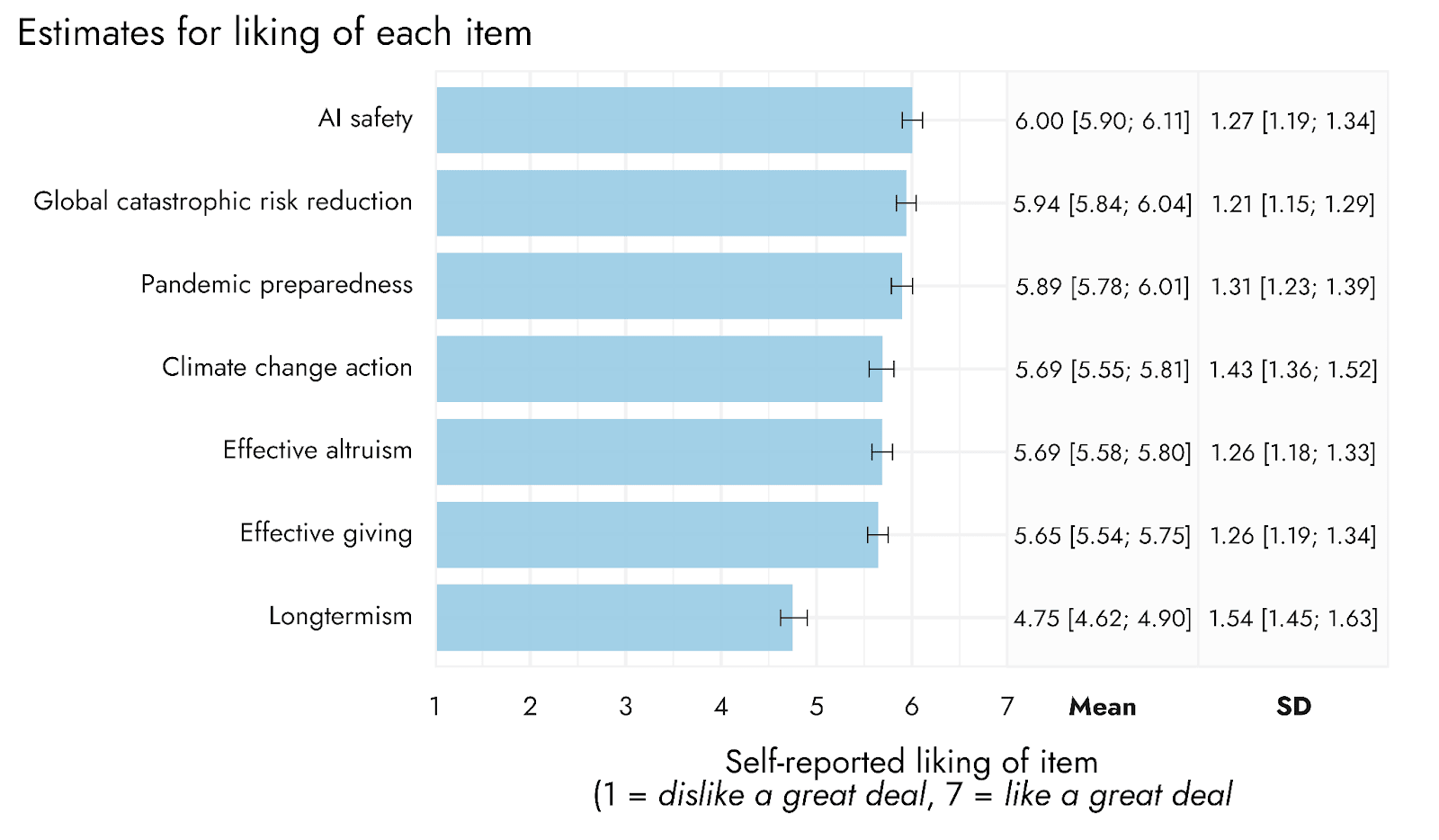

The topline results are presented in the figure below.

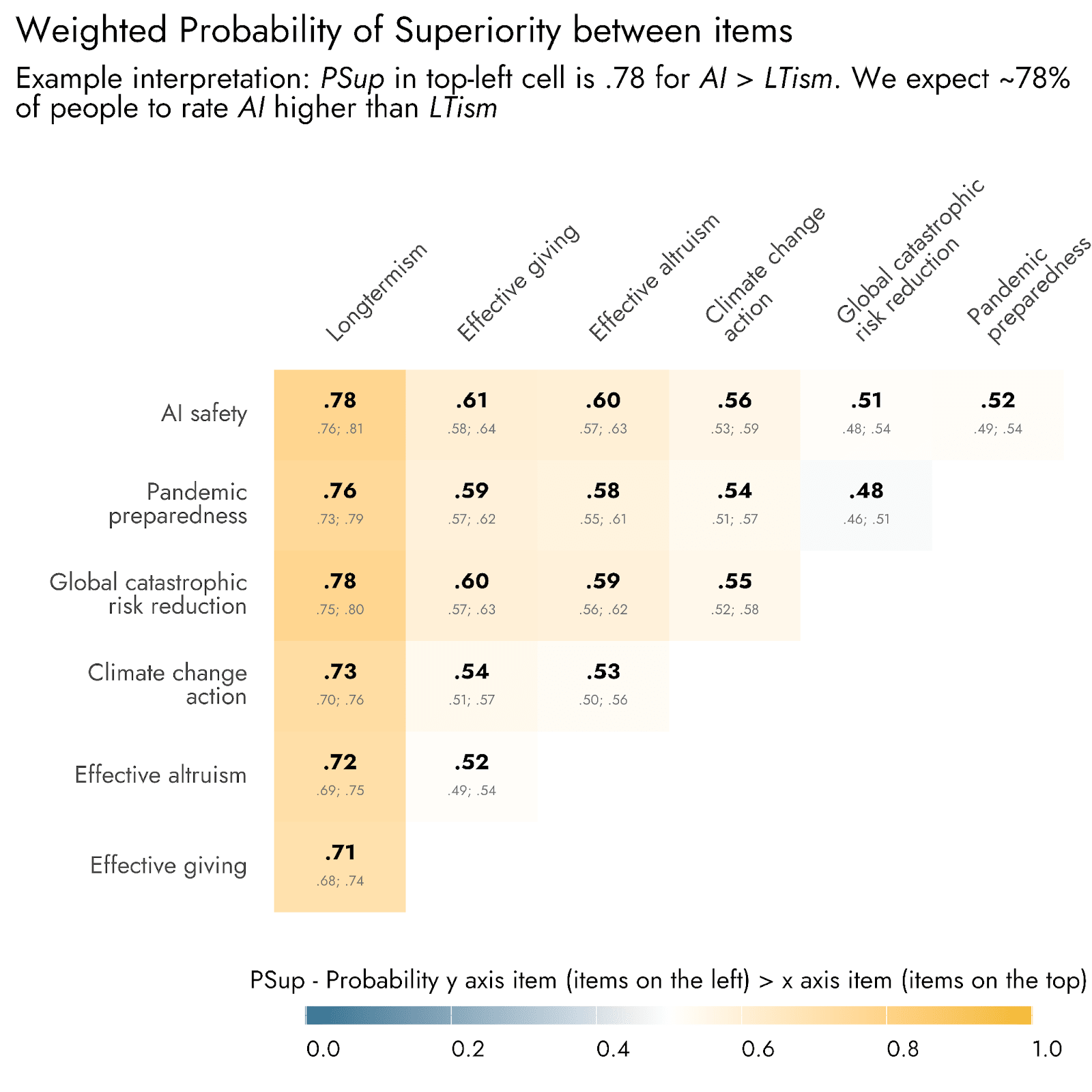

Paired comparisons among items are presented in the Probability of Superiority matrix below.

The means and probability of superiority matrix suggest three approximate tiers of the items in terms of their likability:

- AI safety, Pandemic preparedness, and Global catastrophic risk reduction (which explicitly or implicitly includes the other two specific cause areas) were rated most positively.

- Effective altruism, Effective Giving, and Climate change action were rated marginally lower than the three aforementioned items, and similarly to one another.

- Finally and most strikingly, Longtermism performed much worse than the other items - we estimate that if it were paired with the next lowest item in the list (Effective giving) in a forced choice, Effective giving would be preferred by around 70% of people (a greater than 2:1 ratio).
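The post does not describe exactly how its Probability of Superiority matrix was computed, and Study 3 used a within-subjects design, so the sketch below only illustrates the general idea under a simplifying assumption: the two items' ratings are treated as independent samples, and ties are split evenly (the "common-language effect size"). The ratings are hypothetical, and the function names are illustrative. It also shows the arithmetic behind the "greater than 2:1" remark: a 70% share corresponds to odds of 0.70 / 0.30, about 2.33 to 1.

```python
from itertools import product


def probability_of_superiority(a, b):
    """Chance that a random rating from `a` beats a random rating from `b`.

    Ties count as half a win. Treats the two samples as independent.
    """
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    score = 0.0
    for x, y in product(a, b):
        if x > y:
            score += 1.0
        elif x == y:
            score += 0.5
    return score / (len(a) * len(b))


def odds_from_share(p):
    """Convert a forced-choice share (e.g. 0.70) into odds of preferred : not preferred."""
    return p / (1.0 - p)


# Hypothetical 1-7 ratings, for illustration only (not the study's data).
item_x = [7, 6, 6, 5, 4, 6]
item_y = [5, 5, 4, 3, 6, 2]
ps = probability_of_superiority(item_x, item_y)
print(f"P(X rated above Y) = {ps:.2f}")
print(f"70% preference as odds: {odds_from_share(0.70):.2f} : 1")  # prints 2.33 : 1
```

Because ties are split, the two directions always sum to one, so a value of 0.5 means neither item is preferred, which is how a figure such as "around 70%" can be read as a forced-choice preference.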

Given that a considerable degree of emphasis in Longtermism is on AI safety and Pandemic preparedness, these results might suggest that it is better to promote these two causes in and of themselves, or with reference to more general catastrophic risk reduction, as opposed to through the lens of Longtermism. However, it is worth noting that Global catastrophic risk reduction performed very similarly to both AI safety and Pandemic preparedness. Promoting this general category might be comparably effective to promoting AI safety and Pandemic preparedness.

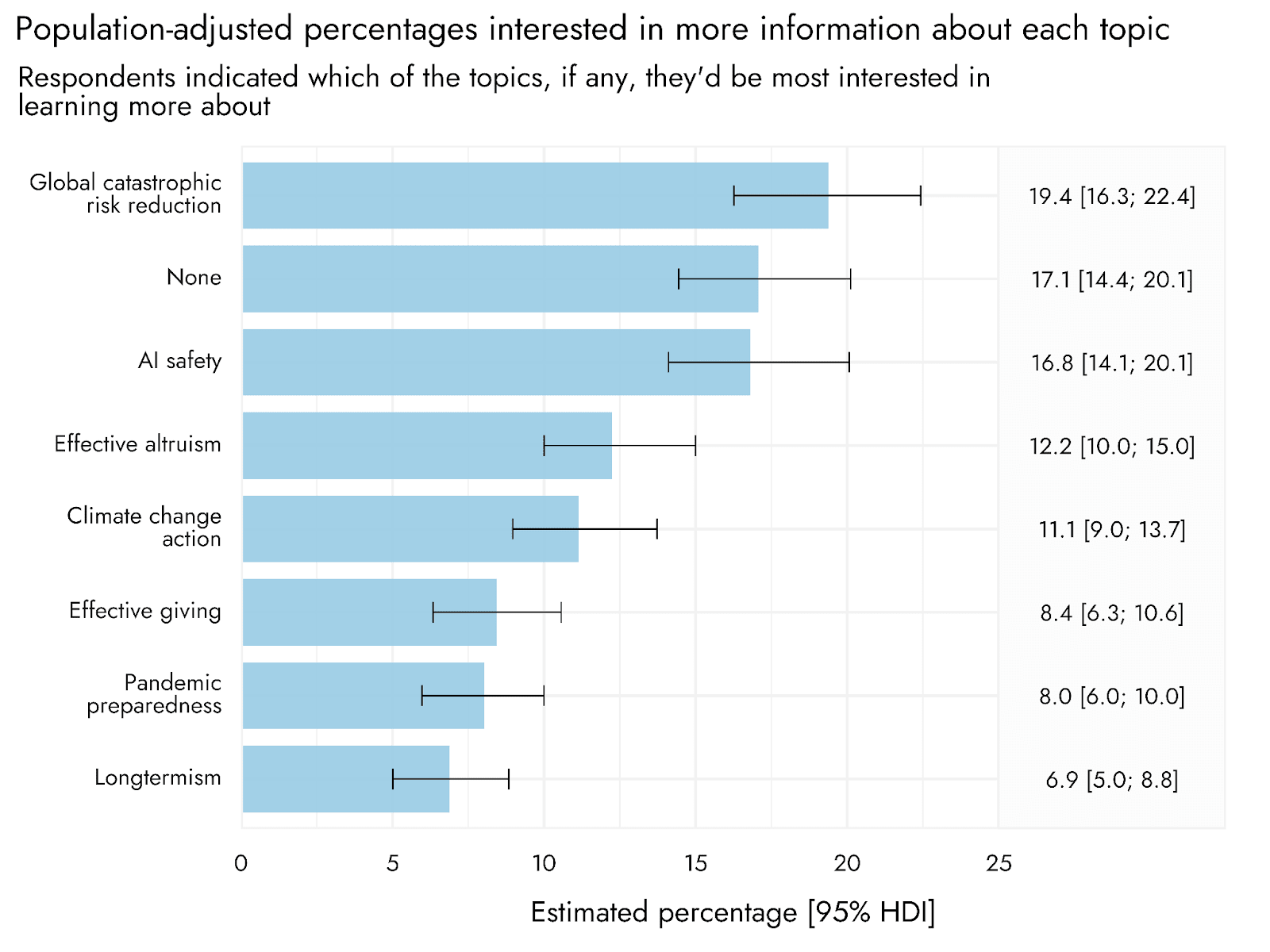

We also asked respondents which topic they would be most interested to learn more about. Responses to this question could potentially differ from those to the question about general liking of the topics. For example, respondents might like a topic, but not be interested in finding out more about it, or they might dislike a topic, but want to find out more about it.

In terms of which topics respondents would most like to learn more about, Global catastrophic risk reduction came top, with AI safety performing similarly, and Longtermism again placing last. Interestingly, Pandemic preparedness performed relatively poorly on this metric, despite being well-liked. It is possible that, given the recency of the Covid-19 pandemic, people feel they know enough about this topic not to desire or need further information. These results could well differ if respondents received a more detailed explanation of Pandemic preparedness as a field (e.g., noting man-made pandemics).

Demographics

As in Study 2, Longtermism performed relatively better amongst younger respondents than older respondents (though still not very well). There were also age trends for other items, with Effective altruism, Effective giving, and Climate change action also tending to be rated more positively amongst younger than older respondents.

Manifold Market Predictions

Social science results often seem obvious with hindsight, even when people would think the opposite result seemed obvious too, if they were told it was true. Political practitioners have likewise been shown to be unable to predict which messages will be persuasive.

To assess whether the outcome of these studies would be predictable, we created several markets on Manifold.[4]

| Question | Prediction | Result | Correct? |
| --- | --- | --- | --- |
| Would UK respondents like “effective giving” or “AI safety” more? (study 3) | Effective giving (72%) | AI safety | Incorrect |
| Would UK respondents like “effective altruism” or “Global catastrophic risk reduction” more? (study 3) | Effective altruism (67%) | Global catastrophic risk reduction | Incorrect |
| Would US respondents like the term “existential security” or “global catastrophic risk reduction” more? (study 2) | Global catastrophic risk reduction (67%) | Global catastrophic risk reduction | Correct |
| Would a larger share of the UK public be interested in learning about “AI safety” or about “pandemic preparedness”? (study 3) | Pandemic preparedness (67%) | AI safety | Incorrect |
| Would UK respondents like “effective giving” or “pandemic preparedness” more? (study 3) | Effective giving (62%) | Pandemic preparedness | Incorrect |
| Would US respondents like the term “longtermism” or “existential risk reduction” more? (study 2) | Existential risk reduction (70%) | Existential risk reduction | Correct |

The predictions of these markets were, at most, moderately confident (ranging from 62% to 72% probability), and most of the predictions were inaccurate. Markets correctly predicted that Global catastrophic risk reduction would be preferred to Existential security, and Existential risk reduction to Longtermism, but the other four predictions were inaccurate. The markets predicted that Effective giving and Effective altruism would perform better than AI safety, Global catastrophic risk reduction and Pandemic preparedness, which was not borne out by our results.
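As a rough, illustrative scoring of the six markets in the table (not an analysis reported in the post), one can compute the hit rate and the Brier score, i.e. the mean squared gap between each market's stated probability for its predicted outcome and what happened (1 if correct, 0 if not). The short labels below abbreviate the table's questions.

```python
# (market, probability the market gave its predicted outcome, was the prediction correct?)
markets = [
    ("Effective giving vs AI safety (UK)", 0.72, False),
    ("Effective altruism vs GCR reduction (UK)", 0.67, False),
    ("Existential security vs GCR reduction (US)", 0.67, True),
    ("Learn more: AI safety vs pandemic preparedness (UK)", 0.67, False),
    ("Effective giving vs pandemic preparedness (UK)", 0.62, False),
    ("Longtermism vs existential risk reduction (US)", 0.70, True),
]


def hit_rate(rows):
    """Share of markets whose favoured outcome actually occurred."""
    return sum(correct for _, _, correct in rows) / len(rows)


def brier_score(rows):
    """Mean squared error of the stated probabilities; lower is better.

    A forecaster who always says 50% scores exactly 0.25.
    """
    return sum((p - (1.0 if correct else 0.0)) ** 2 for _, p, correct in rows) / len(rows)


print(f"hit rate: {hit_rate(markets):.2f}")        # prints hit rate: 0.33
print(f"Brier score: {brier_score(markets):.3f}")  # prints Brier score: 0.333
```

On these numbers the Brier score is above the 0.25 that a constant 50% forecast would earn, which is consistent with the point that these results were not easy to predict; with only six markets, however, this is weak evidence either way.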

General discussion

These studies suggest a number of findings that, if confirmed in future studies, would be of significant relevance to actors involved in EA and global catastrophic risk reduction:

- Longtermism was consistently less popular than other EA-related terms and concepts we examined, whether presented as a term, description, or both, across both our studies (US and UK).

- Global catastrophic risk reduction, in contrast, appeared to be relatively well-liked. In Study 2 (US), it performed at least moderately well, whether as a term, description, or both. In Study 3 (UK), when presented as a term with description, it was joint-first (alongside AI safety) both as the item respondents most liked and as the item they were most interested in learning more about.

- Effective giving / High impact giving were relatively well-liked as terms (Study 2), but lagged behind other terms when presented with descriptions (Studies 2 and 3).

- When comparing specific cause areas to broader concepts in Study 3, we found that AI safety, Pandemic preparedness, and Global catastrophic risk reduction were all comparably well-liked, and outperformed Effective altruism, Effective giving, and Longtermism. However, results differed for which items people would be interested in learning more about: respondents were most interested in learning more about Global catastrophic risk reduction and AI safety, but much less interested in learning more about Pandemic preparedness, perhaps because it already seems familiar to them.

- There were some differences across demographic groups, with both Digital sentience and Longtermism performing relatively better amongst younger than older respondents, though still worse than other items.

In a future post, we plan to discuss qualitative data gathered as part of these studies, to shed light on possible reasons for these patterns.

However, we would caution against updating overly strongly based only on these initial studies:

- Many of these results likely depend on the particular framing or description that was used (which is part of the motivation for this project). For example, Effective giving / High impact giving, and Longtermism, might well be more well-liked if framed differently. As such, we would encourage people to suggest framings and descriptions which they would like to see tested.

- Similarly, it does not follow from these results that no longtermist arguments or messages would be more well-received than catastrophic risk reduction arguments. Our work only directly examined the terms and descriptions themselves, while prior message testing work we conducted found significant variation in the effectiveness of different messages.

- The sample sizes of these pilots mean we have statistical power only to confidently identify relatively large differences. With larger samples, we would have a more precise sense of how different framings performed and would be able to detect smaller differences. There may be robust differences between the things we tested, which were smaller than we could detect with our samples (this applies particularly to examination of demographic differences, which we can examine in more detail in larger future studies).

- In addition to testing different framings, there are various other designs that we would want to test to assess the robustness of these findings (some of which we discuss below). For example, here we focused on asking people how much they liked different ideas, presented abstractly, but responses might differ in more concrete contexts, such as when we ask participants about their interest in joining a movement described in a certain way.
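To give a rough sense of what "relatively large differences" means for the sample-size caveat above, the sketch below computes the smallest standardized mean difference (Cohen's d) that a two-sided test at α = 0.05 would detect with 80% power, using the normal approximation for two independent groups. These settings are assumptions, not the authors' stated analysis plan. About 700 per group matches the per-description and per-condition sizes reported for Studies 1 and 2; the smaller sizes are hypothetical stand-ins for demographic subgroups.

```python
from statistics import NormalDist


def minimum_detectable_d(n1, n2, alpha=0.05, power=0.80):
    """Smallest Cohen's d detectable by a two-sided, two-sample comparison.

    Uses the normal approximation: d = (z_{1 - alpha/2} + z_{power}) * sqrt(1/n1 + 1/n2).
    """
    z = NormalDist()  # standard normal
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return (z_alpha + z_power) * (1 / n1 + 1 / n2) ** 0.5


# ~700 per group as in Studies 1 and 2; 350 and 100 are hypothetical subgroup sizes.
for n in (700, 350, 100):
    print(n, round(minimum_detectable_d(n, n), 3))
```

With about 700 per group this comes to roughly 0.15 standard deviations, rising to roughly 0.4 with 100 per group, which illustrates why the demographic comparisons are the least precise part of these pilots. Within-subjects comparisons, as in Study 3, would typically be more sensitive than this independent-groups calculation suggests.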

In future work, we aim to extend this research in several ways. We are keen to receive suggestions and requests from the community, but we highlight some specific proposals below:

- As mentioned above, we aim to test a much wider variety of different framings. This will allow us to test specific hypotheses about the causes of different responses to these framings (e.g., whether people dislike references to “extinction”, or whether people dislike framings of Effective altruism / Effective giving that sound overly demanding).

- We will examine these effects in a variety of more concrete, practical contexts. For example, we might examine people’s perceptions of overall portfolios of different causes, rather than simply individual causes. Likewise, we will use more naturalistic designs, where participants are randomly assigned to view only one of several possible framings in the context of a realistic ‘advert’ for effective altruism, and we will measure relevant endpoints (such as interest in signing up for a mailing list about effective altruism).

We have argued that decision-makers should not update too much on these specific results, as we think that the results could change when using different framings (this is one reason why it is valuable to test a variety of different framings). However, we believe that decision-makers should take seriously these kinds of findings (especially when validated in further studies), as we think they are highly consequential for understanding how best to frame and communicate our efforts.

Rethink Priorities is a think-and-do tank dedicated to informing decisions made by high-impact organizations and funders across various cause areas. We invite you to explore our research database and stay updated on new work by subscribing to our newsletter.

[1] In saying so, we do not mean that advocates and movement builders should mold their outreach just to what people like or ‘want’, and disguise their true beliefs. We believe that such message and framing testing can ensure that core principles are conveyed in a way that people can grasp, and which is not off-putting or otherwise counterproductive for reasons that could be averted.

[2] Based on this initial test of a small number of framings, it is unclear to what extent this reflects general differences across the causes, rather than idiosyncrasies of the two framings we tested for each. The results for pandemic preparedness may be due to the recency of the Covid-19 pandemic and discussion surrounding it meaning that people do not see statements about the possible end of humanity resulting from pandemics as being as alarmist as they might be with respect to other threats. Similarly, the wide-ranging public discussion about climate change may have prepared people to be more receptive to solutions that could be viewed as relatively extreme in other contexts, such as ‘geoengineering’. It is also possible that we simply failed to select framings that differed sufficiently from one another in relevant ways, highlighting the need for a wider, more systematically designed set of framings.

[3] Note that the descriptions for Effective Giving and High Impact Giving are the same, as are the descriptions of Global Catastrophic Risk Reduction and Existential Risk Reduction. To prevent confusion/crossover effects, when terms + descriptions were provided, respondents randomly saw the description labeled as either one or the other of these terms, rather than seeing the same description twice, with a different label. In addition, to prevent fatigue and avoid further crossover from highly similar items, respondents seeing descriptions or terms + descriptions, rather than just the terms, saw only five of the items, with items swapped out to prevent highly similar ones being shown (e.g., not seeing descriptions of both Effective Altruism and Effective Giving).

[4] We limited markets to results which we knew ex post were statistically significant, to avoid ambiguity and complication regarding non-significant differences between items, and we avoided duplicating markets with pairwise comparisons involving the same item. For future studies, it may be interesting to create markets ex ante for a larger number of questions, which would serve as a stronger test of how predictable the results were.

Great stuff! Many thanks to the team and whoever commissioned this! Looking forward to future research in this direction.

Thanks! This was supported via Manifund and then topped up by Open Philanthropy after they saw it on there (so thanks to our donors on there and to Open Phil!).

Thanks James!

This is great, thanks for doing it!

I apologize if this was listed above and I missed it, but what was the specific question you asked? "How much do you like the following terms?"

Thanks Ben!

For descriptions or descriptions with terms the questions were "How much do you like or dislike each of the following?" (Study 2) and "Please indicate the extent to which you like or dislike the following" (Study 3), both asked on a 7 point scale from "Dislike a great deal" - "Like a great deal".

For terms only, we included some more instructions to ensure that people evaluated the terms specifically, rather than trying to evaluate the things referred to by the terms: "Based only on the terms provided below, how much do you like or dislike each of the following? (Please note that you do not have to have heard of the terms to answer. Please just answer based on the names below without looking up additional information)." This was also asked on a 7 point scale from "Dislike a great deal" - "Like a great deal".

For cause areas (Study 1), the question was: "Based on the description of each cause area below, please indicate to what extent you like or dislike a movement that promotes giving resources to this area." And, again, we used the same 7 point scale from "Dislike a great deal" - "Like a great deal".

Since Longtermism as a concept doesn't seem widely appealing, I wonder how other time-focused ethical frameworks fare, such as Shorttermism (Focusing on immediate consequences), Mediumtermism (Focusing on foreseeable future), or Atemporalism (Ignoring time horizons in ethical considerations altogether).

I'd guess these concepts would also be unpopular, perhaps because ethical considerations centered on timeframes feel confusing, too abstract or even uncomfortable for many people.

If true, it could mean that any theory framed in opposition, such as a critique of Shorttermism or Longtermism, might be more appealing than the time-focused theory itself. Criticizing short-term thinking is an applause light in many circles.

I agree this could well be true at the level of arguments, i.e. I think there are probably longtermist (anti-shorttermist) framings which would be successful. But I suspect it would be harder to make this work at the level of framing/branding a whole movement, i.e. I think promoting the 'anti-shorttermist' movement would be hard to do successfully.

Executive summary: Rethink Priorities conducted studies testing public responses to different framings of Effective Altruism (EA) and related concepts, finding that longtermism was consistently less popular than other terms, while global catastrophic risk reduction was relatively well-liked.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.

The summary was generally good, but I wouldn't say the above exactly. In the one study where we tested specific causes against broader concepts, AI Safety and Pandemic preparedness were roughly neck and neck with the general broader concept Global catastrophic risk reduction. Those three were more popular than Climate change (specific), Effective Altruism and Effective Giving (broader), which were neck and neck with each other. And all were more popular than Longtermism. So there wasn't a clear specific cause area vs broader concept distinction.

Do you have a sense of how to interpret the differences between options? E.g. I could imagine that basically everyone always gives an answer between 5 and 6, so a difference of 5.1 and 5.9 is huge. I could also imagine that scores are uniformly distributed between the entire range of 1-7, in which case 5.1 vs 5.9 isn't that big.

Relatedly, I like how you included "positive action" as a comparison point but I wonder if it's worth including something which is widely agreed to be mediocre (Effective Lawnmowing?) so that we can get a sense of how bad some of the lower scores are.

Thanks Ben!

I don't think there's a single way to interpret the magnitude of the differences or the absolute scores (e.g. a single effect size), so it's best to examine this in a number of different ways.

One way to interpret the difference between the ratings is to look at the probability of superiority scores. For example, for Study 3 we showed that ~78% of people would be expected to rate AI safety (6.00) higher than longtermism (4.75). In contrast, for AI safety vs effective giving (5.65), it's 61%, and for GCRR (5.95) it's only about 51%.

You can also examine the (raw and weighted) distributions of the responses. This allows one to assess directly how many people "Like a great deal", "Dislike a great deal" and so on.

You can also look at different measures, which have a more concrete interpretation than liking. We did this with one (interest in hearing more information about a topic). But in future studies we'll include additional concrete measures, so we know e.g. how many people say they would get involved with x movement.

I agree that comparing these responses to other similar things outside of EA (like "positive action" but on the negative side) would be another useful way to compare the meaning of these responses.

One other thing to add is that the design of these studies isn't optimised for assessing the effect of different names in absolute terms, because every subject evaluated every item ("within-subjects"). This allows greater statistical power more cheaply, but the evaluations are also more likely to be implicitly comparative. To get an estimate of something like the difference in number of people who would be interested in x rather than y (assuming they would only encounter one or the other in the wild at a single time), we'd want to use a between-subjects design where people only evaluate one and indicate their interest in it.

This is great work, thank you very much everyone. It's given me a lot to think about, especially the surprisingly poor performance of longtermism. I will be reframing somewhat my own arguments and terminology in future based on this information. I'd like to see more research, however, as to why longtermism performed poorly in comparison with global catastrophic risks, because many of the latter play out on a long-term timescale. So there's a bit of a contradiction.

Thanks Deborah!

I think there are likely multiple different factors here: