Abstract

AI safety field-building faces significant coordination challenges as the field rapidly expands. This paper introduces the Systemic Design Framework for AI Safety Field-Building (SyDFAIS[1]), a novel framework that integrates Systemic Design methodology with field-building practice. Using BlueDot Impact as a case study, I present a seven-step framework and describe the main activities of each step as applied to the current AI safety landscape, with hyperlinked tools for practitioners. This research contributes both theoretical understanding and practical guidance for field-building in the AI safety context, and concludes with implementation considerations and priorities for future work.

In Brief: The SyDFAIS Framework

| Step | Main Activities | Tools |
|---|---|---|
| Step 1: Framing the System | | |
| Step 2: Listening to the System | | |
| Step 3: Understanding the System | | |
| Step 4: Defining the Desired Future | | |
| Step 5: Exploring the Possibility Space | | |
| Step 6: Planning the Change Process | | |
| Step 7: Fostering the Transition | | |

Table 1: Systemic Design Framework for AI Safety Field-Building (SyDFAIS)

1. Introduction

Research Question

Using BlueDot Impact as a case study, how can a Systemic Design methodology contribute to effective AI safety field-building?

Why Field-Building in AI Safety?

Field building is a particular approach to catalyzing change. According to Bridgespan Group's comprehensive research analyzing 35+ fields and conversations with 30+ field leaders, field building represents a strategic approach to achieving population-level change through coordinated collective action. Field building goes beyond scaling individual organizations - it involves deliberately strengthening the ecosystem of actors working toward shared goals. This includes developing infrastructure, establishing shared knowledge bases, and fostering collaboration across various stakeholders.

The AI safety field currently faces critical coordination challenges that threaten its effectiveness at a crucial time. Current challenges include:

- Lack of consensus on fundamental assumptions and definitions around safety and alignment

- Fragmentation between different approaches (e.g., technical vs. governance)

- Absence of a coordinated field-level agenda and strategy

- Scattered infrastructure leading to duplicated efforts

- Concentrated funding sources and limited public awareness/support

With potentially transformative AI systems on the horizon, the need for field-wide coordination has never been more urgent. While field-building methodologies exist in other domains (e.g., public health, education reform), few, to my knowledge as of January 2025, have been specifically adapted for the unique challenges of AI safety.

Why BlueDot Impact?

BlueDot Impact (hereafter referred to as BlueDot) stands as a prominent organization in the AI safety ecosystem, primarily known for operating AI Safety Fundamentals, the largest and best-known set of AI safety educational programs globally.

Several factors make BlueDot an appropriate case study for applying a field-building methodology:

- Their position as a trusted neutral party with established relationships across various AI safety approaches and stakeholders

- Their proven operational capabilities in delivering large-scale educational programs

- Their unique vantage point at the intersection of technical, governance, and strategic aspects of AI safety

- Their interest in addressing system-level challenges in field building beyond scaling individual interventions

- Their access to generous, mission-aligned funding

What is the Systemic Design Methodology?

Systemic Design is a methodology for addressing complex problems by combining the strengths of two approaches - systems and design thinking.

Systems thinking focuses on the whole, examining how different elements influence each other and shape system-wide outcomes. It asks “How did we get here?” and involves

- Framing: Who and what is involved?

- Listening: What are the most influential factors?

- Understanding: What is causing the behavior?

Design thinking focuses on the parts, creating effective products and services through structured problem-solving and prototyping. It asks “How do we get there?” and involves

- Exploring: What are the leverage possibilities?

- Designing: How can we intervene?

- Fostering: How can we care about the transition?

The Systemic Design methodology is claimed to be particularly powerful because it bridges the gap between macro-level system understanding and micro-level intervention design. Rather than treating these as separate domains, it recognizes that effective change requires working at both levels simultaneously. This hybrid approach may allow field builders to both understand the big picture and leverage that understanding to act effectively at specific intervention points towards system-level impact.

Systemic Design has found its way into various fields, such as infrastructure, transportation, housing, public services, citizen participation, and social innovation. However, I could not find any analysis of how this methodology actually performs across different cases. This makes it challenging to determine whether Systemic Design is more effective in some fields than others, a particularly relevant question as we consider its potential application to the emerging field of AI safety.

Selecting a toolkit

Systemic Design toolkits are collections of tools and techniques available to practitioners. They are designed to facilitate stakeholder engagement and collaboration without requiring deep expertise in the field of Systemic Design. My limited desktop research revealed three prominent toolkits.

In this research, I chose to use the Systemic Design Toolkit for three reasons:

- its (apparent) wider adoption in practice

- its development through a multi-stakeholder collaboration[2]

- its open accessibility

The other toolkits are

- Follow the Rabbit: A Field Guide to Systemic Design by CoLab (open access)

- The Service Design Network's toolkit (paywalled)

The Systemic Design toolkit

The Systemic Design Toolkit consists of 7 steps:

Step 1 - Framing the System (Systems Thinking): Setting the boundaries of your system in space and time and identifying the hypothetical parts and relationships.

Step 2 - Listening to the System (Design Thinking): Listening to the experiences of people and discovering how the interactions lead to the system’s behavior. Verifying the initial hypothesis.

Step 3 - Understanding the System (Systems Thinking): Seeing how the variables and interactions influence the dynamics and emergent behavior. Identifying the leverage points to work with.

Step 4 - Defining the Desired Future (Design Thinking): Helping the stakeholders articulate the common desired future and the intended value creation.

Step 5 - Exploring the Possibility Space (Systems Thinking): Exploring the most effective design interventions with potential for system change. Defining variations for implementation in different contexts.

Step 6 - Planning the Change Process (Design Thinking): Defining and planning how your organisation and eco-system should (re-)organise to deliver the intended value.

Step 7 - Fostering the Transition: Defining how the interventions will mature, grow and finally be adopted in the system.

The toolkit offers a library of ~30 practical tools accompanying each step. Rather than cataloging the complete toolkit here, I have carefully selected 13 tools deemed most relevant for the context of BlueDot’s AI Safety field-building strategy, presented in the Application section (Table 1).

2. Application

In this section, I apply the Systemic Design methodology to AI Safety field-building. My analysis includes an attempt to adapt and contextualize each step based on my assessment of

- BlueDot’s strategy

- the current AI Safety landscape

- the current state of AI Safety field-building

To make the concepts presented in each step more accessible to those less familiar with systems and design thinking, my analysis includes practical examples referring to current actors in the AI safety landscape. These examples are meant as illustrations rather than a thorough assessment of critical paths forward. The section concludes with an original Systemic Design Framework for AI Safety Field Building (SyDFAIS) (Table 1).

BlueDot’s 2025 strategy shows its desire to pivot towards AI safety field-building, potentially restructuring into four specialist teams:

- Strategy coordination: build the plan for AI safety

- Training: develop AI safety talent (their established core function)

- Placements: connect talent with AI safety roles

- Public communications: create conditions for success

SyDFAIS is assumed to fall primarily under BlueDot's strategy function, but the outlined steps are likely to intersect with all four teams' objectives, potentially offering a unified framework for BlueDot’s field-building activities.

Step 1: Framing the System

In framing the AI safety system, we begin with mapping the field’s fundamental architecture - establishing the basic boundaries of who exists within the field and their primary functions.

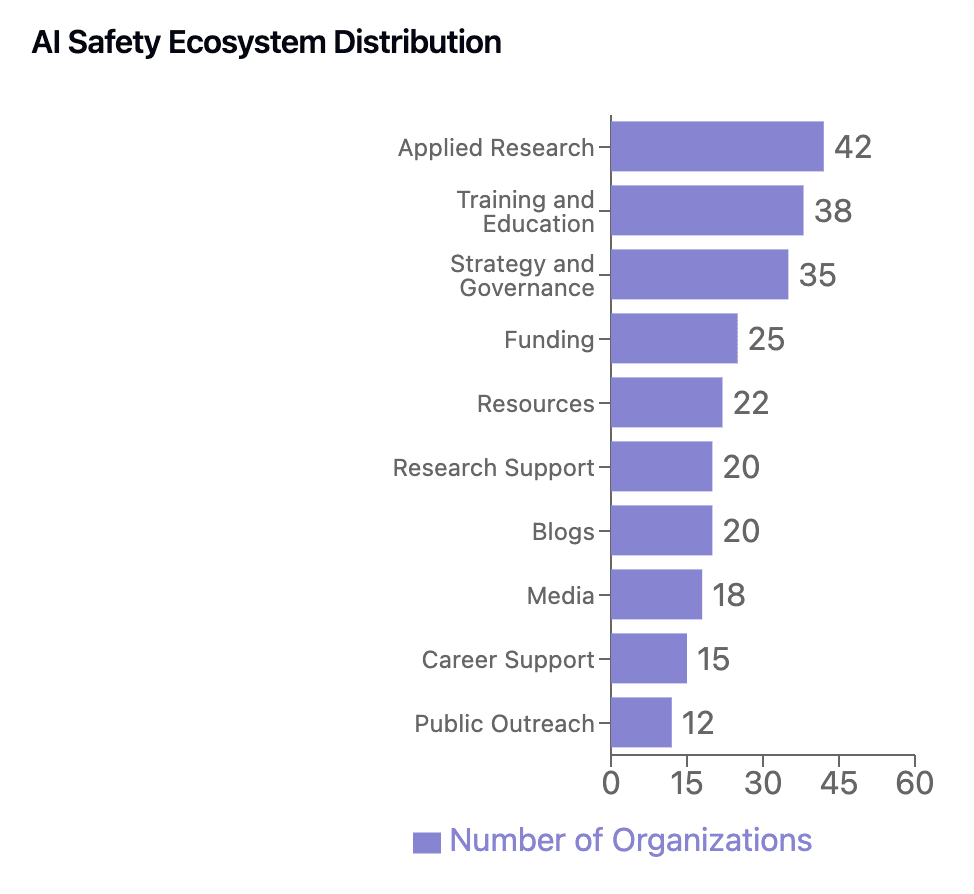

As of December 2024, the AI Safety landscape encompasses 247 distinct actors across 10 functional categories - including major research institutions like DeepMind and Apollo, funding organizations like Open Philanthropy, educational initiatives like AI Safety Camp and SERI Fellowship, and numerous other actors across policy, governance, and public engagement domains.

BlueDot's position as a field catalyst would require them to engage in similar structural mapping, presumably through its strategy team's work, asking:

- Which organizations fund research?

- Who provides educational resources?

- Where does policy work happen?

The process includes recognizing established power centers, emerging initiatives, and how different functions are distributed geographically - while remaining open to how these boundaries might evolve as the field develops. For BlueDot, this means maintaining an updated understanding of the field's composition and dynamics, which informs their own strategic planning and helps other organizations understand where they fit in the broader ecosystem - essential groundwork for deeper system analysis and coordinated action.
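As a rough illustration of this kind of structural mapping, the sketch below groups a handful of actors by function and answers a framing question against that grouping. The actor names come from the examples above; the `Actor` class, the category labels, and the `who` helper are hypothetical and only meant to show the shape of such a map, not the AISafety.com data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Actor:
    name: str
    category: str  # functional category label (hypothetical wording)


# Illustrative entries only: names are taken from the text above,
# category labels are assumptions made for this sketch.
ACTORS = [
    Actor("DeepMind", "research"),
    Actor("Apollo", "research"),
    Actor("Open Philanthropy", "funding"),
    Actor("AI Safety Camp", "education"),
    Actor("SERI Fellowship", "education"),
]


def group_by_category(actors):
    """Return {category: sorted list of actor names}."""
    groups = defaultdict(list)
    for actor in actors:
        groups[actor.category].append(actor.name)
    return {category: sorted(names) for category, names in groups.items()}


def who(category, actors=ACTORS):
    """Answer a framing question such as 'Which organizations fund research?'."""
    return group_by_category(actors).get(category, [])


print(who("funding"))    # ['Open Philanthropy']
print(who("education"))  # ['AI Safety Camp', 'SERI Fellowship']
```

An empty result for a category (for example `who("policy")` here) is itself useful framing information: it marks either a gap in the field or a gap in the map.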

Figure 1: AI Safety Ecosystem Distribution (AISafety.com)

Step 2: Listening to the System

While Step 1 establishes what exists on the surface, Listening to the System delves into how these actors actually interact and influence each other. This deeper examination reveals the dynamics that animate the structural blueprint - how knowledge truly flows between organizations, what enables or inhibits collaboration, and how different approaches to AI safety work together or conflict in practice.

Through studying the AI safety landscape, this step uncovers the lived experiences and perspectives shaping the ecosystem's behavior. It examines how research insights move between technical and policy domains, how funding decisions actually influence research directions, and what cultural or philosophical differences affect field-wide coordination.

For example, how, if at all, do

- Established research institutions like DeepMind and Anthropic interact with emerging initiatives?

- Educational programs like AI Safety Fundamentals feed into the broader ecosystem?

- Organizations like 80,000 Hours connect talent to opportunities?

- Forums like LessWrong facilitate knowledge sharing?

Listening also includes understanding emotional and relational factors:

- Why do certain organizations collaborate effectively while others remain distant?

- How do different approaches to AI safety affect community cohesion?

- What unstated assumptions or beliefs influence field development?

Ultimately, this reveals potential tensions, for example between technical alignment research and policy advocacy, gaps in how knowledge spreads through the community, and varying approaches to talent development. Such understanding becomes essential for later stages of the design process, ensuring that interventions work with, rather than against, the field's existing dynamics.

Step 3: Understanding the System

If Step 1 maps what exists and Step 2 reveals how actors interact, Step 3 seeks to understand why the system behaves as it does and where it might be most effectively influenced.

This deeper analysis reveals causal loops and feedback mechanisms. For instance, we might observe how funding flows create reinforcing cycles:

Organizations with strong research output → attract more funding → enabling more research → leading to greater influence

Or we might see balancing loops:

Media communications → leads to increased public concern about AI safety → leads to hasty policy responses → inadvertently reduces meaningful safety work

The analysis examines variables that change over time and influence the system's behavior. In AI safety, these might include the rate of technical research progress, the availability of qualified researchers, the level of coordination between organizations, or the quality of knowledge transfer between technical and policy domains. Each variable can be enhanced or diminished by others, creating complex webs of influence.
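The distinction between reinforcing and balancing loops can be made concrete with a standard rule from causal loop diagramming: a closed loop with an even number of negative links is reinforcing, and one with an odd number is balancing. The sketch below applies that rule. The link signs are my own reading of the funding example, and the closing link from influence back to research output is an assumption added so that the chain forms a loop.

```python
from math import prod


def loop_polarity(link_signs):
    """Classify a closed causal loop from the signs (+1 or -1) of its links.

    An even number of negative links gives a reinforcing loop;
    an odd number gives a balancing loop.
    """
    if not link_signs or any(sign not in (1, -1) for sign in link_signs):
        raise ValueError("each link sign must be +1 or -1")
    return "reinforcing" if prod(link_signs) > 0 else "balancing"


# Funding cycle from the text. Signs are an interpretation, and the last
# link is an assumed closing link that turns the chain into a loop.
funding_loop = {
    ("research output", "funding"): +1,
    ("funding", "research capacity"): +1,
    ("research capacity", "influence"): +1,
    ("influence", "research output"): +1,  # assumption
}
print(loop_polarity(list(funding_loop.values())))  # reinforcing

# A purely hypothetical loop with one negative link is balancing.
print(loop_polarity([+1, +1, -1]))  # balancing
```

In practice the hard part is agreeing on the links and their signs with stakeholders; the classification itself is mechanical once the loop is drawn.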

Critical to this step is identifying leverage points - places in the system where targeted interventions could catalyze significant impact and strengthen the field as a whole. These might include:

- Strengthening knowledge-sharing infrastructure between research organizations

- Creating better coordination mechanisms between technical and governance work

- Developing more effective ways to translate research insights into policy recommendations

Step 4: Defining the Desired Future

This step involves articulating a comprehensive vision across multiple levels of impact: individual, organizational, and societal. The desired future must be considered through various lenses: economic sustainability of the field, social cohesion among different approaches, psychological well-being of practitioners, and broader societal outcomes.

Individual level (micro)

At the individual level, examining the AI safety landscape suggests several desired outcomes: clear career progression paths for researchers, robust training opportunities, and sustainable funding for independent research. For instance, we might envision a future where talented researchers can smoothly transition from programs like AI Safety Fundamentals into roles at organizations like Anthropic or GovAI, with clear development pathways.

Organizational level (meso)

At the organizational level, the vision encompasses stronger institutional frameworks and ecosystem health. This might include better coordination mechanisms between technical research labs and policy organizations, shared infrastructure for knowledge dissemination, and robust funding channels. Looking at the current landscape, this could mean evolving the relationship between research institutions like DeepMind and policy organizations like the Center for AI Policy, creating more effective ways to translate technical insights into governance recommendations.

Societal level (macro)

At the societal level, the vision extends to broader impacts and systemic change. This includes developing widespread public understanding of AI safety challenges, establishing effective governance frameworks, and creating conditions for responsible AI development. The landscape map shows emerging initiatives in public communication and policy advocacy that could be strengthened and scaled.

Each of these levels interacts with the others. For example, individual career sustainability enables organizational stability, which in turn supports broader societal impact. The value proposition must consider both immediate benefits (like improved research coordination) and longer-term transformational goals (like establishing effective global governance mechanisms).

Envisioning what should exist in the future may be particularly challenging in the AI safety context due to high levels of uncertainty. It could be feasible to imagine multiple target states to work towards. The idea here is to provide a reference point for subsequent steps in the systemic design process, helping ensure that interventions align with the field's ultimate goals rather than just addressing immediate challenges.

Step 5: Exploring the Possibility Space

Unlike previous steps that focus on understanding what exists or defining what should exist, Step 5 specifically explores how to bridge that gap. Exploring the Possibility Space moves from understanding to action by plotting potential interventions not in isolation but as part of an interconnected strategy that addresses multiple aspects of the system simultaneously.

BlueDot’s four-team structure (strategy, training, placements, communications) represents one such exploration of how different interventions might work together, with each team addressing different dimensions of the system. This structured approach to intervention creates reinforcing effects - for instance, when strategic insights inform training programs, which then feed talent into well-matched placements, all supported by effective communication channels.

However, Exploring the Possibility Space suggests opportunities to expand and refine this approach. For example, additional interventions might be needed in areas like rules/regulations or balancing functions that may not be fully covered by the current proposed team structure. This framework also helps identify how each team's work could be enhanced through more deliberate attention to systems-related aspects like delays, information flows, and self-organization mechanisms within their respective domains.

Non-exhaustive examples

| Systems-related aspects | Potential interventions |
|---|---|
| Goals | Creating shared metrics for field progress and impact |
| Self-organization | Developing shared research agendas, collaborative funding models |
| Information flows | Improving knowledge transfer, feedback loops between research groups |
| Digital structures | Building better collaboration platforms |
| Delays | Streamlining funding processes |

This systematic exploration of possibilities helps ensure that interventions are both comprehensive in scope and mutually reinforcing in practice, creating a more robust bridge between the current state of the field and its envisioned future.

Step 6: Planning the Change Process

Whereas previous steps focus on understanding the system or exploring possibilities, Planning the Change Process involves creating a concrete roadmap for change, detailing how different elements of the field need to evolve over time and in relation to each other to achieve the desired transformation. This means creating a comprehensive theory of systems change that maps interventions across multiple dimensions (e.g., societal, operational, financial) and time horizons. Let's explore this through the structured framework of inputs/activities → outputs → outcomes → impacts → strategic impact:

Short Term (1 year): The societal layer might begin with activities like AI Safety Fundamentals' educational programs and BlueDot's coordination efforts as inputs, producing outputs like well-trained professionals and improved field coordination mechanisms. At the operational level, organizations like Constellation might focus on building basic infrastructure for knowledge sharing and collaboration.

Mid Term: These initial activities should lead to concrete outcomes: the educational programs creating a reliable talent pipeline, coordination mechanisms enabling better research collaboration, and infrastructure supporting more efficient knowledge transfer. The socioeconomic layer might see emerging career pathways and professional networks taking shape, while the financial layer could show more diversified funding models developing through organizations like Longview and SFF (Survival and Flourishing Fund).

Long Term (Strategic Impact): The sustaining purposes begin to manifest - a robust, self-reinforcing field with clear career paths, effective coordination mechanisms, and stable funding structures. This builds toward broader impacts like improved AI safety research quality and better integration of technical insights into policy. The ultimate strategic impact would be a mature field effectively addressing AI safety challenges through coordinated action.

Throughout this progression the model must account for:

- Strategic Assumptions: Such as beliefs about AI development timelines and the effectiveness of different safety approaches

- Expected Risks: Including coordination failures, resource constraints, or misalignment between technical and policy efforts

- Tail Risks: Potentially including unexpected technological breakthroughs or major policy shifts
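One way to keep such a roadmap honest is to record, per layer, which stages of the inputs/activities → outputs → outcomes → impacts → strategic impact chain have actually been filled in. The sketch below is a minimal illustration of that idea; the `TheoryOfChange` class and its method names are hypothetical, and the sample statements are paraphrased from the short- and mid-term descriptions above.

```python
from dataclasses import dataclass, field

# Stage names follow the chain described in the text.
STAGES = ("inputs/activities", "outputs", "outcomes", "impacts", "strategic impact")


@dataclass
class TheoryOfChange:
    layer: str  # e.g. "societal", "operational", "financial"
    entries: dict = field(default_factory=dict)  # stage -> list of statements

    def add(self, stage, statement):
        """Attach a statement to one stage of the chain."""
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        self.entries.setdefault(stage, []).append(statement)

    def missing_stages(self):
        """Stages with no statements yet, in chain order."""
        return [stage for stage in STAGES if not self.entries.get(stage)]


societal = TheoryOfChange(layer="societal")
societal.add("inputs/activities", "AI Safety Fundamentals educational programs")
societal.add("outputs", "well-trained professionals")
societal.add("outcomes", "reliable talent pipeline")

print(societal.missing_stages())  # ['impacts', 'strategic impact']
```

Strategic assumptions, expected risks, and tail risks could be attached to each stage in the same way, so that every link in the chain carries the beliefs it depends on.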

Although outside the scope of this paper, it may be worth considering how Step 6 relates to Bridgespan’s field-building model, which consists of five observable success characteristics (Knowledge base, Actors, Field-level agenda, Infrastructure, Resources) across three phases (Emerging → Forming → Evolving & Sustaining).

Step 7: Fostering the Transition

Fostering the Transition in AI safety field-building means planning how interventions evolve from initial pilots to field-wide adoption. This multi-horizon approach acknowledges that transformation doesn't happen all at once: each transition phase builds on previous successes while remaining flexible enough to adapt to changing circumstances. It requires careful orchestration across three distinct phases:

1. Incubating phase: BlueDot's strategy team can help identify and support promising pilot initiatives. Rather than running all programs themselves, they work to understand where different organizations' efforts fit into the broader ecosystem. For instance, while ARENA focuses on ML engineering upskilling and SERI develops research fellowships, BlueDot's role is to help these organizations understand how their initiatives contribute to field-wide development and where they might connect with other efforts.

2. Connecting phase: BlueDot's placement and communications teams serve as crucial bridges. They help connect successful pilot programs with potential adopters and supporters across the ecosystem. For example, they might help the Center for AI Safety identify policy organizations that could benefit from their technical insights, or help Future of Life Institute target their outreach more effectively. BlueDot's role here isn't to control these connections but to facilitate them and help organizations see opportunities for collaboration they might otherwise miss.

3. Scaling Up phase: BlueDot's four-team structure (strategy, training, placements, communications) works to identify successful practices and help spread them across the field. Rather than trying to standardize everything themselves, they help organizations like the AI Safety Communications Centre and the Center for Long-Term Resilience understand how their work can become field infrastructure. Their training team can help spread best practices, while their communications team helps build field-wide awareness of what's working.

Throughout this process, BlueDot acts as a coordinator and facilitator rather than a central authority. Their value comes from their bird's-eye view of the field and their ability to help different organizations understand how their work fits into the broader transition. This helps ensure that the field's development is coordinated without being controlled, enabled without being owned - allowing for both structured evolution and organic growth.

3. Discussion

The following analysis examines the theoretical and practical contributions and challenges of applying SyDFAIS in AI safety field-building.

Theoretical Considerations

The theoretical foundation of SyDFAIS represents a novel integration of Systemic Design principles with AI safety field-building needs.

Contributions:

- Bridges macro-level understanding with micro-level interventions

- Provides conceptual framework for handling complex, multi-stakeholder dynamics

- Enables systematic approach to emergent properties in AI safety field development

Challenges:

- Reconciling epistemological tensions between different knowledge systems (e.g. systems and design thinking) and approaches to safety (e.g. technical and governance)

- Balancing structure and rigor with fluidity and agility

- Difficulty in testing theoretical assumptions with limited precedents for comparison

Practical Considerations

SyDFAIS lends itself to practical application even with limited expertise in Systemic Design, although translating theory into action is not without challenges.

Contributions:

- Provides a holistic, operational framework for AI safety field-building

- Offers distinct yet interconnected pathways for field-building activities

- Provides concrete tools for ideation, planning, and implementation

- Enables responsive adjustment, learning, and iterative improvement

Challenges:

- Limited availability of expertise in Systemic Design

- Time investment required for proper implementation

- Competing priorities for funding allocation

- Lacks clearly developed systems-level success metrics

Implementation Considerations for BlueDot Impact

Drawing from both theoretical and practical insights, three key priorities emerge for BlueDot Impact's immediate consideration:

Invest in systems design expertise

- Focus initially on upskilling the strategy team through targeted training programs

- Partner with systems change consultants for periodic reviews and guidance

- Develop shared tools and frameworks that enable a consistent approach across teams

Establish cross-team coordination mechanisms

- Create regular touchpoints between strategy, training, placements, and communications teams

- Develop shared tools and frameworks that enable a consistent approach across teams

- Implement quarterly review sessions to assess and adjust coordination effectiveness

Build systematic learning infrastructure

- Design clear processes for capturing insights from each team's field-building activities

- Establish metrics for tracking progress in field-building initiatives

- Create an accessible knowledge base for sharing learnings across the organization

4. Conclusion

The development of the Systemic Design Framework for AI Safety Field-Building (SyDFAIS) represents a potential milestone in the emergence of AI safety field-building. It offers both theoretical foundations and practical frameworks for coordinated action through Systemic Design principles, explored here using BlueDot as a case study. However, future work must address theoretical and practical considerations to adequately support the complex task of building a field that safely navigates the development of transformative AI.

Future Work

- Systems design expertise development

  - Creation of specialized training programs for AI safety field-builders

  - Development of context-specific systemic design tools and methods

  - Research into effective integration of systems and design thinking approaches in AI safety

- Measurement and evaluation frameworks

  - Development of clear success metrics for field-building initiatives

  - Creation of assessment tools for measuring field-wide progress

  - Design of feedback mechanisms for continuous improvement

- Implementation guidelines

  - Creation of detailed implementation playbooks for organizations

  - Development of risk assessment and mitigation strategies

  - Research into effective change management approaches for field-building

Suggested Resources

- Adaptive Management for Complex Change

- How Field Catalysts Galvanize Social Change

- Field Building for Population-Level Change

- Guide to Systems Change

- Systemic Design Toolkit

- Systemic Design Toolkit Guide

- Building a Systems-Oriented Approach to Technology and National Security Policy

- Thinking Big: How to Use Theory of Change for Systems Change

Acknowledgements

This write-up is a result of ~20 hours of research as part of a capstone project submission for BlueDot Impact’s AI Safety Fundamentals Technical course. Special thanks to Adam Jones for contextual stepping stones, Lovkush Agarwal for 13-week-strong conversational bridges, and Hunter Muir for dreaming with me in systems land.

This is a valuable synthesis! The SyDFAIS approach feels like it ties together the big-picture need for AI safety coordination with steps for coordinating organizations. I love how it moves beyond the usual “let’s fix AI safety” rhetoric and acknowledges that this is a multi-faceted problem that requires real collaboration and coordination among different players in the space.

It’s easy to assume we know all the key players or challenges, but your emphasis on mapping actual interactions feels crucial. We can’t just “fund our way” to better coordination; we need to understand who’s doing what, see how these organizations interact dynamically, and how these efforts overlap—or conflict.

One part that really caught my attention was Step 5 (Exploring the Possibility Space). It’s all too common in AI safety to keep rehashing the same interventions—fund more interpretability research, fund more policy fellows—but this step seems to challenge us to look for novel strategies and synergy across different fields. It’s a reminder we can’t just spend our way out of the alignment problem.

It’s easy to wave our hands about “holistic approaches,” but seeing those steps spelled out—Framing, Listening, Understanding, etc.—makes it much more tangible.

A couple of questions for anyone here:

- Have you seen real-world success stories of a structured framework like this that dramatically improved coordination among diverse stakeholders?

- Are there systems-based coordination efforts already happening among orgs within the AI safety space, ones that start from an understanding of the entire system (or at least parts of it)?

Keen to hear different viewpoints and any pushback, especially from those who’ve seen frameworks like this either flop or succeed in other complex domains.

I think this type of thinking and work is useful and important.

It's very surprising (although in some ways not surprising) that this analysis hasn't been done before elsewhere.

Have you searched for previous work on analyzing the AI safety space?

If OpenPhil (and others) are in the process of funding billions of dollars of AI safety work and field building, wouldn't they themselves do some sort of comprehensive analysis or fund / lean on someone else to do it?

Thanks Matt.

Based on limited desktop research and two 1:1s with people from BlueDot Impact and GovAI, the existing analyses are fragmented and not conducted as part of a holistic, systems-based approach. (I could be wrong.)

Examples: What Should AI be Trying to Achieve identifies possible research directions based on interviews with AI safety experts; A Brief Overview of AI Safety Alignment Orgs identifies actor groupings and specific focus areas; the AI Safety Landscape map provides a visual of actor groupings and functions.

Perhaps an improved version of my research would include a complete literature review of such findings, not only to qualify my claim (and that of others I've spoken to) that we lack a holistic approach for both understanding and building the field, but also to use existing efforts as starting points (which I hint at in Application Step 1).

As for Open Phil, your comment spurred me to ask them this question in their most recent grant announcement post!

Happy for you to signpost me to other orgs/specific individuals. I'm keen to turn my research into action.

Some have asked what the impact of applying SyDFAIS could be.

I've given this ~ 2 hours of thought, aligning with current literature on Bridgespan's 5 observable characteristics of field-building across 35+ fields.

Below is an impact assessment table, presenting quantified estimates across 5 key outcome areas, along with underlying assumptions and uncertainties.

https://docs.google.com/document/d/1gm0LJ2nDifUfnQn0T7ZqWbo4RNf42MwEjd7Bc8jq0Gg/edit?tab=t.0

I may develop this into a separate post...

Really great work, Moneer. Thinking this complex thing through from a systems lens is much needed, and I think it should receive support from grantmakers who care about better oversight and transparency of what is happening across the field. After all, it would make work more effective while fostering better conversations between all actors!

I am curious how this will progress and look forward to any updates.

Here is an interactive example of what a framework like SyDFAIS can lead to.

Imagine we had something similar for AI Safety!!