This post is part of WIT's CRAFT sequence. It examines one of the decision theories included in the Portfolio Builder Tool.

Executive Summary

- EA is a movement aimed at “doing the most good”. There are two ways to interpret the good that we are aiming at. On one view, we should attempt to bring about states of the world with the most absolute value. On a second view, we should attempt to make the greatest difference, to perform those actions that bring about the greatest positive change in value.

- A difference-making risk averse (DMRA) agent prefers actions that are very likely to make a difference over actions that have the same expected difference made but with more variance. She is averse to situations where her action does no tangible good or, worse, reduces the amount of value in the world.

- In several key cause prioritization decisions, DMRA’s recommendations differ significantly from those of expected value maximization or risk aversion aimed at absolute value. DMRA is more likely to recommend diversifying one’s giving, donating to global health over existential risk mitigation, and favoring humans over species of uncertain sentience.

- Greaves, et al. (2022) argue that DMRA is inconsistent with pure benevolence because it recommends actions that are stochastically dominated. They speculate that people have DMRA preferences because they desire to add meaning to their lives by making a difference. We consider two ways of defending DMRA against these arguments.

- First, DMRA might be extrinsically justified as a decision procedure for obtaining the greatest absolute good.

- We often have better epistemic access to the difference that our actions will make than the absolute amount of value that will result. Therefore, someone might adopt DMRA as a local strategy for achieving the best absolute good under significant uncertainty.

- The viability of this strategy depends on whether users can safeguard against being stochastically dominated.

- It is unclear whether DMRA is a more effective strategy for achieving the absolute good than other decision procedures.

- Second, difference making might be intrinsically valuable, so it is sensible for agents to be risk averse about the difference that they make. Several common moral intuitions seem to support the claim that making a difference is inherently valuable.

- The desire to add meaning to one’s own life might not be morally weighty. However, someone might care about the difference made by a moral community at large, and it is more plausible that meaning-making is morally weighty when considering such groups.

- Some moral theories place importance on being the actual cause of good, not just a probabilistic promoter of it.

- Changes in value—not just the amount of value—might be morally important. If there is something intrinsically valuable about things getting better, then someone might reasonably prioritize making things better.

1. Introduction

Effective Altruism is a movement aimed at doing the most good. However, there is considerable ambiguity about what it means to “do the most” and what the relevant sense of “good” should be. Here, I will focus on one foundational disagreement about the good that we should be aiming at. A first view says that we should be aiming at the best overall state of the world, the state that contains the most absolute value. A second view says that we should be aiming to make the greatest difference. We should take those actions that will result in the greatest relative value, where this is measured by the difference in value between the state of the world conditional on our action and the state of the world were we to do nothing.

These aims will often overlap, but they can come apart when paired with certain strategies for “doing the most”. Someone focused on the absolute good might try to maximize expected value, or they might be risk averse in that they put more weight on avoiding the worst outcomes.[1] Regardless, someone aimed at the absolute good measures the success of an action via some feature of the overall state of value that results. There are also various strategies for making a difference. Someone who seeks to maximize the expected difference made will behave the same as someone who seeks to maximize expected absolute value. However, someone who is both risk averse and aiming at the greatest actual (as opposed to expected) difference will have preferences that can differ significantly from both expected value maximization and standard (“avoid the worst”) risk aversion.

A difference-making risk averse (DMRA) agent evaluates actions by the difference in value that her action yields, compared to doing nothing. She is risk averse, which means that for some measure of value, X, she prefers a sure-thing value of X = x to a bet that has an expected value of x but with variance or uncertainty (Greaves, et al. 2022). Conjoining these, we get an agent who prefers actions that are very likely to make a difference over actions that have the same expected difference made but with more variance. We will focus on an “avoid the worst” DMRA agent (where this is measured in relative, not absolute terms), who is averse to situations where her action does no tangible good or, worse, reduces the amount of value in the world.

In the CURVE sequence, we showed that DMRA preferences sometimes yield dramatically different recommendations than other prominent decision theories. DMRA also seems like a plausible psychological explanation for why many people have the preferences that they do. This raises the question: should we be risk-averse about the difference that our actions make? The most prominent discussion of DMRA—from Greaves, et al. (2022)—raises some serious problems for the view. They show that DMRA recommends actions that are stochastically dominated and therefore inconsistent with pure benevolence.

My goal here is to explore and evaluate some possible reasons why someone might be difference-making risk averse.[2] First, someone might use DMRA as a local strategy for achieving the absolute good in the face of significant uncertainty about the amount of value in the world. Second, making a difference might have intrinsic moral value which should be considered alongside reasons of benevolence.

2. What DMRA recommends

Before delving into particular DMRA decision theories, we can see, at a more qualitative level, how DMRA differs from both EV maximization and standard “avoid the worst” risk-averse theories. Consider the following cases (the first two are adapted from Greaves, et al. 2022; the third is from Clatterbuck 2023):

Case 1: Diversification

You are weighing the following three options for donating to charity. First, you could give all of the money to the Against Malaria Foundation (AMF). Second, you could give all of the money to Unlimit Health. Third, you could diversify, giving half to AMF and half to Unlimit. Suppose that for each charity, there’s a .5 probability that your donation will be successful and that these probabilities are independent (your AMF donation failing doesn’t make it any more likely that your Unlimit donation will fail and vice versa).

Giving to a single cause has a higher EV than diversifying, but diversification has a higher probability of making a difference. If you give to a single cause, your donation has a .5 chance of succeeding, but if you diversify, there is a .75 chance that at least one of your donations succeeds. Therefore, DMRA recommends diversifying.[3]
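The .75 figure follows from independence: a diversified gift makes no difference only if both donations fail. Here is a minimal Python sketch of that arithmetic, using only the probabilities stated in the case (the function name is illustrative, not from the original post):

```python
def prob_at_least_one_success(success_probs):
    """Probability that at least one of several independent donations succeeds."""
    prob_all_fail = 1.0
    for p in success_probs:
        prob_all_fail *= 1.0 - p
    return 1.0 - prob_all_fail


# Probabilities taken from Case 1: each charity succeeds with probability .5.
single = prob_at_least_one_success([0.5])
diversified = prob_at_least_one_success([0.5, 0.5])
print(single, diversified)  # 0.5 0.75
```

The sketch says nothing about how much good each successful donation does. It only tracks the chance of making some difference, which is the quantity the DMRA agent weighs heavily.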

Case 2: Cause prioritization

You are weighing a choice between giving to existential risk mitigation or AMF. The probability that your donation will prevent a catastrophic event is very small (say, .00000001) but, if it succeeds, it will save an enormous number of lives. The probability that your donation to AMF will succeed, having positive effects for some much smaller number of people, is .5.

If the potential payoffs of avoiding existential risk are high enough, giving to existential risk prevention will have higher EV than giving to AMF.[4] Risk aversion about the (absolute) worst-case scenarios will favor x-risk even more strongly. Giving to AMF has a higher probability of making a difference, so DMRA will recommend it.

Case 3: Individuals of uncertain moral status

You are weighing a choice between an action to improve conditions at shrimp farms and giving to AMF. You believe that the probability that shrimp are sentient is .1 and that they have a small welfare range if sentient. Nevertheless, because your action could affect hundreds of millions of shrimp, giving to shrimp has a higher EV than giving to AMF. Risk aversion about the (absolute) worst-case scenarios will favor shrimp even more strongly. Giving to humans has a higher probability of making a difference, so DMRA will recommend it.

In each of cases 1-3, many people have preferences in line with the recommendations of DMRA and against the recommendations of EV maximization or standard “avoid the worst” risk aversion.
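To see how the two rankings come apart in Cases 2 and 3, here is a rough Python sketch. The probabilities are the ones given in the cases. Every payoff magnitude is a made-up placeholder, chosen only so that the long shots have the higher EV, as the cases stipulate. For simplicity it also assumes that the shrimp intervention makes a difference exactly when shrimp are sentient.

```python
# Each option: (probability of making any difference, value if it does).
# Probabilities come from Cases 2 and 3; payoffs are hypothetical placeholders.
options = {
    "x-risk mitigation": (1e-8, 1e12),
    "shrimp welfare": (0.1, 1e4),
    "AMF": (0.5, 100.0),
}


def expected_value(option):
    prob, payoff = options[option]
    return prob * payoff


def prob_of_difference(option):
    return options[option][0]


ranked_by_ev = sorted(options, key=expected_value, reverse=True)
ranked_by_prob = sorted(options, key=prob_of_difference, reverse=True)

print("By expected value:", ranked_by_ev)
print("By probability of making a difference:", ranked_by_prob)
```

Ranking by probability alone is cruder than any worked-out DMRA theory, which also gives some weight to the size of the difference. It is used here only to show the direction in which DMRA pulls.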

3. DMRA decision theories

We can make these attitudes more precise by developing DMRA decision theories (Duffy 2023; Clatterbuck 2023). A difference-making risk-averse agent will be sensitive to the probability that her action makes a difference. She is also sensitive to the amount of difference that could be made, though (unlike the EV maximizer) decreases in the probability of difference-making do not cleanly trade off with increases in possible payoffs. Thus, she seeks some balance between maximizing utility and minimizing futility.

A DMRA decision theory would evaluate actions by incorporating both the probability and magnitude of differences that could result from them. One way to develop such a theory is to start with existing risk-averse decision theories that assign extra decision weight to the worst-case possible outcomes of an action, the outcomes that result in the lowest absolute value. These can be adapted for DMRA purposes by measuring the value of outcomes in terms of the difference they yield compared to doing nothing. Equivalently, one can think of the total payoff in some state of the world as the sum of the amount of background value in that state (what would obtain had you not intervened) and the direct payoff of your action (what your intervention contributed). To capture difference-making, we assume that the background state of the world is 0 and only focus on the direct payoffs. Either way, more weight is put on actions that make things worse (or do nothing, or do little) and less on actions that make a large, positive difference.[5]
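One way to make this concrete is a risk-weighted expected utility calculation in the style of Buchak (2013), applied to differences rather than absolute values. The sketch below is an illustration under stated assumptions, not the implementation used in the Portfolio Builder Tool. The function name, the example bets, and the choice of r(p) = p^2 as the risk function are all illustrative.

```python
def risk_weighted_value(lottery, risk_fn):
    """Risk-weighted expected utility of a lottery.

    lottery: list of (probability, value) pairs; probabilities sum to 1.
    risk_fn: maps the probability of doing at least this well to a weight.
    For DMRA, each value is the difference made relative to doing nothing.
    """
    ranked = sorted(lottery, key=lambda pv: pv[1])  # worst outcome first
    total = ranked[0][1]  # the worst outcome is guaranteed
    prob_at_least = 1.0
    for (p_prev, v_prev), (_, v_next) in zip(ranked, ranked[1:]):
        prob_at_least -= p_prev
        # Each improvement counts only as much as its weighted probability.
        total += risk_fn(prob_at_least) * (v_next - v_prev)
    return total


def neutral(p):
    return p  # recovers ordinary expected value


def averse(p):
    return p ** 2  # illustrative risk-averse weighting


# Hypothetical differences made: a sure +10 versus a 50% chance of +24.
sure_thing = [(1.0, 10.0)]
gamble = [(0.5, 0.0), (0.5, 24.0)]

print(risk_weighted_value(gamble, neutral))      # 12.0
print(risk_weighted_value(gamble, averse))       # 6.0
print(risk_weighted_value(sure_thing, averse))   # 10.0
```

With the neutral weighting the gamble wins (12 against 10). With the risk-averse weighting the sure thing wins (10 against 6), even though the gamble makes the larger expected difference.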

4. DMRA as a strategy for achieving the absolute good

One reason that people might be difference-making risk averse is that they ultimately care about achieving the greatest absolute good and use difference-making as a local strategy for achieving it. Even if they believe that the criterion of rationality makes reference to the absolute good, they may hold that the correct decision procedure for limited agents will involve the difference made.

The reason is that we typically have much better epistemic access to the difference that our actions will make than we do to the overall amount of value they will produce. Recall that the absolute good that is yielded by an action will be the sum of the background value that would obtain independent of one’s action and the change in value yielded by one’s action. If I give to AMF, I can make an educated guess about how much value I might add to the world. But I have no idea how much total value the world will contain after my action.

The thought is: “I don’t know what the best thing is to do from the point of view of the whole universe. What I can do is take actions that are probably going to add value and avoid actions that might make things worse.” This is somewhat analogous to a utilitarian argument for giving special preference to one’s nearest and dearest as a local strategy for achieving the most impartial good (Jackson 1991). Because we have special epistemological (and material) access to our own families and communities, we are better able to satisfy their needs. Therefore, a system in which each individual is tasked with caring for their own local sphere of concern can sometimes achieve more overall good than one in which individuals distribute care impartially across all people. When we have more knowledge about local states of affairs than global ones, perhaps there is a reason for maximizing locally.

Of course, local strategies are notoriously unreliable.[6] This defense of DMRA will fail if maximizing local difference-making leads to worse overall states of the world. Greaves, et al. (2022) present a series of formidable arguments for why DMRA will cause agents to prefer actions that are inconsistent with pure benevolence (given the assumption that a purely benevolent agent prefers action A to B if and only if A better promotes the good). In particular, Greaves, et al. show that DMRA decision theories can recommend actions that are stochastically dominated.[7]

An action B stochastically dominates action A iff:

a. For all outcomes o in O, the probability that the outcome is at least as good as o is at least as high on B as it is on A; and

b. For some o in O, the probability that the outcome is at least as good as o is strictly higher on B than it is on A.

A preference ordering respects stochastic dominance iff for any B that stochastically dominates A, B is preferred to A. It is extremely plausible that respecting stochastic dominance is a necessary condition for anyone whose preferences track the betterness relation, conceived of in terms of absolute value.[8]

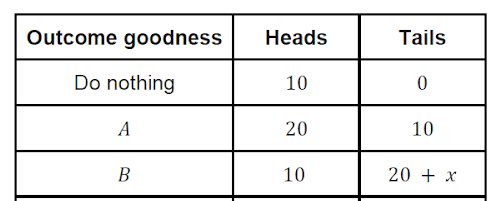

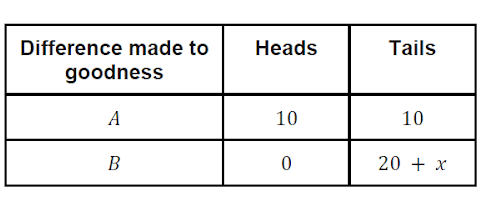

Here is a simple case from Greaves et al. in which DMRA violates stochastic dominance. Consider the following bets on the outcome of a fair coin:

The possible values of o are: 10, 20, 20+x. The probability of getting something at least as good as 10 or 20 is equal for A and B. The probability of getting something at least as good as 20+x is higher on B than A. Therefore, B stochastically dominates A. If you are aimed at achieving the most absolute good, B is clearly the better choice.

We can also measure the outcomes of bets A and B in terms of the difference in value that they yield:

Bet A will certainly make a +10 difference. B yields a higher expected difference but with more variance. For sufficiently small values of x (how small depends on the extent of their risk aversion[9]), a DMRA agent will prefer A to B. However, as we saw above, B stochastically dominates A.
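The example can be checked mechanically. The payoff tables themselves are in the images, so the numbers below are a reconstruction and should be treated as an assumption: background value 10 on heads and 0 on tails, A adding +10 either way, and B adding nothing on heads and 20+x on tails. This matches the outcome values (10, 20, 20+x) and the certain +10 difference described in the text. The risk function r(p) = p^2 is the one mentioned in footnote 9. All function names are illustrative.

```python
def prob_at_least(lottery, threshold):
    """P(outcome >= threshold) for a list of (probability, value) pairs."""
    return sum(p for p, v in lottery if v >= threshold)


def stochastically_dominates(b, a):
    """True if b is never less likely than a to reach any outcome level,
    and strictly more likely to reach at least one."""
    thresholds = {v for _, v in a} | {v for _, v in b}
    never_worse = all(prob_at_least(b, t) >= prob_at_least(a, t) for t in thresholds)
    strictly_better = any(prob_at_least(b, t) > prob_at_least(a, t) for t in thresholds)
    return never_worse and strictly_better


def risk_weighted_value(lottery, risk_fn):
    """Buchak-style risk-weighted expected utility (worst outcome first)."""
    ranked = sorted(lottery, key=lambda pv: pv[1])
    total = ranked[0][1]
    p_at_least = 1.0
    for (p_prev, v_prev), (_, v_next) in zip(ranked, ranked[1:]):
        p_at_least -= p_prev
        total += risk_fn(p_at_least) * (v_next - v_prev)
    return total


def bets(x):
    """Assumed payoffs: background is 10 on heads and 0 on tails."""
    absolute_a = [(0.5, 20), (0.5, 10)]       # heads, tails
    absolute_b = [(0.5, 10), (0.5, 20 + x)]
    difference_a = [(0.5, 10), (0.5, 10)]
    difference_b = [(0.5, 0), (0.5, 20 + x)]
    return absolute_a, absolute_b, difference_a, difference_b


def square(p):
    return p ** 2


for x in (8, 30):
    abs_a, abs_b, diff_a, diff_b = bets(x)
    print(
        x,
        stochastically_dominates(abs_b, abs_a),
        risk_weighted_value(diff_a, square),
        risk_weighted_value(diff_b, square),
    )
```

Under these assumptions B dominates A in absolute terms for both values of x. The risk-weighted difference made favors A when x = 8 and B when x = 30, which is consistent with the x < 20 threshold given in footnote 9.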

This example demonstrates how DMRA can lead to decisions contrary to the goal of achieving the best absolute good. Depending on your tolerance of pluralism and flexibility about choice of decision theory, this may not be fatal. It may be possible to safeguard against the bad cases and only use DMRA when (at a minimum) it doesn’t violate stochastic dominance. If, on the other hand, DMRA systematically violates stochastic dominance, then perhaps it is not possible—or worthwhile—to fix its flaws, especially when there are other, well-established decision theories to choose from.[10]

There are a few strategies available for safeguarding against being stochastically dominated. For example, if the background is independent of action payoffs, then if A’s worst-case outcome is always better than B’s worst-case outcome, A will not be stochastically dominated by B. In the above bets, the background is not independent of action payoffs. The background value of the state in which B pays off (tails) is worse than the state in which it doesn’t (heads), and the amount that B pays off more than compensates for that loss. This ensures that no matter what happens, betting on B is going to be pretty good. If you know that these conditions don’t hold, then perhaps you can confidently act on your DMRA preferences while staying within the general dictates of benevolence.[11] Diversifying, giving to malaria over x-risk, and helping humans over shrimp probably aren’t stochastically dominated.

Nevertheless, by ignoring the background state of the world, DMRA throws out information that matters when assessing how to act benevolently. This problem is especially acute if you are risk averse. The amount of value we achieve will be a combination of the background state of the world plus what we add to it. Standard models of risk aversion—which place extra decision weight on avoiding the worst case outcomes—are highly sensitive to background values:

- When the stakes of your action are small relative to the background, you should be less risk averse. A millionaire should be less risk averse about a $10 bet than should someone with $100 to their name.

- When the background has a high probability of being pretty bad, then you should also be less risk averse.[12] Suppose you need at least $1,000 by tomorrow to pay off your bookie and save your kneecaps. If you already have $950, you should take safe bets that give you a good chance of getting $50 without putting the rest of your money at risk. But if you have $100, you should probably take some longshot bets on a jackpot that could get you over the hump. Desperate times call for drastic action!
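The bookie example can be put in numbers. In the sketch below the only goal is to hold at least $1,000 tomorrow. The two bets and their odds are hypothetical, chosen purely to illustrate how the same pair of bets ranks differently depending on the background wealth.

```python
def prob_reaching_target(wealth, bet, target=1000):
    """Probability that wealth plus the bet's net outcome meets the target."""
    return sum(p for p, net in bet if wealth + net >= target)


# Hypothetical bets as (probability, net change in dollars) pairs.
safe_bet = [(0.8, 50), (0.2, -50)]
long_shot = [(0.05, 1000), (0.95, -100)]

for wealth in (950, 100):
    print(
        wealth,
        prob_reaching_target(wealth, safe_bet),
        prob_reaching_target(wealth, long_shot),
    )
```

Starting from $950, the safe bet gives the better chance of reaching the target. Starting from $100, the safe bet cannot reach it at all, so the long shot is the only bet with any chance of success. An agent who ignores the background cannot register this difference.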

Because it ignores the background, DMRA is thus a very strange kind of risk aversion. Nevertheless, there are difficult questions about what you ought to do if you are risk averse and you don’t know what the background state is.[13]

The question of whether difference-making risk aversion is an effective strategy for achieving overall benevolence is a complicated one, for which the answer is probably “not always, maybe not very often.” The question of whether it’s the best that we can do in light of significant uncertainty about the background value of the world is even trickier. Therefore, if there’s a persuasive case to be made for DMRA, it will identify something that is intrinsically valuable about making a difference.

5. The inherent value of making a difference

If something is valuable, then it often makes sense to be risk averse about it, to be concerned with securing some value instead of gambling for a greater amount. If making a difference is of inherent moral value—independent of the dictates of pure benevolence—then the fact that an action has a high chance of making a difference will contribute to its moral worth and being difference-making risk-averse will be a strategy for securing it.

My goal here is to present some reasons why making a difference might be inherently morally valuable, some common moral intuitions that seem to tell in favor of it. I don’t take any of these to be knockdown arguments for DMRA, especially not in the preliminary sketches here. Instead, I intend these to be evocative suggestions for fruitful avenues that a moral defense might take. For someone who does not share these moral intuitions, the following might constitute an error theory for why many people do have DMRA preferences.

5.1. Meaning-making

Greaves, et al. suggest that difference-making risk aversion might stem from a desire to add meaning to one’s life:

A natural diagnosis is that what is going on in all these cases is a sort of pseudo-benevolence, rather than the genuine article. Perhaps, for instance, what is really driving the preferences in question is a desire to render one’s own life in some sense more meaningful, rather than a direct desire that the world be better. It might then not be surprising that the corresponding behaviour is in tension with benevolence, since a desire for meaningfulness is not straightforwardly a matter of benevolence. (22)

It’s unclear how to evaluate whether the desire to enhance the meaning of one’s own life is morally weighty. On one reading, it sounds like it is merely a self-serving desire to be the hero, to achieve something of narrative rather than altruistic value. On another, it just sounds like a restatement of the desire to do something valuable, where this could be thoroughly altruistic. On the latter reading, the question of meaning-making will dissolve into the question of whether making a difference is inherently valuable.

Greaves, et al. argue that this is an agent-relative rather than an agent-neutral way of assessing the value of an action. However, it seems plausible that people’s DMRA preferences persist even when evaluating actions by other people. For example, imagine that Jane, a major player in philanthropic giving, withdraws millions of dollars of funding for programs that decrease (human) child malnutrition and redirects them toward improving conditions at insect farms. Her best estimate of the probability that the insects are sentient is .01. However, she judges that the vast number of insect lives affected outweighs the sure thing of helping a smaller number of humans. One might reasonably protest: but you are almost certain that your money is doing absolutely nothing! There is only a 1% chance that the world is better as a result of your action and a 99% chance that you have thrown away millions of dollars. On the other hand, if you had given to child malnutrition, you could be certain that sentient creatures’ lives improved as a result of your action. That is a massively risky thing that you did!

This attitude is a kind of DMRA, but it is not merely an aversion to one’s own actions failing to make a difference. It also doesn’t seem to stem from the concern that Jane’s life will lack meaning as a result of her actions. While this attitude concerns the relation between actions and their consequences—not merely the absolute, total value of the consequences—it is not agent-relative in the way that Greaves, et al. envision.

Whatever the merits of the desire to add meaning to one’s own life, it seems more plausible that meaning-making matters when the agent in question is humanity or a moral community more broadly. Consider a scenario in which all of humanity’s resources are devoted to helping nonsentient shrimp or staving off an AI apocalypse that was not going to occur. This is a tragic waste of resources that could have been used to actually do good. But it also seems like a waste of things that add meaning to the human project, things like goodwill, communal progress, or knowledge.

5.2. Being an actual cause

Even when we focus on individual moral agents, many commonly held moral intuitions seem to support the claim that there is something inherently morally valuable about being the actual cause of realized differences. There are two components to this claim.

First, people often do not judge actions purely on the basis of their outcomes; the degree of agential involvement in bringing about that outcome is relevant to the moral worth of that action (Wedgwood 2009). For example, many people hold that there is a distinction between actively bringing about some outcome (e.g., killing) and failing to act to prevent that outcome (e.g., letting die). Recall our working example of the choice between actions A and B. Pure benevolence seems to recommend action B, since that has a higher probability of getting you better outcomes. If you choose B and heads comes up, the background outcome is good. However, your action did not bring about that goodness. Therefore, if you have the intuition that background payoffs (to which you did not causally contribute) are less relevant to the moral worth of your action than the things for which you are causally responsible, then this will count in favor of option A.

Second, in the moral domain, many people have intuitions that distinguish between actions that probabilistically promoted some outcome and those that were actual causes of that outcome. The most prominent examples come from Nagel’s (1979) discussion of moral luck. Two agents can raise the probability of an outcome E to the same level, but their actions are deemed to have different moral worth depending on whether E actually occurred. For example, suppose Sam and Pam both drive drunk, thus raising the probability that they will kill someone to, say, .1. Luckily, Sam drives home without incident. A child runs into the street in front of Pam’s car, and with her reflexes slowed, she is not able to swerve out of the way. Pam’s action is judged more severely than Sam’s even though Sam also would have killed the child had he been in Pam’s position.

Similar intuitions hold in happier cases. Though it is morally laudable to take actions that raise the probability of a good outcome, extra moral worth is accorded to those actions that actually lead to a good outcome. As Broome (1987) puts it, “the fact that something good might have happened is not itself something good that did happen.”

Pairing these two general intuitions, we get a view on which part of the moral worth of an action derives from what actually happened as a result of purposive activity to bring about those consequences. If you hold these intuitions, you’ll likely believe that the total moral worth of your life will, in part, consist in whether your actions actually made things better or worse. It would make sense to be risk averse about the difference you make, being careful to avoid actions that have a considerable chance of being harmful or futile.

The moral intuitions that I have detailed here are as controversial as they are common. Indeed, both are commonly rejected by utilitarians (the first more than the second). Justifying them, and turning them into a full argument for DMRA, would require much more work. At a minimum, however, these commitments might explain why many people have DMRA preferences for reasons that are at least potentially more morally weighty than the desire for meaning.

5.3. Change for the better is valuable

The meaning-making and being-an-actual-cause arguments located the moral value of difference-making in the relationship between an agent and the consequences of action. A final potential justification for DMRA may be more palatable to consequentialists because it locates this value in the consequences themselves.

Here’s an analogy that will be familiar to utilitarians. If hedonism is true, then the value of a life is to be measured in terms of the happiness that it contains. A natural suggestion is that the total amount of happiness is all that matters. However, suppose that Tim and Jim lead lives with the exact same amount of total happiness. Tim’s life begins poorly and unhappily. He is depressed and has few close relationships. However, he perseveres and his life steadily gets better. His later years are full of passions, joy, and friendship. Jim’s life starts very happily. However, over time, he loses his friends and grows depressed, spending his final years in misery. To many, Tim’s life seems better than Jim’s, which suggests that total happiness is not all that matters. The shape of a life—the distribution of happiness over time—also seems to matter. It’s not necessarily that something other than happiness matters, it’s that some higher-order feature of its distribution also matters.[14]

The lesson we might draw from the shape of a life is that there is something intrinsically valuable about things getting better. According to a relative value thesis, the value of a state Si is not wholly determined by its absolute value. Instead, the direction and magnitude of change from some prior S0 to Si is relevant to the value of Si. If that’s correct, then a purely benevolent agent should be sensitive to the direction of change that their actions will yield, not just the absolute value that will result. If she is risk averse, then she will prefer actions that are likely to make things better.

6. Conclusion

I’ve surveyed a few reasons why someone might put credence in DMRA as a decision principle. First, under conditions of significant uncertainty about the background amount of value in the world, one might (carefully) use DMRA as a local strategy for achieving the best overall good. Second, one might find it morally important to be the actual cause of good outcomes or assign extra importance to increases in value.

Even if one assigns some credence to DMRA, there are still many open questions about how the view ought to be formulated and how it should be weighed against other decision theories, including:

- Who is the relevant agent? Should I be DMRA about the effects of my actions, the actions of a moral community, or the actions of humanity as a whole? Being DMRA with respect to my own individual actions will cause me to give to safe bets. However, being DMRA for a wider community or the long run will leave more room for taking risky bets.

- Should I be DMRA about each individual action or a sequence of actions over the long run?[15]

- What counts as making a difference? Is raising the objective chance of a good outcome sufficient? Or do I have to be the actual cause of a good outcome? This matters a lot for DMRA evaluations of various causes. Projects to mitigate existential risk might have a low chance of actually averting a catastrophe but might nevertheless successfully lower the chances of one. In comparison, if shrimp are not sentient, then projects to benefit them don’t actually raise the objective chances of a good outcome.

- How should we manage meta-normative uncertainty over decision theories, especially in those cases where competing theories render very different results about what to do? Some methods of incorporating uncertainty might lead us toward diversification: investing in some projects that are sure to make a difference and some long-shot causes. On other methods, the potential EV gains of long-shot causes might swamp any DMRA considerations.

Acknowledgments

This post was written by Hayley Clatterbuck in her capacity as a researcher on the Worldview Investigation Team at Rethink Priorities. Thanks to Arvo Muñoz Morán, Bob Fischer, David Moss, Derek Shiller, Hilary Greaves, and an audience at the Global Priorities Institute for helpful feedback.

- ^

Alternatively, they might focus only on those states that are sufficiently probable or choose those actions that have the greatest probability of achieving some absolutely good outcome.

- ^

I will be focused on reasons that would justify being DMRA and will not focus on psychological investigations into whether and why people are DMRA. There is a vast literature that bears on this topic, much of it stemming from Kahneman & Tversky (1979). For example, they find that people tend to evaluate bets in terms of losses and gains from a fixed reference point rather than the overall net money that would result, that “the carriers of value or utility are changes of wealth, rather than final asset positions that include current wealth” (Kahneman & Tversky 1979, 273).

- ^

These recommendations may vary depending on the precise DMRA model that one uses. One can weaken these conclusions to “DMRA recommends this action moreso than EV maximization does”.

- ^

- ^

Duffy (2023) and Clatterbuck (2023) adapt two existing risk-weighted decision theories for DMRA purposes. Risk-Weighted Expected Utility theory (Buchak 2013) and its difference-making extension, DMRAEV, discount better outcomes of an action as a function of their probability. Weighted-Linear Utility theory (Bottomley and Williamson 2023) discounts better outcomes as a function of their utility; its difference-making extension sets the background utility of the world to 0 to isolate just the value added by the action itself. Duffy (2023) applies these theories to a wide array of cause prioritization decisions.

- ^

For example, if one’s nearest and dearest are already well off or if it takes more resources to help them, then it will be more effective to direct one’s actions at those who are far away.

- ^

They also show that DMRA can lead to collective defeat. For simplicity’s sake, I will only focus on the stochastic dominance argument here, though some of the possible responses I give on behalf of DMRA might apply there too.

- ^

It has also been suggested as a requirement on rational choice (Tarsney 2020).

- ^

For DMREV with r = p², the risk-averse agent will prefer A to B whenever x < 20.
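The threshold can be checked with a short derivation. The payoffs here are an assumption, reconstructed to be consistent with the figures in these footnotes rather than quoted from the main text: a fair coin, A adding 10 whichever way it lands, and B adding 0 on heads and 20 + x on tails. Writing V_r for the risk-weighted value of the difference made, with r(p) = p^2:

```latex
\begin{align*}
V_r(A) &= 10 \\
V_r(B) &= 0 + r\!\left(\tfrac{1}{2}\right)(20 + x) = \tfrac{1}{4}(20 + x) \\
V_r(A) > V_r(B) &\iff 10 > \tfrac{1}{4}(20 + x) \iff x < 20
\end{align*}
```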

- ^

Tarsney (2020) argues that conditional on uncertainty about the amount of background value, if A has a higher expected utility than B, then A will almost always stochastically dominate B. Suppose you are highly uncertain about the background state of the world: you assign some non-zero probability to it being -1 billion, 5, +1 trillion, etc. As the background value increases in magnitude (in either the positive or the negative direction) compared to the size of the bet, the background will contribute much more to the probability that your resultant payoff value (the background plus the value added by your action) will be larger than some x. As we consider background states that get more negative, a smaller sure-thing bet will move your likely payoff closer to x in every background, but it has a very small probability of raising it beyond x. A long-shot bet for a huge possible payoff will succeed in fewer cases, but it has a much higher probability of raising the value above x if it does. As Tarsney demonstrates, unless the probability of getting lower (negative) payoffs falls off very rapidly or the probability of success of the long shot is fanatically minuscule, the bet with the higher EV will stochastically dominate the one with the lower EV.

A problem here is that in many of Tarsney’s cases, neither bet stochastically dominates the other conditional on any precise background value, but B stochastically dominates A conditional on uncertainty over background values. You might think that there is something wrong with your preferences if they lead to this conclusion. On the other hand, you might think that there is something wrong with conditioning on uncertainty in this way.

- ^

In the case at hand, if the background values of heads and tails are VB(H) and VB(T), respectively, then B stochastically dominates A when VB(T) + 10 ≤ VB(H) ≤ VB(T) + (10 + x).
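The inequality can be explored numerically with a small first-order stochastic dominance check. This is a sketch under stated assumptions: the payoffs (a fair coin, A adding 10 in both states, B adding 0 on heads and 20 + x on tails) are a hypothetical reconstruction chosen to be consistent with the inequality above, and the names `stochastically_dominates` and `totals` are illustrative.

```python
def stochastically_dominates(b, a):
    """True if gamble b first-order stochastically dominates gamble a.

    Each gamble is a list of (probability, total_value) pairs. b dominates a
    if, for every threshold, b is at least as likely to reach it, and for
    some threshold b is strictly more likely to reach it.
    """
    def p_at_least(gamble, threshold):
        return sum(p for p, v in gamble if v >= threshold)

    # For discrete gambles it suffices to compare at the outcome values.
    thresholds = sorted({v for _, v in a} | {v for _, v in b})
    weakly = all(p_at_least(b, t) >= p_at_least(a, t) for t in thresholds)
    strictly = any(p_at_least(b, t) > p_at_least(a, t) for t in thresholds)
    return weakly and strictly


def totals(vb_heads, vb_tails, x):
    """Total value (background + difference made) under the assumed payoffs."""
    a = [(0.5, vb_heads + 10), (0.5, vb_tails + 10)]    # A: +10 either way
    b = [(0.5, vb_heads + 0), (0.5, vb_tails + 20 + x)]  # B: 0 on heads, 20 + x on tails
    return a, b


x = 5
# Background inside the stated range: VB(T) + 10 <= VB(H) <= VB(T) + 10 + x.
a, b = totals(vb_heads=12, vb_tails=0, x=x)
print(stochastically_dominates(b, a))

# Equal backgrounds fall outside the range, so neither bet dominates.
a, b = totals(vb_heads=0, vb_tails=0, x=x)
print(stochastically_dominates(b, a), stochastically_dominates(a, b))
```

With x = 5 < 20, a difference-making risk-averse agent using r = p² would pick A under these assumed payoffs, even in the first background, where B dominates it in terms of total value.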

- ^

This is one of the lessons of Allais cases. The ability to capture standard Allais preferences is a frequently used desideratum for risk averse decision theories.

- ^

This could be turned into a general argument against even standard versions of risk aversion. Here’s the dilemma. Suppose that you are risk averse and include the background value of the world when assessing your actions. The background value in the world will probably be enormous relative to the magnitude of your action. Therefore, you should behave as an expected value maximizer. Suppose, then, that you do not include the background value of the world (DMRA). Now, you are susceptible to choosing actions that are stochastically dominated, and your preferences won’t align with standard risk averse profiles. Therefore, there is no acceptable way of being risk averse.

- ^

- ^

See Gustafsson (2021) for sequential dominance arguments against various risk averse preferences.

DMRA could actually favour helping animals of uncertain sentience over helping humans or animals of more probable sentience, if and because helping humans can backfire badly for other animals in case other animals matter a lot (through the meat eater problem and effects on wild animals), and helping vertebrates can also backfire badly for wild invertebrates in case wild invertebrates matter a lot (especially through population effects through land use and fishing). Helping other animals seems less prone to backfire so much for humans, although it can. And helping farmed shrimp and insects seems less prone to backfire so much (relative to potential benefits) for other animals (vertebrates, invertebrates, farmed and wild).

I suppose you might prefer human-helping interventions with very little impact on animals. Maybe mental health? Or, you might combine human-helping interventions to try to mostly cancel out impacts on animals, like life-saving charities + family planning charities, which may have roughly opposite sign effects on animals. And maybe also hedge with some animal-helping interventions to make up for any remaining downside risk for animals. Their combination could be better than primarily animal-targeted interventions under DMRA, or at least interventions aimed at helping animals unlikely to matter much.

Maybe chicken welfare reforms still look good enough on their own, though, if chickens are likely enough to matter enough, as I think RP showed in the CURVE sequence.

All super interesting suggestions, Michael!

re: "being an actual cause", is there an easy way to bracket the (otherwise decisive-seeming) vainglory objection that MacAskill raises in DGB of the person who pushes a paramedic aside so that he can instead be the actual (albeit less competent) cause of saving a life?

Hi Richard,

That is indeed a very difficult objection for the "being an actual cause is always valuable" view. We could amend that principle in various ways. One is agent-neutral: it is valuable that someone makes a difference (rather than the world just turning out well), but it's not valuable that I make a difference. Another adds conditions to actual causation: you get credit only if you raise the probability of the outcome, or at least do not lower it (in which case it's unclear whether you'd be an actual cause at all).

Things get tricky here with the metaphysics of causation and how they interact with agency-based ethical principles. There's stuff here I'm aware I haven't quite grasped!

Another motivation I think worth mentioning is just objecting to fanaticism. As Tarsney showed, respecting stochastic dominance with statistically independent background value can force a total utilitarian to be pretty fanatical, although exactly how fanatical will depend on how wide the distribution of the background value is. Someone could still find that objectionably fanatical, even to the extent of rejecting stochastic dominance as a guide. They could still respect statewise dominance.

That being said, DMRA could also be "fanatical" about the risk of causing net harm, leading to paralysis and never doing anything or always sticking with the "default", so maybe the thing to do is to give less than proportional weight to both net positive impacts and net negative impacts, e.g. a sigmoid function of the difference.

I agree that the plausibility of some DMRA decision theory will depend on how we actually formalize it (something I don't do here but which Laura Duffy did some of here). Thanks for the suggestion.
