tl;dr: Ask questions about AGI Safety as comments on this post, including ones you might otherwise worry seem dumb!

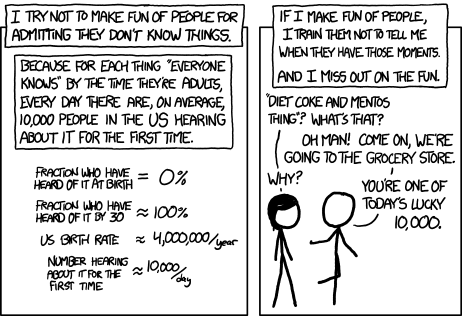

Asking beginner-level questions can be intimidating, but everyone starts out not knowing anything. If we want more people in the world who understand AGI safety, we need a place where it's accepted and encouraged to ask about the basics.

We'll be putting up monthly FAQ posts as a safe space for people to ask all the possibly-dumb questions that may have been bothering them about the whole AGI Safety discussion, but which until now they didn't feel able to ask.

It's okay to ask uninformed questions without worrying about having done a careful search first.

Stampy's Interactive AGI Safety FAQ

Additionally, this will serve as a way to spread the word about the project Rob Miles' volunteer team[1] has been working on: Stampy, which will be (once we've got considerably more content) a single point of access into AGI Safety, in the form of a comprehensive interactive FAQ with lots of links to the ecosystem. We'll be using questions and answers from this thread for Stampy (under these copyright rules), so please only post if you're okay with that! You can help by adding other people's questions and answers to Stampy or by getting involved in other ways!

We're not at the "send this to all your friends" stage yet, we're just ready to onboard a bunch of editors who will help us get to that stage :)

We welcome feedback[2] and questions on the UI/UX, policies, etc. around Stampy, as well as pull requests to his codebase. You are encouraged to add other people's answers from this thread to Stampy if you think they're good, and collaboratively improve the content that's already on our wiki.

We've got a lot more to write before he's ready for prime time, but we think Stampy can become an excellent resource for everyone from skeptical newcomers, through people who want to learn more, right up to people who are convinced and want to know how they can best help with their skillsets.

PS: Based on feedback that Stampy might not seem serious enough for serious people, we built an alternate, more professional skin for the frontend: Alignment.Wiki. We're likely to move one more time, to aisafety.info; feedback welcome.

Guidelines for Questioners:

- No previous knowledge of AGI safety is required. If you want to watch a few of the Rob Miles videos, or read either the WaitButWhy posts or the summary of The Most Important Century from OpenPhil's co-CEO first, that's great, but it's not a prerequisite to ask a question.

- Similarly, you do not need to try to find the answer yourself before asking a question (but if you want to test Stampy's in-browser TensorFlow semantic search, that might get you an answer quicker!).

- Also feel free to ask questions that you're pretty sure you know the answer to, but where you'd like to hear how others would answer the question.

- One question per comment if possible (though if you have a set of closely related questions that you want to ask all together that's ok).

- If you have your own response to your own question, put that response as a reply to your original question rather than including it in the question itself.

- Remember, if something is confusing to you, then it's probably confusing to other people as well. If you ask a question and someone gives a good response, then you are likely doing lots of other people a favor!

Guidelines for Answerers:

- Linking to the relevant canonical answer on Stampy is a great way to help people with minimal effort! Improving that answer means that everyone going forward will have a better experience!

- This is a safe space for people to ask stupid questions, so be kind!

- If this post works as intended then it will produce many answers for Stampy's FAQ. It may be worth keeping this in mind as you write your answer. For example, in some cases it might be worth giving a slightly longer / more expansive / more detailed explanation rather than just giving a short response to the specific question asked, in order to address other similar-but-not-precisely-the-same questions that other people might have.

Finally: please think very carefully before downvoting any questions; remember, this is the place to ask stupid questions!

[1] If you'd like to join, head over to Rob's Discord and introduce yourself!

[2] Via the feedback form.

If companies like OpenAI and DeepMind have safety teams, it seems to me that they anticipate that speeding up AI capabilities can be very bad, so why don't they put the brakes on their capabilities research until we come up with more solutions to alignment?

In this post, one of the lines of discussion is about whether values are "fragile". My summary, which might be wrong:

And then I lose the thread. Why isn't cfoster0's response compelling?

To make it even less intimidating, maybe next time include a Google Form where people can ask questions anonymously that you'll then post in the thread a la https://www.lesswrong.com/posts/8c8AZq5hgifmnHKSN/agi-safety-faq-all-dumb-questions-allowed-thread?commentId=xm8TzbFDggYcYjG5e ?

What stops AI Safety orgs from just hiring ML talent outside EA for their junior/more generic roles?

This is a question about some anthropocentrism that seems latent in the AI safety research that I've seen so far:

Why do AI alignment researchers seem to focus only on aligning with human values, preferences, and goals, without considering alignment with the values, preferences, and goals of non-human animals?

I see a disconnect between EA work on AI alignment and EA work on animal welfare, and it's puzzling to me, given that any transformative AI will transform not just 8 billion human lives, but trillions of other sentient lives on Earth. Are any AI researchers trying to figure out how AI can align with even simple cases like the interests of the few species of pets and livestock?

If we view AI development not just as a matter of human technology, but as a 'major evolutionary transition' for life on our planet more generally, it would seem prudent to consider broader issues of alignment with the other 5,400 species of mammals, the other 45,000 species of vertebrates, etc...

IMO: Most of the difficulty in technical alignment is figuring out how to robustly align to any particular values whatsoever: mine, yours, humanity's, all sentient life on earth, etc. All are roughly equally difficult. "Human values" is probably the catchphrase mostly for instrumental reasons: we are talking to other humans, after all, and in particular to liberal egalitarian humans who are concerned about some people being left out and especially concerned about an individual or small group hoarding power. Insofar as lots of humans were super concerned that animals would be left out too, we'd be saying humans+animals. The hard part isn't deciding whom to align to; it's figuring out how to align to anything at all.

Ben - thanks for the reminder about Harsanyi.

Trouble is, (1) the rationality assumption is demonstrably false, and (2) there's no reason for human groups to agree to aggregate their preferences in this way, any more than they'd be willing to dissolve their nation-states and hand unlimited power over to a United Nations that promises to use Harsanyi's theorem fairly and incorruptibly.

Yes, we could try to align AI with some kind of lowest-common-denominator aggregated human (or mammal, or vertebrate) preferences. But if most humans would not be happy with that strategy, it's a non-starter for solving alignment.

What things would make people less worried about AI safety if they happened? What developments in the next 0-5 years should make people more worried if they happen?

I'm going to repeat my question from the "Ask EA anything" thread. Why do people talk about artificial general intelligence, rather than something like advanced AI? For some AI risk scenarios, it doesn't seem necessary that the AI be "generally" intelligent.

Cool. That all makes sense.

Seems like a lot of alignment research at the moment is analogous to physicists at Los Alamos National Laboratory running computer simulations to show that a next-generation nuke will reliably give a yield of 5 megatons plus or minus 0.1 megatons, and will not explode accidentally, and is therefore aligned with the Pentagon's mission of developing 'safe and reliable' nuclear weaponry... and then saying 'We'll worry about the risks of nuclear arms races, nuclear escalation, nuclear accidents, nuclear winter, and nuclear terrorism later; they're just implementation details'.

The situation is much worse than that. It's more like: they are worried about the possibility that the first ever nuclear explosion will ignite the upper atmosphere and set the whole earth ablaze. (You've probably read that this was a real concern they had.) Except in this hypothetical, the preliminary calculations keep turning up the answer "Yes" no matter how they run them. So they are continuing to massage the calculations and make the modelling software more realistic in the hopes of a "No" answer, and also advocating for changes to the design of the bomb that'll hopefully mitigate the risk, and also advocating for the whole project to slow down before it's too late, but the higher-ups have go fever, and so it looks like in a few years the whole world will be on fire. Meanwhile, some other people are talking to the handful of Los Alamos physicists and saying "but even if the atmosphere doesn't catch on fire, what about arms races, accidents, terrorism, etc.?" and the physicists are like "lol yeah, that's gonna be a whole big problem if we manage to survive the first test, which unfortunately we probably won't. We'd be working on that problem if this one didn't take priority."

Why do you think AI Safety is tractable?

Related, have we made any tangible progress in the past ~5 years that a significant consensus of AI Safety experts agree is decreasing P(doom) or prolonging timelines?

Edit: I hadn't noticed there was already a similar question

What are good ways to test your fit for technical AI Alignment research? And which ways are best if you have no technical background?

How would you recommend deciding which AI Safety orgs are actually doing useful work?

According to this comment, and my very casual reading of LessWrong, there is definitely no consensus on whether any given org is net-positive, net-neutral, or net-negative.

If you're working in a supporting role (e.g. engineering or HR) and can't really evaluate the theory yourself, how would you decide which orgs are net-positive to help?

This is a great idea! I expect to use these threads to ask many many basic questions.

One on my mind recently: assuming we succeed in creating aligned AI, whose values, or which values, will the AI be aligned with? We talk of 'human values', but humans have wildly differing values. Are people in the AI safety community thinking about this? Should we be concerned that an aligned AI's values will be set by (for example) the small team that created it, who might have idiosyncratic and/or bad values?

What should people do differently to contribute to AI safety if they have long vs short timelines?

I'm relatively unconvinced by most arguments I've read that claim deceptive alignment will be a thing (which I understand to be a model that intentionally behaves differently on its training data and test data to avoid changing its parameters in training).

Most toy examples or thought experiments I've seen don't really seem to be examples of deceptive alignment, since the model is actually trained on the "test" data in these examples. For example, while humans can deceive their teachers in etiquette school and then use poor manners outside the s...

Should we expect power-seeking to often be naturally found by gradient descent? Or should we primarily expect it to come up when people are deliberately trying to make power-seeking AI, and train the model as such?

What is prosaic alignment? What are examples of prosaic alignment?

OK, let's say a foreign superpower develops what you're calling 'weakly aligned AI', and they do 'muster the necessary power to force the world into a configuration where [X risk] is lowered'... by, for example, developing a decisive military and economic advantage over other countries, imposing their ideology on everybody, and thereby reducing the risk of great-power conflict.

I still don't understand how we could call such an AI 'aligned with humanity' in any broad sense; it would simply be aligned with its host government and their interests, and s...

To what extent is AI alignment tractable?

I'm especially interested in subfields that are very tractable – as well as fields that are not tractable at all (with people still working there).

Won't other people take care of this - why should I additionally care?

A single AGI with its utility function seems almost impossible to make safe. What happens if you have a population of AGIs each with its own utility function? Probably dangerous to make biological analogies ... but biological systems are often kept stable by the interplay between agonist and antagonist processes. For instance, one AGI in the population wants to collect stamps and another wants to keep that from happening.

To what degree is having good software engineering experience helpful for AI Safety research?

Related question to the one posed by Yadav:

Does the fact that OpenAI and DeepMind have AI Safety teams factor significantly into AI x-risk estimates?

My independent impression is that it's very positive, but I haven't seen this factor being taken explicitly into account in risk estimates.

Oh, I didn't know that the field was so dismissive of AI x-risks. When I saw this survey (https://aiimpacts.org/what-do-ml-researchers-think-about-ai-in-2022/), a 5-10% chance of x-risk seemed like enough to take it seriously. Is that survey not representative? Or is there a gap between people recognizing the risks and giving them legitimacy?

According to the CLR, since resource acquisition is an instrumental goal regardless of the AGI's utility function, it is possible that this goal leads to a race in which each AGI can threaten others so that the target has an incentive to hand over resources or comply with the threateners' demands. Is such a conflict scenario between two AGIs (potentially leading to x-risks) possible if the two AGIs have different intelligence levels? If so, isn't there an intelligence gap beyond which x-risks become unlikely? How to characterize this f...

If an AGI has several terminal goals, how does it weigh them against each other? Some kind of linear combination?

I have the feeling that there is a tendency in the AI safety community to think that if we solve the alignment problem, we're done and the future must necessarily be flourishing (I observe that some EAs say that either we go extinct or it's heaven on earth depending on the alignment problem, in a very binary way). However, it seems to me that post-aligned-AGI scenarios merit attention as well: game theory gives us sufficient reason to think that even rational agents (in this case, multiple AGIs) can make sub-optimal decisions (including catastrophic ones) when faced with a social dilemma. Any thoughts on this, please?
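To make the game-theoretic point concrete, here is a minimal sketch using a textbook prisoner's dilemma. The payoff numbers are illustrative assumptions, not a model of any actual AGI interaction. It enumerates the pure-strategy Nash equilibria of the 2x2 game and compares them to the outcome with the highest total payoff, showing that two individually rational players can land on a worse joint outcome.

```python
from itertools import product

# Illustrative prisoner's dilemma payoffs (row player, column player).
# These numbers are assumptions chosen to satisfy the standard PD ordering.
ACTIONS = ("cooperate", "defect")
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}


def is_nash(profile):
    """True if neither player can gain by unilaterally switching action."""
    a, b = profile
    ua, ub = PAYOFFS[profile]
    if any(PAYOFFS[(alt, b)][0] > ua for alt in ACTIONS):
        return False
    if any(PAYOFFS[(a, alt)][1] > ub for alt in ACTIONS):
        return False
    return True


def nash_equilibria():
    """All pure-strategy Nash equilibria of the game."""
    return [p for p in product(ACTIONS, repeat=2) if is_nash(p)]


def total_welfare(profile):
    """Sum of both players' payoffs for an action profile."""
    return sum(PAYOFFS[profile])


equilibria = nash_equilibria()
best = max(PAYOFFS, key=total_welfare)
print(equilibria)  # [('defect', 'defect')]
print(best)        # ('cooperate', 'cooperate')
```

The only equilibrium is mutual defection (total payoff 2), while mutual cooperation would give each player more (total payoff 6). Whether real multi-AGI interactions would look anything like this one-shot game is, of course, exactly the open question.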

Interesting.

I'm not sure I understood the first part and what f(A,B) is. In the example that you gave, B is only relevant with respect to how much it affects A ("damage the reputability of the AI risk ideas in the eye of anyone who hasn't yet seriously engaged with them and is deciding whether or not to"). So, in a way, you are still trying to maximize |A| (or probably a subset of it: people who can also make progress on it (|A'|)). But by "among other things" I guess that you could be thinking of ways in which B could oppose A, so maybe that's why you...

Why is there so much more talk about the existential risk from AI as opposed to the amount by which individuals (e.g. researchers) should expect to reduce these risks through their work?

The second number seems much more decision-guiding for individuals than the first. Is the main reason that it's much harder to estimate? If so, why?

"RL agents with coherent preference functions will tend to be deceptively aligned by default." - Why?

I think EA has the resources to make the alignment problem go viral, or at least viral in STEM circles. Wouldn't that be good? I'm not asking whether it would be an effective way of doing good, just whether it would be a way.

I ask because I'm surprised that not even AI doomers seem to be trying to reach the mainstream.

If someone was looking to get a graduate degree in AI, what would they look for? Is it different for other grad schools?

How dependent is current AGI safety work on deep RL? Recently there has been a lot of emphasis on advances in deep RL (and ML more generally), so it would be interesting to know what the implications would be if it turns out that this particular paradigm cannot lead to AGI.