Manifund is hosting a $200k funding round for EA community projects, where the grant decisions are made by you. You can direct $100-$800 of funding towards the projects that have helped with your personal journey as an EA. Your choices then decide how $100k in matching will be allocated, via quadratic funding!

Sign up here to get notified when the projects are live, or read on to learn more!

Timeline

- Phase 1: Project registrations open (Aug 13)

- Phase 2: Community members receive funds (Aug 20)

- We’ll give everyone $100 to donate; more if you’ve been active in the EA community. Fill out a 2-minute form to claim your $100, plus bonuses for:

- Donor: $100 for taking the GWWC 🔸10% Pledge

- Organizer: $100 for organizing any EA group

- Scholar: $100 for having 100 or more karma on the EA Forum

- Volunteer: $100 for volunteering at an EAG(x), Future Forum, or Manifest

- Worker: $100 for working full-time at an EA org, or full-time on an EA grant

- Senior: $100 for having done any of the above prior to 2022

- Insider: $100 if you had a Manifund account before August 2024

- You can then donate your money to any project in the Community Choice round!

- You can also leave comments about any specific project. This is a great way to share your experiences with the project organizer, or the rest of the EA community.

- Funds in Phase 2 will be capped at $100k, first-come-first-served.


- Phase 3: Funds matched and sent to projects (Sep 1)

- Projects and donations will be locked in at the end of August. Then, all money donated will be matched against a $100k quadratic funding pool.

- Unlike a standard 1:1 match, quadratic funding rewards a project with lots of small donors more than a project with few big donors. The broader the support, the bigger the match!

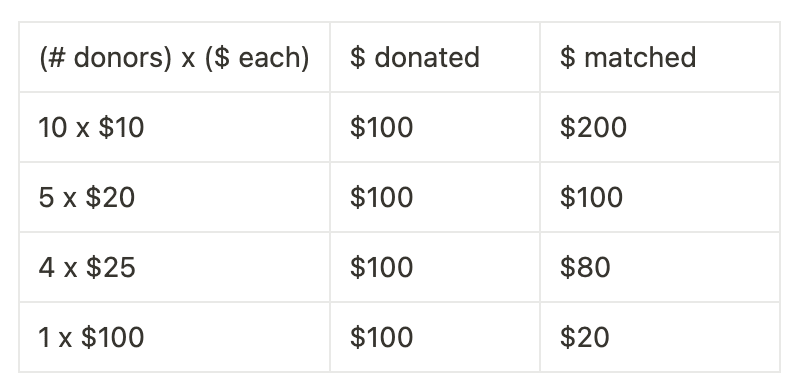

Specifically, the match is proportional to the square of the sum of square roots of individual donations. A toy example:

- Learn more about principles behind quadratic funding by reading Vitalik Buterin’s explainer, watching this video, or playing with this simulator.
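To make the square-of-sum-of-square-roots rule concrete, here is a minimal Python sketch under stated assumptions: each project's weight is the square of the sum of the square roots of its individual donations, and a fixed pool is split in proportion to those weights. The function name `quadratic_match`, the project names, and the $500 pool are hypothetical and picked for round numbers. Manifund's actual calculation may differ (some quadratic funding variants, for instance, subtract the raw donation total before scaling).

```python
from math import sqrt

def quadratic_match(donations, pool):
    """Split `pool` across projects in proportion to (sum of sqrt(donation))^2.

    `donations` maps a project name to a list of individual donation amounts.
    Illustrative sketch only, not Manifund's actual implementation.
    """
    weights = {
        project: sum(sqrt(amount) for amount in amounts) ** 2
        for project, amounts in donations.items()
    }
    total_weight = sum(weights.values())
    if total_weight == 0:
        return {project: 0.0 for project in donations}
    return {project: pool * w / total_weight for project, w in weights.items()}

# Hypothetical toy round: both projects raise $100 in direct donations.
donations = {
    "broad_support": [25, 25, 25, 25],  # four donors giving $25 each
    "single_donor": [100],              # one donor giving $100
}
matches = quadratic_match(donations, pool=500)
for project, match in matches.items():
    print(f"{project}: ${match:.2f}")
```

Both projects raise the same $100, but the one with four donors has a weight of 400 against 100 for the single-donor project, so it receives $400 of the hypothetical $500 pool versus $100.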

What is an EA community project?

We don’t have a strict definition, but roughly any project which helps you: learn about EA, connect with the EA community, or grow your impact in the world. We’re casting a wide net; projects do not have to explicitly identify as EA to qualify (though, we also endorse being proud of being EA). If you’re not sure if you count, just apply!

Examples of projects we’d love to fund:

- Community groups

- Regional groups like EA Philippines

- Cause-specific groups like Hive

- University groups like EA Tufts

- Physical spaces

- Coworking spaces like Lighthaven, Epistea, and AI Safety Serbia Hub

- Housing like CEEALAR and Berkeley REACH

- Events

- Conferences like Manifest, EAGx, LessOnline, and AI, Animals, and Digital Minds

- Extended gatherings like Manifest Summer Camp or Prague Fall Season

- Recurring meetups like local groups or online EA coworking

- Tournaments like Metaculus Tournaments or The Estimation Game

- Essay competitions like EA Criticism & Red Teaming Contest

- Software

- Tools like Squiggle, Carlo, Fatebook, or Guesstimate

- Visualizations like AI Digest

- Datasets like Donations List Website

- Educational programs

- Incubators like AI Safety Camp and Apart Hackathons

- Course materials like AI Safety Fundamentals

- Information resources

- Websites like AISafety.com

- YouTube channels like Rational Animations, Rob Miles, and A Happier World

- Podcasts like The 80k Podcast, The Dwarkesh Podcast, and The Inside View

FAQ

- What is Manifund?

- Manifund is a platform for funding impactful projects. We’ve raised over $5m for hundreds of projects across causes like AI safety, biosecurity, animal welfare, EA infrastructure, and scientific research. Beyond crowdfunding, we also run programs such as AI safety regrants, impact markets, and ACX Grants.

- Why are you doing this?

- We want to give the EA community a voice in what projects get funded within our own community. Today, most funding decisions are centralized in the hands of a few grantmakers, such as OpenPhil, EA Funds, and SFF. We greatly appreciate their work, but at the same time, suspect that local knowledge gets lost in this process. With EA Community Choice, we’re asking everyone to weigh in from their own experiences, on what projects have helped with their personal journey towards doing good.

- Why these criteria for donation bonuses?

- We chose these to highlight the different ways that someone can contribute to the EA movement. EA Community Choice aims to be more democratic than technocratic; we want to ensure a wide range of activities get recognized, and that a broad swathe of the EA community feels bought in to these donation decisions.

- Why quadratic funding?

- Quadratic funding is theoretically optimal to distribute matching funds towards a selection of public goods (and we’re suckers for elegant theory). The crypto community has pioneered this with some success, eg with Gitcoin Grants and Optimism’s Retroactive Public Goods Funding rounds. Closer to home, the LessWrong Annual Review is an example of a quadratic voting system in practice, which produces pretty good results.

- Where did this $200k come from?

- An anonymous individual in the EA community. Manifund would love to thank them publicly, but alas, the donor wishes not to be named for now. (It’s not FTX.)

- Can I direct my funds to a project I work on or am involved with?

- Yes! We ask that you mention this as a comment on the project, but otherwise it’s fine to donate to projects you are involved with.

- How should I direct my funds? Eg should I fund projects based on their past work, or how they would use marginal funding?

- We suggest giving based on how much value you have gotten out of a project (aka retroactive instead of prospective), but it’s your charity budget; feel free to spend it as you wish.

- We’d appreciate if you leave a comment about what made you decide to give to a particular project, though this is optional.

- Can I update my donations before Phase 3?

- Yes! If later donations or comments change your mind about where you want to give, you can change your allocation.

- If I think a project has negative externalities, can I make a “negative vote” aka pay to redirect money away from it?

- TBD. This may be theoretically optimal and has been used by other projects, but we’re leaning no because of the bad vibes, the potential for drama, and the additional complexity it introduces.

- Can I contribute my own money towards a community project?

- Yes! You can make a personal donation to any project in this community choice round; these donations will also be eligible for the quadratic funding match (as well as a 501c3 tax deduction, if you’re based in the US).

- How about contributing towards the matching fund?

- Yes! We’re happy to accept donations to increase the size of the matching pool for this round. Reach out to austin@manifund.org and I’ll be happy to chat!

- Or, if you’re excited by this structure but want to try a different focus (eg a funding round for “technical AI safety projects” or “animal welfare projects”), let us know!

Get involved!

As the name “EA Community Choice” implies, we’d love for all kinds of folks in the community to participate. You can:

- Register your project to receive funding in this round

- Recommend a project to join the round

- Sign up to get notified when funds get sent out

Excited to support the projects that y’all choose!

Thanks to Rachel, Saul, Anton, Neel, Constance, Fin and others for feedback!

(Personal note, not reflecting the views of organisations I’m working for)

I assume organisations and groups considering signing up for this program have been doing donor due diligence on the legal and reputational risks of this funding opportunity, and it might be interesting to exchange information as this organisation seems new to funding projects in the EA community.

Concerning potential reputational risks, the source of funding stands out:

Accepting money from an anonymous donor shifts the due diligence on this donor to Manifund, so as a recipient of the funding, you are trusting them to have acted as you would have when deciding whether to accept the money.

Manifund is also a co-organiser of Manifest, one of the example projects mentioned above. Being associated with it might not be in the interest of all community members. For example, this comment by Peter Wildeford had many upvotes:

While groups' preferences will determine whether or not to apply for funding through this source, I hope organisations in the EA community have learned from FTX to do donor due diligence and consider potential reputational risks.

It's hard to say much about the source of funding without leaking too much information; I think I can say that they're a committed EA who has been around the community a while, who I deeply respect and is generally excited to give the community a voice.

FWIW, I think the connection between Manifest and "receiving funding from Manifund or EA Community Choice" is pretty tenuous. Peter Wildeford, whom you quoted, has both raised $10k for IAPS on Manifund and donated $5k personally towards an EA community project. This, of course, does not indicate that Peter supports Manifest to any degree whatsoever; rather, it shows that sharing a funding platform is a very low bar for association.

Thanks. I think linking to your internal notes might have helped; at least it gave me more insight and answered questions.

Perhaps I am being dense here but... do you literally mean this? Like you actually think it is more likely than not that most groups and orgs considering signing up have been doing legal due diligence? Given the relatively small amounts of dollars at play, and the fact that your org might get literally zero, I would expect very few orgs to have done any specific legal due diligence. Charitable organisations need to be prudent with their resources, and paying attorneys because of the possibility you might get a small grant that might have some unspecified legal issue - though you are not aware of any specific red flags - does not seem like a good use of donor resources to me.

I don't think most will do this, nor do I believe due diligence has to be very extensive. In most cases this is delegated to the grant maker if there is enough prior information on their activities. For individuals it can be a short online search or reaching out to people who know them. For organisations it can also be asking around whether others have done this, which is what I was doing here - having a distributed process can reduce the amount of work for all.

In this case Manifund has a lot of information online, so as others haven't chimed in I'll do this as an example.

First I had a cursory look at the Manifund website reading through their board of director meeting notes. What stood out:

Then went into their notes to find out about their compliance processes, especially concerning donor and grantee due diligence.

So just looking at these documents my quick takes:

Overall I would see a moderate chance of the organisation failing to pay out user assets due to underfunding, seeing serious risks to their charitable status or failing IRS checks on grantmaking. However I don't see the level of professionalism I would like to see in organisations in the EA ecosystem post FTX and OpenAI board discussions, which is why I wouldn't want to partake in a Manifund project with an EA branding and an anonymous donor.

But I'm probably missing information and context and happy to update with further facts.

Note that Manifund know who the donor is (as do I, and can vouch that I see no red flags), the donor just doesn't want their identity to be public. I think this is totally fine and should not be a yellow flag.

EDIT: To clarify, I'm referring to the regranting donor, who may not be the same as the community choice donor

It's still a yellow flag in my book. A would-be grantee org would be delegating its responsibility to assess the donor for suitability to Manifund. I don't think Manifund keeps its high degree of risk tolerance a secret (and I get the sense that it is relatively high even by EA standards). I also think it is well-understood that Manifund gives little weight to optics/PR/etc. And it's hard for a young charity (or other org) to say no to a really big chunk of money, which might further increase risk tolerance over and above the Manifund folks having a higher risk tolerance out of the gate.

So the would-be grantee is giving up the opportunity to evaluate donor-associated risks using its own criteria. We can't evaluate the actual level of risks from Manifund's major donors, but its high risk tolerance + very low optics weighting means that the risk could be high indeed. That warrants the yellow flag in my book.

OK, I don't believe that this is a yellow flag about, eg, Manifund's judgement. But I can agree this should be a yellow flag when considering whether to accept Manifund's money.

(fwiw, I think I'm much less risk tolerant than Manifund, and disagree with several decisions they've made, but have zero issues with taking money from this donor)

I read Patrick as saying that he didn't see evidence of the "level of professionalism" that he would find necessary to "partake in a Manifund project with . . . an anonymous donor." In other words, donor anonymity requires a higher level of confidence in Manifund than would be the case with a known source of funds. I don't read anyone as saying that taking funds from a donor whose identity is not publicly known is a strike against Manifund's judgment.

Yes, thank you for putting it this way, that is what I wanted to convey. For example I would be more comfortable with taking a grant funded by an anonymous donation to Open Phil, as they have a history of value judgments and due diligence concerning grants and seem to be a well-run organisation in general.

Sure, this seems reasonable

I don't know your organization, of course, but I don't see how some of this stuff would impact the average EA CC grantee very much (at least in expectancy). The presence of allegedly weak internal controls and governance would be of much more concern to me as a potential donor to Manifund than it would be as a potential grantee.

I want to be very clear that I have not done my own due diligence on Manifund because I am not planning to donate to or seek money through them. I'm jumping to some extreme examples here merely to illustrate that the risk of certain things depends on what one's relationship to the charity in question is.

In extreme cases, IRS can strip an organization of its recognition under IRC 501(c)(3), or another paragraph of subsection (c). This certainly has adverse consequences for the organization and its donors, the most notable of which include that donors can't take tax deductions for donations to the ex-501(c)(3) going forward and that the nonprofit will have to pay taxes on any revenues realized as a for-profit. However, I am not coming up with any significant consequences for organizations that had received grants from the non-profit in the past, at least not off the top of my head. States can also sue to take over nonprofits, but it takes a lot -- usually significant self-dealing like at the Trump Foundation or the National Rifle Association -- to trigger such a suit. They aren't quick, and the state still has to spend the monies consistent with the charity's objectives and/or donor intent. So consequences that sound really bad in the abstract may not be on a grantee's radar at all, because the consequences would not actually hit the grantee.

I think Manifund's internal affairs would have some relevance to the risk that a grantee organization might not get money it was expecting. But to the extent that an organization would be seriously affected by non-receipt of an anticipated $5-$10K, the correct answer is usually going to be just not counting on the money before it hits the org's bank account. Maybe there's an edge case in which investing resources to better quantify that risk would be worthwhile, though. It seems unlikely here, where the time between application and expected payment is a few months.

As far as the risk of an FTX-style clawback . . . yeah, it's possible that some donations to Manifund could be fraudulent conveyances (which don't, contrary to the name, have to involve actual fraud). Since we don't know who the major donors are, that's hard to assess. However, under a certain dollar amount, litigation just isn't financially viable for the estate. That being said, many of us (including myself) would feel an ethical obligation to return donations in some cases involving actual capital-F Fraud. So that would be a relevant consideration, but the base rate is low enough that it's hard to justify spending a lot of time on this at moderate donation levels.

It sounds like "D" is anonymous in the sense that Manifund isn't going to tell the world who they are, but not in the sense that their identity is unknown to Manifund (or IRS, which would receive the information on a non-public schedule to Form 990). Although extreme reliance on a single non-disclosed donor is still a yellow flag, that would be an important difference.

All that is to say that I expect that the significant majority of organizations would not find those yellow flags enough to preclude participation at the funding level at play here. (Whether they would find the connection between Manifund and Manifest a barrier is not considered here.) Nor would I expect most orgs to have done more than a cursory check at the $5-10K level. But again, you're the expert on your organization and I don't want to imply you are making the wrong decision for your own org!

Thank you for that assessment! I agree that the legal risk is low, and for this reason, I wouldn't refrain from participating in the project.

On the reputation side, I might have updated too much from FTX. As an EA meta organisation, I want a higher bar for taking donations than a charity working on the object level. This would be especially the case if I took part in a project that is EA branded and asked me to promote the project to get funding. Suppose Manifund collapses or the anonymous donor is exposed as someone whose reputation would keep people from joining the community. In that case, I think it would reflect poorly on the ability of the community overall to learn from FTX and install better mechanisms to identify unprofessional behaviour.

Perhaps the crux is whether I would actually lose people in our target groups in one of these scenarios, or whether the reputational damage would only occur outside of the target group. In the last Community Health Survey, 46% of participants at least somewhat agreed with having a desire for changes post-FTX. Leadership and scandals were two of the top areas mentioned, which I interpret as community members wanting fewer scandals and better management of organisations. Vetting donors is one way that leaders can learn from FTX and reduce risk. But there is also the risk of losing out on donations.

I understand your concern, thanks for flagging this!

To add a perspective: As a former EA movement builder who thought about this a bunch, the reputation risks of accepting donations from a platform by an organization that also organizes events that some people found too "edgy" seem very low to me. I'd encourage EA community organizers to apply, if the money would help them do more good, and if they're concerned about risks, ask CEA or other senior EA community organizers for advice.

Generally, I feel many EAs (me included) lean more towards being too risk-averse and hesitant to take action than towards being too risk-seeking (see omission bias). I'm also a bit worried about increasing pressure on community organizers not to take risks and to worry about reputation more than they need to. This is just a general impression, I might be wrong, and I still think there are many organizers who might not be sufficiently aware of the risks, so thanks for pointing this out!

This is an interesting and insightful viewpoint, but I'm not sure how it applies at the expected donation size. Although the size of the potential grant one's organization might receive is unknown, the use of a quadratic funding mechanism and wide eligibility criteria makes me think most potential applicants are looking at an expected value of funding of $10,000 or less. I don't think it is the current standard of care in the philanthropic sector to generally vet donations of this size (especially to the extent you think they are likely to be one-off). So most orgs would -- reasonably! -- just cash a ~$5,000 check that came in without investigating the drawer of the check. The exception would be a donor-identity problem that was obvious on its face; I expect most orgs would investigate and likely shred a check if drawn on the account of one of SBF's immediate family members! But otherwise, it seems that the amount of due diligence investigation one is arguably "delegating" to Manifund is close to zero.

I expect the amount of due diligence to vary based on funding, project size, and public engagement levels. Even a small amount might be a significant part of the yearly income of a small EA group that might have to deal with increased scrutiny or discussions based on the composition of its existing and potential group members.

Thank you for doing this, and huge thanks to the anonymous donor!

Three quick questions on the implementation side of things:

Apologies if these were already answered somewhere, I'm really curious to see the results of this experiment!

Appreciate the questions! In general, I'm not super concerned about adversarial action this time around, since:

Specifically:

We're going with QF because it's a Schelling point/rallying flag for getting people interested in weird funding mechanisms. It's not perfect, but it's been tested enough in the wild for us to have some literature behind it, while not having much actual exposure within EA. If we run this again, I'd be open to mechanism changes!

Meanwhile I think sharing this on X and encouraging their followers to participate is pretty reasonable -- while we're targeting EA Community Choice at medium-to-highly engaged EAs, I do also hope that this would draw some new folks into our scene!

I'd also consider erring on the side of being clear and explicit about the norms you expect people to follow. For instance, someone who only skims the explanation of QF (or lacks the hacker/lawyer instinct for finding exploits!) may not get that behavior that is pretty innocuous in other contexts can be reasonably seen as pretty collusive and corrupting in the QF context. For instance, in analogous contexts, I suspect that logrolling-type behaviors ("vote" for my project by allocating a token amount of your available funds, and I'll vote for yours!) would be seen as fine by most of the general population.[1]

Indeed, I'm not 100% sure on where you would draw the line on coordination / promotional activities.

I would have assumed logrolling type behaviours were basically fine here (at least, if the other person also somewhat cared about your charity), so +1 that explicit norms are good

This is really cool! I would love to see EA move away from official funds and more towards microregranting schemes like this. Do you think in the future there's much chance of you either

Glad you like it! As you might guess, the community response to this first round will inform what we do with this in the future. If a lot of people and projects participate, then we'll be a lot more excited to run further iterations and raise more funding for this kind of event; I think success with this round would encourage larger institutional donors to want to participate.

re: ongoing process, the quadratic funding mechanism typically plays out across different rounds -- though I have speculated about an ongoing version before.

Also, all the granting decisions here will be done in public, so I highly encourage other EA orgs to use the data generated for their own purposes (eg evaluating potential new grantmakers).

I went through and allocated funds[1] to projects. I was more influenced by concepts like affirmation and encouragement than I expected.

I had previously been a micrograntor for the ACX impact certificates program. Compared to that project pool, these projects are more likely to seek small sums to amplify the impact of existing volunteer work. So they tended to score well on two evaluation criteria I consider: sweat equity / skin in the game as a signal, and the potential for relatively small amounts of money to serve as an enzyme multiplying the effect of volunteer-labor and other non-financial inputs already present.

I suggest in this comment that providing very modest amounts of funding to volunteer-led projects has a degree of signaling value -- not enough to justify throwing a few hundred dollars at everything willy-nilly, but enough to factor into the calculus at the lowest funding levels.[2] Receiving funding from other community members sends messages like: "We believe in the value of what you're doing," "Your contributions are noticed and appreciated," and "You belong."[3] For better or worse, I think this meaning is more prominent in EA culture than many other movement subcultures. And I think it may be particularly valuable for those on the geographical and cultural periphery, far from San Fran and London. A number of the projects currently fundraising in the EACC initiative are led by people from LMICs.

I want to be careful to avoid the Dodo bird verdict, under which everyone has won and all must win prizes. Funding allocation is even more about tradeoffs and saying no to good things than the median activity in life. But the significant majority of projects are things I'm happy to see funded, and I'm not convinced that other community funding mechanisms are in a good position to consider the funding needs of many of the smaller projects. So I gave some consideration to the signaling value of funding and sometimes erred on the side of ensuring that a project got at least some funding -- Manifund sets a minimum of $500 for operational reasons, although a project can set a higher one.[4]

Whether this is a sign that I'm getting soft in my old age (just turned 42) or something is left as an exercise to the reader and/or commenter.

[1] I had been too late to claim any EACC funds, which ran out within hours. But I had funds on Manifund that someone else had given me. So I had somewhat more money available for use, although it was not restricted to EACC projects.

[2] I speculate that the signaling value scales only weakly with the dollar amount beyond a fairly low floor, so its relative importance will be higher in small-dollar decisions.

[3] Most of these projects involve people with non-EA jobs volunteering based on opportunities they have seen arise in their context. The counterfactual to them performing their current labors may well be them doing ordinary things. Therefore, I am less concerned about the possibility of sending these messages when the person would be better off working on a different project.

[4] In some cases, providing minimal levels of funding may not allow a project to launch or continue. But this is less likely for projects seeking small sums as an enzyme to multiply the effects of other inputs.

Just to clarify, I assume that our distributions will not be made public / associated with our names?

[More of a general question for Manifund, but posting it here as others may benefit from whatever insight @Austin or others can provide]

What is the expected distribution of vetting labor between Manifund users and Manifund itself? In particular, I see a number of very small organizations previously unknown to me (especially African animal-welfare organizations this round) -- which is great! However, that does raise some due diligence questions that I don't have to think about when I'm considering allocating money to, e.g., GiveWell. It would be unfortunate either for me to falsely assume Manifund is performing certain due diligence (in which case it isn't done) or to duplicate what Manifund is already doing (which would be inefficient and waste grantees' time).

(The rest of this question will have some non-U.S. focus, in part because the legal expectations are different for U.S. and non-U.S. grants.)

In particular, is it fair to assume that:

I appreciate, of course, that Manifund doesn't want to wield a broad veto power over proposals that users want to see funded. I guess I would roughly draw the line at Manifund ensuring that the proposal is legitimate, but deferring to the user on whether funding it is a good idea?

I did not graduate from Yale, by the way.

Thanks for the questions! Most of our due diligence happens in the step where the Manifund team decides whether to approve a particular grant; this generally happens after a grant has met its minimum funding bar and the grantee has signed our standard grant agreement (example). At that point, our due diligence usually consists of reviewing their proposal as written for charitable eligibility, as well as a brief online search, looking through the grant recipient's eg LinkedIn and other web presences to get a sense of who they are. For larger grants on our platform (eg $10k+), we usually have additional confidence that the grant is legitimate coming from the donors or regrantors themselves.

In your specific example, it's very possible that I personally could have missed cross-verifying your claim of attending Yale (with the likelihood decreasing the larger the grant is). Part of what's different about our operations is that we open up the screening process so that anyone on the internet can chime in if they see something amiss; to date we've paused two grants (out of ~160) based on concerns raised by others.

I believe we're classified as a public charity and take on expenditure responsibility for our grants, via the terms of our grant agreement and the status updates we ask for from grantees.

And yes, our general philosophy is that Manifund as a platform is responsible for ensuring that a grant is legitimate under US 501c3 law, while being agnostic about the impact of specific grants -- that's the role of donors and regrantors on our platform.

I think this is a very fun idea, thanks for making this happen!

With the princely allocation of $600 I received, I've been going through the list with my partner and discussing the pros and cons as we see them of each project before allocating funds. Obviously the majority of these discussions are going to end in no donation, so I wonder whether Austin (or any of the individual grant requestors, if you're reading this) would like us to comment on why we're not funding the projects we pass on, if we feel like we have anything to say?

Please keep in mind that if we did that, there are dozens of projects and we don't want to spend much extra bandwidth on this, so if we made such comments they would more or less be a C&P of our personal notes, and not edited to spare anyone's feelings.

I'd really appreciate you leaving thoughts on the projects, even if you decided not to fund them. I expect that most project organizers would also appreciate your feedback, to help them understand where their proposals as written are falling short. Copy & paste of your personal notes would be great!

That is a wonderful idea!

One question - what about an initiative we highly value but that is discontinued or may soon be discontinued? May the funds in such a case be allocated to the organisation that launched the initiative for unrestricted spending?

Yes, we're happy to allocate funds to the org that ran that initiative for them to spend unrestricted towards other future initiatives!

Thanks so much for organising and very much looking forward! Shared with our whole community :)

So exciting! Can community members donate to more than one project? I understood so from the post. And if they do, then there is probably some beautiful optimum(s?) of how much they should diversify, as a vote can be a lot more powerful than the size of the donation when it is matched. Maybe that math is explained in one of the links on quadratic funding. I haven't checked them out yet :)

The nice thing about the quadratic voting / quadratic funding formula (and the reason that so many people are huge nerds about it) is that the optimal diversification is really easy to state:

One explanation for this is, if you're donating $X to A and $1 to B, then adding one more cent to A increases A's total match by 1/X the amount that B would get if you gave it to B. So the point where your marginal next-dollar is equal is the point where your funding / votes are proportional to impact.

This seems false.

Consider three charities A,B,C and three voters X,Y,Z, who can donate $1 each. The matching funds are $3. Voter Z likes charity C and thinks A and B are useless, and gives everything to C. Voter Y likes charity B and thinks A and C are useless, and gives everything to B. Voter X likes charities A and B equally and thinks C is useless.

Then voter X can get more utility by giving everything to charity B, rather than splitting equally between A and B. If voter X gives everything to charity B, the proportions for charities A, B, C are $0^2 : (1+1)^2 : 1^2$. If voter X splits between A and B, the proportions are $\left(\sqrt{1/2}\right)^2 : \left(1+\sqrt{1/2}\right)^2 : 1^2$. The latter gives less utility according to voter X.
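A quick numerical check of this counterexample, as a Python sketch. It assumes the $3 matching pool is split in proportion to the square of the sum of square roots of each charity's donations, and that voter X's utility is simply the match dollars reaching A and B. The helper name `match_shares` is hypothetical.

```python
from math import sqrt

def match_shares(donations, pool):
    """Split `pool` in proportion to (sum of sqrt(donation))^2 per charity."""
    weights = {c: sum(sqrt(d) for d in ds) ** 2 for c, ds in donations.items()}
    total = sum(weights.values())
    return {c: pool * w / total for c, w in weights.items()}

POOL = 3.0

# Voter X gives the whole $1 to B; Y gives $1 to B; Z gives $1 to C.
all_in_b = match_shares({"A": [], "B": [1.0, 1.0], "C": [1.0]}, POOL)

# Voter X instead splits $0.50 / $0.50 between A and B.
split = match_shares({"A": [0.5], "B": [0.5, 1.0], "C": [1.0]}, POOL)

# Voter X values A and B equally and C not at all.
utility_all_in = all_in_b["A"] + all_in_b["B"]
utility_split = split["A"] + split["B"]

print(f"all-in on B: {utility_all_in:.3f}")
print(f"even split:  {utility_split:.3f}")
```

Under these assumptions, going all-in on B sends about $2.40 of the pool to A and B combined, versus about $2.32 for the even split, which matches the claim that splitting is worse for voter X when the pool is fixed.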

The quadratic-proportional lemma works in the setting where there's an unbounded total pool; if one project's funding necessarily pulls from another, then I agree it doesn't work to the extent that that tradeoff is in play.

In this case, I'm modeling each cause as small relative to the total pool, in which case the error should be correspondingly small.

Yes, community members can donate in any proportion to the projects in this round. The math of quadratic funding roughly means that your first $1 to a project receives the largest match, then the next $3, then the next $5, $7, etc. Or: your match to a project is proportional to the square root of how much you've donated.

You can get some intuition by playing with the linked simulator; we'll also show calculations about current match rates directly on our website. But you also don't have to worry very much about the quadratic funding equation if you don't want to, and you can just send money to whatever projects you like!
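The $1, $3, $5, $7 pattern falls out of the square root: reaching k units of square-root weight takes k² dollars in total, so each extra unit costs 2k − 1 more than the running total. A tiny Python sketch of that arithmetic (it only illustrates the square-root rule as described here, and assumes nothing about how the final match is computed on the site):

```python
# Cumulative dollars needed to reach k units of square-root weight is k**2,
# so the k-th unit costs k**2 - (k - 1)**2 = 2k - 1 additional dollars.
steps = [k ** 2 - (k - 1) ** 2 for k in range(1, 5)]
print(steps)  # [1, 3, 5, 7]
```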

Hey, I was unable to donate to my own project with the funds (but was able to donate to others' projects and others were able to donate to mine). Are others having this issue?

yes, same

What a great initiative!

Just a quick question regarding this:

I'm writing this on August 20th - is it too late to claim the $100? If not, where is the 2-minute form you mentioned that we need to fill out? Thank you!

Hey! It is not too late; in fact, people can continue signing up to claim and direct funds anytime before phase 3.

(I'm still working on publishing the form; if it's not up today, I'll let y'all know and would expect it to be up soon after)