Introduction

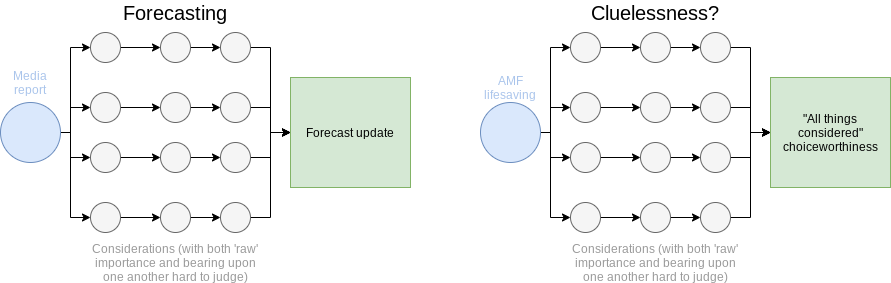

In sketch, the challenge of consequentialist cluelessness is that the consequences of our actions ramify far into the future (and thus - at least at first glance - far beyond our epistemic access). Although we know little about them, we know enough to believe it unlikely these unknown consequences will neatly ‘cancel out’ to neutrality - indeed, they are likely to prove more significant than those we can assess. How, then, can we judge some actions to be better than others?

For example (which we shall return to), even if we can confidently predict that the short-run impacts of donations to the Against Malaria Foundation are better than those of donations to Make-a-Wish, the final consequences depend on a host of recondite matters (e.g. Does reducing child mortality increase or decrease population size? What effect does a larger population have on (among others) economic growth, scientific output, social stability? What effect do these have on the margin in terms of how the future of humankind goes?). In aggregate, these effects are extremely unlikely to neatly cancel out, and their balance will likely be much larger than the short-run effects.

Hilary Greaves, in presenting this issue (Greaves 2016), notes that there is an orthodox subjective Bayesian ‘answer’ to it: in essence, one should offer (precise) estimates for all the potential long-term ramifications, ‘run the numbers’ to give an estimate of the total value, and pick the option that is best in expectation. Calling this the ‘sceptical response’, she writes (p.12):

This sceptical response may in the end be the correct one. But since it at least appears that something deeper is going on in cases like the one discussed in section 5 [‘complex cluelessness’], it is worth exploring alternatives to the sceptical response.

Andreas Mogensen, in subsequent work (Mogensen 2019), goes further, suggesting this is a ‘Naive Response’. Our profound uncertainty across all the considerations which bear upon the choice-worthiness of AMF cannot be reasonably summarized into a precise value or probability distribution. Both Greaves and Mogensen explore imprecise credences as an alternative approach to this uncertainty.

I agree with both Greaves and Mogensen there’s a deeper issue here, and an orthodox reply along the lines of, “So be it, I assign numbers to all these recondite issues”, is too glib. Yet I do not think imprecise credences are the best approach to tackle this problem. Also, I do not think the challenge of cluelessness relies on imprecise credences: even if we knew for sure our credences should be precise, the sense of a deeper problem still remains.

I propose a different approach, taking inspiration from Amanda Askell and Phil Trammell, where we use credal fragility, rather than imprecision, to address decision making with profound uncertainty. We can frame issues of ‘simple cluelessness’ (e.g. innocuous variations on our actions which scramble huge numbers of future conceptions) as considerations where we are resiliently uncertain of their effect, and so reasonably discount them as issues to investigate further to improve our best guess (which is commonly, but not necessarily, equipoise). By contrast, cases of complex cluelessness are just those where the credences we assign to the considerations which determine the long-run value of our actions are fragile, and we have reasonable prospects to make our best guess better.

Such considerations seem crucial to investigate further: even though it may be even harder to (for example) work out the impact of population size on technological progress than it is the effect size of AMF’s efforts on child mortality, the much greater impact of the former than the latter on the total impact of AMF donations makes this topic the better target of investigation on the margin to improve our estimate of the value of donating to AMF.[1]

My exploration of this approach suggests it has some attractive dividends: it preserves features of orthodox theory most find desirable, avoids the costs of imprecise credences, and - I think - articulates well the core problem of cluelessness which Greaves, Mogensen, myself, and others perceive. Many of the considerations regarding the influence we can have on the deep future seem extremely hard, but not totally intractable, to investigate. Offering naive guesstimates for these, whilst lavishing effort to investigate easier but less consequential issues, is a grave mistake. The EA community has likely erred in this direction.

On (and mostly contra) imprecise credences

Rather than a single probability function which will give precise credences, Greaves and Mogensen suggest the approach of using a set of probability functions (a representor). Instead of a single credence for some proposition p, we instead get a set of credences, arising from each probability function within the representor.

Although Greaves suggests imprecise credences as an ‘alternative line’ to orthodox subjective Bayesianism, Mogensen offers a stronger recommendation of the imprecise approach over the ‘just take the expected value’ approach, which he deems a naive response (p. 6):

I call this ‘the Naïve Response’ because it is natural to object that it fails to take seriously the depth of our uncertainty. Not only do we not have evidence of a kind that allows us to know the total consequences of our actions, we seem often to lack evidence of a kind that warrants assigning precise probabilities to relevant states. Consider, for example, the various sources of uncertainty about the indirect effects of saving lives by distributing anti-malarial bed-nets noted by Greaves (2016). We have reason to expect that saving lives in this way will have various indirect effects related to population size. We have some reason to think that the effect will be to increase the future population, but also some reason to think that it will be to decrease the net population (Roodman 2014; Shelton 2014). It is not clear how to weigh up these reasons. It is even harder to compare the relative strength of the reasons for believing that increasing the population is desirable on balance against those that support believing that population decrease is desirable at the margin. That the distribution of bed-nets is funded by private donors as opposed to the local public health institutions may also have indirect political consequences that are hard to assess via the tools favoured by the evidence-based policy movement (Clough 2015). To suppose that our uncertainty about the indirect effects of distributing anti-malarial bed-nets can be summarized in terms of a perfectly precise probability distribution over the relevant states seems to radically understate the depth of our uncertainty.

I fear I am too naive to be moved by Mogensen’s appeal. By my lights, although any of the topics he mentions are formidably complicated, contemplating them, weighing up the considerations that bear upon them, and offering a (sharp) ‘best guess’ alongside the rider that I am deeply uncertain (whether cashed out in terms of resilience or 'standard error' - cf., and more later) does not seem to pretend false precision nor do any injustice to how uncertain I should be.

Trading intuitions offers little: it is commonplace in philosophy for some to be indifferent to a motivation others find compelling. I’m not up to the task of giving a good account of the de/merits of im/precise approaches (see e.g. here). Yet I can say more on why imprecise credences poorly articulate the phenomenology of this shared sense of uncertainty - and more importantly, seem to fare poorly as means to aid decision-making:[2] the application of imprecise approaches gives results (generally of excessive scepticism) which seem inappropriate.

Incomparability

Unlike ‘straight ticket expected value maximization’, decision rules on representors tend to permit incomparability: if elements within one’s representor disagree on which option is better, it typically offers no overall answer. Mogensen’s account of one such decision rule (the ‘maximality rule’) notes this directly:

When there is no consensual ranking of a and a`, the agent’s preference with respect to these options is indeterminate: she neither prefers a to a`, nor a` to a, nor does she regard them as equally good.

I think advocates of imprecise credences consider this a feature rather than a bug,[3] but I do not think I am alone in having the opposite impression. The articulation would be something along the lines of decision rules on representors tending to be ‘radically anti-majoritarian’: a single member of one’s credal committee is enough to ‘veto’ a comparison of a versus a`. This doesn’t seem the right standard in cases of consequentialist cluelessness where one wants to make judgements like ‘on balance better in expectation’.

This largely bottoms out in the phenomenology of uncertainty:[4] if, in fact, one’s uncertainty is represented by a set of credence functions, and, in fact, one has no steers on the relative plausibility of the elements of this set compared to one another, then responding with indeterminacy when there is no consensus across all elements seems a rational response (i.e. even if the majority of my representor favours action a over alternative action a`, without steers on how to weigh a-favouring elements over others, there seems little room to surpass a ‘worst-case performance’ criterion like the maximality rule).

Yet I aver that in most-to-all cases (including those of consequentialist cluelessness) we do, in fact, have steers about the relative plausibility of different elements. I may think P(rain tomorrow) could be 0.05, and it could be 0.7 (and other things besides), yet I also have impressions (albeit imprecise) on one being more reasonable than the other. The urge of the orthodox approach is we do better trying to knit these imprecise impressions into a distribution to weigh the spectrum of our uncertainty - even though it is an imperfect representation,[5] rather than deploying representors which often unravel anything downstream of them as indeterminate.
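The contrast drawn above, between a representor whose elements can each ‘veto’ a comparison and a weighted distribution over those same elements, can be made concrete with a small sketch. Everything here is an illustrative assumption rather than anything from Greaves or Mogensen: the representor is a handful of made-up credences in a single proposition (“donating has net-positive long-run effects”), the payoffs are +1/-1 for donating and 0 for doing nothing, and the plausibility weights are invented.

```python
from typing import Callable, List

# Hypothetical representor: candidate credences in one proposition.
representor: List[float] = [0.45, 0.55, 0.60, 0.70, 0.80]

def ev_donate(p: float) -> float:
    """Illustrative payoff: +1 if the proposition is true, -1 if false."""
    return p * 1.0 + (1 - p) * -1.0

def ev_nothing(p: float) -> float:
    """Doing nothing is assumed to be worth 0 whatever the truth is."""
    return 0.0

def maximality_verdict(
    elements: List[float],
    ev_a: Callable[[float], float],
    ev_b: Callable[[float], float],
) -> str:
    """A preference exists only if every element ranks the options the same way."""
    diffs = [ev_a(p) - ev_b(p) for p in elements]
    if all(d > 0 for d in diffs):
        return "a preferred"
    if all(d < 0 for d in diffs):
        return "b preferred"
    if all(d == 0 for d in diffs):
        return "equally good"
    return "indeterminate"

# One dissenting element (0.45) is enough to block the comparison.
with_dissenter = maximality_verdict(representor, ev_donate, ev_nothing)
without_dissenter = maximality_verdict(representor[1:], ev_donate, ev_nothing)

# With (made-up) plausibility weights, the same elements collapse to one
# sharp credence, and the comparison becomes determinate.
weights = [0.1, 0.2, 0.3, 0.3, 0.1]
sharp_credence = sum(w * p for w, p in zip(weights, representor))
sharp_ev_gap = ev_donate(sharp_credence) - ev_nothing(sharp_credence)

print(with_dissenter, "|", without_dissenter, "|", round(sharp_ev_gap, 3))
```

On these toy numbers four of the five elements favour donating, yet the verdict is indeterminate until the lone dissenter is removed or the elements are weighted. The weights carry the work, and choosing them is exactly the ‘imprecise impression’ the orthodox approach asks us to commit to.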

To further motivate, this (by my lights, costly) incomparability may prove pervasive and recalcitrant in cases of consequentialist cluelessness, for reasons related to the challenge of belief inertia.

A classical example of belief inertia goes like this: suppose a coin of unknown bias. It seems rationally permissible for one’s representor on the probability of said coin landing heads to be (0,1).[6] Suppose one starts flipping this coin. No matter the number of coin flips (and how many land heads), the representor on the posterior seems stuck to (0,1): for any element in this posterior representor, for any given sequence of observations, we can find an element in the prior representor which would update to it.[7]
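One way to see the inertia is to model each element of the representor as a Beta prior over the coin’s bias. That modelling choice is an assumption of this sketch, not something the argument above commits to, and `prior_updating_to` is a hypothetical helper name. For any target posterior mean strictly between 0 and 1, and any observed sequence, the helper constructs a Beta prior that updates to exactly that value, so the set of posterior means never shrinks.

```python
from fractions import Fraction

def posterior_mean(a, b, heads, tails):
    """Posterior mean of P(heads) for a Beta(a, b) prior after the observed flips."""
    return (a + heads) / (a + b + heads + tails)

def prior_updating_to(target, heads, tails):
    """Find Beta(a, b) whose posterior mean is exactly `target` (0 < target < 1).

    With a + b = k, the condition a + heads = target * (k + n) fixes a.
    We double k until both parameters are positive; a stubborn enough
    prior always exists.
    """
    n = heads + tails
    k = Fraction(1)
    while True:
        a = target * (k + n) - heads
        b = k - a
        if a > 0 and b > 0:
            return a, b
        k *= 2

heads, tails = 90, 10  # a long run of mostly heads

# A single sharp (uniform) prior moves decisively towards heads...
uniform_posterior = posterior_mean(Fraction(1), Fraction(1), heads, tails)

# ...but the representor still contains an element that ends up at 0.1.
a, b = prior_updating_to(Fraction(1, 10), heads, tails)
stubborn_posterior = posterior_mean(a, b, heads, tails)

print(float(uniform_posterior), float(stubborn_posterior))
```

The stubborn prior needs ever more prior ‘weight’ (a larger k) as data accumulates, but a representor spanning all of (0,1) without restriction has no grounds to exclude it.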

This problem is worse for propositions where we anticipate receiving very limited further evidence. Suppose (e.g.) we take ourselves to be deeply uncertain on the proposition “AMF increases population growth”. Following the spirit of imprecise approach, we offer a representor with at least one element either side of 0.5. Suppose one study, then several more, then a systematic review emerges which all find that AMF lives saved do translate into increased population growth. There’s no guarantee all elements of our representor, on this data, will rise above 0.5 - it looks permissible for us to have included in our representor a credence function which would not do this (studies and systematic reviews are hardly infallible). If this proposition lies upstream of enough of the expected impact, such a representor entails we will never arrive at an answer as to whether donations to AMF are better than nothing.

For many of the other propositions subject to cluelessness (e.g. “A larger population increases existential risk”) we can only hope to acquire much weaker evidence than sketched above. Credence functions that can remain resiliently ‘one side or the other’ of 0.5[8] in the face of this evidence again seem at least permissible (if not reasonable) to include in our representor.[9] Yet doing so makes for pervasive and persistent incomparability: including a few mildly stubborn credence functions in some judiciously chosen representors can entail effective altruism from the longtermist perspective is a fool’s errand. Yet this seems false - or, at least, if it is true, it is not true for this reason.[10]

Through a representor darkly

A related challenge is we have very murky access to what our representor either is or should be. A given state of (imprecise) uncertainty could be plausibly described by very large numbers of candidate representors. As decision rules on representors tend to be exquisitely sensitive to which elements they contain, it may be commonplace that (e.g.) action a is recommended over a` given representor R, but we can counter-propose R`, no less reasonable by our dim lights of introspective access, which holds a and a` to be incomparable.[11]

All the replies here look costly to me. One could ‘go meta’ and apply the decision rules to the superset of all credence functions that are a member of at least one admissible representor[12] (or perhaps devise some approach to aggregate across a family of representors), but this seems likely to amplify the problems of incomparability and murky access that apply to the composition of a single representor.[13] Perhaps theory will be able to offer tools to supplement internal plausibility to assist us in picking the ‘right’ representor (although this seems particularly far off for ‘natural language’ propositions cluelessness tends to concern). Perhaps we can work backwards from our intuitions about when actions should be incomparable or not to inform what our representor should look like, although reliance on working backwards like this raises the question as to what value - at least prudentially - imprecise credences have as a response to uncertainty.

Another alternative for the imprecise approach is to go on the offensive: orthodoxy faces a similar problem. Any given sharp representation I offer to represent my uncertainty is also susceptible to counter-proposals which will seem similarly appropriate, and in some of these cases the overall judgement will prove sensitive to which representation I use. Yet although a similar problem, it is much less in degree: even heavy-tailed distributions are much less sensitive to ‘outliers’ than representors, and orthodox approaches have more resources available to aggregate and judge between a family of precise representations.

Minimally clueless forecasting

A natural test for approaches to uncertainty is to judge them by their results. For consequentialist cluelessness, this is impossible: there is no known ground truth of long-run consequences to judge against. Yet we can assess performance in nearby domains, and I believe this assessment can be adduced in favour of orthodox approaches versus imprecise ones.

Consider a geopolitical forecasting question, such as: “Before 1 January 2019, will any other EU member state [besides the UK] schedule a referendum on leaving the EU or the eurozone?” This question opened on the Good Judgment Open on 16 Dec 2017. From more than a year out, there would seem plenty to recommend imprecision: there would be 27 countries, each with their complex political context, several of which had elections during this period, and a year is a long time in politics. Given all of this, would an imprecise approach not urge us that it is unwise to give a (sharp) estimate of the likelihood of this event occurring within a year?

Yet (despite remaining all-but-ignorant of these particulars) I still offered an estimate of 10% on Dec 17. As this didn’t happen, my Brier score was a (pretty good) 0.02 - although still fractionally worse than the median forecaster for this question (0.019).[14] If we instrumented this with a bet (e.g. “Is it better for me to bet donation money on this not occurring at a given odds?”), I would fare better than my “imprecise/incomparable” counterpart. Depending on how wide their representor was, they could not say taking a bet at evens or 5:1 (etc.) was better than not doing so. By tortured poker analogy, my counterpart’s approach leads them to play much too tightly, leaving value on the table an orthodox approach can reliably harvest.[15]
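The arithmetic behind these figures can be sketched as follows. The scoring convention is an assumption: the numbers quoted in this section (0.02 for a 10% forecast on an event that did not happen, with better-than-chance scores sitting well below 0.5) fit the original two-category Brier score, where a permanent 50/50 guess scores 0.5. The sketch scores a single forecast, whereas a forecasting platform may average scores over the days a question is open. The counterpart’s representor and the even-odds stake are made up for illustration.

```python
from typing import List

def brier_two_category(p_yes: float, happened: bool) -> float:
    """Squared error summed over both outcomes: 0 is perfect, 2 is worst."""
    outcome = 1.0 if happened else 0.0
    return (p_yes - outcome) ** 2 + ((1 - p_yes) - (1 - outcome)) ** 2

def ev_of_betting_no(p_yes: float, stake: float, win: float) -> float:
    """Expected profit from risking `stake` to win `win` if the event does not occur."""
    return (1 - p_yes) * win - p_yes * stake

def maximality_takes_bet(elements: List[float], stake: float, win: float) -> bool:
    """Under maximality, the bet beats abstaining only if every element agrees."""
    return all(ev_of_betting_no(p, stake, win) > 0 for p in elements)

my_score = brier_two_category(0.10, happened=False)
coin_flip_score = brier_two_category(0.50, happened=False)

# Even-odds bet against the referendum: risk 1 unit to win 1 unit.
sharp_ev = ev_of_betting_no(0.10, stake=1.0, win=1.0)

# Hypothetical wide representor for the imprecise counterpart.
counterpart = [0.05, 0.10, 0.30, 0.60]
counterpart_bets = maximality_takes_bet(counterpart, stake=1.0, win=1.0)

print(round(my_score, 3), coin_flip_score, round(sharp_ev, 2), counterpart_bets)
```

On these numbers the sharp forecaster sees a clearly favourable bet, while the single element above 0.5 leaves the counterpart unable to say the bet is better than abstaining.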

Some pre-emptive replies:

First, showing a case where I (or the median forecaster) got it right means little: my counterpart may leave ‘easy money’ on the table when the precisification was right, yet not get cleaned out when the precisification was wrong. Yet across my forecasts (on topics including legislation in particular countries, election results, whether people remain in office, and property prices - _all_ of which I know very little about), I do somewhat better than the median forecaster, and substantially better than chance (Brier ~ 0.23). Crucially, the median forecaster also almost always does better than chance (~ 0.32 for those who answered the same questions as I) - which seems the analogous consideration for cluelessness given our interest is in objective rather than relative accuracy.[16] That the imprecise/incomparable approach won’t recommend taking ‘easy money’ seems to be a general trend rather than a particular case.

Second, these uncertainties are ‘easier’ than the examples of consequentialist cluelessness. Yet I believe the analogy is fairly robust, particularly with respect to ‘snap judgements’. There are obvious things one can investigate to get a better handle on the referendum question above: one could look at the various political parties standing in each country, see which wanted to hold such a referendum, and look at their current political standing (and whether this was likely to change over the year). My imprecise/incomparable counterpart, on consulting these, could winnow their representor so (by the maximality rule) they may be recommended to take rather than refrain from even-odds or tighter bets.

Yet I threw out an estimate without doing any of these (and I suspect the median forecaster was not much more diligent). Without this, there seems much to recommend including in my representor at least one element with P>0.5 (e.g. “I don’t know much about the Front National in France, but they seem a party who may want to leave the EU, and one which has had political success, and I don’t know when the next presidential election is - and what about all the other countries I know even less about?”). As best as I can tell, these snap judgements (especially from superforecasters,[17] but also from less trained or untrained individuals) still comfortably beat chance.

Third, these geopolitical forecasting questions generally have accessible base rates or comparison classes (the reason I threw out 10% the day after the referendum question opened was mostly this). Not so for consequentialist cluelessness - all of the questions about trends in long-term consequences are yet to resolve,[18] and so we have no comparison class to rely on for (say) whether greater wealth helps the future go better or otherwise. Maybe orthodox approaches are fine when we can moor ourselves to track records, but they are inadequate when we cannot and have to resort to extrapolation fuelled by analogy and speculation - such as cases of consequentialist cluelessness.

Yet in practice forecasting often takes one far afield from accessible base rates. Suppose one is trying to work out (in early 2019) whether Mulvaney will last the year as White House Chief of Staff. One can assess turnover of White House staff, even turnover of staff in the Trump administration - but how to balance these to Mulvaney’s case in particular (cf. ‘reference class tennis’)? Further suppose one gets new information (e.g. media rumours of him being on ‘shaky ground’): this should change one’s forecast, but by how much? (from 10% to 11%, or to 67%?) There seems to be a substantially similar ‘extrapolation step’ here.

In sum: when made concrete, the challenges of geopolitical forecasting are similar to those in consequentialist cluelessness. In both:

- The information we have is only connected to what we care about through a chain of very uncertain inferences (e.g.[19] “This report suggests that Trump is unhappy with Mulvaney, but what degree of unhappiness should we infer given this is filtered to us from anonymous sources routed through a media report? And at what rate of exchange should this nebulous ‘unhappiness’ be cashed out into probability of being dismissed?”).

- There are multiple such chains (roughly corresponding to considerations which bear upon the conclusion), which messily interact with one another (e.g. “This was allegedly prompted by the impeachment hearing, so perhaps we should think Trump is especially likely to react given this speaks to Mulvaney’s competence at an issue which is key to Trump - but maybe impeachment itself will distract or deter Trump from staff changes?”)

- There could be further considerations we are missing which are more important than those identified.

- Nonetheless, we aggregate all of these considerations to give an all-things-considered crisp expectation.

Empirically, with forecasting, people are not clueless. When they respond to pervasive uncertainty with precision, their crisp estimates are better than chance, and when they update (from one crisp value to another) based on further information (however equivocal and uncertain it may be) their accuracy tends to improve.[20] Cases of consequentialist cluelessness may differ in degree, but not (as best as I can tell) in kind. In the same way our track record of better-than-chance performance warrants us to believe our guesses on hard geopolitical forecasts, it also warrants us to believe a similar cognitive process will give ‘better than nothing’ guesses on which actions tend to be better than others, as the challenges are similar between both.[21]

Credal resilience and ‘value of contemplation’

Suppose I, on seeing the evidence AMF is one of the most effective charities (as best as I can tell) for saving lives, resolve to make a donation to it. Andreas catches me before I make the fateful click and illustrates all the downstream stakes I, as a longtermist consequentialist, should also contemplate - as these could make my act of donation much better or much worse than I first supposed.

A reply along the lines of, “I have no idea what to think about these things, so let’s assume they add up to nothing” is unwise. It is unwise as (accord Mogensen and others) I cannot simply ‘assume away’ considerations to which the choiceworthiness of my action is sensitive. I have to at least think about them.

Suppose instead I think about it for a few minutes and reply, “On contemplating these issues (and the matter more generally), my best guess is the downstream consequences in aggregate are in perfect equipoise - although I admit I am highly uncertain on this - thus the best guess for the expected value of my donation remains the same.”

This is also unwise, but for a different reason. The error is not (pace cluelessness) on giving a crisp estimate for a matter I should still be profoundly uncertain about: if I hopped into a time machine and spent a thousand years mulling these downstream consequences over, and made the same estimate (and was no less uncertain about it); or for some reason I could only think about it for those few minutes, I should go with my all-things-considered best guess. Yet the error is that in these circumstances, it seems I should be thinking about these downstream consequences for much longer than a few minutes.

This rephrases ‘value of information’ (perhaps better ‘contemplation’).[22] Why this seems acutely relevant here is precisely the motivation for consequentialist cluelessness: when the overall choiceworthiness of our action proves very sensitive to considerations we are very uncertain about, reducing our uncertainty about them will often be a better use of our efforts than acting on our best guess.

Yet in making wise decisions about how to allocate time to contemplate things further, we should factor in ‘tractability’. Some propositions, although high-stakes, might be those in which we are resiliently uncertain, and so effort trying to improve our guesswork is poorly spent. Others, where our credences are fragile (‘non-resiliently uncertain’) are more worthwhile targets for investigation.[23]

I take the distinction between ‘simple’ and ‘complex’ cluelessness to be mainly this. Although vast consequences could hang in the balance with trivial acts (e.g. whether I click ‘donate now’ now or slightly later could be the archetypal ‘butterfly wing’ which generates a hurricane, or how trivial variations in behaviour might change the identity of a future child - and then many more as this different child and their descendants ‘scramble’ the sensitive identity-determining factors of other conceptions), we rule out further contemplation of ‘simple cluelessness’ because it is intractable. We know enough to predict that the ex post ‘hurricane minimizing trivial movements’ won’t be approximated by simple rules (“By and large, try and push the air molecules upwards”), but instead exquisitely particularized in a manner we could never hope to find out ex ante. Likewise, for a given set of ‘nearby conceptions’ to the actual one, we know enough to reasonably believe we will never have steers on which elements are better, or how - far causally upstream - we can send ripples in just the right way to tilt the odds in favour of better results.[24]

Yet the world is not only causal chaos. The approximate present can approximately determine the future: physics allows us to confidently predict a plane will fly without modelling to arbitrary precision all the air molecules of a given flight. Similarly, we might discover moral ‘levers on the future’ which, when pulled in the right direction, systematically tend to make the world better rather than worse in the long run.

‘Complex cluelessness’ draws our attention to such levers, where we know little about which direction they are best pulled, but (crucially) it seems we can improve our guesswork. Whether (say) economic growth is good in the long run is a formidably difficult question to tackle.[25] Yet although it is harder than (say) how many child deaths a given antimalarial net distribution can be expected to avert, it is not as hopelessly intractable as the best way to move my body to minimize the expected number of hurricanes. So even though the expected information per unit effort on the long-run impacts of economic growth will be lower than evaluating a charity like AMF, the much greater importance of this consideration (both in terms of its stakes and its wide applicability) makes this the better investment of our attention.[26]

Conclusion

Our insight into the present is poor, and deteriorates inexorably as we try to scry further and further into the future. Yet longtermist consequentialism urges us to evaluate our actions based on how we forecast this future to differ. Although we know little, we know enough that our actions are apt to have important long term ramifications, but we know very little about what those will precisely be, or how they will precisely matter. What ought we to do?

Yet although we know little, we are not totally clueless. I am confident the immediate consequences of donations to AMF are better than those to Make-a-Wish. I also believe the consequences simpliciter are better for AMF donations than Make-a-Wish donations, although this depends very little on the RCT-backed evidence base, and much more on a weighted aggregate of poorly-educated guesswork on ‘longtermist’ considerations (e.g. that communities with fewer child deaths fare better in naive terms, and the most notable dividends of this with longterm relevance - such as economic growth in poorer countries - systematically tend to push the longterm future in a better direction) which I am much less confident in.

This guesswork, although uncertain and fragile, nonetheless warrants my belief that AMF-donations are better than Make-a-Wish ones. The ‘standard of proof’ for consequentialist decision making is not ‘beyond a reasonable doubt’, but a balance of probabilities, and no matter how lightly I weigh my judgement aggregating across all these recondite matters, it is sufficient to tip the scales if I have nothing else to go on. Withholding judgement will do worse if, to any degree, my tentative guesswork tends towards the truth. If I had a gun to my head to allocate money to one or the other right now, I shouldn’t flip a coin.

Without the gun, another option I have is to try and improve my guesswork. To best improve my guesswork, I should allocate my thinking time to uncertainties which have the highest yield - loosely, a multiple of their ‘scale’ (how big an influence they play on the total value) and ‘tractability’ (how much I can improve my guess per unit effort).
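The loose ‘scale times tractability’ heuristic above can be written out as a toy prioritisation. All the numbers are invented purely to illustrate the shape of the argument (they are not estimates from this essay or anywhere else), and `Uncertainty` and `research_yield` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Uncertainty:
    name: str
    scale: float         # how much of the total value rides on it (made-up units)
    tractability: float  # expected improvement in my guess per unit effort (made up)

    @property
    def research_yield(self) -> float:
        """Loose 'yield' of thinking time: scale multiplied by tractability."""
        return self.scale * self.tractability

candidates = [
    # Simple cluelessness: vast stakes, but no prospect of improving the guess.
    Uncertainty("exact timing of my donation click", scale=100.0, tractability=0.0),
    # Complex cluelessness: large stakes, hard but not hopeless to investigate.
    Uncertainty("long-run effect of economic growth", scale=50.0, tractability=0.05),
    # Easier, well-evidenced question, but a small share of total value.
    Uncertainty("child deaths averted per net distribution", scale=1.0, tractability=0.5),
]

ranked = sorted(candidates, key=lambda u: u.research_yield, reverse=True)
for u in ranked:
    print(f"{u.research_yield:5.2f}  {u.name}")
```

With these illustrative inputs the hard-but-tractable long-run question ranks first, the easy short-run question second, and the intractable one last. The ordering depends entirely on the assumed inputs, and it flips if the long-run question is judged less tractable by more than the gap in scale.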

Some uncertainties, typically those of ‘simple’ cluelessness, score zero by the latter criterion: I can see from the start I will not find ways to intervene on causal chaos to make it (in expectation) better, and so I leave them as high variance (but expectation neutral) terms which I am indifferent towards. If I were clairvoyant on these matters, I expect I would act very differently, but I know I can’t get any closer than I already am.

Yet others, those of complex cluelessness, do not score zero on ‘tractability’. My credence in “economic growth in poorer countries is good for the longterm future” is fragile: if I spent an hour (or a week, or a decade) mulling it over, I would expect my central estimate to change, although my remaining uncertainty to be only a little reduced. Given this consideration has much greater impact on what I ultimately care about, time spent on this looks better than time further improving the estimate of immediate impacts like ‘number of children saved’. It would be unwise to continue delving into the latter at the expense of the former. Would that we do otherwise.

Acknowledgements

Thanks to Andreas Mogensen, Carl Shulman, and Phil Trammell for helpful comments and corrections. Whatever merits this piece has are mainly owed to their insight. Its failures are owed to me (and many to lacking time and ability to address their criticism better).

[1] Obviously, the impact of population size on technological progress also applies to many other questions besides the utility of AMF donations.

[2] (Owed to/inspired by Mogensen) One issue under the hood here is whether precision is descriptively accurate or pragmatically valuable. Even if (in fact) we cannot describe our own uncertainty as (e.g.) a distribution to arbitrary precision, we fare better if we act as-if this were the case (the opposite is also possible). My principal interest is the pragmatic one: that agents like ourselves make better decisions by attempting EV-maximization with precisification than they would with imprecise approaches.

[3] One appeal I see is the intuition that for at least some deeply mysterious propositions, we should be rationally within our rights to say, “No, really, I don’t have any idea”, rather than - per the orthodox approach - taking oneself to be rationally obliged to offer a distribution or summary measure to arbitrary precision.

[4] This point, like many of the other (good) ones, I owe to conversation with Phil Trammell.

[5] And orthodox approaches can provide further resources to grapple with this problem: we can perform sensitivity analyses with respect to factors implied by our putative distribution. In cases where we get a similar answer ‘almost all ways you (reasonably) slice it’, we can be more confident in making our decision in the teeth of our uncertainty (and vice versa). Similarly, this quantitative exercise in setting distributions and central measures can be useful for approaching reflective equilibrium on the various propositions which bear upon a given conclusion.

[6] One might quibble we might have reasons to rule out extremely strong biases (e.g. P(heads) = 10^-20), but nothing important turns on this point.

[7] Again the ‘engine’ of this problem is the indeterminate weights on the elements in the set. Orthodoxy has also to concede that, given a prior ranging between (0,1) for bias, given any given sequence of heads and tails, any degree of bias (towards heads or tails) is still possible. Yet, as the prior expressed the relative plausibility of different elements, it can say which values(/intervals) have gotten more or less likely. Shorn of this, it is also inert.
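A minimal sketch of the orthodox half of this point, assuming a uniform prior over the coin's bias on (0, 1) and a hypothetical run of 8 heads and 2 tails (neither assumption is from the text above). `interval_mass` is an illustrative helper that uses plain numerical integration; it shows that no interval of bias is ruled out, yet the prior lets us say which intervals have become more or less likely.

```python
def interval_mass(heads: int, tails: int, lo: float, hi: float, steps: int = 10_000) -> float:
    """Posterior probability that the bias P(heads) lies in [lo, hi].

    Assumes a uniform prior on (0, 1), so the unnormalised posterior
    density at p is p**heads * (1 - p)**tails. Integrates with the
    midpoint rule (an illustrative choice, not a library API).
    """
    def integrate(a: float, b: float) -> float:
        width = (b - a) / steps
        total = 0.0
        for i in range(steps):
            p = a + (i + 0.5) * width
            total += (p ** heads) * ((1 - p) ** tails)
        return total * width

    return integrate(lo, hi) / integrate(0.0, 1.0)


# Hypothetical data: 8 heads, 2 tails.
prior_high = interval_mass(0, 0, 0.6, 1.0)      # mass on "biased towards heads" before any flips
posterior_high = interval_mass(8, 2, 0.6, 1.0)  # same interval after the flips
posterior_low = interval_mass(8, 2, 0.0, 0.2)   # strong tails bias: still possible, but less likely

print(prior_high, posterior_high, posterior_low)
```

A bare set of candidate biases with no weights has nothing analogous to `interval_mass` to update, which is the sense in which it is inert.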

[8] I don’t think the picture substantially changes if the proposition changes from “Does a larger population increase existential risk” to “What is the effect size of a larger population on existential risk?”

[9] Winnowing our representor based on its resilience to the amount of evidence we can expect to receive looks ad hoc.

[10] A further formidable challenge is how to address ‘nested’ imprecision: what to do if we believe we should be imprecise about P(A) and P(B|A). One family of cases that spring to mind is moral uncertainty: our balance of credence across candidate moral theories, and (conditional on a given theory) which option is the best will seem to be things that ‘fit the bill’ for an imprecise approach. Naive approaches seem doomed to incomparability, as the total range of choice-worthiness will inevitably expand the longer the conditional chain - and, without density, we seem stuck in the commonplace where these ranges overlap.

[11] Earlier remarks on the challenge of mildly resilient representor elements provide further motivation. Consider Mogensen’s account of how imprecision and maximality will not lead to a preference for AMF over Make-A-Wish given available evidence. After elaborating the profound depth of our uncertainty around the long-run impacts of AMF, he writes (p. 16-17):

[I]t was intended to render plausible the view that the evidence is sufficiently ambiguous that the probability values assigned by the functions in the representor of a rational agent to the various hypotheses that impact on the long-run impact of her donations ought to be sufficiently spread out that some probability function in her representor assigns greater expected moral value to donating to the Make-A-Wish Foundation.

[...]

Proving definitively that your credences must be so spread out as to include a probability function of a certain kind is therefore not in general within our powers. Accordingly, my argument rests in large part on an appeal to intuition. But the intuition to which I am appealing strikes me as sufficiently forceful and sufficiently widely shared that we should consider the burden of proof to fall on those who deny it.

Granting the relevant antecedents, I share this intuition. Yet how this intuitive representor should update in response to further evidence is mysterious to me. I can imagine some that would no longer remain ‘sufficiently spread out’ if someone took a month or so to consider all the recondite issues Mogensen raises, and concluded, all things considered, that AMF has better overall long-run impact than Make-A-Wish; I can imagine others that would remain ‘sufficiently spread out’ even in the face of person-centuries of work on each issue raised. This (implicit) range spans multitudes of candidate representors.

[12] Mogensen notes (fn.4) the possibility a representor could be a fuzzy set (whereby membership is degree-valued), which could be a useful resource here. One potential worry is this invites an orthodox-style approach: one could weigh each element by its degree of membership, and aggregate across them.

[13] Although recursive criticisms are somewhat of a philosophical ‘cheap shot’, I note that “What representor should I use for p?” seems (for many ‘p’s) the sort of question which the motivations of imprecise credences (e.g. the depth of our uncertainty, (very) imperfect epistemic access) recommend an imprecise answer.

[14] I think what cost me was I didn’t update this forecast as time passed (and so the event became increasingly unlikely). In market terms, I set a good price on Dec 17, but I kept trading at this price for the rest of the year.

[15] Granted, the imprecise/incomparable approach is not recommending refraining from the bet, but saying it has no answer as to whether taking the bet or not doing so is the better option. Yet I urge one should take these bets, and so this approach fails to give the right answer.

[16] We see the same result with studies on wholly untrained cohorts (e.g. undergraduates). E.g. Mellers et al. (2017).

[17] In conversation, one superforecaster I spoke to suggested they take around an hour to give an initial forecast on a question: far too little time to address the recondite matters that bear upon a typical forecasting question.

[18] Cf. The apocryphal remark about it being ‘too early to tell’ what the impact of the French Revolution was.

[19] For space I only illustrate for my example - Mogensen (2019) ably explains the parallel issues in the ‘AMF vs. Make-a-Wish’ case.

[20] Although this is a weak consideration, I note approaches ‘in the spirit’ of an imprecise/incomparable approach, when applied to geopolitical forecasting, are associated with worse performance: Rounding estimates (e.g. from a percentage to n bands across the same interval) degrades accuracy - especially for the most able forecasters; those who commit to making (precise) forecasts and ‘keeping score’ improve more than those who do less of this. Cf. Tetlock’s fireside chat:

Some people would say to us, “These are unique events. There's no way you're going to be able to put probabilities on such things. You need distributions. It’s not going to work.” If you adopt that attitude, it doesn't really matter how high your fluid intelligence is. You're not going to be able to get better at forecasting, because you're not going to take it seriously. You're not going to try. You have to be willing to give it a shot and say, “You know what? I think I'm going to put some mental effort into converting my vague hunches into probability judgments. I'm going to keep track of my scores, and I'm going to see whether I gradually get better at it.” The people who persisted tended to become superforecasters.

[21] Skipping ahead, Trammell (2019) also sketches a similar account to ‘value of contemplation’ which I go on to graffiti more poorly below (p 6-7):

[W]e should ponder until it no longer feels right to ponder, and then to choose one of the the acts it feels most right to choose. Lest that advice seem as vacuous as “date the person who maximizes utility”, here is a more concrete implication. If pondering comes at a cost, we should ponder only if it seems that we will be able to separate better options from worse options quickly enough to warrant the pondering—and this may take some time. Otherwise, we should choose immediately. When we do, we will be choosing literally at random; but if we choose after a period of pondering that has not yet clearly separated better from worse, we will also be choosing literally at random.

The standard Bayesian model suggests that if we at least take a second to write down immediate, baseless “expected utility” numbers for soap and pasta, these will pick the better option at least slightly more often than random. The cluelessness model sketched above predicts (a falsifiable prediction!) that there is some period—sometimes thousandths of a second, but perhaps sometimes thousands of years—during which these guesses will perform no better than random.

I take the evidence from forecasting to suggest that orthodoxy can meet the challenge posed in the second paragraph. As it seems people (especially the most able) can make ‘rapid fire’ guesses on very recondite matters that are better than chance, we can argue by analogy that the period before guesswork manages to tend better than chance, even for the hard questions of complex cluelessness, tends to be more towards the ‘thousandths of a second’ than ‘thousands of years’.

[22] In conversation Phil Trammell persuades me it isn’t strictly information in the classic sense of VoI which is missing. Although I am clueless with respect to poker, professional players aren’t, and yet VoI is commonplace in their decision making (e.g. factoring in whether they want to ‘see more cards’ in terms of how aggressively to bet). Contrariwise I may be clueless whilst having all the relevant facts, if I don’t know how to ‘put them together’.

[23] Naturally, our initial impressions here might be mistaken, but can be informed by the success of our attempts to investigate (cf. multi-armed bandit problems).

[24] Perhaps one diagnostic for ‘simple cluelessness’ could be this sensitivity to ‘causal jitter’.

[25] An aside on generality and decomposition - relegated to a footnote as the natural responses seem sufficient to me: We might face a trade-off between broader and narrower questions on the same topic: is economic growth in country X a good thing versus economic growth generally, for example. In deciding to focus on one or the other we should weigh up their relative tractability (on its face, perhaps the former question is easier), applicability to the decision (facially, if the intervention is in country X, the more particular question is more important to answer - even if economic growth was generally good or bad, country X could be an exception), and generalisability (the latter question offers us a useful steer for other decisions we might have to make).

[26] If it turned out it was hopelessly intractable after all, that, despite appearances, there are not trends or levers - or perhaps we find there are many such levers connected to one another in an apparently endless ladder of plausibly sign-inverting crucial considerations - then I think we can make the same reply (roughly indifference) that we make to matters of simple cluelessness.

At current, I straightforwardly disagree with both of these claims; I do not think it is a good use of 'EA time' to try and pin down exactly what the long term (say >30 years out) effects of AMF donations are, or for that matter any other action originally chosen for its short term benefits (such as...saving a drowning child). I feel very confused about why some people seem to think it is a good use of time, and would appreciate any enlightenment offered on this point.

The below is somewhat blunt, because at current cluelessness arguments appear to me to be almost entirely without decision-relevance, but at the same time a lot of people who I think are smarter than me appear to think they are relevant and interesting for something and someone, and I'm not quite clear what the something or who the someone is, so my confidence is necessarily more limited than the tone would suggest. Then again, this thought is clearly not limited to me, e.g. Buck makes a very similar point here. I'd really love to get an example, even a hypothetical one, of where any of this would actually matter at the level of making donation/career/etc. decisions. Or if everybody agrees it would not impact decisions, an explanation of why it is a good idea to spend any 'EA time' on this at all.

***

For the challenge of complex cluelessness to have bite in the case of AMF donations, it seems to me that we need something in the vicinity of these two claims:

In short, I claim that once we truly believe (1) and (2) AMF would no longer be on our list of donation candidates.

For example, suppose you go through your suggested process of reflection, come to the conclusion AMF will in fact tractably boost economic growth in poorer countries, that such growth is one of the best ways to improve the longterm future, and that AMF's impact on growth is morally far more important than the considerations which motivated the choice of AMF in the first place, namely its impact on child mortality in the short term. Satisfied that you have now met the challenge of cluelessness, should you go ahead and click the 'donate' button?

I think you obviously shouldn't. It seems obvious to me that at minimum you should now go and find an intervention that was explicitly chosen for the purpose of boosting long term growth by the largest amount possible. Since AMF was apparently able to predictably and tractably impact the long term via incidentally impacting growth, it seems like you should be able to do much better if you actually try, and for the optimal approach to improve the long term future to be increasing growth via donating to AMF would be a prime example of Surprising and Suspicious Convergence. In fact, the opening quote from that piece seems particularly apt here:

Similar thoughts would also seem to apply to other possible side-effects of AMF donations; population growth impacts, impacts on animal welfare (wild or farmed), etc. In no case do I have reason to think that AMF is a particularly powerful lever to move those things, and so if I decide that any of them is the Most Important Thing then AMF would not even be on my list of candidate interventions.

Indeed, none of the people I know who think that the far future is massively morally important and that we can tractably impact it focus their do-gooding efforts on AMF, or anything remotely like AMF. To the extent they give money to AMF-like things, it is for appearances' sake, for personal comfort, or a hedge against their central beliefs about how to do good being very wrong (see e.g. this comment by Aaron Gertler). As a result, cluelessness arguments appear to me to be addressing a constituency that doesn't actually exist, and attempts to resolve cluelessness are a 'solution looking for a problem'.

If cluelessness arguments are intended to have impact on the actual people donating to short term interventions as a primary form of doing good, they need to engage with the actual disagreements those people have, namely the questions of whether we can actually predict the size/direction of the longterm consequences despite the natural lack of feedback loops (see e.g. KHorton's comment here or MichaelStJules' comment here), or the empirical question of whether the impacts of our actions do in fact wax or wane over time (see e.g. reallyeli here), or the legion of potential philosophical objections.

Instead, cluelessness arguments appear to me to assume away all the disagreements, and then say 'if we assume the future is massively morally relevant compared to the present, that we can tractably and predictably impact said future, and a broadly consequentialist approach, one should be longtermist in order to maximise good done'. Which is in fact true, but unexciting once made clear.

Great comment. I agree with most of what you've said. Particularly that trying to uncover if donating to AMF is going to be a great way to improve the long-run future seems a fool's errand.

This is where my quibble comes in:

I don't think this is true. Cluelessness arguments intend to demonstrate that we can't be confident that we are actually doing good when we donate to say GiveWell charities, by noting that there are important indirect/long-run effects that we have good reason to expect will occur, and have good reason to suspect are sufficiently important such that they could change the sign of GiveWell's final number if properly included in their analysis. It seems to me that this should have an impact on any person donating to GiveWell charities unless for some reason these people just don't care about indirect/long-run effects of their actions (e.g. they have a very high rate of pure time preference). In reality though you'll be hard-pressed to find EAs who don't think indirect/long-run effects matter, so I would expect cluelessness arguments to have bite for many EAs.

I don't think you need to demonstrate to people that we can tractably influence the far future for them to be impacted by cluelessness arguments. It is certainly possible to think cluelessness arguments are problematic for justifying giving to GiveWell charities, and also to think we can't tractably affect the far future. At that point you may be left in a tough place but, well, tough! You might at that point be forgiven for giving up on EA entirely as Greaves notes.

(By the way I recall us having a similar convo on Facebook about this, but this is certainly a better place to have it!)

If we have good reason to expect important far future effects to occur when donating to AMF, important enough to change the sign if properly included in the ex ante analysis, that is equivalent to (actually somewhat stronger than) saying we can tractably influence the far future, since by stipulation AMF itself now meaningfully and predictably influences the far future. I currently don't think you can believe the first and not the second, though I'm open to someone showing me where I'm wrong.

There's an important and subtle nuance here.

Note that complex cluelessness only arises when we know something about how the future will be impacted, but don't know enough about these foreseeable impacts to know if they are net good or bad when taken in aggregation. If we knew literally nothing about how the future would be impacted by an intervention this would be a case of simple cluelessness, not complex cluelessness, and Greaves argues we can ignore simple cluelessness.

What Greaves argues is that we don't in fact know literally nothing about the long-run impacts of giving to GiveWell charities. For example, Greaves says we can be pretty sure there will be long-term population effects of giving to AMF, and that these effects will be very important in the moral calculus (so we know something). But she also says, amongst other things, that we can't be sure even if the long-run effect on population will be positive or negative (so we clearly don't know very much).

So yes we are in fact predictably influencing the far future by giving to AMF, in that we know we will be affecting the number of people who will live in the future. However, I wouldn't say we are influencing the far future in a 'tractable way' because we're not actually making the future better (or worse) in expectation, because we are utterly clueless. Making the far future better or worse in expectation is the sort of thing longtermists want to do and they claim there are some ways to do so.

If we aren't making the future better or worse in expectation, it's not impacting my decision whether or not to donate to AMF. We can then safely ignore complex cluelessness for the same reason we would ignore simple cluelessness.

Cluelessness only has potential to be interesting if we can plausibly reduce how clueless we are with investigation (this is a lot of Greg's point in the OP); in this sense the simple/complex difference Greaves identifies is not quite the action-relevant distinction. If, having investigated, far future impacts meaningfully alter the AMF analysis, this is precisely because we have decided that AMF meaningfully impacts the far future in at least one way that is good or bad in expectation, i.e. we can tractably impact the far future.

Put simply, if we cannot affect the far future in expectation at all, then logically AMF cannot affect the far future in expectation. If AMF does not affect the far future in expectation, far future effects need not concern its donors.

Saying that the long-run effects of giving to AMF are not positive or negative in expectation is not the same as saying that the long-run effects are zero in expectation. The point of complex cluelessness is that we don't really have a well-formed expectation at all because there are so many foreseeable complex factors at play.

In simple cluelessness there is symmetry across acts so we can say the long-run effects are zero in expectation, but in complex cluelessness we can't say this. If you can't say the long-run effects are zero in expectation, then you can't ignore the long-run effects.

I think all of this is best explained in Greaves' original paper.

I'm not sure how to parse this 'expectation that is neither positive nor negative or zero but still somehow impacts decisions' concept, so maybe that's where my confusion lies. If I try to work with it, my first thought is that not giving money to AMF would seem to have an undefined expectation for the exact same reason that giving money to AMF would have an undefined expectation; if we wish to avoid actions with undefined expectations (but why?), we're out of luck and this collapses back to being decision-irrelevant.

I have read the paper. I'm surprised you think it's well-explained there, since it's pretty dense. Accordingly, I won't pretend I understood all of it. But I do note it ends as follows (emphasis added):

And of course Greaves has since said that she does think we can tractably influence the far future, which resolves the conflict I'm pointing to anyway. In other words, I'm not sure I actually disagree with Greaves-the-individual at all, just with (some of) the people who quote her work.

I would put it as entertaining multiple probability distributions for the same decision, with different expected values. Even if you have ranges of (so not singly defined) expected values, there can still be useful things you can say.

Suppose you have 4 different acts with EVs in the following ranges:

I would prefer each of 2, 3 and 4 to 1, since they're all robustly positive, while 1 is not. 4 is also definitely better in expectation than 1 and 2 (according to the probability distributions we're considering), since its EV falls completely to the right of each's, so this means neither 1 nor 2 is permissible. Without some other decision criteria or information, 3 and 4 would both be permissible, and it's not clear which is better.

Thanks for the response, but I don't think this saves it. In the below I'm going to treat your ranges as being about the far future impacts of particular actions, but you could substitute for 'all the impacts of particular actions' if you prefer.

In order for there to be useful things to say, you need to be able to compare the ranges. And if you can rank the ranges ("I would prefer 2 to 1" "I am indifferent between 3 and 4", etc.), and that ranking obeys basic rules like transitivity, that seems equivalent to collapsing all the ranges to single numbers. Collapsing two actions to the same number is fine. So in your example I could arbitrarily assign a 'score' of 0 to action 1, a score of 1 to action 2, and scores of 2 to each of 3 and 4.

Then my decision rule just switches from 'do the thing with highest expected value' to 'do (one of) the things with highest score', and the rest of the argument is essentially unchanged: either every possible action has the same score or it doesn't. If some things have higher scores than others, then replacing a lower score action with a higher score action is a way to tractably make the far future better.

Therefore, claims that we cannot tractably make the far future better force all the scores among all actions being taken to be the same, and if the scores are all the same I think your scoring system is decision-irrelevant; it will never push for action A over action B.

Did I miss an out? It's been a while since I've had to think about weak orderings.

Ya, it's a weak ordering, so you can't necessarily collapse them to single numbers, because of incomparability.

[1, 1000] and [100, 105] are incomparable. If you tried to make them equivalent, you could run into problems, say with [5, 50], which is also incomparable with [1, 1000] but dominated by [100, 105].

[5, 50] < [100, 105]

[1, 1000] incomparable to the other two

If your set of options was just these 3, then, sure, you could say [100, 105] and [1, 1000] are equivalent since neither is dominated, but if you introduce another option which dominates one but not the other, that equivalence would be broken.
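To make the comparison above concrete, here is a minimal Python sketch. It assumes 'dominates' simply means one range lies wholly to the right of the other; the function names and option labels are hypothetical, and only the three ranges come from the example above.

```python
from typing import Dict, List, Tuple

Interval = Tuple[float, float]  # (lowest EV, highest EV) across the distributions considered


def dominates(a: Interval, b: Interval) -> bool:
    """True if range `a` lies wholly to the right of range `b`."""
    return a[0] > b[1]


def comparable(a: Interval, b: Interval) -> bool:
    """True if one of the two ranges dominates the other."""
    return dominates(a, b) or dominates(b, a)


def permissible(options: Dict[str, Interval]) -> List[str]:
    """Names of the options that no other option dominates."""
    return [
        name
        for name, ev in options.items()
        if not any(dominates(other, ev) for key, other in options.items() if key != name)
    ]


# The three ranges from the example; the labels are illustrative only.
options = {"wide": (1, 1000), "narrow": (100, 105), "low": (5, 50)}

print(dominates(options["narrow"], options["low"]))    # does [100, 105] dominate [5, 50]?
print(comparable(options["wide"], options["narrow"]))  # is [1, 1000] comparable with [100, 105]?
print(comparable(options["wide"], options["low"]))     # is [1, 1000] comparable with [5, 50]?
print(permissible(options))                            # options left undominated
```

If 'wide' were scored as equal to both 'narrow' and 'low', transitivity would force 'narrow' and 'low' to be equal too, which contradicts 'narrow' dominating 'low'. That is why the ordering cannot in general be collapsed to single numbers.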

I think there are two ways of interpreting "make the far future better":

1 implies 2, but 2 does not imply 1. It might be the case that none of the options look robustly better than doing nothing, but still some options are better than others. For example, writing their expected values as the difference with doing nothing, we could have:

and suppose specifically that our distributions are such that 2 always dominates 1, because of some correspondence between pairs of distributions. For example, although I can think up scenarios where the opposite might be true, it seems going out of your way to torture an animal to death (for no particular benefit) is dominated at least by killing them without torturing them. Basically, 1 looks like 2 but with extra suffering and the harms to your character.

In this scenario, we can't reliably make the world better, compared to doing nothing, but we still have that option 2 is better than option 1.

Thanks again. I think my issue is that I’m unconvinced that incomparability applies when faced with ranking decisions. In a forced choice between A and B, I’d generally say you have three options: choose A, choose B, or be indifferent.

Incomparability in this context seems to imply that one could be indifferent between A and B, prefer C to A, yet be indifferent between C and B. That just sounds wrong to me, and is part of what I was getting at when I mentioned transitivity. Curious if you have a concrete example where this feels intuitive?

For the second half, note I said among all actions being taken. If ‘business as usual’ includes action A which is dominated by action B, we can improve things by replacing A with B.

I think if you reject incomparability, you're essentially assuming away complex cluelessness and deep uncertainty. The point in this case is that there are considerations going in each direction, and I don't know how to weigh them against one another (in particular, no evidential symmetry). So, while I might just pick an option if forced to choose between A, B and indifferent, it doesn't reveal a ranking, since you've eliminated the option I'd want to give, "I really don't know". You could force me to choose among wrong answers to other questions, too.

B = business as usual / "doing nothing"

C = working on a cause you have complex cluelessness about, i.e. you're not willing to say it's better or worse than or equivalent to B (e.g. for me, climate change is an example)

A=C but also torturing a dog that was about to be put down anyway (or maybe generally just being mean to others)

I'm willing to accept that C>A, although I could see arguments made for complex cluelessness about that comparison (e.g. through the indirect effects of torturing a dog on your work, that you already have complex cluelessness about). Torturing a dog, however, could be easily dominated by the extra effects of climate change in A or C compared to B, so it doesn't break the complex cluelessness that we already had comparing B and C.

Some other potential examples here, although these depend on how the numbers work out.

That's really useful, thanks, at the very least I now feel like I'm much closer to identifying where the different positions are coming from. I still think I reject incomparability; the example you gave didn't strike me as compelling, though I can imagine it compelling others.

I would say it's reality that's doing the forcing. I have money to donate currently; I can choose to donate it to charity A, or B, or C, etc., or to not donate it. I am forced to choose and the decision has large stakes; 'I don't know' is not an option ('wait and do more research' is, but that doesn't seem like it would help here). I am doing a particular job as opposed to all the other things I could be doing with that time; I have made a choice and for the rest of my life I will continue to be forced to choose what to do with my time. Etc.

It feels intuitively obvious to me that those many high-stakes forced choices can and should be compared in order to determine the all-things-considered best course of action, but it's useful to know that this intuition is apparently not shared.

It's not so much that we should avoid doing it full stop, it's more that if we're looking to do the most good then we should probably avoid doing it because we don't actually know if it does good. If you don't have your EA hat on then you can justify doing it for other reasons.

I've only properly read it once and it was a while back. I just remember it having quite an effect on me. Maybe I read it a few times to fully grasp it, can't quite remember. I'd be quite surprised if it immediately clicked for me to be honest. I clearly don't remember it that well because I forgot that Greaves had that discussion about the psychology of cluelessness which is interesting.

Just to be clear I also think that we can tractably influence the far future in expectation (e.g. by taking steps to reduce x-risk). I'm not really sure how that resolves things.

I'm surprised to hear you say you're unsure you disagree with Greaves. Here's another quote from her (from here). I'd imagine you disagree with this?

If you think you can tractably impact the far future in expectation, AMF can impact the far future in expectation. At which point it's reasonable to think that those far future impacts could be predictably negative on further investigation, since we weren't really selecting for them to be positive. I do think trying to resolve the question of whether they are negative is probably a waste of time for reasons in my first comment, and it sounds like we agree on that, but at that point it's reasonable to say that 'AMF could be good or bad, I'm not really sure, because I've chosen to focus my limited time and attention elsewhere'. There's no deep or fundamental uncertainty here, just a classic example of triage leading us to prioritise promising-looking paths over unpromising-looking ones.

For the same reason, I don't see anything wrong with that quote from Greaves; coming from someone who thinks we can tractably impact the far future and that the far future is massively morally relevant, it makes a lot of sense. If it came from someone who thought it was impossible to tractably impact the future, I'd want to dig into it more.

On a slightly different note, I can understand why one might not think we can tractably impact the far future, but what about the medium-term future? For example it seems that mitigating climate change is a pretty surefire way to improve the medium-term future (in expectation). Would you agree with that?

If you accept that then you might also accept that we are clueless about giving to AMF based on its possible medium-term climate change impacts (e.g. maybe giving to AMF will increase populations in the near to medium term, and this will increase carbon emissions). What do you think about this line of reasoning?

Medium-term indirect impacts are certainly worth monitoring, but they have a tendency to be much smaller in magnitude than primary impacts being measured, in which case they don’t pose much of an issue; to the best of my current knowledge carbon emissions from saving lives are a good example of this.

Of course, one could absolutely think that a dollar spent on climate mitigation is more valuable than a dollar spent saving the lives of the global poor. But that’s very different to the cluelessness line of attack; put harshly it’s the difference between choosing not to save a drowning child because there is another pond with even more drowning children and you have to make a hard trolley-problem-like choice, versus choosing to walk on by because who even really knows if saving that child would be good anyway. FWIW, I feel like many people effectively arguing the latter in the abstract would not actually walk on by if faced with that actual physical situation, which if I’m being honest is probably part of why I find it difficult to take these arguments seriously; we are in fact in that situation all the time, whether we realise it or not, and if we wouldn’t ignore the drowning child on our doorstep we shouldn’t entirely ignore the ones half a world away...unless we are unfortunately forced to do so by the need to save even greater numbers / prevent even greater suffering.

Perhaps, although I wouldn't say it's a priori obvious, so I would have to read more to be convinced.

I didn't raise animal welfare concerns either which I also think are relevant in the case of saving lives. In other words I'm not sure you need to raise future effects for cluelessness worries to have bite, although I admit I'm less sure about this.

I certainly wouldn't walk on by, but that's mainly due to a mix of factoring in moral uncertainty (deontologists would think me the devil) and not wanting the guilt of having walked on by. Also I'm certainly not 100% sure about the cluelessness critique, so there's that too. The cluelessness critique seems sufficient to me to want to search for other ways than AMF to do the most good, but not to literally walk past a drowning child.

This makes some sense, but to take a different example, I've followed a lot of the COVID debates in EA and EA-adjacent circles, and literally not once have I seen cluelessness brought up as a reason to be concerned that maybe saving lives via faster lockdowns or more testing or more vaccines or whatever is not actually a good thing to do. Yet it seems obvious that some level of complex cluelessness applies here if it applies anywhere, and this is a case where simply ignoring COVID efforts and getting on with your daily life (as best one can) is what most people have done, and certainly not something I would expect to leave people struggling with guilt or facing harsh critique from deontologists.

As Greaves herself notes, such situations are ubiquitous, and the fact that cluelessness worries are only being felt in a very small subset of the situations should lead to a certain degree of skepticism that they are in fact what is really going on. But I don't want to spend too much time throwing around speculation about intentions relative to focusing on the object-level arguments made, so will leave this train of thought here.

To be fair I would say that taking the cluelessness critique seriously is still quite fringe even within EA (my poll on Facebook provided some indication of this).

With an EA hat on I want us to sort out COVID because I think COVID is restricting our ability to do certain things that may be robustly good. With a non-EA hat on I want us to sort out COVID because lockdown is utterly boring (although it actually got me into EA and this forum a bit more which is good) and I don't want my friends and family (or myself!) to be at risk of dying from it.

Most people have decided to obey lockdowns and be careful in how they interact with others, in order to save lives. In terms of EAs not doing more (e.g. donating money) I think this comes down to the regular argument of COVID not being that neglected and that there are probably better ways to do good. In terms of saving lives, I think deontologists require you to save a drowning child in front of you, but I'm not actually sure how far that obligation extends temporally/spatially.

This is interesting and slightly difficult to think about. I think that when I encounter decisions in non-EA-life that I am complexly clueless about, I let my personal gut feeling take over. This doesn't feel acceptable in EA situations because, well, EA is all about not letting personal gut feelings take over. So I guess this is my tentative answer to Greaves' question.

I have complex cluelessness about the effects of climate change on wild animals, which could dominate the effects on humans and farmed animals.

Belatedly:

I read the stakes here differently to you. I don't think folks thinking about cluelessness see it as substantially an exercise in developing a defeater to 'everything which isn't longtermism'. At least, that isn't my interest, and I think the literature has focused on AMF etc. more as a salient example to explore the concepts, rather than an important subject to apply them to.

The AMF discussions around cluelessness in the OP are intended as a toy example - if you like, deliberating purely on "is it good or bad to give to AMF versus this particular alternative?" instead of "Out of all options, should it be AMF?" Parallel to you, although I do think (per OP) AMF donations are net good, I also think (per the contours of your reply) it should be excluded as a promising candidate for the best thing to donate to: if what really matters is how the deep future goes, and the axes of these accessible at present are things like x-risk, interventions which are only tangentially related to these are so unlikely to be best they can be ruled out ~immediately.

So if that isn't a main motivation, what is? Perhaps something like this:

1) How to manage deep uncertainty over the long-run ramifications of one's decisions is a challenge across EA-land - particularly acute for longtermists, but also elsewhere: most would care about risks about how in the medium term a charitable intervention could prove counter-productive. In most cases, these mechanisms for something to 'backfire' are fairly trivial, but how seriously credible ones should be investigated is up for grabs.

Although "just be indifferent if it is hard to figure out" is a bad technique which finds little favour, I see a variety of mistakes in and around here. E.g.:

a) People not tracking when the ground of appeal for an intervention has changed. Although I don't see this with AMF, I do see this in and around animal advocacy. One crucial consideration around here is WAS, particularly an 'inverse logic of the larder' (see), such as "per area, a factory farm has a lower intensity of animal suffering than the environment it replaced".

Even if so, it wouldn't follow that the best thing to do would be to be as carnivorous as possible. There are also various lines of response. One is to say that the key objective of animal advocacy is to encourage greater concern about animal welfare, so that this can ramify through to benefits in the medium term. However, if this is the rationale, metrics of 'animal suffering averted per $' remain prominent despite having minimal relevance. If the aim of the game is attitude change, things like shelters and companion animals over changes in factory farmed welfare start looking a lot more credible again in virtue of their greater salience.

b) Early (or motivated) stopping across crucial considerations. There are a host of ramifications to population growth which point in both directions (e.g. climate change, economic output, increased meat consumption, larger aggregate welfare, etc.) Although very few folks rely on these when considering interventions like AMF (but cf.) they are often being relied upon by those suggesting interventions specifically targeted to fertility: enabling contraceptive access (e.g. more contraceptive access --> fewer births --> less of a poor meat eater problem), or reducing rates of abortion (e.g. less abortion --> more people with worthwhile lives --> greater total utility).

Discussions here are typically marred by proponents either completely ignoring considerations on the 'other side' of the population growth question, or giving very unequal time to them/sheltering behind uncertainty (e.g. "Considerations X, Y, and Z all tentatively support more population growth, admittedly there's A, B, C, but we do not cover those in the interests of time - yet, if we had, they probably would tentatively oppose more population growth").

2) Given my fairly deflationary OP, I don't think these problems are best described as cluelessness (versus attending to resilient uncertainty and VoI in fairly orthodox evaluation procedures). But although I think I'm right, I don't think I'm obviously right: if orthodox approaches struggle here, less orthodox ones with representors, incomparability or other features may be what should be used in decision-making (including when we should make decisions versus investigate further). If so, then this reasoning looks like a fairly distinct species which could warrant its own label.

This makes some sense to me, although if that's all we're talking about I'd prefer to use plain English since the concept is fairly common. I think this is not all other people are talking about though; see my discussion with MichaelStJules.

FWIW, I don't think 'risks' is quite the right word: sure, if we discover a risk which was so powerful and so tractable that we end up overwhelming the good done by our original intervention, that obviously matters. But the really important thing there, for me at least, is the fact that we apparently have a new and very powerful lever for impacting the world. As a result, I would care just as much about a benefit which in the medium term would end up being worth >>1x the original target good (e.g. "Give Directly reduces extinction risk by reducing poverty, a known cause of conflict"); the surprisingly-high magnitude of an incidental impact is what is really catching my attention, because it suggests there are much better ways to do good.

I think you were trying to draw a distinction, but FWIW this feels structurally similar to the 'AMF impact population growth/economic growth' argument to me, and I would give structurally the same response: once you truly believe a factory farm net reduced animal suffering via the wild environment it incidentally destroyed, there are presumably much more efficient ways to destroy wild environments. As a result, it appears we can benefit wild animals much more than farmed animals, and ending factory farming should disappear from your priority list, at least as an end goal (it may come back as an itself-incidental consequence of e.g. 'promoting as much concern for animal welfare as possible'). Is your point just that it does not in fact disappear from people's priority lists in this case? That I'm not well-placed to observe or comment on either way.

This I agree is a problem. I'm not sure if thinking in terms of cluelessness makes it better or worse; I've had a few conversations now where my interlocutor tries to avoid the challenge of cluelessness by presenting an intervention that supposedly has no complex cluelessness attached. So far, I've been unconvinced of every case and think said interlocutor is 'stopping early' and missing aspects of impact about which they are complexly clueless (often economic/population growth impacts, since it's actually quite hard to come up with an intervention that doesn't credibly impact one of those).

I guess I think part of encouraging people to continue thinking rather than stop involves getting people comfortable with the fact that there's a perfectly reasonable chance that what they end up doing backfires, everything has risk attached, and trying to entirely avoid such is both a fool's errand and a quick path to analysis paralysis. Currently, my impression is that cluelessness-as-used is pushing towards avoidance rather than acceptance, but the sample size is small and so this opinion is very weakly held. I would be more positive on people thinking about this if it seemed to help push them towards acceptance though.

Point taken. Given that, I hope this wasn't too much of a hijack, or at least was an interesting hijack. I think I misunderstood how literally you intended the statements I quoted and disagreed with in my original comment.

(Apologies in advance if I'm rehashing unhelpfully)

The usual cluelessness scenarios are more about the possibility that there may be a powerful lever for impacting the future, and your intended intervention may be pulling it in the wrong direction (rather than a 'confirmed discovery'). Say your expectation for the EV of GiveDirectly on conflict has a distribution with a mean of zero but an SD of 10x the magnitude of the benefits you had previously estimated. If it were (e.g.) +10, there's a natural response of 'shouldn't we try something which targets this on purpose?'; if it were 0, we wouldn't attend to it further; if it were -10, you wouldn't give to (now net EV = "-9") GiveDirectly.

The right response where all three scenarios are credible (plus all the intermediates) but you're unsure which one you're in isn't intuitively obvious (at least to me). Even if (like me) you're sympathetic to pretty doctrinaire standard EV accounts (i.e. you quantify this uncertainty + all the others and just 'run the numbers' and take the best EV) this approach seems to ignore this wide variance, which seems to be worthy of further attention.
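To make the arithmetic concrete, here is a minimal sketch of the three-scenario case. The units (direct benefit = 1), the equal weights, and all the names are my own illustrative assumptions; collapsing the distribution to three points also gives a somewhat smaller SD than the 10x mentioned above. It only shows that the expectation is unchanged by the indirect effect while the spread across scenarios is large.

```python
# Illustrative assumption: the directly estimated benefit of the donation is 1 unit.
DIRECT_BENEFIT = 1.0

# Hypothetical scenarios for the indirect effect (e.g. on conflict), in the same units.
# Equal weights are an assumption made purely for illustration.
scenarios = {"helps": 10.0, "neutral": 0.0, "backfires": -10.0}
weights = {name: 1.0 / len(scenarios) for name in scenarios}

# Net value in each scenario: direct benefit plus indirect effect.
net_by_scenario = {name: DIRECT_BENEFIT + effect for name, effect in scenarios.items()}

# Expected net value: the zero-mean indirect effect drops out.
expected_net = sum(weights[name] * net_by_scenario[name] for name in scenarios)

# Spread across scenarios, which the point estimate alone does not reveal.
variance = sum(
    weights[name] * (net_by_scenario[name] - expected_net) ** 2 for name in scenarios
)
spread_sd = variance ** 0.5

print(net_by_scenario)
print(expected_net, spread_sd)
```

The point estimate comes out the same as if the indirect effect did not exist, which is exactly why 'just run the numbers' can feel like it is ignoring something.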

The OP tries to reconcile this with the standard approach by saying this indeed often should be attended to, but under the guise of value of information rather than something 'extra' to orthodoxy. Even though we should still go with our best guess if we have to decide (so expectation neutral but high variance terms 'cancel out'), we might have the option to postpone our decision and improve our guesswork. Whether to take that option should be governed by how resilient our uncertainty is. If your central estimate of GiveDirectly and conflict would move on average by 2 units if you spent an hour thinking about it, that seems an hour well spent; if you thought you could spend a decade on it and remain where you are, going with the current best guess looks better.
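A rough sketch of how resilience feeds into value of information, under assumptions that are mine rather than the OP's: the choice is between the intervention and a baseline worth 0, the revised estimate after investigating is normally distributed around the current estimate with standard deviation `shift_sd` (a hypothetical parameter standing in for how much the estimate is expected to move), and the cost of investigating is ignored.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def value_of_information(current_estimate, shift_sd):
    """Expected gain from revising the estimate before choosing.

    Assumes (for illustration only) a baseline option worth 0 and a revised
    estimate distributed Normal(current_estimate, shift_sd).
    """
    # Deciding now: take the intervention only if its estimate beats the baseline.
    act_now = max(current_estimate, 0.0)
    if shift_sd == 0:
        # Fully resilient estimate: investigating cannot change the decision.
        return 0.0
    # Deciding later: E[max(revised_estimate, 0)] for a normal revised estimate.
    z = current_estimate / shift_sd
    decide_later = current_estimate * normal_cdf(z) + shift_sd * normal_pdf(z)
    return decide_later - act_now


# Non-resilient estimate (expected to move a lot) versus a resilient one.
print(value_of_information(1.0, 2.5))
print(value_of_information(1.0, 0.01))
```

Under these assumptions the gain from investigating shrinks towards zero as the estimate becomes more resilient, which is the sense in which 'go with the current best guess' falls out of the orthodox approach rather than needing anything extra.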

This can be put in plain(er) English (although familiar-to-EA jargon like 'EV' may remain). Yet there are reasons to be hesitant about the orthodox approach (even though I think the case in favour is ultimately stronger): besides the usual bullets, we would be kidding ourselves if we ever really had in our head an uncertainty distribution to arbitrary precision, and maybe our uncertainty isn't even remotely approximate to objects we manipulate in standard models of the same. Or (owed to Andreas) even if so, similar to how rule-consequentialism may be better than act-consequentialism, some other epistemic policy would get better results than applying the orthodox approach in these cases of deep uncertainty.

Insofar as folks are more sympathetic to this, they would not want to be deflationary and perhaps urge investment in new techniques/vocab to grapple with the problem. They may also think we don't have a good 'answer' yet of what to do in these situations, so may hesitate to give 'accept there's uncertainty but don't be paralysed by it' advice that you and I would. Maybe these issues are an open problem we should try and figure out better before pressing on.