Karma Police

[Epistemic confidence: 80%: I may offer specific bets at the bottom]

Summary

- 1 min video summary

- The Forum is a key community space

- Regular Forum users should have opportunity to know the most valuable EA information

- If they don’t, it is probably because someone didn’t write it or the Forum didn’t surface it

- I did some simple analysis and the Forum karma system does okay

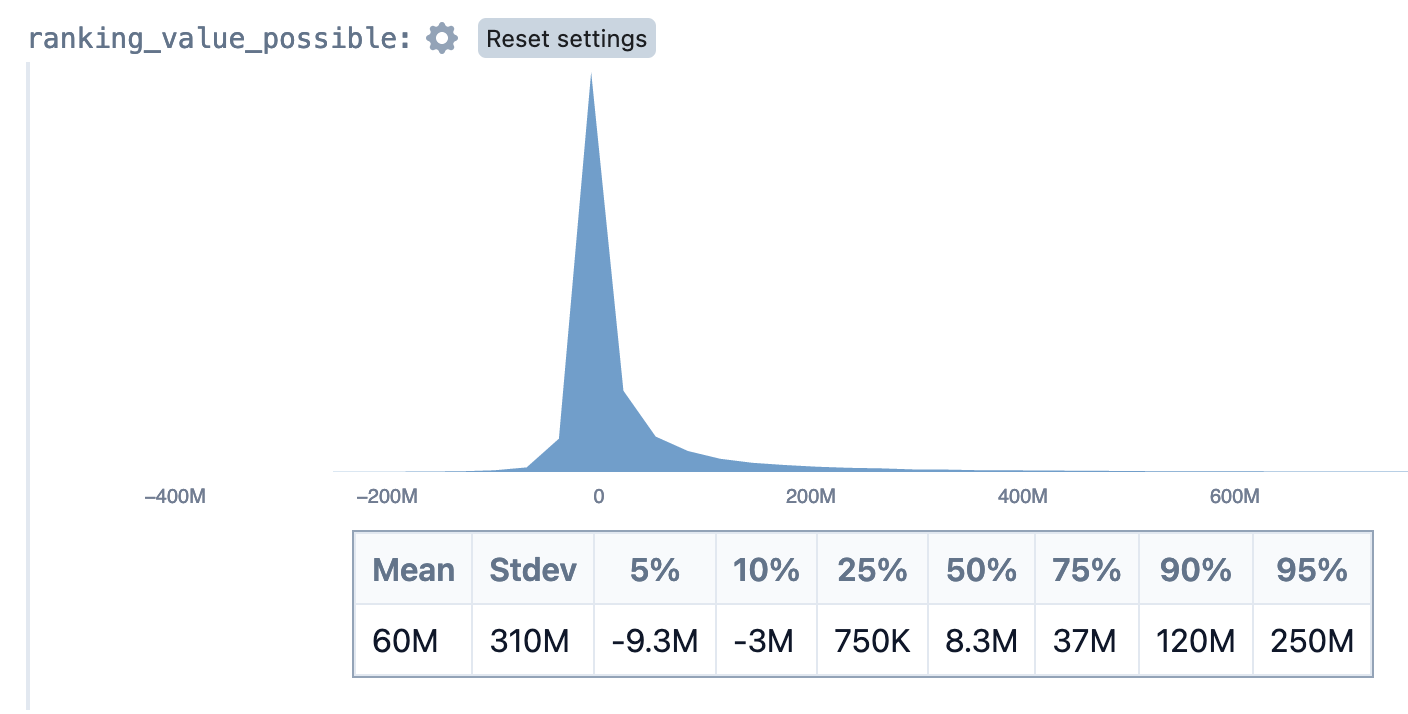

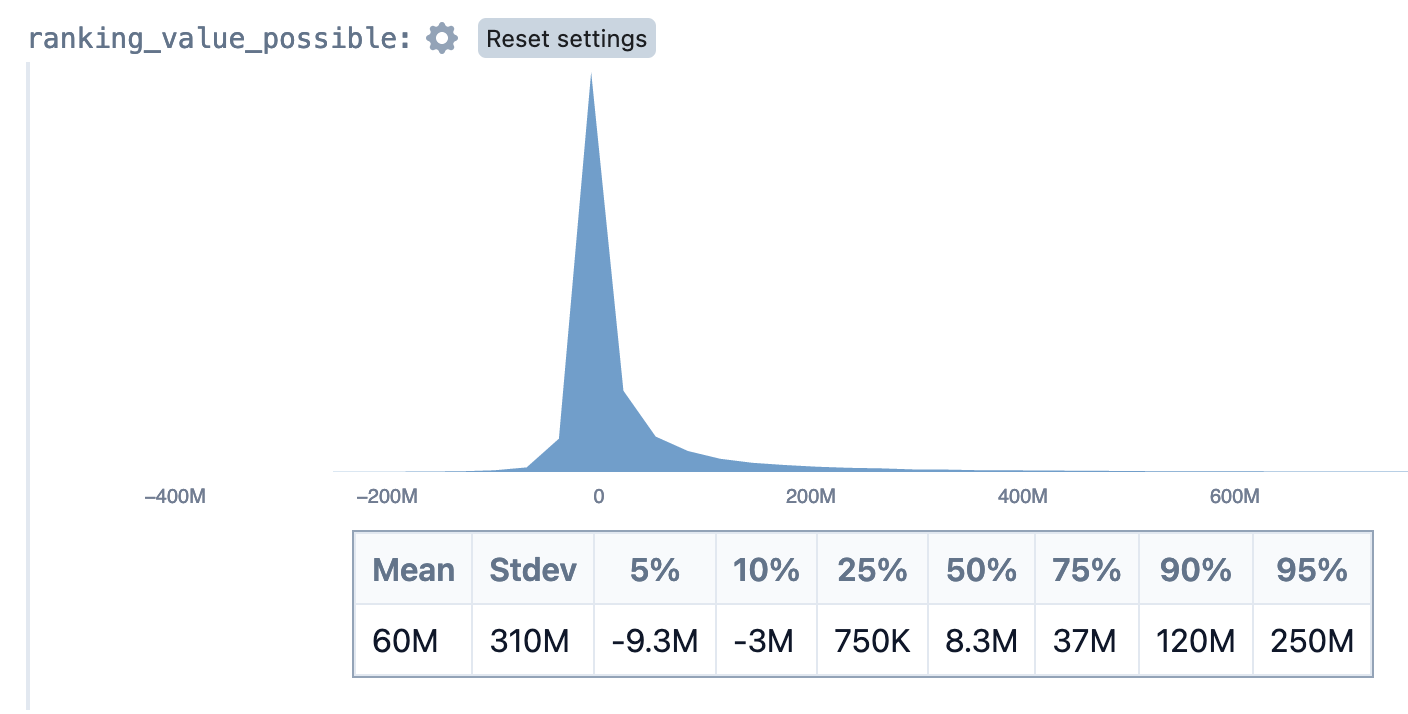

- Even with this, I estimate that changing the way the Forum ranks posts would create between -$9mn and $250mn of value (90% CI) (15 min video explanation here)

- We should test alternative ranking systems such as

- You choose whose karma you listen to

- Forecasting the result of the next Forum Review (watch this space)

- My sense is that the Forum team know this, but until I read something about it, I think surfacing the size of the problem is positive

The value of the EA Forum

The EA Forum is and should be a sufficient way to learn about EA. Those who scroll through the main feed should expect to find the most useful EA-related information and community discussions without having to look too hard. There are other ways that people learn about EA (for example, by reading foundational texts or listening to the 80,000 Hours podcast); but if you just read the top Forum posts, you ought to be up to speed with current discussions.

As such, the Forum is an important space that should be sorted and curated in a way that boosts high-value content. There might be other ways that we want to sort content — for example, by how much people enjoy it. But the most relevant ranking is ‘how much does this post contribute to making the world better?’ [1]

There is room for other rankings - we could rank posts by how funny they are. I’m happy for such a ranking to exist. But the primary goal of this community is to use resources to help the most and so the Forum should primarily rank things according to that metric. This has been discussed before, but I wanted to add some analysis and concrete suggestions[2].

Theory: The Forum ranks too much by consensus and not enough by value

My theory is as follows:

- Everyone gets to upvote or downvote posts

- Most of us are not experts in a given topic

- The posts which receive many upvotes are those that the median EA can understand and test

- They are likely to be about broad concepts like community, spending, giving or EAGs

- They are unlikely to be specific, valuable and hard to verify criticisms

- The Forum shows the incorrect balance of the two

- This leads to us creating and consuming less valuable content than we might otherwise

More broadly, I think we underrate how valuable it can be to lower the barriers to community education. When I was a Christian we would have called this “equipping” - ensuring that the church community is well prepared for the tasks it needs to do. Personally, I understand what I am to do in forecasting and Twitter spaces, but as an EA in general I understand my job/place/role less well, and I guess others feel the same. I think improving the Forum algorithm is low-hanging fruit (another example of this is the fact that there aren’t that many high-quality brief summaries of EA content - see this article). I discuss community roles more broadly in this article, What are we for?.

Before we test my theory, this is another possible response: Karma doesn’t measure quality and nor should it. Karma measures popularity, and that's fine actually, because the purpose of karma isn't to measure quality but to nudge people towards reading some posts and away from reading others. And random forum users will get more out of an accessible post than a niche or technical post, even a high-quality one.

My response is that the crux is whether we can do better at providing people with things that they themselves will look back on and consider to have been valuable. It’s not a case of everyone reading OpenPhil grants, but rather thinking about which key pieces of content are worth everyone’s time.[3]

Testing the theory

First, I think the Forum team knows much of what I’m going to say and probably either agrees or has good reasons to disagree. They are struggling to hire and developers are at a particular premium given our views on AI. I still think surfacing the magnitude of the problem is the correct call.

If the theory (that the ranking algorithm leaves lots of room for improvement) were wrong, we’d expect to see:

- High karma posts well-correlated with some agreed ranking of “the best posts”. We’re gonna use the Decade Review

- Few posts that are either very overrated or very underrated by karma

My work here is mediocre and may have errors. If you want to improve on it, I’m happy to include yours and split any prizes for this piece.

First, let’s look at the Decade Review. The Review took place about 6 months ago. The aim was for people to choose which content had been most valuable to them. Any Forum user could give posts from -9 to +9 review karma. Then posts were ranked according to (some function of) these scores. This is a good way to get a retrospective ranking, though it still likely undervalues posts which delivered huge value to small groups within EA.

If the Forum is good at ranking things, we should see the karma ranking and the Decade review ranking being similar.

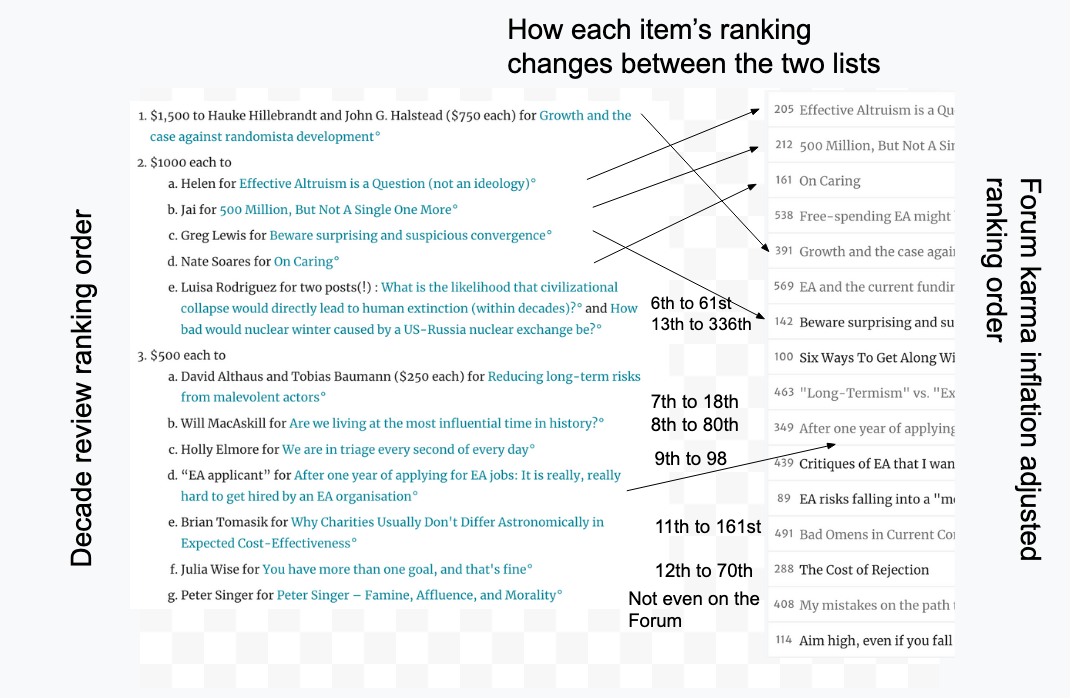

The column on the left shows top-ranked posts in the Decade Review. The column on the right shows the top-ranked posts on the Forum according to “Top (inflation-adjusted)”. Because the forum used to be smaller, older good posts have less karma. This was the Forum’s way of accounting for that. For that reason, the column on the right isn’t in strict numerical order. The arrows show where the top posts in the Decade Review fall in the Karma rankings.

The Decade Review has (at times) years of hindsight for us to think about which items were valuable. Karma has much less time for reflection. I think it does okay here.

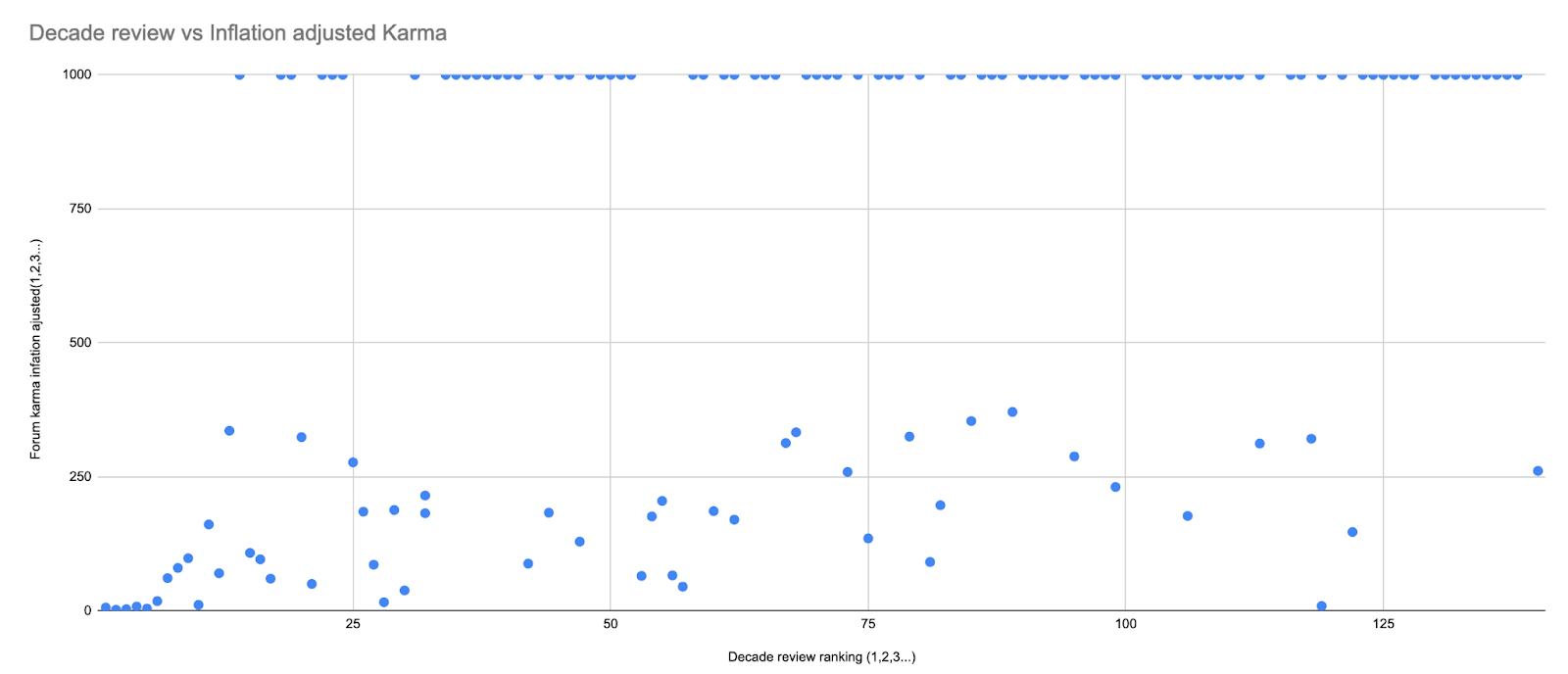

I also plotted the two rankings against one another. The x-axis is Decade Review rank, the y-axis is Top (inflation-adjusted) Forum karma rank.

I scraped the first 150 articles by Decade Review and the first 371 articles by karma. Any Decade Review article that wasn’t in the 371 top-rated Forum articles I gave a rank of 1000. If you want to improve on this, my data is here[4].

The correlation (CORREL in Google Sheets) here is 0.47, which I’m told is pretty good.
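The matching step described above can be sketched in Python. This is a minimal illustration rather than the spreadsheet actually used: the function names and the toy post IDs are made up, and only the penalty rank of 1000 and the use of a Pearson correlation (which is what CORREL computes) come from the text.

```python
import math

MISSING_RANK = 1000  # penalty rank for review posts absent from the scraped karma list


def karma_ranks(review_order, karma_order, missing_rank=MISSING_RANK):
    """For each post in review order, return its 1-based karma rank, or missing_rank."""
    position = {post: i + 1 for i, post in enumerate(karma_order)}
    return [position.get(post, missing_rank) for post in review_order]


def pearson(xs, ys):
    """Pearson correlation coefficient, the statistic CORREL returns."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Toy data only (hypothetical post IDs, not the scraped rankings).
review_order = ["a", "b", "c", "d", "e"]
karma_order = ["b", "a", "d", "x", "y"]  # "c" and "e" never appear here

review_ranks = list(range(1, len(review_order) + 1))
ranks = karma_ranks(review_order, karma_order)
print(ranks)  # [2, 1, 1000, 3, 1000]
print(round(pearson(review_ranks, ranks), 2))
```

Note that the size of the penalty rank is a free choice, and the resulting correlation will move if a different value is used, so it is worth trying a rank correlation or a different penalty before reading much into the number.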

On the other hand, over half of the entries in Decade Review didn’t show up in the first 371 articles by Karma.

What’s more, the Decade Review isn’t a perfect metric for which posts are important ex-post. There were a lot of articles to read and I’m sure that content got missed. Influential EA content such as Doing Good Better, The Precipice and popular 80,000 Hours podcasts were missing, which suggests that the aim of the Decade Review was not to decide ‘what is the most valuable EA content.’

This seems like a passing grade though I think it warrants more investigation.

An alternate criticism is that the Forum does not merely rank incorrectly, it doesn’t rank quantitatively. You only have two choices, upvote or strong upvote, but some posts are 1000x more valuable than others. How do we signal that and how does the Forum signal that back to us?[5][6][7]

Ranking affects incentives

Some of the top articles on the Forum were written by people as part of their jobs. But many weren’t. These people could have easily decided to just not write the articles that they did.

Let’s take a look at Nuño Sempere’s piece ‘A Critical Review of Open Philanthropy’s Bet On Criminal Justice Reform’. (Full disclosure, Nuño is my friend). I don’t claim that this piece was ranked badly. While it is Nuño’s job to work on estimation, he isn’t an expert on Criminal Justice Reform. The article gives an in-depth evaluation of Open Philanthropy’s $200m in criminal justice spending. If Nuño had been incentivised to write this piece two years earlier than he did, perhaps Open Philanthropy would have spent less on criminal justice—I would guess between $1 million and $30 million less. That would have been a pretty significant amount of impact achieved by writing a Forum post.

Other articles like this don’t even get the clout this one did. Take a look at Daniel_Eth’s ‘Some potential lessons from Carrick’s Congressional bid’, which explores the risks and potential pitfalls associated with EAs running for office. How much value are we losing by not incentivising people to a) write this kind of content and b) read it? If your response is that there is a better way to incentivise this kind of content, great, but here is a tractable option with good benefits compared to costs.

I made a squiggle model[8] to try and estimate the value of improving the forum.

The model gives a median benefit of $8mn in better allocated funding with a 5% chance of costing $9mn or more and a 5% chance of benefitting $250mn or more

Here is a link but I’ve also put the code here (and I explain it below).

//critical stuff

pc_to(a,b) = truncate(a to b,0,1)

//ranking improvement can close what % of distance between current and perfection

chance_ranked_correctly_before = pc_to(.4,.95) // for what it's worth ranking articles 40% might be really good

ranking_error = truncate(.1 to 1.1,0,2) //this needs to get smaller when better

timing_and_contribution_sensitivity = pc_to(.01, .5) //how sensitive are EAs writing articles in time to forum ranking? it might improve by 10% to 10x

//stuff that's the same before and after

criminal_justice_error_spending = 0 to 30000000 //let's imagine this is the upper bound for a non-expert article on the forum

chance_change_given_article = pc_to(.05, .5)

//there is some chance that the forum is ranking perfectly

//the article needs to be written in time

value_per_nonexpert_article(article_value_upper_bound, chance_article_written_in_time, chance_change_given_article) = 0 to truncateLeft(article_value_upper_bound * chance_change_given_article * chance_article_written_in_time,0.01)

// then the article needs to be written at all

number_of_EAs = 2000 to 20000

//how this affects percentages is non-trivial

percentages_improving_by_percent(percentage, change, sensitivity) = truncate(percentage + (1 - percentage) * change * sensitivity,0,1)

//before

percent_nonexpert_EAs_contribute_before = pc_to(.001, .02)

chance_article_written_in_time_before = pc_to(.01, .1)

value_per_nonexpert_article_before = value_per_nonexpert_article(criminal_justice_error_spending, chance_article_written_in_time_before, chance_change_given_article)

eA_nonexpert_value_before = number_of_EAs*percent_nonexpert_EAs_contribute_before*value_per_nonexpert_article_before

//after

percent_nonexpert_EAs_contribute_after = percentages_improving_by_percent(percent_nonexpert_EAs_contribute_before, ranking_error, timing_and_contribution_sensitivity)

chance_article_written_in_time_after = percentages_improving_by_percent(chance_article_written_in_time_before, ranking_error, timing_and_contribution_sensitivity)

value_per_nonexpert_article_after = value_per_nonexpert_article(criminal_justice_error_spending, chance_article_written_in_time_after, chance_change_given_article)

eA_nonexpert_value_after = number_of_EAs*percent_nonexpert_EAs_contribute_after*value_per_nonexpert_article_after

//value creation possible

ranking_value_possible = eA_nonexpert_value_after - eA_nonexpert_value_before

If you are interested I recommend this video explanation (15 min). Run the model in your browser.

When I give two values I'm giving a 90% confidence interval, e.g. 90% of the time I think the value is more than a and less than b. Put another way, there is a 5% chance that the value is below a and a 5% chance it is above b.
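To make the "a to b" convention concrete, here is a small Python sketch that turns a 90% interval into a distribution. It assumes the interval is read as the 5th and 95th percentiles of a lognormal, which is my understanding of how Squiggle treats `a to b` when both bounds are positive; the helper name `lognormal_from_90ci` is made up for this illustration.

```python
import math
import random
from statistics import NormalDist

Z95 = NormalDist().inv_cdf(0.95)  # about 1.645 standard deviations


def lognormal_from_90ci(low, high):
    """Return (mu, sigma) of a lognormal whose 5th/95th percentiles are low/high."""
    if not 0 < low < high:
        raise ValueError("bounds must satisfy 0 < low < high")
    mu = (math.log(low) + math.log(high)) / 2
    sigma = (math.log(high) - math.log(low)) / (2 * Z95)
    return mu, sigma


# Example using the "2000 to 20000" interval for number_of_EAs.
mu, sigma = lognormal_from_90ci(2000, 20000)
print(round(math.exp(mu)))  # median, the geometric mean of the two bounds

rng = random.Random(0)
samples = sorted(rng.lognormvariate(mu, sigma) for _ in range(100_000))
print(samples[5_000], samples[95_000])  # should land near 2000 and 20000
```

One consequence worth noticing is that the median sits at the geometric mean of the bounds (roughly 6,300 here), well below the midpoint, while the mean is pulled upward by the long right tail.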

Roughly, the model works like this:

- A great EA non-expert article provides value of somewhere between $0 and $30,000,000

- There are somewhere between 2000 and 20000 EAs

- Between .1% and 2% of EAs contribute outside their areas of expertise

- There is a 1% to 10% chance that they will contribute a really valuable article in time for a change to happen (pc_to(.01, .1) in the code).

- If 0 is random and 1 is perfection, the Forum ranking is currently somewhere between .4 and .95

- If we try and improve the ranking we might make it 10% worse or move it 90% closer to perfection

- The Forum ranking has somewhere between a 1% and a 50% effect on whether people write non-expert articles and whether they write them in time.
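The update step that the last two bullets feed into (`percentages_improving_by_percent` in the code) can be sketched with single point values instead of distributions. This is only an illustration: the input numbers are invented, and clamping to [0, 1] stands in for Squiggle's `truncate`, which acts on whole distributions rather than single values.

```python
def improve_fraction(fraction, change, sensitivity):
    """Close `change * sensitivity` of the gap between `fraction` and 1, clamped to [0, 1]."""
    updated = fraction + (1 - fraction) * change * sensitivity
    return min(1.0, max(0.0, updated))


# Invented point values: 1% of EAs contribute today, the ranking change closes
# half of the remaining gap, and contributors are 20% sensitive to ranking.
before = 0.01
after = improve_fraction(before, change=0.5, sensitivity=0.2)
print(round(after, 3))  # 0.109
```

Because the formula scales with the gap to 100% rather than with the starting fraction, small starting percentages can jump by a large multiple, which is one reason the model's output is so sensitive to the sensitivity parameter.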

All models are wrong, but some are useful. Ideally you will criticise my model and we can make it better.

Some comments:

- The 90% confidence interval is -9.2M to 250M. The median is 9.3M

- The 5th percentile is negative because changing the Forum’s ranking system might make it worse

- The 95th percentile might seem high, but if the community corrects a few key decisions each year, we could easily see that much value

- The model is very sensitive to the effects of karma on non-expert contributions. Perhaps you have different intuitions here, but for me, karma matters a lot!

- The downside risk shouldn’t make us give up here. I suggest we can do more work to become more confident of a value in this range.

- The model is about shifting money, because that was something that was easy for me to think about. That money might be in global health, or longtermist giving

Solutions

As I say above, I would prefer that one of these suggestions replaced karma as the central method of ranking; but if you want a more defensible view, imagine I’m arguing for alternative options.

Here are a few possible ranking methods, scored:

‘Core EA’ rankings

CEA denotes ~50 accounts as “core EAs” (elites) and allows you to look at the rankings from these accounts alone. Honestly, this seems like a perfectly serviceable solution to me. Some commenters told me to change the word “elites” because it had bad connotations. I cannot think of a more accurate word and I mean it positively.

- Ease: 10/10

- Close to ideal ranking: 7/10

Forecast the Decade Review (or if we do it more often):

Manifold Markets is building a system to forecast the results of EA contests (here is the Open Phil cause prioritisation contest) and then allow people to bet on them. They could easily do the same for the EA Forum review (ideally it wouldn't be a decade away[9]). We would then use the forecasts to rank the posts. This would be easy for the Forum to integrate (they just need to take a feed from the API) and would get very close to the Decade Review, which I’d argue is one of the best things we have.

- Ease 10/10

- Close to ideal ranking 9/10

Reduce community posts (the forum already does this)

The forum puts a negative modifier on all posts with the “community” tag. This was a good move but has bad incentives - people try to avoid receiving the community tag

- Ease: 10/10 (done)

- Close to ideal ranking 5/10

ML algorithm

Ben West found my draft and told me he’s excited about this. I am excited about it too. That said, I think it’s probably harder than several other methods here. And I'm not sure it will give a higher information feed. Feel free to disagree

- Ease: 0/10

- Close to ideal ranking 6.5/10

Create your own karma

Choose a set of accounts, only see karma generated by their upvotes and downvotes. This probably produces a better ranking, but seems like a lot of work.

- Ease: 2/10

- Close to ideal ranking: 8/10
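As a rough illustration of the "create your own karma" idea, the sketch below recomputes post scores from only the votes cast by accounts a reader has chosen. The vote tuples, account names and the function `personalised_karma` are all hypothetical; the Forum's real vote data and weighting are not described in this post.

```python
from collections import defaultdict


def personalised_karma(votes, trusted):
    """Sum vote weights per post, counting only votes cast by accounts in `trusted`."""
    totals = defaultdict(int)
    for voter, post, weight in votes:
        if voter in trusted:
            totals[post] += weight
    return dict(totals)


# Hypothetical votes as (voter, post, weight); negative weights are downvotes.
votes = [
    ("alice", "post-1", 2),
    ("bob", "post-1", 1),
    ("carol", "post-2", -1),
    ("alice", "post-2", 1),
    ("dave", "post-2", 5),
]

scores = personalised_karma(votes, trusted={"alice", "carol"})
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores)   # {'post-1': 2, 'post-2': 0}
print(ranking)  # ['post-1', 'post-2']
```

The filtering itself is simple; the harder part would likely be doing it per reader at feed-loading time, which may be where much of the implementation cost sits.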

"Most-followed" karma

As above, but with an additional step where the top 5% of accounts that people follow are denoted as "most-followed". This is like ‘create your own karma’, but now we can rank by some community consensus on who elites are. I think this will turn out pretty similar to CEA’s choice of elites, but at least I can pick my own elites.

- Ease 2/10

- Close to ideal ranking 8.5/10

Subforums

Again, I hear that some of the Forum team are investigating this one. I think my concern would be that it would fracture the community - I want to see what other people are working on. But maybe it would create higher fidelity chat in those areas. Probably net positive.

- Ease 0/10

- Close to ideal ranking 6 to 9/10

Impact markets

Everyone has said this, but the right way to do this is to allow people to purchase the impact from everything and then trade the certificates. This would be a huge pain to implement but if the value is high enough, it would be worth it. I sense that it is, but I’m not suggesting it here.

- Ease 0/10

- Close to ideal ranking 10/10

Potential criticisms of these options

‘I am unconvinced that elite choices or forecasting are a good way to predict value’

I argue here that elite choices are a good way to predict value. If you don’t think public markets to forecast value (with loans to incentivise long term betting) are a good way to forecast things, I’d be interested to hear why. If your reason is that this creates a high barrier to voting on posts, see below.

‘I am concerned that I will trust a voting system less if I understand it less’

Fair point. But while I think that these suggestions are better than the current karma system, and they should be the main systems, perhaps new users shouldn’t have them initially.

‘It’s expensive and difficult to implement’

I think the value we could generate from implementing these changes is worth the initial headache of fixing the current system. My model above assumes that the best Forum posts help reallocate 10s of millions of dollars. The value created here is at median $10 million a year. This is worth the expense.

Having worked with software developers, I can say that having an alternate ranking (alt button) seems like a task for more than half a PM and half a developer. This would cost about $200k a year.

‘This would be non-transparent and create barriers to voting’

If we cared about making this simple to understand, I think we could. Likewise this is one of the key EA community spaces (alongside the in-person community, 80k and Twitter). We should take finding ways to improve it seriously.

‘This could lead to some bad outcomes’

Please comment on what they are. If you can’t, then that argument could apply to anything. Likewise, a new system can first exist alongside the current one. This way, we’d get to see which system people prefer, and adjust for any unexpected effects.

Conclusion: let’s sort this out

We could relatively easily implement a ranking system of CEA’s top 50 EAs or a prediction market for each of the Yearly and Decade Reviews. We could see how it compared to the current karma system and then replace karma, remove the changes, or allow people to have some mix of both.

It seems to me that we are losing maybe $10 million a year from not ranking content properly, and I feel that we generally don’t talk about how great a loss this is. I get that it’s hard to hire developers. I get that it’s tiresome to overhaul the current system. But I want to make sure that we’re all on the same page about what we’re missing out on and what the solutions are. This seems pretty fixable if we’re willing to put the effort in.

I originally spoke this to Rob Long who transcribed it (a fun afternoon with a friend is to do this back and forth) then Rachel Frankwick and I wrote it up and Amber Ace copy-edited it. Then I added a load more spelling errors back in. It takes a village.

This post is part of a test I'm doing on works-in-progress.

Appendix: Post publication edits

Appendix: Bets about this article

Appendix: Broader thoughts

As we grow as a community, our systems for gathering what we consider to be the most important content are going to be more important. We want a way for segments of the community to surface their disagreement early in order that we can deal with it. Currently I think we don’t take the ranking of the forum (or its dissemination) nearly seriously enough.

Appendix: other problems with the forum as a repository

- The forum presents high barriers to comment, by not making it easy to write in the right style. I’d like writing an article to have a process it leads users through

- It isn’t easy to pull information from the forum. As I wrote here the forum should have a much wider range of email, podcast, RSS options

- It doesn’t contain everything. Every EA and EA adjacent book should have a link post.

- It has no methods of synthesis. As I wrote here there should be a way for the community to aggregate content. The wiki isn’t it because:

- It’s hard to find wiki pages from the front page

- The forum team want the wiki pages to be more like tag explainers than community summaries

[1] Do I need to spend more time on this?

[2] Also, I wish that I could combine my version with Arepo's. One day.

[3] I also think the ranking isn't quantitative and incentivises new things more than value creation.

[4] If you want a longer explanation, there are two rankings - one good one, one that we are testing. I got both sets and matched them. If something appears highly in the good ranking but not in the other ranking, that's a point against the other ranking. To try and show that, I pretended it had been given a very poor rank (1000th). Feel free to suggest better methods.

[5] I'd love suggestions for what a more quantitative solution might look like. Currently all I have is impact markets.

[6] It's frustrating that writing footnotes takes you out of context and that you can't search forum pages to link.

[7] There is room for a discussion of the types of post that I think are misranked but I couldn't think of how to do that.

[8] Squiggle is really easy to use. Go on, click it.

[9] Though even then Manifold have recently done some work on long term bets.

How are you getting the third estimate? I'd note that I think multiplying very small and large numbers together, as you do here, might lead to overestimation. And I think this might be the case, since you are estimating that a "valuable article" has a chance of being worth 30M

Incidentally, squiggle interprets 0 to 30000000 as a normal[1], so I think that your estimation would change (and become more accurate) if you use 1 to 30000000, or rather truncateRight(1 to 30000000, 100M). If you do so, you get much more reasonable estimates (mean is now 8M, and the chance of getting ~0 impact is 50%):

I think that a better bet might be to go through the top articles and see in which cases the article itself led to value being created, and using that historical frequency to arrive at an estimate.

This incident is probably bad enough that we should probably be throwing an error here. I added an issue here.

You're right and I will change.

I wish forum articles supported forks and pull requests. I'd definitely incorporate yours.

Quick thoughts on subforums:

I like your post and ideas!

Would love to have a version of this that works transitively, ie I choose a few accounts, and I see karma generated by them and by accounts they give karma to, recursively, with a decay factor. (Can think of it like google pagerank, except for accounts)
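A very reduced sketch of that transitive idea is below. It is not PageRank and not the EigenTrust.Net prototype: it is a plain breadth-first spread from the accounts you pick, where each extra hop multiplies the weight by an assumed `decay` factor. The graph, account names and parameters are all invented for illustration.

```python
def transitive_trust(seeds, endorsements, decay=0.5, max_depth=3):
    """Spread trust outward from seed accounts, multiplying by `decay` per hop.

    `endorsements` maps an account to the accounts it gives karma to.
    Each account keeps the weight from its shortest path to a seed.
    """
    trust = {account: 1.0 for account in seeds}
    frontier = dict(trust)
    for _ in range(max_depth):
        next_frontier = {}
        for account, weight in frontier.items():
            for endorsed in endorsements.get(account, []):
                if endorsed in trust:
                    continue  # already reached by a path that is at least as short
                candidate = weight * decay
                next_frontier[endorsed] = max(next_frontier.get(endorsed, 0.0), candidate)
        trust.update(next_frontier)
        frontier = next_frontier
    return trust


# Invented endorsement graph.
endorsements = {
    "alice": ["bob", "carol"],
    "bob": ["dave"],
    "carol": ["dave", "alice"],
}
weights = transitive_trust(["alice"], endorsements)
print(weights)  # {'alice': 1.0, 'bob': 0.5, 'carol': 0.5, 'dave': 0.25}
```

These weights could then multiply each account's votes when summing karma. A fuller version would presumably weight edges by how much karma was given and iterate to a fixed point, as PageRank does.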

Another version could work similarly to Polis:

There is already a team working on the transitive pagerank-like karma over at EigenTrust.Net, with a functional prototype using Discord reacts. We'd love to integrate with EA Forum / LW / Alignment Forum. Feel free to drop by our dev channel!

Already in it mate :P

I think this is pretty similar to the "ML solution" proposed.

Hi Nathan,

Thanks for writing this!

I only saw your post now, but I had made a draft for a question related to the karma system about 1.5 months ago. Feel free to have a look.

Interestingly, the estimates I got for the correlation coefficient between karma and impact based on this analysis from Nuño are pretty similar to the 0.47 you obtained for the relationship between the inflation-adjusted karma and ranking of the posts of the Decade Review.

Go look at this post https://forum.effectivealtruism.org/posts/X47rn28Xy5TRfGgSj/21-criticisms-of-ea-i-m-thinking-about

To be honest for a moment, I'd appreciate an explanation of why Peter's post will do much better than mine. Peter is a better writer with a track record of good observations. But I sense it would do better anyway.

Should we rank A much higher than B?

A:

B:

I guess it may seem sour or something, but it just genuinely seems that we want to incentivise more posts like mine compared to what we currently see, even though Peter's is more fun to read.

Wait, which of you has one central theory and one clear tractable solution?

If you want to make suggestions to this post, reply and I'll make you a coauthor and you can add them yourself.

Please upvote the ranking systems you like

Choose whose karma you see

Current karma system

Forecast results of the next Decade/Yearly review and rank by that