Prepared by James Fodor and Miles Tidmarsh

EAGxAustralia 2023 Committee

Abstract

EAGx conferences are an important component of the effective altruism community, and have proven a popular method for engaging EAs and spreading EA ideas around the world. However, to date relatively little publicly available empirical evidence has been collected regarding the long-term impact of such conferences on attendees. In this observational study we aimed to assess the extent to which EAGx conferences bring about change by altering EA attitudes or behaviours. To this end, we collected survey responses from attendees of the EAGxAustralia 2023 conference both before and six months after the conference, providing a measure of changes in EA-related attitudes and behaviours over this time. As a control, we also collected responses to the same survey questions from individuals on the EA Australia mailing list who did not attend the 2023 conference. Across the 20 numerical measures we collected, we did not find any statistically significant differences in the six-month changes across the two groups. Specifically, we are able to rule out effect sizes larger than about 20% for most measures. In general, we found self-reported EA attitudes and behaviours were remarkably consistent across most individuals over this time period. We provide a discussion of these results in the context of developing better measures of the impact of EAGx conferences, and conclude with some specific recommendations for future conference organisers.

Background

‘EAGx’ is the branding used by the Centre for Effective Altruism (CEA) for centrally-supported but independently-organised conferences held around the world each year. The aim of these events is to communicate EA ideas, foster community growth and participation, and facilitate the formation of beneficial connections for EA projects. EAGx conferences have been organised in Australia every year since 2016 (with a hiatus in 2020 and 2021 due to the COVID-19 pandemic), with the most recent event taking place in Melbourne in September 2023.

While EAGx conferences have proved popular with attendees, relatively little publicly available evidence has been collected regarding their impact or effectiveness. The main source of information can be found in the forum sequence by Ollie Base. Most conference retrospective reports give details about attendance and self-reported attendee satisfaction, but do not attempt to measure the impact of the conference in achieving any concrete goals. The limited range of publicly available evaluations is surprising given the importance of these events to the EA community, and has prompted comment on the EA Forum regarding the relative lack of evaluation of EA projects generally, and of EAGx conferences specifically.

For the past few years, the main form of EAGx evaluation has been a post-conference survey, along with a six-month follow-up, administered by CEA, in which attendees are asked to report the beneficial outcomes of the conference for them personally, including making new connections, starting new projects, or learning key information that informed major decisions. Of these, the number of new connections made is typically regarded as the most important, with the number of connections per dollar spent being used as a key metric by CEA in assessing effectiveness. These methods have a number of advantages, including ease of collection, ability to compare across locations and over time, and relative ease of interpretation.

A major limitation of these existing measures is that they require survey respondents to explicitly make value judgements about their experiences at the conference (e.g. ‘How many new connections did you make at this event?’ and ‘describe your most valuable experiences’). Such judgements may be liable to various biases, including experimenter demand effects and a belief-supportive bias among EAs who enjoyed the conference and are predisposed to believe such events are valuable. In addition, it is unclear to what extent such connections are maintained over time or actually result in valuable outcomes. Similarly, respondents are asked to make predictions about their future behaviour (e.g. ‘Which actions do you plan to take as a result of this event?’, and ‘what are your future plans for involvement in the EA community?’). Self-predicted future behaviour is often unreliable, and subject to cognitive biases such as optimism bias or the salience of a recent positive social interaction.

As a first step in developing additional evaluation methods, the organising committee of EAGxAustralia 2023 instigated a survey designed to measure EA-related behaviours and attitudes of conference attendees both before and six months after the conference. While still relying on self-reports, this methodology is designed to avoid explicit questions about experiences at the conference or predictions of future behaviour, instead relying entirely on reports of past behaviour and current attitudes. In addition, to provide a basis for comparison the survey also included a control group, consisting of respondents who had previous engagement with the EA community but did not attend the conference in 2023. By comparing the change in reported behaviours and attitudes between the treatment and control groups, the survey aimed to provide a measure of the impact of the conference on its attendees relative to non-attendees.

Methods

This study utilises a difference-in-differences design, in which we compare the change in self-reported attitudes and behaviours over a six-month followup period in the treatment group (conference attendees) to the change in the same measures in a control group of non-attendees. The control group was intended to match the treatment group in terms of overall levels of EA engagement at the initial measurement period (i.e. before the conference), while consisting of people who did not attend the EAGxAustralia 2023 conference.
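To make the estimator concrete, here is a minimal Python sketch of a difference-in-differences comparison on per-respondent change scores. The report does not state which statistical test was used, so the Welch-style standard error and normal-approximation p-value below are assumptions, and `did_estimate` is a hypothetical helper, not the authors' code.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def did_estimate(treat_before, treat_after, ctrl_before, ctrl_after):
    """Difference in differences using per-respondent change scores.

    Within each group, the before/after lists must be aligned so that
    index i refers to the same respondent.
    Returns (estimate, standard_error, approximate two-sided p-value).
    """
    treat_change = [a - b for b, a in zip(treat_before, treat_after)]
    ctrl_change = [a - b for b, a in zip(ctrl_before, ctrl_after)]

    # DiD = mean change in treatment group minus mean change in control group
    estimate = mean(treat_change) - mean(ctrl_change)

    # Welch-style standard error for two independent groups of change scores
    se = sqrt(variance(treat_change) / len(treat_change)
              + variance(ctrl_change) / len(ctrl_change))

    # Normal approximation to the two-sided p-value; a t-test would be
    # somewhat more conservative at sample sizes of around 40 per group
    z = estimate / se
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return estimate, se, p


# Hypothetical toy scores, not study data
est, se, p = did_estimate([1, 2, 3, 4], [2, 4, 3, 6], [2, 2, 3, 3], [2, 3, 3, 2])
print(est, round(se, 3), round(p, 3))
```

Working on change scores means each respondent serves as their own baseline, so stable between-person differences (such as the baseline gaps reported in the Results) cancel out of the estimate.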

To measure EA attitudes and behaviours, we prepared a survey of twenty-one questions designed to cover the various types of impact the conference aimed to bring about. With the exception of question 5, all were numerical or multiple-choice questions. The questions are presented and explained in the following tables.

The first two questions relate to engagement with the EA community. It was hypothesised that exposure to the conference would help attendees make friends and connections in the EA community, and also learn more about their local groups, both of which may lead to increased attendance at events. Greater attendance at EA events may be a proxy for greater engagement in EA ideas and community. The third question measured donations to EA causes, which was hypothesised to increase following the conference owing to a combination of increased knowledge of EA causes and increased motivation to donate. The fourth question asked respondents to self-report the importance of EA ideas to their life, which was hypothesised to increase following positive and affirming experiences at the EA conference. Question five was the only long answer question, asking respondents to report their current plans for improving the world. It was hypothesised that attendance at the conference would lead to changes in these plans at a higher rate than among non-attendees.

Table 1. Questions 1-5 of the survey.

| Question Number | Short Name | Full Question Text | Response Scale | Type |
|---|---|---|---|---|
| 1 | EA events | Approximately how many events organised by EA groups did you attend in the past six months? | Count | Community |
| 2 | EA friends | Approximately, how many people in the EA community do you know well enough to ask a favour? | Count | Community |
| 3 | Donations | How much money did you donate to EA causes or organisations in the past six months? | Australian dollars (AUD) | Donations |
| 4 | EA importance | Overall, how important are the principles of effective altruism to how you live your life? Choose the option that best matches your perspective. | 5-point Likert scale | Attitude |
| 5 | Current plans | Describe in a few sentences your current plans for improving the world. You can be general or specific. It's up to you! | Long answer text | Plans |

Questions 6-13 relate to attitudes about the relative importance of EA cause areas. The purpose was not to test for any particular type or direction of change, only to assess whether attendance at talks or networking at the EAGx conference led to higher rates of attitude change about EA causes than for non-attendees who did not have these experiences. Note that no restrictions were placed on how many cause areas each respondent identified as ‘top priority’.

Table 2. Questions 6-13 of the survey.

| Question Number | Full Question Text | Cause area | Response Scale |
|---|---|---|---|
| 6 | In your opinion, how much of a priority should each of the following causes be given in the effective altruism movement? | Global poverty | 0 (not a priority / insufficiently familiar); 1 (low priority); 2 (moderate priority); 3 (high priority); 4 (top priority) |
| 7 | (as for Q6) | AI risk | (as for Q6) |
| 8 | (as for Q6) | Climate change | (as for Q6) |
| 9 | (as for Q6) | Cause prioritisation | (as for Q6) |
| 10 | (as for Q6) | Animal welfare | (as for Q6) |
| 11 | (as for Q6) | Movement building | (as for Q6) |
| 12 | (as for Q6) | Biosecurity | (as for Q6) |
| 13 | (as for Q6) | Nuclear security | (as for Q6) |

The final set of questions, numbered 14-21, all relate to the frequency of various EA behaviours. The hypothesis was that attendance at the conference would increase motivation and opportunity for engaging in these behaviours, and so lead to an increase in reported frequency relative to non-attendees.

Table 3. Questions 14-21 of the survey.

| Question Number | Full Question Text | Activity | Response Scale |
|---|---|---|---|
| 14 | When was the last time you did each of the following? Select the option that best reflects roughly the correct period of time. | Read an article on the EA Forum | 0 (never); 1 (more than six months); 2 (between six and one month); 3 (between a week and a month); 4 (between a week and a day); 5 (one day or less) |
| 15 | (as for Q14) | Participated in an online EA space (Slack, Twitter, Facebook, etc.) | (as for Q14) |
| 16 | (as for Q14) | Listened to an EA podcast | (as for Q14) |
| 17 | (as for Q14) | Read an EA book | (as for Q14) |
| 18 | (as for Q14) | Shared EA ideas with someone new | (as for Q14) |
| 19 | (as for Q14) | Volunteered for an EA cause or organisation | (as for Q14) |
| 20 | (as for Q14) | Applied for an EA grant | (as for Q14) |
| 21 | (as for Q14) | Applied to work at an EA organisation | (as for Q14) |

The survey was prepared as a Google Form and sent via email to potential respondents. The treatment group was sourced from all successful conference applicants (approximately 300), who were invited to complete the survey in the weeks prior to the conference in September 2023. The cut-off for completion was the Friday night of the conference weekend (i.e. the day before the conference began on Saturday), ensuring that no responses were influenced by any experiences at the conference itself. The control group was sourced from the EAGx Mailchimp email list, along with posts on EA Facebook groups, personal invitations, and emails to those who were accepted to the EAGxAustralia 2023 conference but did not attend. These responses were collected slightly later than those of the treatment group, during October and November 2023.

Followup was conducted approximately six months later, from March to May 2024. All respondents were sent an initial email, followed by two reminder emails at roughly weekly intervals, and finally a personalised email or Facebook message. These multiple followup efforts resulted in a dropout rate of around 42% for both treatment and control groups, a positive result given that the target was no more than 50% dropout. Respondents were incentivised with a novel sticker (designed especially for this purpose), mailed after they completed the followup survey, as well as a directed donation of $50 AUD to an effective charity of their choice. The monetary cost of the survey was approximately $4000 AUD, in addition to roughly 100 hours of labour spent designing, administering, and analysing the survey data.

The numbers of respondents in each group are summarised in the table below. Response rates are based on the number of attendees at the conference for the treatment group, and on an estimate of the reach of email and Facebook outreach for the control group.

Table 4. Comparison of treatment and control groups.

| Group | Completed initial survey | Completed followup survey | Initial response rate | Final response rate | Drop-out rate |
|---|---|---|---|---|---|
| Treatment | 65 | 38 | 23% | 14% | 41.5% |
| Control | 69 | 40 | ~5% | ~3% | 42.0% |
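As a quick arithmetic check, the drop-out rates in Table 4 follow directly from the respondent counts. The helper name `dropout_rate` is illustrative only.

```python
def dropout_rate(n_initial: int, n_followup: int) -> float:
    """Share of initial respondents who did not complete the followup."""
    return (n_initial - n_followup) / n_initial


# Respondent counts from Table 4: (completed initial, completed followup)
groups = {"Treatment": (65, 38), "Control": (69, 40)}
for name, (n_initial, n_followup) in groups.items():
    print(f"{name}: {dropout_rate(n_initial, n_followup):.1%}")
# Treatment: 41.5%
# Control: 42.0%
```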

During preprocessing of responses, we observed that three respondents in the control group had completed the followup survey twice; in these cases the second (final) set of responses was used for analysis. One participant in the control group recorded 200 friends in the initial survey and 100 in the followup. Since this variation is likely due to inaccurate estimation rather than a genuine change, we replaced both values with the control group's followup mean of 5.2. In a few cases where respondents did not answer certain questions in the followup, we also excluded their initial responses to those questions for consistency. Finally, one respondent in the control group mentioned that they donate only once per year, so we excluded their donation responses from the analysis to avoid a spurious appearance of change caused by the shift in the six-month reporting window. We also did not find any consistent or interpretable pattern in the long answer responses, and so do not discuss these further.
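The deduplication and pairing rules described above could be sketched as follows. This is a minimal illustration assuming responses are stored as Python dicts with hypothetical keys such as `respondent_id` and `timestamp`; the report does not describe the authors' actual processing pipeline.

```python
def latest_submission_per_respondent(rows):
    """Return a dict mapping respondent_id to that respondent's final submission.

    `rows` is a list of dicts with hypothetical keys 'respondent_id' and
    'timestamp'; later submissions overwrite earlier ones.
    """
    latest = {}
    for row in sorted(rows, key=lambda r: r["timestamp"]):
        latest[row["respondent_id"]] = row
    return latest


def paired_values(before, after, question):
    """Aligned (before, after) answers to one question.

    `before` and `after` map respondent_id -> dict of answers. A respondent
    is dropped for this question if either answer is missing (None), so an
    unanswered followup question also removes the matching initial answer.
    """
    pairs = []
    for rid, after_row in after.items():
        before_row = before.get(rid)
        if before_row is None:
            continue  # no initial survey for this respondent
        b, a = before_row.get(question), after_row.get(question)
        if b is not None and a is not None:
            pairs.append((b, a))
    return pairs
```

Dropping pairs per question (rather than dropping a respondent entirely) keeps as much data as possible while ensuring every change score compares like with like.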

Results

Conference attendees differ from non-attendees

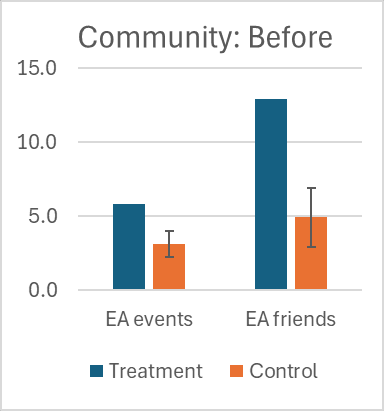

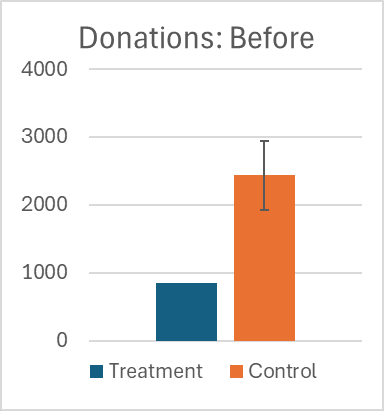

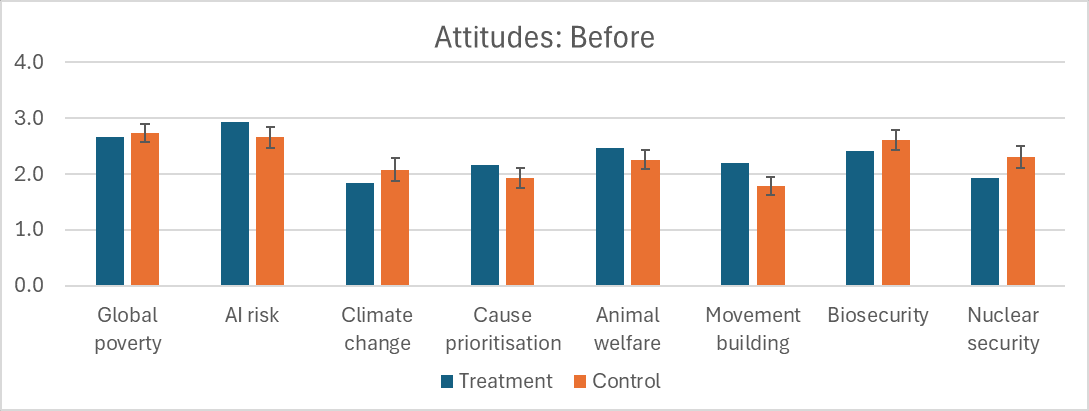

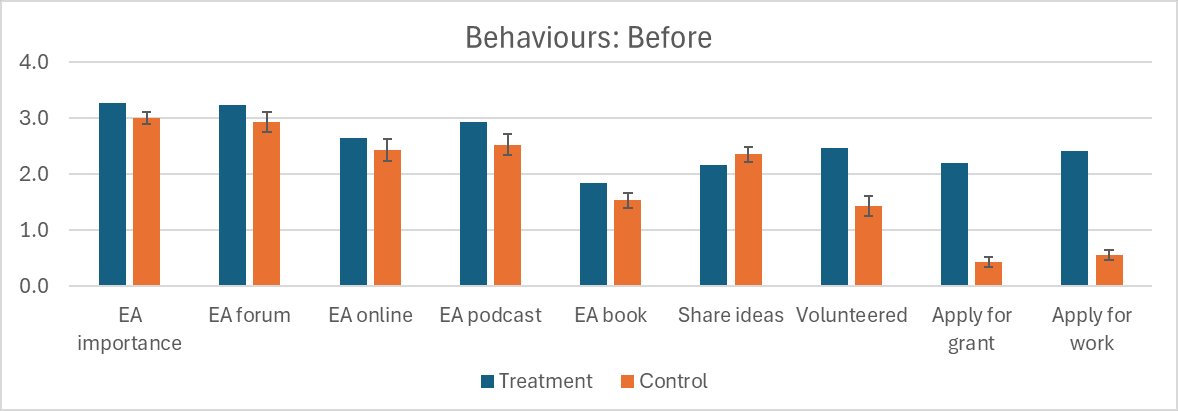

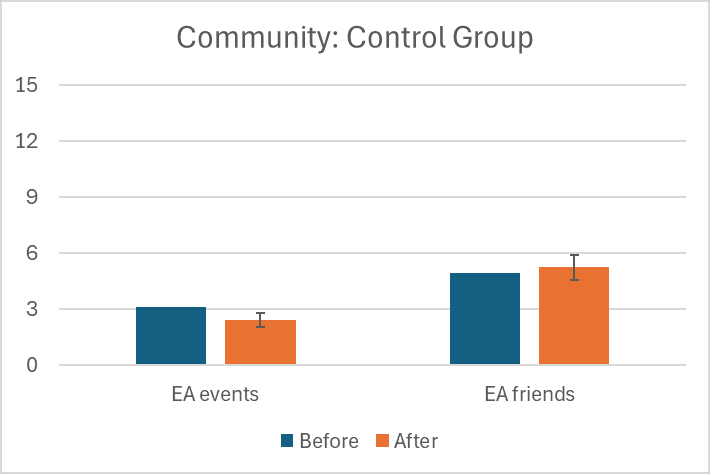

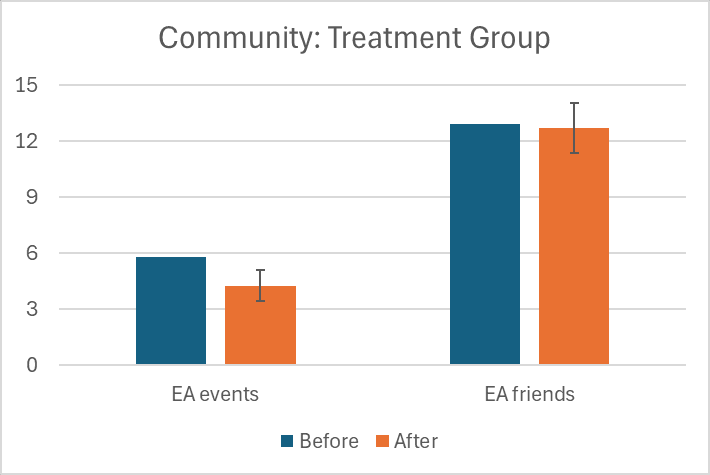

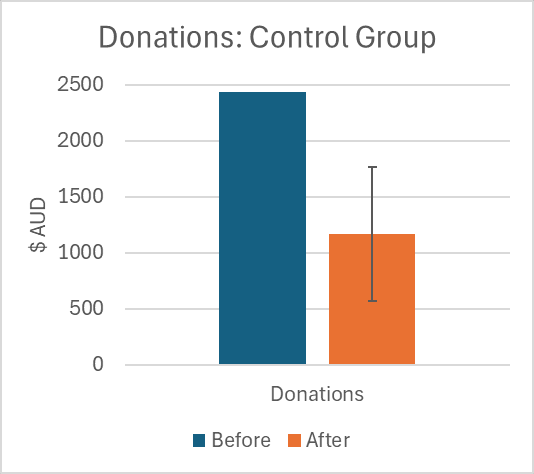

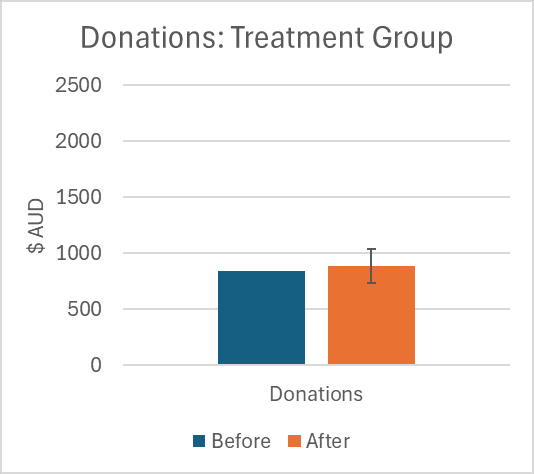

In the data from the initial collection period (‘before’), the treatment and control groups showed several significant differences. Those in the treatment group attended significantly more EA events (5.7 compared to 3.1, p=0.003), had more EA friends (12.9 compared to 4.9, p<0.001), and donated less money ($839 compared to $2430, p=0.002). Participants in the treatment group also reported engaging in significantly more volunteering (2.46 compared to 1.43, p<0.001), applying for grants (2.19 compared to 0.43, p<0.001), and applying for work (2.41 compared to 0.55, p<0.001). There were also small differences in the self-reported importance of EA ideas (3.27 treatment compared to 3.00 control, p=0.016), and in the priority accorded to movement-building (2.19 compared to 1.78, p=0.013). These differences likely reflect demographic differences between the two groups, which we consider further in the discussion. The first set of figures below shows the differences in the ‘before’ condition between the treatment and control groups. Error bars show the pooled standard error of the mean for the two groups.

Figures 1-4. Comparison of treatment and control groups (before).

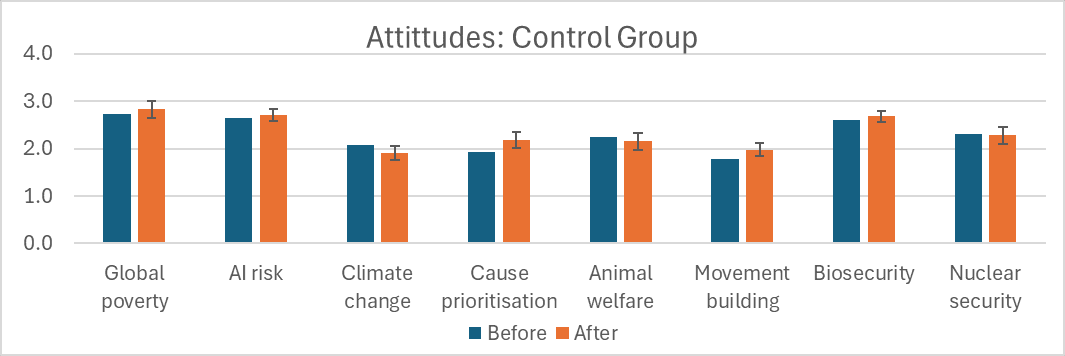

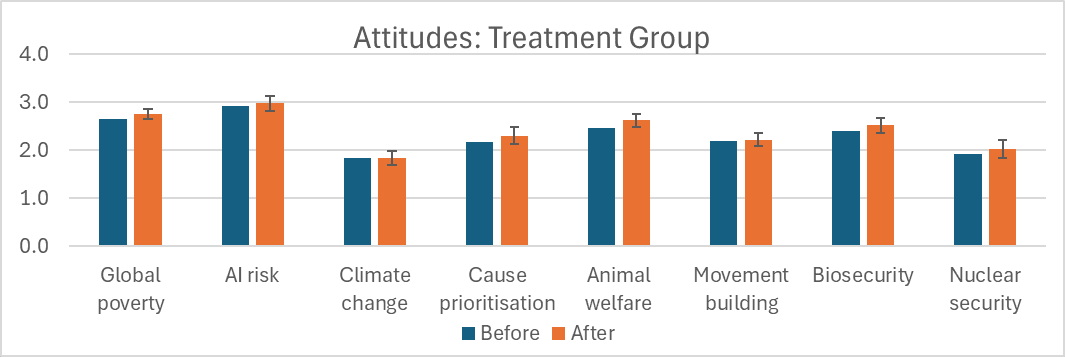

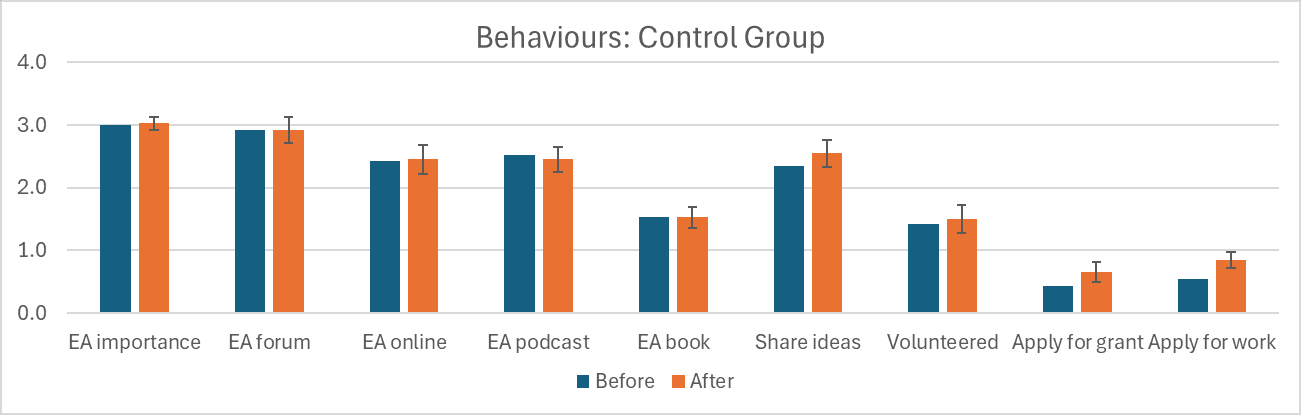

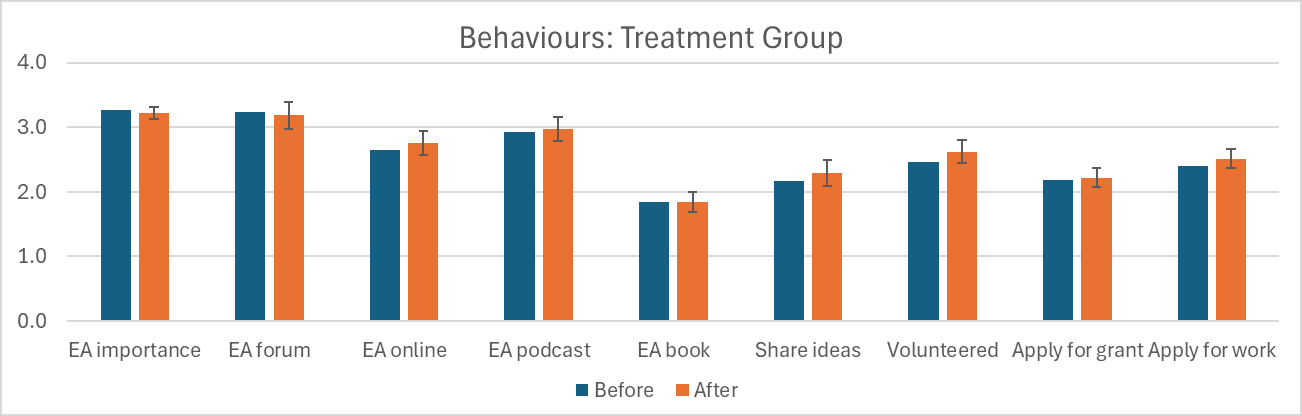

Results from both the ‘before’ and ‘after’ surveys for the treatment and control groups are shown in the figures below. In all cases, error bars on the ‘after’ group show 95% confidence intervals for the difference between the ‘before’ and ‘after’ time periods.

Figures 5-12. Comparison of treatment and control groups (after).

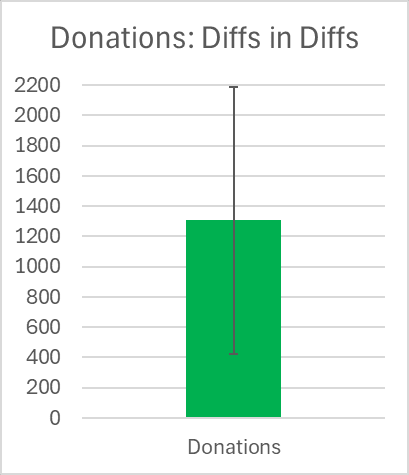

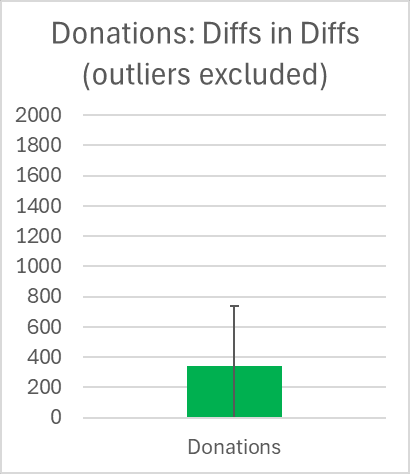

EA attitudes and behaviours are highly stable over time

Considering now the difference-in-differences analysis, in which we compare the changes in the treatment group to the changes in the control group, the only significant result was a change in donations. In the treatment group, donations showed a small, non-significant increase of $43, while in the control group donations changed by -$1263 (i.e. a reduction in donations). Although this difference in differences of $1306 was highly significant (p=0.004), we suspect the result is spurious owing to the high positive skew of the donations data. In particular, most of the effect disappears if the three largest donors in the control group are removed. Additionally, it seems implausible that non-attendees would reduce their donations by half, yet would have maintained their donations had they attended. We are therefore reluctant to attribute much significance to this result.
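The donation figures above combine as a simple difference of group mean changes. The second helper sketches one way to run the sensitivity check described, recomputing a group's mean change after dropping its k largest donors. Ranking donors by their ‘before’ donation is an assumption, since the report does not say how ‘largest’ was defined, and both function names are hypothetical.

```python
from statistics import mean


def did_from_mean_changes(treat_change: float, ctrl_change: float) -> float:
    """Difference in differences from each group's mean change."""
    return treat_change - ctrl_change


def mean_change_without_top_k(before, after, k):
    """Mean change after dropping the k respondents with the largest 'before'
    values (assumed here to identify the largest donors)."""
    order = sorted(range(len(before)), key=lambda i: before[i], reverse=True)
    kept = order[k:]
    return mean(after[i] - before[i] for i in kept)


# Mean changes in AUD reported in the Results
print(did_from_mean_changes(43, -1263))  # 1306
```

Because a single large annual donor can move a group mean by hundreds of dollars in a sample of about 40, a leave-k-out check like this is a cheap way to see whether an effect rests on a few observations.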

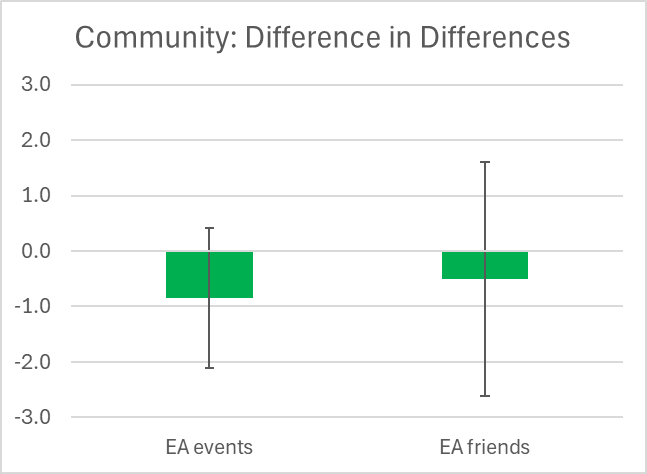

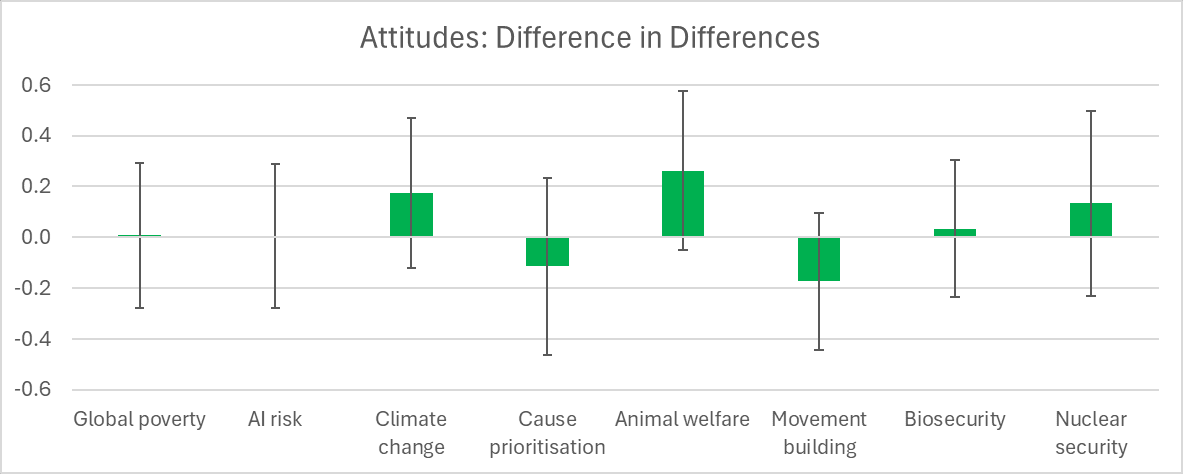

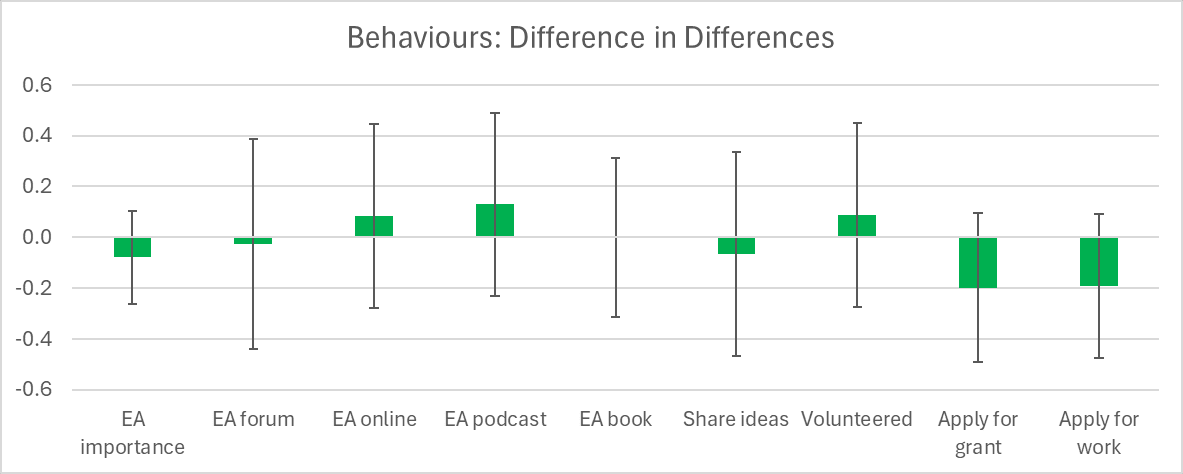

For all other variables, no significant differences in differences were observed, with all p-values above 0.1. As shown in the figures above, this is largely because both the treatment and control groups showed very little change between the ‘before’ and ‘after’ surveys. The figures below show the difference in differences, computed as the treatment group's before-to-after change minus the control group's before-to-after change. Error bars show 95% confidence intervals for this difference in differences.

Figures 13-17. Difference in differences analysis.

Discussion

This six-month longitudinal survey of EAGx attendees and a control group of non-attendees found two major results. First, attendees and non-attendees differ significantly in several important aspects of EA behaviour and attitude. Second, these attitudes and behaviours are highly stable over time, and show no evidence of significant change in either group during the six months following the conference. In this section we consider these results and provide additional commentary on how they may be of value to the EA community at large.

One fairly natural explanation of the differences between the treatment and control groups is that the control group comprises an older demographic with more professionals, whereas the treatment group has a larger proportion of young students. Professionals typically have higher incomes, which would account for their higher donations, while also having less spare time for attending EA events and making friends. This would also explain the lower levels of volunteering, applying for grants, and applying for work in the control group compared to the treatment group. Unfortunately the survey did not include demographic questions such as age or gender, so we cannot directly test for age differences between the groups. We elected to exclude demographic questions to keep the survey as short as possible, and because we had no specific hypotheses about the relevance of such variables to the effectiveness of the conference, though in retrospect simple questions about age and gender would likely have been useful. Another possibility is that some EA event or activity around the time of the conference influenced the views of the control group, though this seems relatively implausible.

The statistical significance and internal coherence of these initial differences between the treatment and control groups yield two key lessons. First, the results provide a partial validation of the study design, showing that it is possible to detect meaningful and interpretable differences in EA behaviours and attitudes even with a fairly small sample size (n=78). This provides evidence that the survey design was capable of detecting impacts of the conference on attendees if these impacts existed. Second, the results indicate that the audience of EAGx conferences is likely a non-representative subset of the wider EA community, with an overrepresentation of younger people and students. This may indicate scope for greater efforts to attract older professionals to EAGx conferences, or perhaps shows that such people are less likely to see existing conferences as relevant to their interests.

The major finding of the present study is that, besides the (likely spurious) difference in donations, there were no significant changes in EA behaviours or attitudes following the conference in either the treatment or control groups. These results are inconsistent with the hypothesis that EAGx conferences elicit substantial and sustained changes in EA attitudes or behaviour through social encouragement, networking, or other forms of learning. They are also inconsistent with an alternative hypothesis that EAGx conferences help to maintain existing EA connections, knowledge, and motivation, which otherwise tend to diminish over time without conference attendance. In fact, it appears that EA attitudes and behaviours are very robust over time, with no significant changes observed at the six-month followup.

As noted, the only exception was the decline in donations in the control group. We suspect this was largely driven by the wording of the question, which asked only about donations during the past six months. As the initial survey was completed around September while the followup was completed around March, this meant that any respondents who donate annually near the end of the financial year (end of June in Australia) would be recorded as making large donations in the initial survey and no donations in the followup. One respondent explicitly noted this in their extended response, and we suspect this was also relevant for two other large donors who reported zero donations in the followup survey (and perhaps to other donors). Removing these observations does not eliminate the donation difference entirely, but reduces it to only $341 (p=0.09). As such, we regard this result as owing to the survey design rather than any genuine tendency for those who did not attend the conference to decrease their donations over time. Future surveys should avoid this limitation by rewording the question, for example by explicitly asking for annual donors to answer with half their annual donation.
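The timing problem can be illustrated by checking whether a six-month look-back window contains 30 June, the end of the Australian financial year. This is a minimal sketch with illustrative survey dates chosen from the collection periods given in the Methods; the function names are hypothetical, and the day of the month is clamped to 28 for simplicity.

```python
from datetime import date


def six_months_before(d: date) -> date:
    """Date six calendar months earlier; the day is clamped to 28 so the
    result always exists (a simplification)."""
    month, year = d.month - 6, d.year
    if month <= 0:
        month += 12
        year -= 1
    return date(year, month, min(d.day, 28))


def window_contains_eofy(survey_date: date) -> bool:
    """True if the six months before `survey_date` include 30 June, the end
    of the Australian financial year."""
    start = six_months_before(survey_date)
    return any(start <= date(y, 6, 30) <= survey_date
               for y in range(start.year, survey_date.year + 1))


print(window_contains_eofy(date(2023, 10, 15)))  # initial-survey period: True
print(window_contains_eofy(date(2024, 4, 15)))   # followup period: False
```

Any survey answered between September and November captures a June donation, while any survey answered between March and May cannot, which is why annual donors would appear to stop donating.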

One obvious limitation of the present study is that the followup results for the treatment group represent only about 14% of conference attendees, and we have no data on how representative respondents are relative to all attendees. Owing to the time and effort required to fill in the survey, it is likely that respondents were more highly engaged with EA than non-respondents, though it is unclear how this would be expected to affect interpretation of our results. On the one hand, less-engaged attendees may have had larger changes in attitudes or behaviours from attending the conference owing to starting at a lower baseline of engagement. On the other hand, less-engaged attendees may have been less strongly affected by the conference compared to those more engaged in the community.

If true EA engagement is highly volatile, then respondents (who are likely to be those who happened to be highly engaged when the initial survey went out) would on average be more engaged in the initial survey than at followup, producing an apparent decline in engagement. However, this effect would be stronger in the control group (whose response rate was much lower), making EAGx conferences appear more effective than they really are. High volatility of this kind would also be at odds with the survey's finding that engagement appears stable.
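The mechanism in the preceding paragraph is a form of regression to the mean under selective response. The toy simulation below assumes, purely for illustration, that each person has a stable true engagement level plus independent noise at each survey, and that only the most engaged fraction (by initial score) respond. It is not fitted to the study data; it only shows that a lower response rate produces a larger apparent decline among respondents.

```python
import random
from statistics import mean


def mean_change_among_respondents(response_rate, n=20000, noise_sd=1.0, seed=0):
    """Toy model of selective response under volatile engagement.

    Each person has a stable true engagement level (standard normal) plus
    independent noise at each survey. Only the top `response_rate` fraction
    by initial score respond. Returns the respondents' mean change
    (followup minus initial).
    """
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        true_level = rng.gauss(0, 1)
        initial = true_level + rng.gauss(0, noise_sd)
        followup = true_level + rng.gauss(0, noise_sd)
        people.append((initial, followup))
    people.sort(key=lambda p: p[0], reverse=True)
    respondents = people[: int(response_rate * n)]
    return mean(f - i for i, f in respondents)


# Response rates roughly matching Table 4 (treatment ~23%, control ~5%)
for rate in (0.23, 0.05):
    print(rate, round(mean_change_among_respondents(rate), 2))
```

Setting `noise_sd` close to zero (engagement that is stable over time) makes both declines vanish, which is the sense in which the observed stability argues against this concern.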

The results of this survey are in tension with anecdotal reports and CEA analyses, both of which indicate that EAGx conferences have sizable effects on attendees. Partly this reflects differences in methodology - instead of directly asking respondents to report how the conference impacted them, we sought to measure such effects indirectly by comparing the differences in self-reported measures of EA attitudes and behaviours before and after conference attendance. Given that most attendees enjoy EAGx conferences and are likely to believe they have a positive effect on attendees, directly asking attendees is likely to overestimate impact. Conversely, our method is likely to underestimate impact, as by design it only measures impact using pre-specified narrowly defined metrics, which are unlikely to capture the full range of effects that conferences have on attendees (though it would be surprising if all measured questions show null effects while most unmeasured effects were positive and substantial). As such, we believe that both methods should be used in tandem to provide a more holistic and richer picture of the impact of EAGx conferences.

Another major challenge in evaluating the effectiveness of EAGx conferences is that a large portion of the impact may be concentrated in a small subset of attendees. It is plausible that most conference attendees experience few long-term effects, while a small minority are led to major life or career changes by connections made or motivation gained at the conference. If, for example, the latter group comprised only 10% of conference attendees, then the roughly 14% of attendees who completed both the initial and followup surveys would include only a handful of such people, making it difficult to detect any statistically significant effect. At the same time, we think it is important that such hypothetical possibilities do not detract from the fact that our results provide no evidence of a significant effect of conference attendance on EA attitudes or behaviours for most attendees.
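To put ‘only a handful’ in numbers, the sketch below assumes that the 38 treatment respondents who completed both surveys (Table 4) are a random sample of attendees, and that a hypothetical 10% of attendees experience large effects. Both assumptions are illustrative.

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


n_respondents = 38    # treatment respondents completing both surveys (Table 4)
p_high_impact = 0.10  # hypothetical share of attendees with large effects

expected = n_respondents * p_high_impact
p_at_most_2 = sum(binomial_pmf(k, n_respondents, p_high_impact) for k in range(3))
print(f"expected high-impact respondents: {expected:.1f}")
print(f"P(at most 2 in sample): {p_at_most_2:.2f}")
```

With an expected count of under four, even a very large effect in those individuals would be averaged with roughly 34 near-zero changes, which a group-level mean comparison is poorly placed to detect.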

Recommendations

Based on our results, we make the following recommendations to people organising and evaluating large EA conferences:

- Seek opportunities for new methods of measurement and evaluation, such as using a similar longitudinal survey design with treatment and control groups.

- Consider spending more time and budget on evaluation. The total cost of roughly $10,000 AUD to run this evaluation represents a small fraction of the budget of most EAGx conferences. Given that CEA spends several million dollars on such conferences every year, it would be useful to report the proportion invested in evaluation and the expected value of the information it provides. Many sources (see here for example) report a guideline of about 10% of total program costs spent on evaluation.

- Consider independent evaluation. In addition to evaluation by CEA and conference organisers, it may be valuable to commission a third party to design and/or carry out an evaluation of the effectiveness of EAGx conferences. The EA Community Survey could perhaps serve as a model for this.

- Consider additional efforts to incentivise survey completion. One method would be to incorporate the initial survey into the conference registration process, and then provide a partial rebate to those who complete the followup. This would introduce additional administrative overhead, but could substantially increase the response rate.

- Focus on identifying and targeting those most likely to experience large benefits from attending the conference. Better methods of identifying and directing resources to this small subset of attendees may deliver disproportionate impact.

- Develop ways to combine types of measures. For instance, information could be collected regarding self-assessed sources of impact or plans made soon after the conference, and then a followup survey could evaluate whether these plans are carried out, or whether the same aspects of the conference are regarded as impactful.

- Consider the use of randomisation. The EAGxAustralia conference is too small to randomly allocate tickets, but this is not necessarily true of all EAGx or EAG conferences. A simple design could involve a sample of around sixty potential attendees at the borderline of being accepted to the conference, which is then randomly divided into two, with half attending and the other half not attending the conference. Both groups would be asked to complete a six-month followup and could be paid for their participation as an incentive. This method would ameliorate most of the methodological limitations of the present study.
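A minimal sketch of the random allocation step, assuming a list of around sixty borderline applicant identifiers (hypothetical placeholders here). Using a fixed seed makes the allocation reproducible and auditable.

```python
import random


def randomise_borderline(applicant_ids, seed=None):
    """Randomly split borderline applicants into two arms of (near-)equal size.

    Returns (offered_ticket, control). A fixed seed makes the draw reproducible.
    """
    ids = list(applicant_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


# Hypothetical placeholder IDs for ~60 borderline applicants
applicants = [f"applicant_{i:02d}" for i in range(60)]
attend, control = randomise_borderline(applicants, seed=2023)
print(len(attend), len(control))  # 30 30
```

Because the two arms are drawn from the same borderline pool, they should be comparable at baseline in expectation, avoiding the demographic mismatch observed between the treatment and control groups in this study.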

I admire the boldness of publishing a serious evaluation which shows a common EA intervention to have no significant effect (with all the caveats, of course).

As someone on the team running the intervention, I strongly agree with this!

Replying in full now!

Thanks once again for conducting this study and taking the time to write up the results so clearly. As Guy Raveh says, it takes courage to share your work publicly at all, let alone work that runs contrary to popular opinion on a forum full of people who make a living out of critiquing documents. The whole CEA events team really appreciates it!

Firstly, I want to concede and acknowledge that we haven’t done a great job at sharing how we measure and evaluate the impact of our programmes lately. There’s no complicated reason behind this; running large conferences around the world is very time-consuming, and analysing the impact of events is very difficult! That said, we’ve made more capacity on the team for this, and increasing our capacity for this work is a top priority. As a result, there’s been a lot more impact evaluation going on behind the scenes this year. We hope to share that work soon.

This null result is useful to us; while changes in behaviours and attitudes aren’t a key outcome we’re aiming for with EAGx events (more on this below), it is something we often allude to or claim as something that happens as a result of our events. This study is a data point against that, and that might mean we should redirect some efforts towards generating other kinds of outcomes. Thanks!

However, I don’t expect we’ll update our theory of change because of this study, which I don’t think will come as a surprise to you.

This was mentioned in the comments, and you acknowledge this too, but this survey is very likely underpowered, and I’d probably want to see a much larger sample size before reaching any firm conclusions.

Secondly, many of the behaviours and attitudes you ask about, particularly things like attending further events and engaging more in online EA spaces, aren’t the primary things we aim to influence via EAGx events. We’re typically aiming for concrete plan changes, such as finding new impactful roles, opportunities, and collaborators, and we think a lot of the value of events often comes from just a few cases.

You write:

This is our current best guess at what’s happening and what we target with our evaluations, but I don’t expect a study like this to pick up on this effect. I like your recommendation to find even more ways to actually identify these impacts!

That said, donating more to EA causes and creating more connections in the community are outcomes that we aim for, so it’s interesting that you didn’t see a long-term effect on these questions (though note the point about the survey being underpowered).

Thanks for writing up the recommendations. As mentioned, we’ve been investing more time in measuring our impact and hope to share some thoughts here soon. This post updated me a bit towards trying something more longitudinal too.

Thanks again!

To add some more context to this, my colleague @Willem Sleegers put together a simple online tool for looking at a power analysis for this study. The parameters can be edited to look at different scenarios (e.g. larger sample sizes or different effect sizes).

This suggests that power for mean differences of around 0.2 (which is roughly what they seemed to observe for the behaviour/attitude questions) with a sample of 40 and 38 in the control and treatment group respectively would be 22.8%. A sample size of around 200 in each would be needed for 80% power.[1] Fortunately, that seems like it might be within the realm of feasibility for some future study of EA events, so I'd be enthusiastic to see something like that happen.
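For readers without access to the linked tool, a rough normal-approximation version of the calculation is sketched below. It is not the tool's method: it ignores the within-subjects correlation and uses a z-test rather than a t-test, and the standardised effect d = 0.28 (mean difference divided by the SD of change scores) is an assumption not stated in the comment. Under that assumption the approximation gives power of a little under 25% for groups of 40 and 38, and about 200 per group for 80% power, broadly in line with the figures quoted.

```python
from math import ceil, sqrt
from statistics import NormalDist

_Z = NormalDist()  # standard normal


def power_two_sample(d, n1, n2, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test for a standardised
    mean difference d."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    delta = d / sqrt(1 / n1 + 1 / n2)
    return _Z.cdf(delta - z_crit) + _Z.cdf(-delta - z_crit)


def n_per_group(d, power=0.8, alpha=0.05):
    """Approximate sample size per group for equal-sized groups."""
    z_a = _Z.inv_cdf(1 - alpha / 2)
    z_b = _Z.inv_cdf(power)
    return ceil(2 * (z_a + z_b) ** 2 / d**2)


d = 0.28  # assumed standardised effect; not stated in the comment
print(round(power_two_sample(d, 40, 38), 3))
print(n_per_group(d))
```

The required sample size scales with 1/d², so halving the assumed effect would roughly quadruple the number of respondents needed.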

It's also worth considering what these effect sizes mean in context. For example, a mean difference of 0.2 could reflect around 20% of respondents moving up one level on the scales used (e.g. from taking an EA action "between a week and a month" ago to taking one "between a week and a day" ago, which seems like it could represent a practically significant increase in EA engagement, though YMMV as to what is practically significant). As such, we also included a simple tool for people to look at what mean difference is implied by different distributions of responses.
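The mapping from a distribution of responses to a mean difference is simple arithmetic. Here is a minimal sketch with a hypothetical two-level distribution, in which 20% of respondents move up one level.

```python
def scale_mean(distribution):
    """Mean score for a dict mapping scale level -> share of respondents."""
    total = sum(distribution.values())
    return sum(level * share for level, share in distribution.items()) / total


before = {3: 0.5, 4: 0.5}  # hypothetical: half at level 3, half at level 4
after = {3: 0.3, 4: 0.7}   # 20% of respondents move from level 3 up to 4
print(round(scale_mean(after) - scale_mean(before), 3))  # 0.2
```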

It's important to note that this isn't an exact assessment of the power for their analyses, since we don't know the exact details of their analyses or their data (e.g. the correlation between the within-subjects components). But the provided tool is relatively easily adapted for different scenarios.

[1] This does not take into account any attempts to correct for differential attrition or for differing characteristics of the control and intervention groups, which may further reduce power. It is also the power for a single test; power may be further reduced if running multiple tests.

(Disclosure: I was an attendee at EAGx Australia in 2022 and 2023. I believe I am one of the data points in the Treatment group described.)

Thanks again for running this well-designed survey, which I know has taken a great deal of effort. The results do surprise me a little, and I notice that part of my motivation for writing this response is 'I feel like the conferences are really valuable so I want to add alternate explanations that would support that belief.' That said, I feel like some of my interpretations of this data might be of interest or add value to the conversation, so here goes.

The main thing that stands out to me in my interpretation of this data is that I think most EAs probably have an 'EA ceiling'. By that I mean, there's some maximum amount of engagement that each person is capable of, dependent on their circumstances. I think there may actually be two distinct cohorts of people who are representative of ceiling effects in the data.

The first cohort ('personal ceiling') are people who are doing everything they can, given their other goals and circumstances. I can't increase my donations if I'm really struggling financially (and I think it's important to acknowledge that Australia is in a major housing and cost-of-living crisis right now, which certainly affects my capacity to donate). I can't attend more events if I'm a single parent, or doing shift work on a rigid schedule, or living in a regional town with no active EA community. These people are at a personal ceiling.

The second cohort ('logical ceiling') are people who basically already run their entire lives around EA principles (and I met several at EAGx). They've taken the 10% pledge, they work at EA orgs, they are vegan, they attend every EA event they reasonably can, they volunteer, they are active online, etc. It's hard to imagine how people this committed could meaningfully increase their engagement with EA.

Given that attending EAGx requires a significant personal commitment of time and resources, it seems fairly obvious to me that conference attendees would be self-selected for BOTH 'has free time and resources to attend the conference' AND 'higher EA engagement in comparison to people on the mailing list who didn't attend the conference'. I think this is confirmed by the data: conference attendees had more EA friends and higher event attendance both before and after the conference. We should also consider that the survey response rate for non-conference-attendees was low, and the people who completed the survey are probably more engaged with EA than the average person on the mailing list. I think it would be really interesting to try to determine what percentage of respondents in each group are at either a personal or logical ceiling, and whether these 'ceiling participants' differ from other EAs in terms of the stability of their commitment and level of engagement over time. To resort to metaphor, it takes a lot more energy to keep a pot boiling than simmering, and it seems at least plausible to me that a large part of the value of EAGx is helping a relatively small group of extremely engaged people maintain their motivation, focus and commitment, and build new collaborations.

As an experimentalist (I'm a molecular biologist), the 'obvious' hypothesis test is one that was proposed in the OP: randomise would-be EAGx attendees into treatment and control groups, and then only let half of them attend. However, I think that using people at the borderline of being accepted or rejected as the basis for such a randomisation study would risk skewing the data. Specifically, I think it's likely that everyone at a logical ceiling and most people at a personal ceiling would be an 'automatic accept' for EAGx and at no risk of being considered 'borderline admits'. Therefore, the experimentally optimal way to run this would be to finalise the list of acceptances with 30 more acceptances than there are conference places, and then exclude 30 people totally at random. Unfortunately, there's significant downside risk to such an approach. It's likely that conference organisers, volunteers and speakers would be among those excluded, which would be disruptive to the conference and would likely reduce the value that other participants would get. I think it's also important to consider that missing out on attending EAG or EAGx is a massive bummer; people have written before about feelings of unimportance or inadequacy as a result of conference rejection pushing them away from further participation in EA, and we should take this into account if considering running experiments that would involve arbitrarily declining qualified applications. (Edited to add: the experience of people who applied but weren't selected for an EAGx, either because of a study or because they didn't make the cut, is likely very different from the experience of EAs if no EAGx was held. FOMO/resentment for having personally missed out when others went is not similar to 'oh, I hope there will be a conference next year' or [crickets].)

I do also think that the metrics used in this study but not in 'typical' EAGx impact surveys are missing a lot of dimensions via which EAs have impact, especially those which are most relevant to people at a logical ceiling who are already working or volunteering within EA orgs for multiple hours a week. Metrics like 'did you read books and forum posts, did you go to meetups, did you make friends' are great for measuring engagement with EA ideas and community, but not great for measuring outputs like 'Alex and Tsai had some great chats and have formed a technical collaboration' or 'Kate talked to Jess about her research and is now doing a PhD in her lab' or 'Kai inspired Josh to get professional mental health treatment and he's now able to spend another 10 hours a week on effective work'. On this basis, I completely agree with the original post that we need to combine BOTH self-reports of effectiveness based on subjective measures like meaningful connections or feeling motivated, AND objective measures of behaviour change. I wonder whether it would be possible to incorporate more metrics that would 'split the difference' in a way, while still relying on self-reports of past behaviour (which is important for all the reasons discussed in OP). For instance, could we ask at a 6-month follow-up, 'how many people that you met at EAGx have you interacted with in the past month'. Or, we could ask people to nominate specific actions they intended to take immediately after EAGx (with a control group of non-attendees) and then follow up 6 months later to ask them which of those actions they have actually taken. This design would be scalable to people with different levels of both engagement and ceiling-ness: a busy professional might commit to reading Scout Mindset and going to at least one meetup, while a student working to build an EA career might commit to applying for EAG, following up with 2 new connections and writing a forum post.

This is longer than I intended it to be, and I hope it doesn't come across as critical - I think this is very important work, and that we should always be open to considering that beloved interventions are less effective than we would like for them to be. I hope this is a useful addition to the discussion, at any rate. And thanks James for all the work you put into EAGx Australia!

I think 'engagement' can be a misleading way to think about this: you can be fully engaged, but still increase your impact by changing how you spend your efforts.

Thinking back over my personal experience, three years ago I think I would probably be counted in this "fully engaged" cohort: I was donating 50%, writing publicly about EA, co-hosting our local EA group, had volunteered for EA organizations and at EA conferences, and was pretty active on the EA Forum. But since then I've switched careers from earning to give to direct work in biosecurity and am now leading a team at the NAO. I think my impact is significantly higher now (ex: I would likely reject an offer to resume earning to give at 5x my previous donation level), but the change here isn't that I'm putting more of my time into EA-motivated work, but instead that (prompted by discussion with other EAs, and downstream from EA cause prioritization work) my EA-motivated work time is going into doing different things.

Yeah, I think this is an excellent point that you have made more clearly than I did: we are measuring engagement as a proxy for effectiveness. It might be a decent proxy for something like 'probability of future effectiveness' when considering young students in particular - if an intervention meaningfully increases the likelihood that some well-meaning undergrads make EA friends and read books and come to events, then I have at least moderate confidence that it also increases impact because some of those people will go on to make more impactful choices through their greater engagement with EA ideas. But I don't think it's a good proxy for the amount of impact being made by 'people who basically run their whole lives around EA ideas already.' It's hard to imagine how these people could increase their ENGAGEMENT with EA (they've read all the books, they RUN the events, they're friends with most people in the community, etc etc) but there are many ways they could increase their IMPACT, which may well be facilitated/prompted by EAGx but not captured by the data.

Out of curiosity, would you say that since switching careers, your engagement measured by these kind of metrics (books read, events attended, number of EA friends, frequency of forum activity, etc) has gone up, gone down, or stayed the same?

I think it's up, but a lot of that is pretty confounded by other things going on in the community. For example, my five most-upvoted EA Forum posts are since switching careers, but several are about controversial community issues, and a lot of the recency effect goes away when looking at inflation-adjusted voting. I did attend EAG in 2023 for the first time since 2016, though, which was driven by wanting to talk to people about biosecurity.

I wonder if the Australian geographical context enhances the proportion of attendees who are at/near their ceiling. Most people in North America and Europe who are at/near ceiling will have a much wider range of conferences they can attend without significant travel time/expenses. They are less likely to attend EAGx given the marginal returns (and somewhat increasing costs) of attending a bunch of conferences. On the other hand, someone near/at ceiling in Australia (or another location far from most more selective conferences) may choose to attend EAGx Australia in large part because it is much more accessible to them.

This is a really good point actually! I have never attended either an EAG conference, or EAGx on another continent, so I don't really have a frame of reference for how they generally compare. In Australia, EAGx is THE annual conference, and most of us put decently high priority on showing up if we can.

Thanks for the feedback Laura, I think the point about ceiling effects is really interesting. If we care about increasing the mean participation then that shouldn't affect the conclusions (since it would be useless for people already at the ceiling), but if (as you suggest) the value is mostly coming from a handful of people maintaining/growing their engagement and networks then our method wouldn't detect that. Detecting effects like that is hard and while it's good practice to be skeptical of unobservable explanations, it doesn't seem that implausible.

One option might be to systematically look at the histories of people who are working in high-impact jobs and joined EA after ~2015, and trace through interviews with them and their friends whether we think they'd have ended up somewhere equally impactful if not for attending EAGs. But that would necessarily involve huge assumptions about how impactful EAGs are already, so it may not add much information.

I agree that randomizing almost-accepted people would be statistically great but not informative about the impacts of non-marginal people, and randomly excluding highly-qualified people would be too costly in my opinion. We specifically reached out to people who were accepted but didn't attend for various reasons (which should be a good comparison point) but there's nowhere near enough of them for EAGxAus to get statistical results. If this was done for all EAG(x)'s for a few years we might actually get a great control group though!

We did consider having more questions and aiming more directly at the factors that are most indicative of direct impact, but we decided on this compromise for two reasons. First, every extra question reduces the response rate. Given the 40% drop-out and a small sample size, I'd be reluctant to add too much. Second, questions that take time and thought for people to answer are especially likely to lead to drop-outs and inaccurate responses.

That said, leaving a text box for 'what EA connections and opportunities have you found in the last 6 months?' could be very powerful, though quantifying the results would require a lot of interpretation.

I feel like if it would give high quality answers about how valuable such events are, it would be well worth the cost of random exclusion.

But this one feels more like "one to consider doing when you're otherwise quite happy with the study design", or something? And willing to invest more in follow-up or incentives to reduce drop-out rates.

Thanks for the response, I really like hearing about other people's reasoning re: study design! I agree that randomly excluding highly qualified people would be too costly, and I think building a control group from accepted-cancelled EAGx attendees across multiple conferences is a great idea. I guess my only issue with it is that these people are likely still experiencing the FOMO (they wanted to go but couldn't). If we are considering a counterfactual scenario where the resources currently used to organise EAGx conferences are spent on something else, there's no conference to miss out on, so it removes a layer of experience related to 'damn, I wish I could have gone to that'.

I'm not familiar enough with survey design to comment on the risk of adding more questions reducing the response rate. If you think it would be a big issue, that's good enough for me - and also I imagine it would further skew the survey respondents towards more-engaged rather than less-engaged people. I do think that for the purpose of this survey, it would make more sense to prompt the EAGx attendees to answer whether they had followed up on any connections / ideas / opportunities from EAGx in the last 6 months. I'm not sure how to word that so that the same survey/questions could be used for both groups though.

Thanks for doing all this analysis, very interesting. Did you ask if people had ever attended an EAGx or EAG before? (I was in the control group but can’t remember whether I was asked this or not). For me personally, I’m pretty confident my first EAGx made the most counterfactual difference in continued engagement, vs subsequent EAGxes.

We considered it and I definitely agree that people who are attending their first EAGx are much more likely to be affected. The issue is that people in that bucket are already likely to be dramatically increasing their level of engagement, so it's hard to draw conclusions from the results on that front.

I'm not sure that's true, though I may be biased by my own case

I would agree with Rebecca here. In my case, attending my first EAGx dramatically changed the course of my career (I'm now doing community building professionally), and if I hadn't attended, I think there's a fairly high chance that I'd now be in a totally different non-EA related career, and most likely a less impactful one (but that bit's harder to measure, of course). In comparison, I attended EAG London this year, which was my second in-person conference. And while it was a really great experience all round - I learned a lot, made a lot of useful connections & strengthened old ones, and also gave (hopefully useful) advice to others - it was definitely less impactful than my first EAGx in terms of how much it directly affected my career path.

If you ever run another of these, I recommend opening a prediction market first for what your results are going to be :)

cc @Nathan Young

What do you think can be gained from that?

Something like "noticing we are surprised". Also I think it would be nice to have prediction markets for studies in general and EA seem like early adopters (?)

I don't know why this was so downvoted/Xed

:/

I'm very confused why you were downvoted, this seems like an obviously good idea with no downsides I can see - it makes it much clearer whether a result is surprising or not and prevents hindsight bias

I downvoted and disagreevoted, though I waited until you replied to reassess.

I did so because I see absolutely no gain from doing this, I think the opportunity cost means it's net negative, and I oppose the hype around prediction markets - it seems to me like the movement is obsessed with them but practically they haven't led to any good impact.

Edit: regarding 'noticing we are surprised' - one would think this result is surprising, otherwise there'd be voices against the high amount of funding for EA conferences?

I think the methodology here was too weak for the result to be too surprising, even conditioned on EA conferences working

Do you maybe want to voice your opinion of the methodology in a top level comment? I'm not qualified to judge myself and I think it'd be informative.

I have seen some moderate pushback on the amount of money spent on EAGs (though not directed at EAGxes)

Hi, thanks for putting together a serious attempt to measure the effect of EAGx conferences.

Whilst I am highly supportive of your attempts to do this, I wanted to ask about your methodology. You are attempting a difference in differences, with a control and treatment group, but immediately state: ‘In the data from the initial collection period (‘before’), the control group and treatment groups showed several significant differences.’

To my knowledge this effectively means you no longer have suitable control and treatment groups, which makes a difference-in-differences analysis ill-advised?

Very happy to be wrong here! But whilst you made some attempts to find a control and treatment group, your initial analysis of the two groups suggests you are comparing apples to oranges?

Control and treatment groups can have some differences, but critically we want the parallel trends assumption to hold. From your descriptive analysis of the two groups this is unlikely to be true. Would you agree that your control group and treatment group would have gone on to engage with EA in different ways if the conference had never happened? I’d say so, given that you demonstrate they are rather different groups of people!

If I’m right - I may not be - this still leaves room for analysing the effects of conference attendance on attendees’ behaviour, just without the control group and using a different methodology. I hope I’m wrong - but I think this means that we can't use this part of your results.

Thanks for the feedback Sam. It's definitely a limitation but the diff-in-diff analysis still has significant value. The specific way the treatment and control groups are different constrains the stories we can tell where the conference did have a big (hopefully positive) effect but appeared not to due to some unobserved factors. If none of these stories seem plausible then we can still be relatively confident in the results.

The post mentions that the difference in donation appears to be driven by 3 respondents, and the idea that non-attendee donations fall by ~50% without attendance but would be unchanged with attendance seems unlikely (and confounded with high-earning professionals presumably having less time to attend).

Otherwise, the control group seems to have similar beliefs but is much less likely to take EA actions. This isn't surprising given attending EAGx is an EA action but does present a problem. Looking only at people who were planning to attend but didn't (for various reasons) would have given a very solid subgroup but there were too few of these to do any statistical analysis. Though a bigger conference could have looked specifically at that group, which I'd be really excited to see.

With diff-in-diff we need the parallel trends assumption as you point out, but we don't need parallel levels: if the groups would have continued at their previous (different) rates of engagement in the absence of the conference then we should be fine. Similarly, if there's some external event affecting EA in general and we can assume it would have impacted both groups equivalently (at least in % of engagement) then the diff-in-diff methodology should account for that.
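To make the "parallel trends, not parallel levels" point concrete, here is a minimal sketch of the basic two-group, two-period diff-in-diff estimate computed from group means. The numbers are hypothetical and the code is only an illustration of the estimator, not the analysis actually run in the post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GroupMeans:
    """Mean outcome for one group before and after the conference."""
    before: float
    after: float

    def change(self) -> float:
        return self.after - self.before


def diff_in_diff(treatment: GroupMeans, control: GroupMeans) -> float:
    """Treatment change minus control change. Interpretable as an effect
    estimate only under the parallel trends assumption."""
    return treatment.change() - control.change()


# Hypothetical means: very different levels, no change in either group.
levels_differ = diff_in_diff(GroupMeans(before=4.0, after=4.0),
                             GroupMeans(before=2.5, after=2.5))
# Hypothetical common shock: both groups drop by the same amount.
common_shock = diff_in_diff(GroupMeans(before=4.0, after=3.75),
                            GroupMeans(before=2.5, after=2.25))
print(levels_differ, common_shock)  # 0.0 0.0
```

Different levels and a shared shock both cancel out. What the estimate cannot handle is a trend that affects only one group, e.g. attendees who would have declined absent the conference, which is the scenario discussed next.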

So (excluding the donation case) we have a situation where a more engaged group and a less engaged group both didn't change their behavior.

If the conference had a big positive effect then this would imply that in the absence of the conference the attendees / the more engaged group would have decreased their engagement dramatically but that the effect of the conference happened to cancel that out. It also implies that whatever factor would have led attendees to become less engaged wouldn't have affected non-attendees (or at least is strongly correlated to attendance).

You could imagine the response rates being responsible, but I'm struggling to think of a credible story for this: the 41% of attendees who dropped out of the follow-up survey would presumably be those least affected by the conference, which would make the data overestimate the impact of EAGx. Perhaps the 3% of contacted people who volunteered for the control group were much more consistent in their EA engagement than the (more engaged on average) attendees who volunteered, and so were less affected by an EA-wide downturn that conference attendance happened to cancel out? But this seems tenuous and 'just-so'.

To me the most plausible way this could happen is reversion to the mean: EA engagement is highly volatile on a year-to-year level with only the most engaged going to EAGx and that results in them maintaining their high-level of EA engagement for at least the next year (roughly cancelling out the usual decline).
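A toy simulation can show why reversion to the mean matters here. It assumes, purely for illustration, that each person's yearly engagement is a stable trait plus independent yearly noise, that the most engaged 20% in year one are the ones who "attend", and that attending has no effect at all. All parameters are hypothetical.

```python
import random
from statistics import mean


def simulate_regression_to_mean(n=10_000, trait_sd=1.0, noise_sd=1.0,
                                attend_share=0.2, seed=0):
    """Toy model with NO conference effect: engagement = trait + yearly noise.
    Returns (attendee year-1 mean, attendee year-2 mean,
             non-attendee year-1 mean, non-attendee year-2 mean)."""
    rng = random.Random(seed)
    people = []
    for _ in range(n):
        trait = rng.gauss(0.0, trait_sd)          # stable long-run engagement
        year1 = trait + rng.gauss(0.0, noise_sd)  # engagement observed this year
        year2 = trait + rng.gauss(0.0, noise_sd)  # next year, conference has no effect
        people.append((year1, year2))
    # The most engaged people in year 1 are assumed to be the ones who attend.
    people.sort(key=lambda p: p[0], reverse=True)
    k = int(n * attend_share)
    attendees, others = people[:k], people[k:]
    return (mean(p[0] for p in attendees), mean(p[1] for p in attendees),
            mean(p[0] for p in others), mean(p[1] for p in others))


a1, a2, o1, o2 = simulate_regression_to_mean()
print(f"attendees: {a1:.2f} -> {a2:.2f}; others: {o1:.2f} -> {o2:.2f}")
```

In this toy model the "attendees" decline and everyone else rises even though the conference does nothing, so flat engagement among real attendees could be consistent with a positive effect. Whether real EA engagement is this volatile is exactly what a longer cohort study would need to establish.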

This last point is the biggest issue with the analysis in my opinion. Following attendees over the long-run with multiple surveys per year (to compare results before vs. after a conference) would help a lot, but huge incentives would be needed to maintain a meaningful sample for more than a couple of years.

The aforementioned Ollie from the CEA events team here. Thanks so much James and Miles for running this survey!

I reviewed this post before it went out, but I just wanted to quickly drop by to say that our team has seen this and that I intend to take some time soon to read the comments and provide some additional reactions and commentary here.

I don't understand this methodology. Shouldn't we expect most attendees of EAGx events to be roughly at equilibrium with their EA involvement, and even if they are not, shouldn't we expect the marginal variance explained by EAGx attendance to be very small?

I feel like in order for this study to have returned anything but a null-result, the people who attend EAGx events would have had to become a lot more involved over time. But, on average, in an equilibrium state, we should expect EAGx attendees to be as likely to be increasing their involvement in EA as they are to be decreasing their involvement in EA (otherwise we wouldn't be at equilibrium and we should expect EAGx attendance to increase each year).

Of course, de-facto EA is not in equilibrium, but I don't see how this study would be measuring anything but the overall growth rate of EA (which we know from other data is currently relatively low and probably slightly negative). If EA is shrinking overall, then we should expect members of EA (i.e. people who attend EAGx events) to be taking fewer EA-coded actions over time. If EA is growing overall then we should expect members of EA to continue to get more involved.

Whether EA is shrinking or growing is of course an interesting question to ask, but this also feels like a very indirect and noisy way of measuring the EA growth rate.

Thanks for the comment, this is a really strong point.

I think this can make us reasonably confident that the EAGx didn't make people more engaged on average and even though you already expected this, I think a lot of people did expect EAGs would lead to actively higher engagement among participants. We weren't trying to measure the EA growth rate of course, we were trying to measure whether the EAGs lead to higher counterfactual engagement among attendees.

The model where an EAG matters could look something like: There are two separate populations of EA: less-engaged members who don't attend EAGs, and more-engaged members who attend EAGs at least sometimes. And attending an EAG helps push people into being even more engaged and maintains their level of engagement that would otherwise flag. So even if both populations are stable, EAG keeps the high-engagement population more engaged and/or larger.

A similar model where EAG doesn't matter is that people stay engaged for other reasons and people attend EAG believing incorrectly it will help or as de-facto recreation.

If the first model is true then we should expect EA engagement to be a lot higher in the few months after the conference and gradually fall until at least the few weeks before the conference (and spiking again during/just after the conference). But if the second model is true then any effects on EA engagement from the conference should disappear quickly, perhaps within a few weeks or even days.

While the survey isn't perfect for measuring this (6 months is a lot of time for the effects to decay, and it would have been better to run the initial survey weeks before the conference, since the upcoming conference might already have been getting people excited), I think it provides significant value since it asks about behavior over the past 6 months in total. You'd expect that if the conference had a big effect on maintaining motivation (which averages out to a steady state across years), people would donate more, have more connections, attend more events etc. 0-5 months after a conference than 6-12 months after.
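One way to make the "0-5 months vs 6-12 months" comparison concrete is to assume, purely for illustration, that the conference's boost to engagement decays exponentially with some half-life. Averaging that boost over each six-month window shows the first window should always be larger, by a factor of 2 raised to (6 / half-life). The decay shape and all parameter values are our assumptions, not something measured in the study.

```python
import math


def mean_boost(start: float, end: float, initial_boost: float,
               half_life: float) -> float:
    """Average of b(t) = initial_boost * 2**(-t / half_life) over
    [start, end] months after the conference (assumed exponential decay)."""
    k = math.log(2) / half_life  # decay rate per month
    integral = initial_boost * (math.exp(-k * start) - math.exp(-k * end)) / k
    return integral / (end - start)


# Hypothetical half-life of 3 months: the first six months should show
# about four times the average boost of the following six months.
first = mean_boost(0, 6, initial_boost=1.0, half_life=3)
second = mean_boost(6, 12, initial_boost=1.0, half_life=3)
print(round(first / second, 6))  # 4.0
```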

Given we don't see that, it seems harder to argue that EAGs have a big effect on motivation and therefore harder to argue that EAGs play an important role in maintaining the current steady-state motivation and energy of attendees.

It could still be that EAGs matter for other reasons (e.g. a few people get connections that create amazing value) but this seems to provide significant evidence against one major supposed channel of impact.

I agree that it provides a bit of evidence on whether EAGs have a very spiky effect, but I had a relatively low prior on that. My sense is the kind of people who attend EAGs already do a bunch of stuff that connects them to the EA community, and while I do expect a mild peak in the weeks afterwards, 6 months is really far beyond where I expect any measurable effect.

I don't understand this. Why would it be important for EAGs impact to have a spiky intervention profile? If EAGs and EAGx events are useful in a way where the benefit is spread out so that they form a relatively stable baseline for EA involvement (which honestly seems almost inevitable given the kind of behavior that you were measuring for EA involvement), then we would measure no effect with your methodology.

I agree there wouldn't be new effects at that point, but we're asking about total effects over the 6 months before/since the conference. If the connections etc. persist for 6 months then it should show up in the survey and if they have disappeared within a few months then that indicates these effects of EAGx attendance are short-lived, which presumably makes them far less significant for a person's EA engagement and impact overall.

If the EAG impacts are spiky enough that they start dissipating substantially within several months (but get re-upped by future attendance) then we should be able to detect a change with our methodology (higher engagement after). You're right that if the effects persist for many years (and don't stack much with repeat attendance) then we wouldn't be able to measure any effect on repeat attendees, but this would presume that it isn't having much impact on repeat attendees anyway. On the other hand, if effects persist for many years then we should be able to detect a strong effect for first-time attendees (though you'd need a bigger sample).

To be more explicit here: we absolutely did not learn whether EAGx events made people more engaged on average. Because overall EA membership behavior is not increasing/EA is not growing, it is necessarily the case that the average EAGx attendee is reducing their involvement over time and that the average non-EAGx attendee is increasing their involvement over time. This of course does not mean EAGx is not having an effect, it just means that in aggregate, there is churn in who participates in highly-engaged EA activities.

The effect-size of EAGx could be huge and the above methodology would not measure it, because the effect would only show up for EAs who are just in the process of getting more engaged.

That's an interesting point: under this model, if EAGx's don't matter then we'd expect engagement to decrease for attendees, and stable engagement could be interpreted as a positive effect. A proper cohort analysis could help determine the volatility/churn to give us a baseline and estimate the magnitude of this effect among the sort of people who might attend EAG(x) but didn't.

That said, I still think that any effect of EAG(x) would presumably be a lot stronger in the 6 months after a conference than in the 6 months after that (/6 months before a conference), so if it had a big effect and engagement of attendees was falling on average then you'd see a bump (or stabilization) in the few months after an event and a bigger decline after that. Though this survey has obvious limitations for detecting that.

What did you mean by the last sentence? Above I've assumed that it has an effect not just for new people who are attending a conference for the first time (though my intuition is that this would be bigger) but also in maintaining (on the margin) engagement of repeat attendees. Do you disagree?

It's great to see research like this being done. I strongly agree that more resources should be spent on efforts like this, and also that independent evaluation seems particularly useful. I also strongly concur that self-report measures about the usefulness of EA services are likely to often be significantly inflated due to social desirability / demand effects, and that efforts like this which try to assess objective differences in behaviour or attitudes seem neglected.

One minor point is that I didn't follow the reasoning here:

If I'm reading this correctly, it seems like the first tests you're referring to were looking at the large baseline differences between the different treatment and control groups (e.g. one group being older, more professionals etc.). But I don't follow how this tells us about the power to detect differences in changes in the outcome variables between the two groups. It seems plausible to me that we'd expect the effect size for differences in EA behaviours/attitudes as a result of the conference to be smaller than the initial difference in composition between conference attendees and non-attendees. Looking at the error bars on the plots for the key outcomes, the results don't seem to be super tightly bounded. And various methods that one might use to account for possible differential attrition affecting these analyses would likely further reduce power. But either way, why not just directly look at the power analyses for these latter tests, rather than the first set of initial tests?

To be clear, I doubt this would change the substantive conclusions. And it further speaks to the need for more investment in this area so we can run larger samples.
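For readers wondering what such a direct power analysis might look like, here is a minimal sketch, not the authors' method. It assumes a simple two-sided, two-sample z-test on six-month change scores, normally distributed changes with a common standard deviation in both groups, α = 0.05 and 80% power; the function name and the group sizes used below are hypothetical, not the study's actual numbers. It ignores baseline covariates and attrition, which (as noted above) would likely reduce power further.

```python
from math import sqrt
from statistics import NormalDist


def minimum_detectable_effect(n_treat: int, n_control: int, sd_change: float,
                              alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest true difference in mean change scores (attendees minus
    non-attendees) that a two-sided two-sample z-test detects with the
    given power.

    Simplifying assumption: change scores are normal with the same
    standard deviation `sd_change` in both groups.
    """
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value of the two-sided test
    z_power = z.inv_cdf(power)           # quantile matching the desired power
    # Standard error of the difference between the two group means.
    se_diff = sd_change * sqrt(1 / n_treat + 1 / n_control)
    return (z_alpha + z_power) * se_diff


# Hypothetical equal group sizes, with changes measured in SD units.
for n in (50, 100, 200):
    print(n, round(minimum_detectable_effect(n, n, sd_change=1.0), 3))
```

Because the detectable effect scales with the square root of 1/n, quadrupling both groups only halves it, which is one way to see why detecting effects much smaller than those already ruled out would need substantially larger samples.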

Hi David,

The point I was trying to communicate here was simply that our design was able to find a pattern of differences between the control and treatment groups which is interpretable (i.e. in terms of different ages and career stages). I think this provides some validation of the design, in that if large enough differences exist then our measures pick up these differences and we can statistically measure them. We don't, for instance, see an unintelligible mess of results that would cast doubt on the validity of our measures or the design itself. Of course, if, as you point out, the effect size for attending the conference is smaller, then we won't be able to detect it given our sample size. For most of our measures this was around 15-20%. But given we were able to measure sufficiently large effects using this design, I think it provides justification for thinking that a large enough sample using a similar study design would be able to detect smaller effects, if they existed. Hope that clarifies a bit.

Thank you for this. I really appreciate this research because I think the EA community should do more to evaluate interventions (e.g., conferences, pledges, programs), especially repeated ones, given its focus on cost-effectiveness. I also like the idea of having independent evaluations.

Possibly, though there is a trade-off here. We also hear in our 3–6 month follow-up surveys that people don't really remember conversations from the event. Maybe that's just a sign that nothing super valuable occurred but if you attend lots of events, questions about an event that occurred >6mo ago can be difficult to answer even if it was impactful. If we ask straight after the event, and 3–6 months later and a year later, I'd worry about survey fatigue.

Fair. Perhaps during the post event survey you could ask people who have attended previous events if they want to report any significant impacts from those past events? Then they can respond as relevant.

>emails to those accepted to the EAGxAustralia 2023 conference but who did attend

Was this meant to say "did not attend"?

Just to comment on the results: in hindsight, these results seem pretty obvious. In the first years of my Bachelor in Psychology we had a saying: "The best predictor of behavior is past behavior". I also know of some research on the effectiveness of interventions over time: interventions targeting things such as implicit attitude change (e.g., https://psycnet.apa.org/manuscript/2016-29854-001.pdf) or belief in fake news (https://onlinelibrary.wiley.com/doi/full/10.1111/jasp.13049) typically lose much of their effectiveness within days or weeks, so seeing an intervention have no detectable effect after 6 months does not seem surprising at all. But it would have been nice to see what most people who consider funding EAGx, as well as people who research behavior change, expected beforehand, to know whether my lack of surprise is just hindsight bias. Regardless, great to see the impact of EAGx being tested!

Just wanted to say thanks for your eye-opening analysis.

And I'm not surprised because Fodor relentlessly releases amazing content.

Check out his podcast if you don't believe me or just want to learn more about science.

Thanks James and Miles, I appreciate your summary at the start of this post. Good to read this, and it's important work to attempt to validate the core aims of the conference. A couple of ideas for survey tweaks:

Thanks Rudstead, I agree about the "keen beans" limitation, though if anything that makes them more similar to EAGx attendees (to whom they're supposed to be a comparison). In surveys in general there are also steeply diminishing returns to chasing a higher response rate with more reminders or higher cash incentives.

(2) Agreed, but hopefully we'll be able to continue following people up over time. The main limitation is that loads of people in any cohort study are going to drop out over time, but if it succeeded such a cohort study could provide loads of information.

I like these ideas but have something to add re your 'keen beans', or rather, their opposite - at what point is someone insufficiently engaged with EA to bother considering them when assessing the effectiveness of interventions? If someone signs up to an EA mailing list and then lets all the emails go to their junk folder without ever reading them or considering the ideas again, is that person actually part of the target group for the intervention? They are part of our statistics (as in, they count towards the 95% of 'people on the EA mailing list' who did not respond to the survey), is that a good thing or a bad thing?

Very interesting, thanks so much for doing this!