This is a Draft Amnesty Week draft.

Last year, someone who was considering projects related to diversity and inclusion in EA noted that one challenge was not knowing what had been tried before. I drafted this summary but never got it out the door.

The atmosphere around DEI interventions, in the US at least, is different than it was when I first drafted this. I'm not intending this as commentary on anything going on currently; I'm just finally publishing an old draft. The main update I've made is adding some demographic data that recently came out from the 2024 EA Survey.

I don’t mean this post as a claim that EAs have done all the right things. I mean it as a historical record so people can better gauge what’s been tried over time, what might be worth trying differently, and what gaps are unfilled.

I’m sure I’ve left out some efforts by mistake; sorry about that. Some efforts listed here aren’t nominally about diversity, but serve the purpose of supporting people who might feel marginalized in EA. I’m not including most projects that were explored / had some initial steps, but were never publicly carried out.

Organizational / program efforts

2015: CEA’s EA Outreach team has a summer intern focused on how to support demographic diversity in EA and particularly at conferences. An early version of the code of conduct for EA Global is written, and the series has a community contact person to address any problems that come up. My impression is that the conference organizers particularly want to avoid the phenomenon of “something upsetting happens at a conference, it’s nobody’s job to do anything about it, and then it’s hashed out on the internet,” like Elevatorgate. Later in 2015 I take on the role, which eventually evolves into the community health team.

2016: EAGx conference series launches, including in Hong Kong and Nairobi.

2016: EA Global makes a more serious effort to platform a more demographically diverse array of speakers than in its initial year. This continues over time.

2017-2022: Encompass, led by Aryenish Birdie, provides DEI services and advising in the farmed animal advocacy space. Several EA organizations get consulting services from Encompass.

Starting around 2018: meetups for identity / affinity groups at EAG and EAGx conferences, eventually including

- Women and nonbinary people

- People of color

- Socioeconomic diversity (for people who grew up lower-income, first in their family to attend university, etc.)

- Meetups for different religious groups, or for religious people generally

Various times: People start online groups to connect people from identity groups:

- Women and nonbinary people in EA

- Underrepresented racial and ethnic groups in EA

- Parents in EA

- LGBT+ in EA

- Disabled and chronically ill people in EA

- Diversity and inclusion in EA

- EAs from immigrant backgrounds

- EA for Jews

- Buddhists in EA

- Muslims for Effective Altruism

- EA for Christians

Various times: Some EA groups develop a code of conduct.

Various times: Some EA groups have some kind of community contact person, either volunteer or sometimes paid. (Finland, Sweden, Denmark, Norway, Israel, London, NYC, Germany, France, and others.) This role aims to help address interpersonal or community problems that come up within the group.

2018 onward: CEA’s groups team shares a folder of resources for EA groups, including community health guidance about welcomingness and handling problems that arise. 2019: EA Hub creates a website of resources for group organizers, including guidance on community health, which later evolves into this website.

2019: Sky Mayhew, who has a background in research on diversity interventions, joins the community health team at CEA. Her work explores projects around mentorship and ways to connect EAs who would otherwise feel isolated because of demographics.

2019: Magnify Mentoring (originally called WANBAM) is founded by Kathryn Mecrow-Flynn and Catherine Low, sparked by a discussion in a meetup for women and nonbinary people at EA Global. The goal is to provide support and mentorship for women, nonbinary people, and trans people in doing more good with their careers. It later pilots broader programs (see 2023 entry).

2020: EA Anywhere forms to connect people who aren’t geographically near other groups, don’t want to attend their local group, or can’t attend their local group for some reason (including physical mobility).

2020: several EA groups and orgs start explicitly encouraging diversity on applications for fellowships and other programs, e.g.: "We are committed to building a diverse applicant pool. There is some evidence suggesting that individuals from underrepresented groups tend to underestimate their abilities. [Group] does not want the application process to dissuade potential candidates. We strongly encourage interested candidates to apply regardless of gender, race, ethnicity, nationality, physical ability, educational background, socioeconomic status, etc."

Ongoing, ramping up in 2020: Will MacAskill is the main face of EA, but he makes an ongoing effort to recruit and mentor people who aren't white men to be spokespeople or public intellectuals in EA. He's pitched me on this at intervals since 2016. My perception is that it doesn't work as well as hoped; people are typically busy with their own work and/or don't want to be a public face of EA. In 2023 Will and his team start more actively connecting new spokespeople with journalists / op-ed opportunities / podcasts; my perception is that this works somewhat better, although it still has limited impact.

Over time: Many EA groups put attention toward how to support group members from underrepresented groups, and how to have better numeric balance within groups. One example is EA Sweden’s strategy of changes to communications, workshops, and focused career coaching, resulting in significant increases in women at their national conference and as new members.

2021-2023: Open Philanthropy runs a scholarship for international students admitted to top undergraduate programs in the US or UK.

2022: Before the fall of FTX, CEA offers travel support to many EAG attendees, allowing more attendees to travel from low and middle income countries (and for lower-income people in high income countries). Travel grants drop when funding is scarcer post-FTX.

2023: GPI holds a fully-funded 4-day global priorities workshop for students from underrepresented groups.

By 2023 / 2024, EAGx conferences include Latin America, India, Warsaw, and the Philippines, plus a virtual conference accessible anywhere in the world.

2023: Magnify Mentoring runs a pilot aimed at underrepresented groups generally, including "people from low to middle income countries, people of color, people from low-income households, etc" aiming at high-impact altruism. [edited, see comment from KMF]

2024: Animal Advocacy Careers offers a series of hiring workshops with a focus on inclusivity.

2024: Launch of Athena, a research mentorship program for women in AI alignment research.

Efforts in hiring / staffing at EA orgs

2016 onward: Many EA orgs don’t originally have any written policy about parental leave. As in other industries, EA orgs begin to provide paid maternity leave (generous by US nonprofit standards, not necessarily by European standards) in an effort to be more successful at hiring and retaining women.

Ongoing over time: EA orgs think about how to improve diversity in hiring. Some practices (but not all) I’m aware of that EA-related orgs tried when recruiting for jobs, fellowships, etc:

- Asking staff and others to brainstorm specifically for candidates from underrepresented backgrounds, and doing personal outreach to these people

- Using language in job listings that doesn’t slant male

- Posting job openings on platforms aimed at groups underrepresented in EA, as well as on generic EA platforms

- Emphasis on trial tasks, to avoid weighting credentials or superficial characteristics

- Anonymizing the initial stages of applications (but several orgs note that this doesn’t seem to improve rates of people from underrepresented backgrounds making it into the pool).[1]

- Keeping hiring rounds open for longer until there are candidates from underrepresented groups who met the hiring criteria (one org says they stopped doing this after it meant keeping rounds open for a long time while programs struggled without enough hires)

- Extra encouragement throughout the hiring process for candidates from underrepresented groups

For some organizations, these practices have yielded a more demographically diverse staff (overall, not necessarily in leadership). I’ve heard mixed opinions on the effects these policies have had on organizations and individuals.

As far as I understand, it’s not legal in the US or UK to use race or gender as a criterion in a final hiring decision. There may be some exceptions for formal affirmative action plans in the US, or as a tiebreaker for equally-qualified candidates in the UK, but note that EA organizations tend to say there’s a strong difference between the person they hired and their second-preference applicant.

2017-2022: I carry out interview projects for 4 organizations with especially homogeneous staff, interviewing women* who were familiar with the org (people who had worked there, interned there, or had been offered jobs and decided not to work there) about what the org should consider changing. In most cases it’s not clear to me it led to useful changes. Some themes:

- It’s good if staff in the majority group make clear they value diversity and want a good experience for people in the minority group

- If ops staff aren’t treated well, and ops staff are disproportionately women, problems compound

- It's discouraging if women who aren’t ops staff get mistaken for visitors or ops staff

- Framing matters: “We invited you because of your demographics” is an insulting frame, vs. “We’re aware that bias might have led to overlooking people who don’t fit the usual mold”

- When there are few people of your demographic in a space, you feel you're sticking your neck out more if you speak up / feel you'll reflect on your group if you make mistakes.

*In theory these would have focused on race too, but sadly I think something like 3 people of color worked at these combined 4 organizations at the time of the interviews. I’ve heard EA staff of color point out that diversity efforts often focus on gender because there are so few staff of color, ironically. In this case, the staff of color I interviewed didn’t have much to add about race-specific inclusivity on top of general inclusivity.

Other research / content / major discussions in the community

More here: EA Forum posts tagged diversity and inclusion

2011-2014: Early CEA / GWWC discussions about the gender skew in EA; internal presentations by Bernadette Young and Carolina Flores on reducing it

2014: Several online discussions about how women are treated in EA, and how communities should handle concerns about unfair treatment.

2015: AGB's post EA Diversity: Unpacking Pandora's Box

2015 and 2016: some content at EA Globals about diversity from me and others; I don’t think the early content was very notable / useful.

2016: My post on making EA groups more welcoming

2015-2017: ACE is among the first EA orgs to write some pieces about social justice, especially racial equity

2016: Oxford and Cambridge UK EA/GWWC groups have committees focused on diversity (these committees / working groups come and go over time)

2017: Meeting about diversity and inclusion at EAG Boston, organized fairly spontaneously after a speaker raises the topic.

2017: Various workshops at EA Global on making EA groups more welcoming, similar to this post about this topic

2017: Georgia Ray’s EA Global talk and writeup about the research on diversity and team performance

2017: Kelly Witwicki’s Why & How to Make Progress on Diversity & Inclusion in EA and ensuing discussion

2016-2018: EA London collects data on attendance of their group by gender: “Women* are just as likely to attend one EA event as men. However, women are less likely to return to future EA events. Women and men are about equally likely to attend most learning-focused events, like talks and reading groups. Women are much less likely to attend socials and strategy meetings.” [*based on people’s names and gender presentation]

2018 onward: At first all EA groups are run by volunteers, but some groups start to get funding for organizer time. Within CEA, there’s attention to how much we should additionally weight groups that add geographic and demographic diversity. Kaleem's post An elephant in the community building room summarizes narrower and broader approaches. CEA strategy fluctuates between broader and narrower groups support over the years. Funding for groups in different areas rises and falls, based on changing CEA strategy, changing funding, and availability of strong candidates for community-building roles.

2019: Community health team starts to grow, with hire of Sky Mayhew who previously did academic research on diversity interventions. Sky explores a lot of models for possible DEI work, and views mentorship as one of the most promising. More data collecting within CEA begins, to track things like satisfaction with our programs across different demographic groups.

2019 onward: Multiple pieces on the dominance of the English language in EA

2019: EA London carries out focus groups on what women and men find appealing and offputting about the group

2019: Debate about a post in the Diversity and Inclusion in EA FB group, resulting in the writing of Making discussions in EA groups inclusive. Pushback from The importance of truth-oriented discussions in EA.

2019: CEA writes a stance on DEI

2019: Future of Life Institute profiles Women for the Future

2019: Vaidehi Agarwalla carries out a survey on ethnic diversity in EA

April 2020: 80,000 Hours post on Anonymous contributors answer: How should the effective altruism community think about diversity?

May 2020: Statistics on Racial demographics at longtermist organizations

June-August 2020: Racial justice becomes more salient, especially in the US. Debate within a lot of EA orgs and projects about whether and how to make some kind of statement about racial justice, as a lot of US institutions are doing at the time. At least among the Extremely Online parts of the community, there’s a lot of worry about how EA is handling current events and debates. Some people have strong worries about EA not taking social justice seriously enough; others worry that cancel culture and poor epistemics will have bad effects in EA.

An unusual number of online arguments about social justice follow, with some people leaving groups and platforms over them.

2020: Post and discussion on Geographic diversity in EA by AmAristizabal

2020 onwards: The EA Infrastructure Fund, Open Philanthropy, and Meta Charity Funders (2024-) provide funding for EA groups in addition to CEA. This results in broader community building strategies across funders, sometimes leading to locations getting funded organisers even if they hadn't been prioritized by CEA.

2020 / 2021: Heated discussion in an Effective Animal Advocacy online space about racism. ACE staff who were planning to speak at the CARE conference withdraw from the conference based partly on comments from a staff member at the sponsoring org. It seems possible that ACE will split from EA over this area, especially after they say they will not engage more with discussion about it on the EA Forum. Criticism here. Later, under new leadership, ACE voices the intention to stay involved with EA.

2023: Examples of content not explicitly based on demographics, but that I expect is more relevant to people from underrepresented groups:

- Power dynamics between people in EA by Julia Wise

- My experience with imposter syndrome — and how to (partly) overcome it by Luisa Rodriguez

2022: EA career guide for people from LMICs by Surbhi B, Mo Putera, varun_agr, AmAristizabal

Late 2022 / early 2023: After fall of FTX, a period of internal and external scrutiny on EA. Some responses reflect on diversity or experiences of different groups, e.g. I’m a 22-year-old woman involved in Effective Altruism. I’m sad, disappointed, and scared.

January 2023: Nick Bostrom makes a statement about a racist email he wrote 20 years before. Community responses include:

- A personal response to Nick Bostrom's "Apology for an Old Email" by Habiba Banu

- My Thoughts on Bostrom's "Apology for an Old Email" by Cinera

February 2023: TIME article about cultural problems in EA including sexual misconduct, and Owen Cotton-Barratt identifies himself as one of the people described. Community responses include:

- Why I Spoke to TIME Magazine, and My Experience as a Female AI Researcher in Silicon Valley by Lucretia

- Share the burden by Emma Richter

- If you’d like to do something about sexual misconduct and don’t know what to do… by Habiba Banu

- EA Community Builders’ Commitment to Anti-Racism & Anti-Sexism

- Things that can make EA a better place for women by lilly

2023: CEA's community health team carries out an interview series and research project on gender-related experiences in EA, based on EAG feedback, groups surveys, some data from Rethink Priorities' EA Survey, and approximately 40 interviews that Charlotte conducted with women and non-binary people about their experiences. The results are not currently public, but were shared in 2023 with many EA organisation staff, office managers, and group organisers to inform efforts for their spaces.

2023: EAGxRotterdam holds a brainstorming session to produce advice on what might make EA better for women.

2023: Giving What We Can and One for the World produce a guide for inclusive events. At some point, Giving What We Can publishes a code of conduct for its own events.

2024: Series on experiences of non-Western EAs by Yi-Yang

2024: Alex Rahl-Kaplan and Marieke de Visscher, as community building grantees, carry out preliminary research on evidence-based arguments for and against prioritizing diversity. (Not currently published)

2024: EA Asia retreat session about diversity and EA in low and middle income countries

2024: EA Forum discussion about an event focused on prediction markets, which had significant overlap with the EA community in terms of people attending and speaking

- My experience at the controversial Manifest 2024 by Maniano

- Why so many “racists” at Manifest? by Austin

Demographic trends over time

Location

2020: The EA Survey finds that EAs live mostly in a few countries: “69% of respondents to the EA survey currently live in the same set of five high-income, western countries (the US, the UK, Germany, Australia, and Canada) that were most common in previous years.” But it’s changing a bit over time: “The percentage of respondents outside the top 5 countries has grown in recent years, from 22% in 2018, to 26% in 2019 and 31% in 2020.” “Overall satisfaction with the EA community is lower in the US and UK than in other regions and countries”, i.e. non-hub countries have higher satisfaction.

2019 - 2023: Areas with notable growth in EA include the Philippines and Latin America.

Race

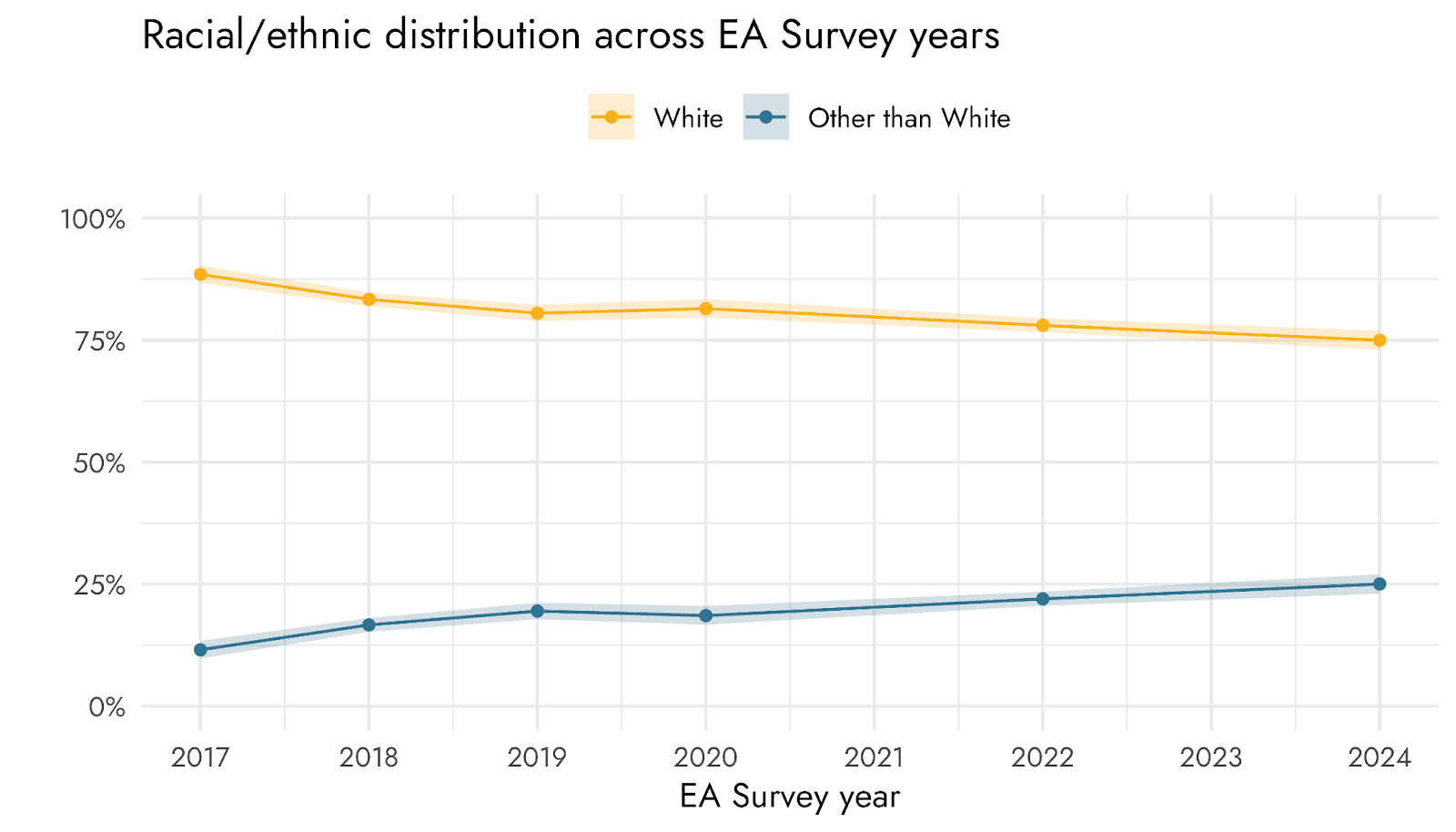

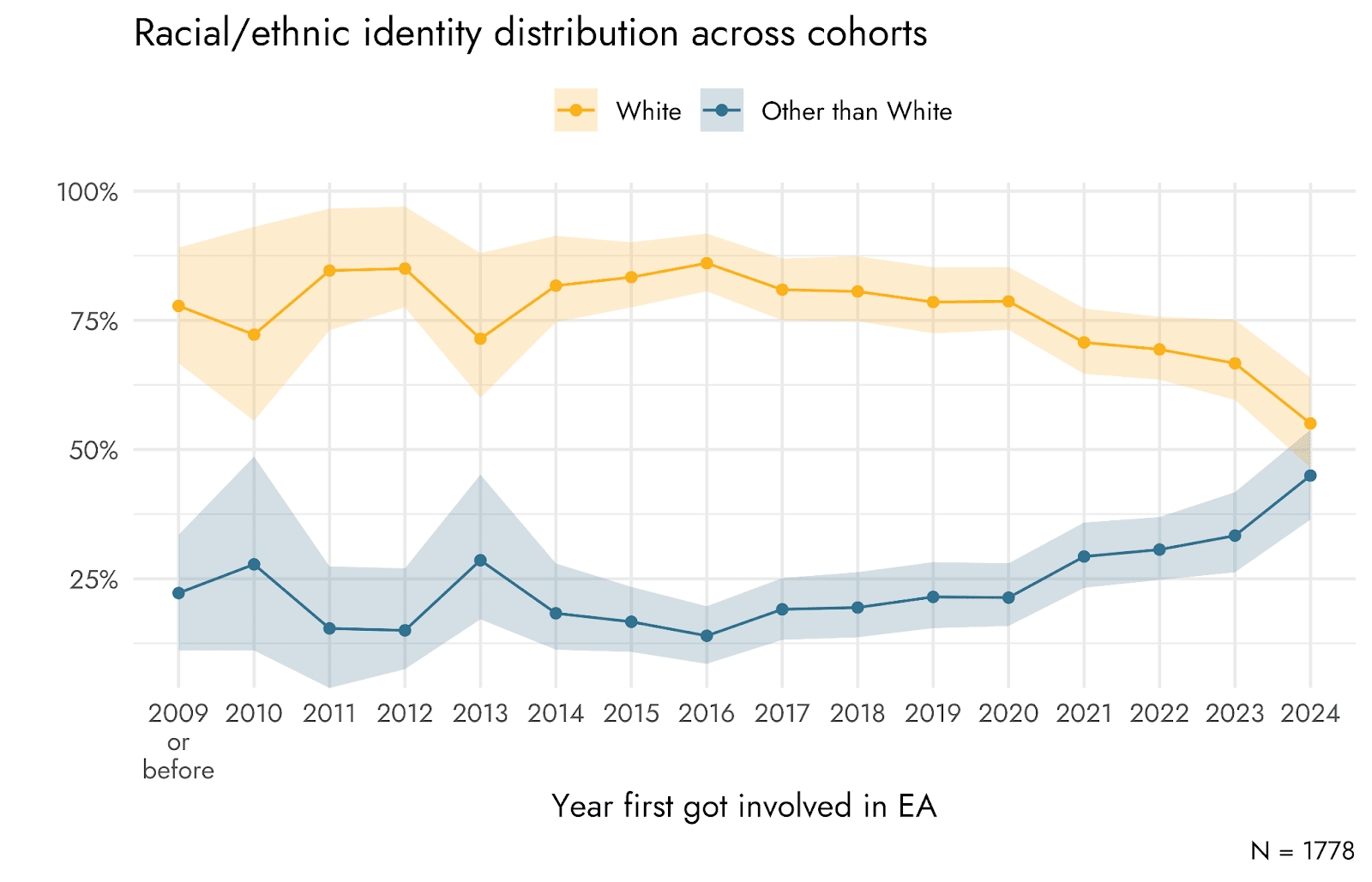

The population filling out the EA Survey has become slightly less white over time.

But the newest cohort (people who got involved in EA during the last year as of the 2024 survey) is markedly more racially diverse, and more diverse than the newest cohort was in the 2022 survey.

Gender

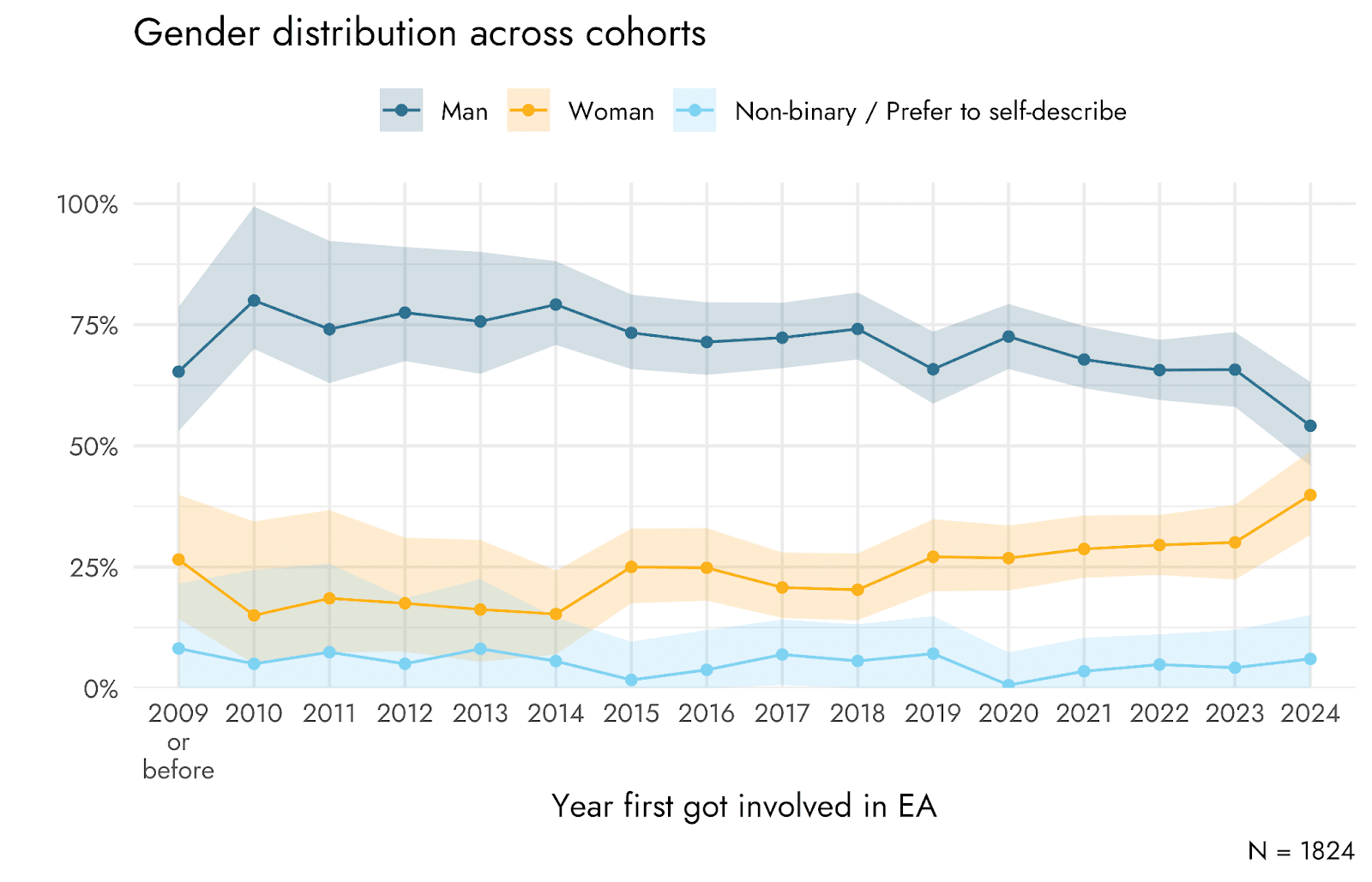

2014-2024: EA Survey participant ratios have gone from about 75% male in early years to about 69% male over the last 6 years.

As with race, the cohort of people who got involved in 2024 is markedly more balanced. This might fade if retention is lower for women, but it is still a different pattern than among newcomers in the 2022 Survey. The EA Survey team noted last week: "This pattern is compatible with an increased recruitment of women (and/or decreased recruitment of men) or disproportionate attrition of women over time, which we will assess in a future post." I'm interested to see what they think might be happening here!

Gender balance varies by country, but it also varies quite a lot by year within the same country, which might reflect actual changes in EA in those countries but also might be statistical noise.

Political views

Note this post is mostly about demographic diversity, and isn't aiming to cover diversity of ideas or viewpoints. But I'll stray into that for a minute.

EA has been consistently left-leaning since data collection started in 2019. In 2024, 70% of EA survey respondents were left or center-left, less than 5% right or center-right, the rest libertarian or center. More detail here.

Age

EA has become less consistently young. From 2014 to 2024, median age moved from 25 to 31, and the age spread widened.

Experiences at events

2023: The EA Global team publishes info about race and gender demographics at EAG, and attendee response data like how welcome they feel. Women and non-binary attendees reported that they find EA Global slightly less welcoming (4.46/5 compared to 4.56/5 for men). There was no statistically significant difference in feelings of welcomeness or overall recommendation scores across gender or race/ethnicity groups.

[1] On anonymizing applications:

- The evidence on anonymizing identities produces mixed results as far as whether more or fewer women and minority candidates advance to the next round, with some settings being markedly more likely to move forward resumes they think belong to women and racial minorities.

- Personally, I favor anonymizing applications in the early stages of EA hiring processes partly because organization staff are likely to know specific individuals applying. I feel better able to grade a trial task neutrally when I don’t know which applicant is which.

This is great stuff. I often find it hard to remember that a lot of initiatives have happened (despite having read 80% of this list already), so this timeline is a good reference.

As an aside, I think others may benefit from reading about diversity initiatives outside EA to remember that this is a hard problem. It's totally consistent for EA to be above-the-curve on this and still not move the needle much (directionally I think those two things are true, but I'm not confident on magnitudes), so linking some stuff I've been reading lately:

EA is "above-the-curve" on DEI stuff?

No, EA is the only place in the entire world I have been (and I have been many varied places) where I - a white straight male - am considered diverse, or at least semi-diverse... simply because I come from a typical white background that's not super wealthy; I'm the first to go to college in my family (and not ivy league); etc. (Or at least, there are "socioeconomic diversity" meetups at EAGs where they list me as diverse for these reasons. So I'm going off their definitions.)

And EA is aimed in many ways at maintaining exclusivity, even while incredible people like Julia make great strides in making it more inclusive. For example, some people in EA think my EA-oriented after-school program is a waste of time because it's not directed at the highest achievers. And indeed, there are few EA-oriented programs that are not directed at the highest achievers. Even 80,000 Hours career advice applies not at all to the average person, but is oriented only to those who are already going to spend 6+ years shelling out money for undergrad and grad school, etc. (at least last I checked).

This anecdote seems like very weak evidence for your claim. Claiming EA is 'aimed in many ways' at something implies a concerted effort to achieve it, even at the cost of other goals. In contrast, some people saying a program is a waste of time means just that - it's not producing much value. The whole point of EA is to prioritize - disfavoring donkey sanctuaries doesn't mean EAs hate donkeys, it just means there are other, better things to focus on.

This seems clearly false to me. To test it, I looked at the very first article in their career guide, one of their flagship products. It is about doing engaging work that helps others, doesn't have any major downsides, etc. As far as I can see, almost every part of it applies to average people. The income-satisfaction charts they include have an x-axis running from $10k to $210k, a range that covers the median income. It is not in any way dependent on your having a postgraduate qualification. And I have no idea where you get '6+ years shelling out money' from; surely most of their advice also applies to autodidacts, people who finish more quickly, people in countries with state-funded universities, people who get scholarships, etc.?

(on the other hand you have a point that my anecdote is not the best... as plenty of people in EA do like my ideas for broadening out EA to the general public... so it's not like many people hate on me for this, although actually you seem to think this is a waste of time yourself?)

We can discuss the pros and cons of EA not being diverse. I think there are pros to its focus on the things it does, and I don't mean to sound like I'm bashing it 100%. But I think it's pretty clear that EA is not diverse as is, and doesn't resonate with people outside the general Ivy League world too too much.

I also share these frustrations with career advice from 80,000 Hours and the EA Forum. There was a time about 2 years back when my forum activity was a lot of snarky complaints (of questionable insight) about career advice and diversity.

Like you mentioned, the career advice usually leaves a lot to be desired in the concrete details of navigating a lack of mentors, lack of credentials, lack of financial runway, family obligations, etc. I've sometimes wondered about writing an article to fill in the gap, but it's not exactly a "one article" sized hole. Maybe that's a yearlong project you or I or someone else can work on someday.

As for my comment on "above-the-curve", I think we're in agreement but I could have worded this better. I don't think the community is diverse but the initiatives are much higher quality than I see elsewhere. Usually, these initiatives range from bad to useless. Whereas this list of EA diversity initiatives feels mostly harmless or slightly positive nudges. A few feel like they'll pay dividends in a few years.

All makes sense Geoffery and glad it's not just me who thinks about these things, especially on the 80k advice.

I agree that this list that Julia presents is very impressive and way better than what a lot might do, in some contexts. Your point is well taken and your initial comment was good too, I maybe could have read the meaning a little better so maybe it was me that boxed it in.

Thanks so much! These threads I'm posting on here are, I think, the first time I'm having productive back-and-forths on the forum, so that's kinda cool :)

Something specific I would like to see... looking for feedback on the concept:

More outreach to bringing in specific people from low and middle income countries to EAGs. Most obviously on the topic of global health and global poverty. (But also for other topics.)

For example, Fistula Foundation, Give Directly, and many other orgs work with people who grew up and/or currently live/work in these places. These orgs could be contacted to see who they thought was ideal to bring in to EAGs, and I'm sure they would have many recommendations.

The people we are aiming to help should be well within the conversation. Or at least slightly in it. Rather than just be the helpers/(elites) meeting amongst themselves.

I do see this happen a bit; I've sought out and met with a number of people who were born in various low-income countries. They are always so insightful and have a lot of ideas about complex root problems etc that EA can gloss over in just looking at the straightforward fixes / band-aids.

(However to be honest I do also wonder if people in these cases think the long trip was worth it, because I don't know if many EAs really care to hear from such people as much as they care to hear from people more similar to themselves.)

Personally I would love for such people to not just be present, but also giving lots of talks.

I could personally find a bunch of these people if anyone thought it was worth my while; I already have a few ideas and specific people in mind.

To the 2 people who marked "disagree", and anyone else, again I am looking for feedback on this, so please take a moment to explain why you disagree with this, if you dare :)

I did not click Disagree, but I will say that I'm not sure I agree that "The people we are aiming to help should be well within the conversation". I don't mean to say that we should ignore their perspectives, values, or opinions, but I don't think having them attend EA Global is a useful way to achieve that. I've had a lot of interesting conversations with GiveDirectly and AMF beneficiaries, but I also think that the median beneficiary would not have much to contribute at EA Global, and if you choose exceptional beneficiaries to represent the class of beneficiaries as a whole, that leads to a different set of problems.

That's a fair point except that I certainly did not say nor mean "the median beneficiary" should be within the conversation at EAG and EAG-type contexts. I said that orgs like GiveDirectly and Fistula Foundation could be contacted to see which outstanding people they are in contact with might be ideal to bring to EAGs.

The people I speak of don't even necessarily need to be beneficiaries at all. They should just be, as I said, "people who grew up and/or currently live/work in these places." They might indeed be beneficiaries who now also work with these orgs in some capacity, or maybe they are not beneficiaries at all. People who grow up in a place generally have a way better understanding of its problems and solutions, and sometimes even out-of-the-box thinking about them.

I have met a few such people at EAGs, so they are sometimes there, in numbers like 1-3 per conference. They are fairly easy to find, and I sometimes wonder if I'm the only one who seeks them out, and if I have met more of these people than anyone else, even though I don't go to many conferences overall. Personally I really enjoy speaking to them and have learned a lot from them. (But the fact that not many people seem to care to find them and talk with them makes me wonder whether they themselves would find it worthwhile to visit an EAG in the first place.)

[edit 2 min after posting: "if you choose exceptional beneficiaries to represent the class of beneficiaries as a whole, that leads to a different set of problems." - I'm not sure what you meant by this part. surely it seems better to me to have some representation than zero representation.]

Just ideas; thank you so much for commenting back with your thoughts!!

If your idea is that in-country employees/contractors of organizations like GiveDirectly, Fistula Foundation, AMF, MC, Living Goods, etc., should be invited to EA Global — I agree, and I think these folks often have useful information to add to the conversation. Though I don't assume everyone in these orgs is a good fit, many are and it's worth having those voices. Some have an uncritical mindset, basically just doing what they're told, while others are a little bit too sharp-elbowed and are just looking at what can get funders' attention without caring how good it actually is.

On the other hand, if your idea is to (for example) invite some folks from villages where GiveDirectly is operating, I pretty strongly feel that this would be a waste of resources. We can get a much better perspective from this group by surveying (and indeed GiveWell and GiveDirectly have sponsored such surveys). If you were to just choose randomly, I think most of those chosen wouldn't be in a good position to contribute to discussions; and if you were to choose village elites, then you end up with a systematic bias to elite interests, which has been a serious systematic problem in trying to make bottom-up charitable interventions work.

"2023: Magnify Mentoring expands to serve people from underrepresented groups generally. 'It includes, but is not limited to, people from low to middle income countries, people of color, people from low-income households, etc.'"

The intention here was to pilot a round for people from underrepresented groups not captured by gender. We haven't reached consensus on whether we will continue; it depends mostly on the impact assessment of the round (which concludes this month). While it is accurate to say Magnify initially focused on improving diversity and inclusivity in the EA community, the organization's strategy is now focused on broadly supporting the careers and wellbeing of people who are working in evidence-based initiatives, with or without an EA link. I mention this mostly because I don't want people to self-select out of applying for mentorship or mentoring with us.

Thanks for the correction! I've adjusted the entries, do let me know if there's anything still not right.

Note: I'm really unsure what I believe about the following comment, but I'm interested in hearing what others have to say about it.

Whenever we add an additional condition on the type of thing we want (say, diversity), we sacrifice some amount of the terminal aim (getting the best people). While there are good reasons to care about diversity (optics, founder effects, making people feel more comfortable), there are also more controversial ones (for instance, in some cases like grant-making, using diversity of sex or race as a proxy for getting a "more diverse outlook" on a particular subject). Let's call optics/founder effects "instrumental diversity" and a more diverse outlook "diversity." Given this framing, I think two points are important:

Note: I understand that this framing is weird, because diversity of knowledge/experience is also said to be good instrumentally. I still wanted to make a separate conceptual category for it because 1) it's more controversial and 2) some conditions might apply to it that may not apply to other constraints.

I'm interested in hearing what others have to say about this, especially if you think this comment overrates how much EAs care about diversity (vs. instrumental diversity). I'm also interested in hearing about any reasons diversity might be important that I might be missing or underestimating.

I'm not 100% confident, but I suspect that I've read more on diversity than most EAs, so I'll venture a guess and share some musings. The goal of many diversity efforts is less about adding an additional condition, and more about removing a "hidden" condition that we weren't really cognizant of. In fact, my impression is that it is incredibly rare to have sex, race, etc., as a condition for employment (although it may often be an unofficial condition in a social, non-professional context). I do think that often this is done poorly and it often fails, but I don't think that is an EA-specific thing.

Broadly speaking, my informal mental framework is that there tend to be two reasons for diversity and similar initiatives at an organization/company: the justice/fairness argument, and the innovation/creativity argument. I'm at risk of rambling here, so I'll instead just leave a link to a Notion page that I put together a while back: Joseph's notes on Diversity.

I don't have any data, but I hypothesize that most engaged EAs care about both instrumental diversity and diversity[1]. Each of them has uses/benefits, but it would be a bit foolish to naively assume that either of them is useful in all contexts, for all end goals. I do think that a diverse outlook (or experience, or knowledge) can be very helpful in certain contexts, but unfortunately we (I mean people in general) often use membership in a certain group as a sloppy and imperfect proxy for that outlook.[2] When superficial diversity is what gets emphasized, then you end up with "All skin folk ain’t kinfolk." I'm reminded of a quote from The Privileged Poor: How Elite Colleges Are Failing Disadvantaged Students, from a Mexican student at Harvard College who "struggled to come to terms with the huge gap between himself and other people who looked like him."

For a more light-hearted critique, here is a meme/joke about diversity among Wall Street analysts. (For anybody without the cultural context, the snarky joke here is that these people all come from incredibly wealthy backgrounds and are quite similar in every way aside from superficial diversity.)

[1] Although we might instead label them as something like "the appearance of diversity" and "diversity of perspective." Both have value, but American pop culture tends to focus much more on the former. I might also be engaging in too much sloppy generalization, assuming that my values and perspectives are widely shared, so take this all with a grain of salt.

[2] I'm reminded of hearing how many Black Americans were very happy in 2008 when Obama was elected president, but also felt that in a certain sense he didn't really represent them, because his childhood and experiences were so different. The thought experiment I occasionally use is to picture a stereotypical college brochure showing a group of students, and imagine which variation would be more diverse:

I have thought this to be very important for quite a while now, especially given the overall vibe of EA purposefully aiming to be exclusive (aiming at the most elite in a variety of ways). Thank you so much for your continued work on this subject, Julia!!!!!!

I remember showing this video talk of yours in 2020 to some fellows when I was helping run an EA fellowship, and one student, a woman of color, particularly appreciated your thoughts and tips. I don't see your talk listed above, but it's really great as well (though perhaps you did more extensive writing that's better to link than this particular video, idk).

Nice to think that some of your hard work on this has really paid off! The recent numbers you present suggest that EA is at least slightly more diverse overall (with perhaps a sudden increase just in the last year, which hopefully continues).

Thanks again!!!!!

I definitely don't want to frame this as primarily my work — I think projects like Magnify have played more of a role. But thank you for the kind words!

I also think it's really hard to tell what contributes to change or lack of change in demographics.

I think the Encompass link is expired.

Thanks, I've changed it to an article about them.

I really appreciate that you consolidated all this. This is a really helpful overview.

Thanks for organizing these efforts into a timeline! This is a post I'm sure I will reference many times.

Executive summary: This post provides a historical overview of diversity, equity, and inclusion (DEI) efforts in the Effective Altruism (EA) community, detailing key organizational initiatives, hiring practices, community discussions, and demographic trends over time.

Key points:

This comment was auto-generated by the EA Forum Team. Feel free to point out issues with this summary by replying to the comment, and contact us if you have feedback.