Agreement

78 % of my donations so far have gone to the Long-Term Future Fund[1] (LTFF), which mainly supports AI safety interventions. However, I have become increasingly sceptical about the value of existential risk mitigation, and currently think the best interventions are in the area of animal welfare[2]. As a result, I realised it made sense for me to arrange a bet with someone very worried about AI in order to increase my donations to animal welfare interventions. Gregory Colbourn (Greg) was the 1st person I thought of. He said:

I think AGI [artificial general intelligence] is 0-5 years away and p(doom|AGI) is ~90%

I doubt doom in the sense of human extinction is anywhere as likely as suggested by the above. I guess the annual extinction risk over the next 10 years is 10^-7, so I proposed a bet to Greg similar to the end-of-the-world bet between Bryan Caplan and Eliezer Yudkowsky.

Meanwhile, I transferred 10 k€ to PauseAI[3], which is supported by Greg, and he agreed to the following. If Greg or any of his heirs are still alive by the end of 2027, they transfer to me or an organisation of my choice 20 k€ times the ratio between the consumer price index for all urban consumers and items in the United States, as reported by the Federal Reserve Economic Data (FRED), in December 2027 and April 2024. I expect inflation in this period, i.e. a ratio higher than 1. Some more details:

- The transfer must be made in January 2028.

- I will decide in December 2027 whether the transfer should go to me or an organisation of my choice. My current preference is for it to go directly to an organisation, such that 10 % of it is not lost in taxes.

- If for some reason I am not able to decide (e.g. if I die before 2028), the transfer must be made to my last stated organisation of choice, currently The Humane League (THL).
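The payout rule above is simple enough to sketch in a few lines. The function name and the example index values below are hypothetical and only illustrate the formula (20 k€ times the ratio of the December 2027 index to the April 2024 index). They are not actual FRED figures.

```python
def bet_payout_eur(cpi_dec_2027: float, cpi_apr_2024: float,
                   base_eur: float = 20_000.0) -> float:
    """Inflation-adjusted transfer: base amount times the CPI ratio."""
    if cpi_apr_2024 <= 0:
        raise ValueError("The starting index must be positive.")
    return base_eur * cpi_dec_2027 / cpi_apr_2024


# Hypothetical index values (NOT real data): 10 % cumulative inflation.
example = bet_payout_eur(cpi_dec_2027=110.0, cpi_apr_2024=100.0)
print(f"{example:.0f} EUR")
```

With a ratio above 1, as expected under inflation, the transfer exceeds the nominal 20 k€.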

Like Founders Pledge’s Patient Philanthropy Fund, I have my investments in Vanguard FTSE All-World UCITS ETF USD Acc. This is an exchange-traded fund (ETF) tracking global stocks, which have provided annual real returns of 5 % from 1900 to 2022. In addition, Lewis Bollard expects the marginal cost-effectiveness of Open Philanthropy’s (OP’s) farmed animal welfare grantmaking “will only decrease slightly, if at all, through January 2028”[4], so I suppose I do not have to worry much about donating less over the period of the bet of 3.67 years (= 2028 + 1/12 - (2024 + 5/12)). Consequently, I think my bet is worth it if its benefit-to-cost ratio is higher than 1.20 (= (1 + 0.05)^3.67). It would be 2 (= 20*10^3/(10*10^3)) if the transfer to me or an organisation of my choice was fully made, so I need 60 % (= 1.20/2) of the transfer to be made. I expect this to be the case based on what I know about Greg, and information Greg shared, so I went ahead with the bet.
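The break-even reasoning in the paragraph above can be reproduced as a short calculation. This is only a sketch: the variable names are mine, and the inputs are the figures stated in the text (5 % annual real return, 10 k€ staked, 20 k€ returned in real terms).

```python
# Duration of the bet in years, using the convention from the text.
bet_start = 2024 + 5 / 12
bet_end = 2028 + 1 / 12
years = bet_end - bet_start

# Opportunity cost: what the stake would grow to if left invested.
real_return = 0.05
required_ratio = (1 + real_return) ** years

# Benefit-to-cost ratio if the transfer is made in full.
full_ratio = 20_000 / 10_000

# Fraction of the transfer that must be made for the bet to break even.
required_fraction = required_ratio / full_ratio

print(f"years = {years:.2f}")
print(f"required benefit-to-cost ratio = {required_ratio:.2f}")
print(f"required fraction of transfer = {required_fraction:.0%}")
```

Rounded to two decimals, this gives the 3.67 years, 1.20 ratio and 60 % threshold quoted above.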

Here are my and Greg’s informal signatures:

- Me: Vasco Henrique Amaral Grilo.

- Greg: Gregory Hamish Colbourn.

Impact

I expect 90 % of the potential benefits of the bet to be realised. So I believe the bet will lead to additional donations of 8.72 k$ (= (0.9*20 - 10)*10^3*1.09). Saulius estimated corporate campaigns for chicken welfare improve 41 chicken-years per $, and OP thinks “the marginal FAW [farmed animal welfare] funding opportunity is ~1/5th as cost-effective as the average from Saulius’ analysis”, so I think corporate campaigns affect 8.20 chicken-years per $ (= 41/5). My current plan is donating to THL, so I expect my bet to improve 71.5 k chicken-years (= 8.72*10^3*8.20).
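As a rough check of the arithmetic above, here is a minimal sketch. The variable names are mine, and I am assuming the factor of 1.09 is a euro-to-dollar conversion, which the text does not state explicitly.

```python
fraction_realised = 0.9   # share of the potential benefits expected to be realised
payout_keur = 20          # transfer owed if the bet is won, in k EUR (real terms)
stake_keur = 10           # amount transferred to PauseAI up front, in k EUR
usd_per_eur = 1.09        # ASSUMPTION: the 1.09 in the text is a EUR -> USD rate

# Expected additional donations, in USD.
additional_usd = (fraction_realised * payout_keur - stake_keur) * 1e3 * usd_per_eur

# Marginal chicken-years improved per dollar: Saulius' average divided by 5.
chicken_years_per_usd = 41 / 5

chicken_years = additional_usd * chicken_years_per_usd

print(f"additional donations = {additional_usd:,.0f} USD")
print(f"chicken-years improved = {chicken_years:,.0f}")
```

This matches the 8.72 k$ and 71.5 k chicken-years in the text to three significant figures.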

I also estimate corporate campaigns for chicken welfare have a cost-effectiveness of 15.0 DALY/$[5]. So I expect the benefits of the bet to be equivalent to averting 131 kDALY (= 8.72*10^3*15.0). According to OP, “GiveWell uses moral weights for child deaths that would be consistent with assuming 51 years of foregone life in the DALY framework (though that is not how they reach the conclusion)”. Based on this, the benefits of the bet are equivalent to saving 2.57 k lives (= 131*10^3/51).
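The conversion to DALYs and lives saved can be sketched the same way. Variable names are mine, and the inputs are the figures quoted above.

```python
additional_usd = 8.72e3   # expected additional donations, from the text
daly_per_usd = 15.0       # author's estimate for corporate chicken welfare campaigns
years_per_life = 51       # foregone life-years per child death, per the OP quote

dalys_averted = additional_usd * daly_per_usd
lives_saved = dalys_averted / years_per_life

print(f"DALYs averted = {dalys_averted:,.0f}")
print(f"equivalent lives saved = {lives_saved:,.0f}")
```

The text rounds the intermediate result to 131 kDALY before dividing, which gives 2.57 k lives. Without intermediate rounding, the result is about 2.56 k, so the last digit depends on the rounding convention.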

Acknowledgements

Thanks to Greg for feedback on the draft, to Lewis Bollard for commenting on the future marginal cost-effectiveness of OP’s farmed animal welfare grantmaking, and to many other people who discussed the bet with me.

- ^

According to Giving What We Can's dashboard.

- ^

Under expected total hedonistic utilitarianism, which I strongly endorse.

- ^

PauseAI has a Dutch legal entity behind it (Stichting PauseAI), so it would have been better if I had transferred 10 k€ to a Dutch donor who would then donate to PauseAI, and pay less income tax as a result. I reached out to Effective Altruism Netherlands, Effective Giving and Giving What We Can, and shared the idea on Hive’s Slack, and the Facebook group Highly Speculative EA Capital Accumulation, but I did not manage to find such a donor.

- ^

This makes theoretical sense. Funding should be moved from the worst to the best periods/interventions until their marginal cost-effectiveness is the same.

- ^

1.44 k times the cost-effectiveness of GiveWell’s top charities.

If there was a marketplace where you could make bets like this in a low friction way, without much risk besides simply being wrong, would you use it? Please agree-vote for yes, disagree-vote for no

For anyone who wants to bet on doom:

This would not be good for you unless you were an immoral sociopath with no concern for the social opprobrium that results from not honouring the bet.

There is some element of this for me (I hope to more than 2x my capital in worlds where we survive). But it's not the main reason.

The main reason it's good for me is that it helps reduce the likelihood of doom. That is my main goal for the next few years. If the interest this is getting gets even one more person to take near-term AI doom as seriously as I do, then that's a win. Also the $x to PauseAI now is worth >>$2x to PauseAI in 2028.

This is not without risk (of being margin called in a 50% drawdown)[1]. Else why wouldn't people be doing this as standard? I've not really heard of anyone doing it.

And it could also be costly in borrowing fees for the leverage.

I think it's slightly bad when people publicly make negative EV (on a financial level) bets that are framed as object-level epistemic decisions, when in reality they primarily are hoping to make up for the negative financial EV via PR/marketing benefits for their cause[1]. The general pattern is one of several pathologies that I was worried about re:prediction markets, especially low-liquidity ones.

But at least this particular example is unusually public, so I commend you for that.

An even more hilariously unsound example is this Balaji/James bet (https://www.forbes.com/sites/brandonkochkodin/2023/05/02/balaji-srinivasan-concedes-bet-that-bitcoin-will-reach-1-million-in-90-days/?sh=2d43759d76c6).

I really wish we didn’t break the fourth wall on this, but EA can’t help itself;

“The phrase that comes closest to describing this phenomenon is: "The Disclosive Corruption of Motive"

This phrase, coined by philosopher Bernard Williams, suggests that revealing too much about our motivations or reasons for acting can actually corrupt or undermine the very motives we initially had.

Williams argued that some motives are inherently "opaque" and that excessive transparency can damage their moral value. By revealing too much, we can inadvertently transform our actions from genuine expressions of care or kindness into mere calculations, thereby diminishing their moral worth.”

Agree with this. I think doing weird signaling stuff with bets worsens the signal that bets have on understanding people's actual epistemic states.

But I don't even think it's negative financial EV (see above - because I'm 50% on not having to pay it back at all because doom, and I also think the EV of my investments is >2x over the timeframe).

I would hypothetically use it, but I expect that on almost every issue there will be people both to the left and to the right of me who would rather bet with each other than bet with me, so I won't end up making any bets. I think a marketplace like this would be most useful for people with outlier beliefs.

(There might be some way of resolving this problem, I haven't really thought about it.)

but if there's one person to your right and two to your left, then one of them wants to bet with you, right?

(stylised example, hopefully you get what I mean)

Just flagging that I, and others I know, would also be happy to take folks up on the animal welfare side of this trade if AI-concerned folks are interested! Reach out if so (and happy to discuss basically all amounts above $1,000).

I'd personally strongly consider betting $1000-$10,000 USD so long as it's secured against the value of some illiquid asset (e.g. a building). Please DM me if you're interested in betting that the world ends.

Do you know a way of securing such a bet against a house in a water-tight way? I've been told by someone who's consulted a lawyer that such a civil contract would not be enforceable if it came down to enforcement being necessary.

$250k?

I'm also interested in the same bet against you, Greg. Happy to do $10K.

I am surprised that you don't understand Eliezer's comments in this thread. I claim you'd do better to donate $X to PauseAI now than lock up $2X which you will never see again (plus lock up more for overcollateralization) in order to get $X to PauseAI now.

Did you follow the thread(s) all the way to the end? I do see the overcollateralized part of the lock-up again. And I'm planning on holding ~50% long term (i.e. past 5 years) because I'm on ~50% that we make it past then.

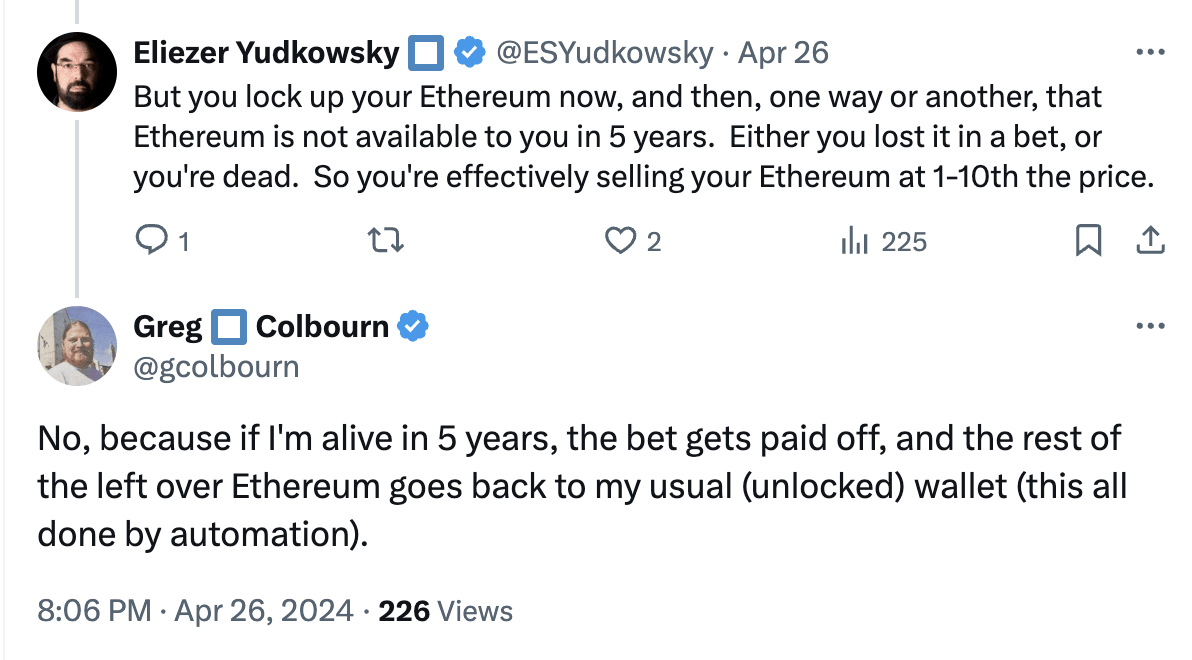

Alas, Eliezer did not answer my question at the end.

@EliezerYudkowsky

You can't get loans at better rates, accounting for your P(doom) (and/or without setting aside as much collateral)?

No. I can only get crypto-backed loans (e.g. Aave). Currently on ~10% interest; no guarantee they won't go above 15% over 5 years + counterparty risk to my collateral.

I’m an AI-concerned person who would also likely take this bet (or similar bets) against Greg's side, i.e. I don't think that AGI will kill us all by the end of 2027. Please do reach out if you’d like to bet in the $100-$10k range.

(Edited for clarity)

Note that I made an exception on timelines for this bet, to get it to happen as the first of its kind. I'd be more comfortable making it 5 years from the date of the bet. Anyway, open to doing $10k, especially if it's done in terms of donations (makes things easier in terms of trust, I think). But I'm also going to say that I think the signalling value would be higher if it was against someone who wasn't EA or concerned about AI x-risk.

When you say 'AI concerned' does that mean you would be interested in taking Greg's side of the bet (that everyone will die)? That is my interpretation, but the fact that you didn't say this explicitly makes me unsure.

Oops, I meant that I would like to bet against Greg, but I can see how my last comment was ambiguous, and I appreciate your comment.

Happy to see this! These kinds of trades seem quite great to me. Everyone wins in (their) EV.

Thanks for making it public.

Greg made a bad bet. He could do strictly better, by his lights, by borrowing 10K, giving it to PauseAI, and paying back ~15K (10K + high interest) in 4 years. (Or he could just donate 10K to PauseAI. If he's unable to do this, Vasco should worry about Greg's liquidity in 4 years.) Or he could have gotten a better deal by betting with someone else; if there was a market for this bet, I claim the market price would be substantially more favorable to Greg than paying back 200% (plus inflation) over <4 years.

[Edit: the market for this bet is, like, the market for 4-year personal loans.]
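To make the loan comparison concrete, the two options can be put on the same footing as annualised rates. This is a sketch with my own function name. It takes the comment's figure of ~15K repaid on 10K after 4 years and the post's figure of 2x (in real terms) after 3.67 years, and it ignores inflation on the bet side.

```python
def annualised_rate(repay_multiple: float, years: float) -> float:
    """Constant annual rate implied by repaying `repay_multiple` times the principal."""
    return repay_multiple ** (1 / years) - 1


loan_rate = annualised_rate(15_000 / 10_000, 4)   # loan sketched in the comment
bet_rate = annualised_rate(20_000 / 10_000, 3.67)  # bet terms, before inflation

print(f"loan: {loan_rate:.1%} per year")
print(f"bet:  {bet_rate:.1%} per year (plus inflation)")
```

On these numbers the bet is equivalent to borrowing at roughly twice the annual rate of the hypothetical loan, conditional on having to repay at all.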

Thanks for the comment, Zach! Jason suggested something similar to Greg:

Greg replied the following on April 17:

@OscarD and @calebp, I am just tagging you to let you know you are right that a major motivation for Greg is signalling his concern for AI risk (see quote just above).

Yes, this. The major motivation is signalling, or "putting my money where my mouth is". But also, the loans available to me (e.g. Aave) might not have that much better interest rates over the timescale.

This isn't really against Zach’s point, but presumably, a lot of Greg's motivation here is signalling that he is serious about this belief and having a bunch of people in this community see it for advocacy reasons. I think that taking out a loan wouldn't do nearly as well on the advocacy front as making a public bet like this.

This isn’t true if Greg values animal welfare donations above most non-AI things by a sufficient amount. He could have tried to shop around for more favorable conditions with someone else in EA circles but it seems pretty likely that he’d end up going with this one. There’s no market for these bets.

What's the relevant threshold here? Greg has to prefer $10k going to animal charities in 3 years' time over that $10k going partly to debt interest and partly to whatever he wants?

Thanks for the comment, mlsbt!

I think Greg would have been happy to go ahead with this bet regardless of my donation plans.

For reference, Greg has had a similar offer to this bet for around a year on X, although I guess many do not know about it (I did not until 2 months ago).

Yes, I would go ahead regardless of the other party's plans for their winnings (but for the record I very much approve of Vasco's valuing of animal welfare).

I originally intended this bet to be made with enemies, not friends[1]. i.e. I was hoping an e/acc would take it, rather than an EA!

I'm using "friend" in a general sense here, as in someone broadly value aligned. I don't actually know Vasco.

Want to chip in that the interest for this post and huge comment thread lends evidence towards the bet being good for Greg's reason below, even if he could have done far better on the odds/financial front.

"Also, the signalling value of the wager is pretty important too imo. I want people to put their money where their mouth is if they are so sure that AI x-risk isn't a near term problem. And I want to put my money where my mouth is too, to show how serious I am about this."

I would be interested in @Greg_Colbourn's thoughts here! Possibly part of the value is in generating discussion and publicly defending a radical idea, rather than just the monetary EV. But if so maybe a smaller bet would have made sense.

Yes (see comments above in this thread). I think a smaller amount would not have the same impact (there is already Caplan's bet with Yudkowsky) - it needs to be actually a significant amount of money. I have actually offered to go up to $250k (worst case I can sell my rental house to pay off the bet; and I do have significant other investments).

So far I am in discussion with a couple of others for similar amounts to the bet in OP, but the problem of guaranteeing my payout is proving quite a difficult one[1]. I guess making it direct donations makes things a lot easier.

My preferred method is trading on my public reputation (which I value higher than the bet amounts); but I can't expect people to take my word for it.

It could also be done by drawing up a contract with my house as collateral, but I've been told that this likely wouldn't be enforceable.

Then there is using escrow (but the escrow needs to be trusted; and the funds tied up in the escrow - and I prefer to hold crypto, which complicates things).

Then there are crypto smart contracts, but these need to be trusted, and also massively overcollateralised to factor in deep market drawdowns (with the opportunity cost that brings).

I'll just throw my name in as well against Greg in case there are any takers. Would be willing to make bets up to 6 figures

Thanks for sharing, Marcus! @Greg_Colbourn, I am tagging you just in case you are not aware of the above.

I've been getting a few offers from EAs recently. I might accept some. What I'd really like to do though is bet against an e/acc.

I mean, in terms of signalling it's not great to bet people (or people from a community) who are basically on your side, i.e. think AI x-risk is a problem, but just not that big a problem; as opposed to people who think the whole thing is nonsense and are actively hostile to you and dismissive of your concerns.

This is great! I am personally very interested in making such bets but don't know how to get started / do it well. Maybe this content exists elsewhere, but I think a step by step instructional on how to set up a bet well (among well meaning people) could be really valuable!

Unfortunately it seems as though bets like this[1] (for significant sums of money) might be truly unprecedented. Still working on trying to establish workable mechanisms for trust / ensuring the payout (but I think having it in terms of donations makes things easier).

Peer-to-peer, between people who don't know each other.

Also, one potential benefit from Greg's POV is that it increases incentives for those betting against him to have AI slowed and reduces their potential COI in AI progress, although the effect is probably small, especially for those bullish on AI progress but skeptical of doom.

Great to see this public demonstration of both of your respective beliefs!

There seem to be quite a few people who are keen to take up the bet against extinction in 2027... are there many who would be willing to take the opposite bet (on equal terms (i.e. bet + inflation) as opposed to the 200% loss that Greg is on the hook for)?

Do people also mind where their bet goes? In this case I see the money went to PauseAI; would people be willing to make the bet if the money went to that person for them to just spend as they want? I could see that someone who believed p(doom) by 2027 was 90%+ might just want more money to go on holiday before the end if they doubt any intervention will succeed. This is obviously hypothetical for interest's sake, as a real trade would need some sort of guarantee the money would be repaid etc. etc.

Thanks for the questions, James!

Note Greg does not expect to lose much money, since 10 k$ plus a 50 % chance of losing 20 k$ equals 0[1].

Greg having an altruistic motivation to go ahead with the bet makes me more confident that he will pay in case he loses. I also like that my money is going towards an organisation:

In reality, Greg's median time of human extinction is a little after January 2028, so he expects to lose a little bit of money.

Although I did not formally consider it a donation in the context of e.g. my Giving What We Can Pledge.
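The expected-value claim in this comment (10 k$ received now, 50 % chance of paying back 20 k$) can be checked with a minimal sketch. The function name is mine, and it ignores inflation, investment returns and the time value of money.

```python
def expected_net_kusd(received: float, repay: float, p_repay: float) -> float:
    """Expected net financial position: amount received minus expected repayment."""
    return received - p_repay * repay


# Figures from the comment: 10 k$ now, 20 k$ owed if humanity survives (p = 0.5).
print(expected_net_kusd(received=10, repay=20, p_repay=0.5))

# Break-even survival probability: below this, the bet has positive expected value.
break_even_p = 10 / 20
print(break_even_p)
```

If the probability of having to repay is slightly above 50 %, as the footnote suggests, the expected net position is slightly negative.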

For the impact estimation, are you taking into account how much of the donation to THL would be used specifically for corporate campaigns? (since they also work on movement building, for instance)

Thanks for the question, Joan! No, I did not take that into account. I assumed the cost-effectiveness of the donation is equal to that of corporate campaigns for chicken welfare, so I implicitly supposed other activities my donation supports are as cost-effective as the campaigns. I have not investigated which of THL's programs are more cost-effective, but I am happy to defer to them. Relatedly, OP grants to THL in 2021, 2022 and 2023 were also "general support" (which I assume means unrestricted).

However, it is possible to make a restricted donation to the Open Wing Alliance[1]:

For reference, here is the break-down of THL's expenditure in 2023:

From here:

I really don't understand why Greg agreed to this, or why anyone would agree. It is a sure-loss situation. If he is wrong and we all (most of us) are alive and kicking in 4 years, he has to pay. If he's right, he's dead. Could anyone explain to me why on Earth anyone would agree to this bet? Thanks.

He's using the money to try to prevent AI doom (or at least delay it). If the money turns out to be decisive in averting AI doom, that would be worth far more to him than having to pay it back 2x + inflation.

Thanks for asking!

Greg thinks a given amount of money can do more good in 2024 than at the end of 2027:

In addition, Greg does not expect to lose much money, since 10 k$ plus a 50 % chance of losing 20 k$ equals 0[1].

In reality, Greg's median time of human extinction is a little after January 2028, so he expects to lose a little bit of money.

Yes. Also another consideration is that I expect my high risk investing strategy to return >200% over the time in question, in worlds where we survive (all it would take is 1/10 of my start-up investments to blow up, for example, or crypto going up another >2x).

I’m happy to join that bet.

Hi Remmelt,

Joining the bet on which side?

Ah, I wasn't clear. To bet that AI will not kill us all by the end of 2027.

I don't think that makes sense, given the world-complexity that "AI" would need to learn, evolve, and be tinkered with to be able to navigate. I've had some conversations with Greg about this.